We say xerox when we mean any photocopy, whether or not it was made on a machine from the Xerox corporation. We describe searching for information on the Internet with just one word: google. We photoshop pictures instead of editing them on a computer. And COVID-19 made zoom a synonym for a videoconference.

Kafka can be added to the list of brand names that became generic terms for an entire type of technology. Much like Google in web search and Photoshop in image editing, it has become the gold standard in data streaming, preferred by 70 percent of Fortune 500 companies. In this article, we'll explain why businesses choose Kafka and what problems they face when using it.

What is Kafka?

Apache Kafka is an open-source, distributed streaming platform for messaging, storing, processing, and integrating large data volumes in real time. It offers high throughput, low latency, and scalability that meets Big Data requirements.

The technology was written in Java and Scala at LinkedIn to solve the internal problem of managing continuous data flows. After trying every option available on the market, from messaging systems to ETL tools, the in-house data engineers decided to design a brand-new solution for metrics monitoring and user activity tracking that could handle billions of messages a day.


How Apache Kafka streams relate to Franz Kafka’s books

What does the high-performance data project have to do with Franz Kafka's literary heritage? Practically nothing. As Jay Kreps, the original author of the streaming solution, put it, "I thought that since Kafka was a system optimized for writing, using a writer's name would make sense. I had taken a lot of lit classes in college and liked Franz Kafka. Plus the name sounded cool for an open-source project."

Following this logic, any other writer with a short and memorable name — say, Gogol, Orwell, or Tolkien — could have become a symbol of endless data streams. If only Jay had different tastes.

What Kafka is used for

No matter who reads what, the technology found its way to virtually every industry — from healthcare to travel to retail. Among companies benefitting from Kafka’s capabilities are Walmart, Netflix, Amadeus, Airbnb, Tesla, Cisco, Twitter, Etsy, Oracle, and Spotify — to name just a few heavyweights.

Banks, car manufacturers, marketplaces, and others are building their processes around Kafka to

- track user activity on websites, which was Kafka's original use case at LinkedIn;

- send notifications to end users or enable asynchronous messaging between microservices;

- collect system metrics for monitoring, alerting, and analysis;

- aggregate logs, such as records of database changes, to track what's happening in the system in real time, replicate data between nodes, and recover lost or damaged data; and

- process data in real time and run streaming analytics.

In other words, Kafka can serve as a messaging system, commit log, data integration tool, and stream processing platform. The number of possible applications keeps growing with the rise of IoT, Big Data analytics, streaming media, smart manufacturing, predictive maintenance, and other data-intensive technologies.

But to understand why Kafka is omnipresent, we have to look at how it works, that is, get familiar with its concepts and architecture.

Kafka architecture

Let's start with the asynchronous communication pattern Kafka revolves around: publish/subscribe, or simply Pub/Sub. This scenario involves three main actors: publishers, subscribers, and a message (or event) broker.

A publisher (say, a telematics or Internet of Medical Things system) produces data units, also called events or messages, and sends them not directly to consumers but to a middleware platform: the broker.

A subscriber is a receiving program such as an end-user app or business intelligence tool. It connects to the broker to learn about and fetch the updates it is interested in.

So publishers and subscribers know nothing about each other. All data goes through the middleman (in our case, Kafka), which manages messages and ensures their security. Though Kafka is not the only option available on the market, it definitely stands out from other brokers and merits special attention.

Read our article on event-driven architecture and Pub/Sub to learn more about this powerful communication paradigm. Or stay here to make sense of Kafka's concepts and structure.

Kafka cluster and brokers

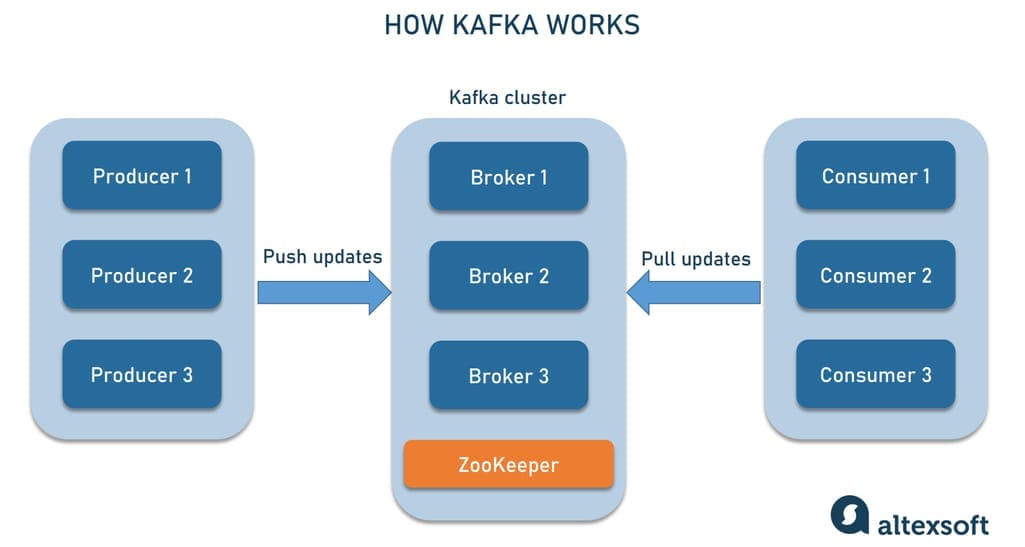

Kafka is set up and run as a cluster: a group of servers, or brokers, that manage communication between two types of clients, producers and consumers (publishers and subscribers in general Pub/Sub terms).

Depending on the hardware, even a single broker is enough to form a cluster handling tens or even hundreds of thousands of events per second. But for high availability and data loss prevention, it's recommended that you have at least three brokers.

Kafka cluster architecture

One broker in a cluster is automatically elected as the controller. It takes charge of administrative tasks like failure monitoring. To coordinate brokers within a cluster, Kafka has traditionally relied on a separate service, Apache ZooKeeper.

Kafka topic and partition

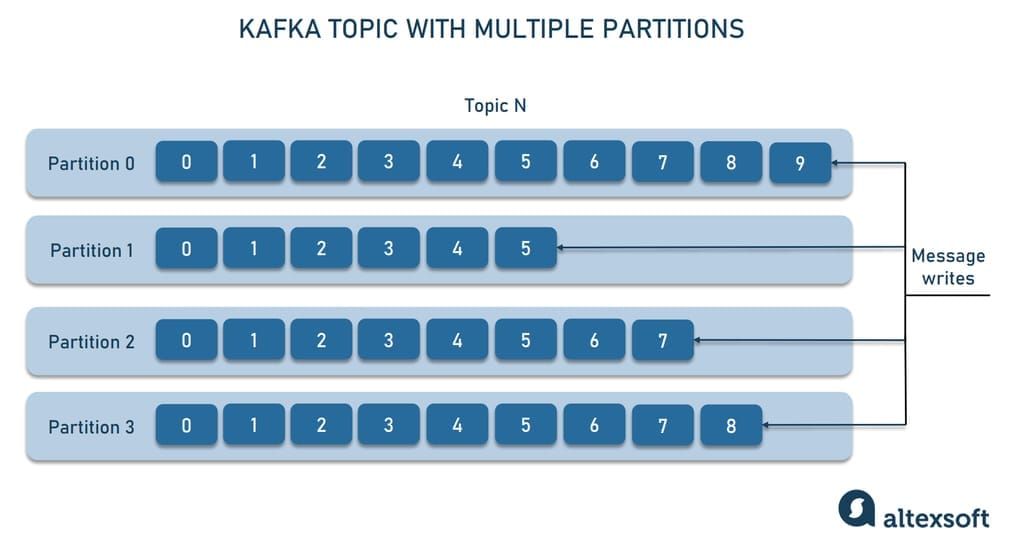

Kafka groups related messages into topics, which you can compare to folders in a file system. A topic, in turn, is divided into partitions, each hosting an ordered sequence of messages. New records can only be appended to the end of a partition; existing records can't be rewritten or replaced.

Each topic is divided into partitions with immutable message sequences

Each message in a partition has a unique sequential ID called an offset. Offsets serve as a kind of cursor, letting consumers keep track of what they have already read.
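To make the topic, partition, and offset vocabulary concrete, here is a minimal toy model in Python. It is an illustrative sketch, not Kafka's storage engine: the `Partition` and `Topic` classes and the topic name `page-views` are hypothetical. It shows the two properties described above: records can only be appended, and each partition numbers its records with its own offsets.

```python
from typing import List, Tuple


class Partition:
    """Toy model of a Kafka partition: records can be appended and read, never edited."""

    def __init__(self) -> None:
        self._records: List[str] = []  # no update/delete method exists on purpose

    def append(self, value: str) -> int:
        """Add a record to the end of the partition and return its offset."""
        self._records.append(value)
        return len(self._records) - 1  # offsets start at 0 and grow by one per record

    def read(self, offset: int, max_records: int = 10) -> List[Tuple[int, str]]:
        """Return up to max_records (offset, value) pairs, starting at `offset`."""
        end = min(offset + max_records, len(self._records))
        return [(i, self._records[i]) for i in range(offset, end)]


class Topic:
    """A named group of partitions; each partition keeps its own offsets."""

    def __init__(self, name: str, num_partitions: int) -> None:
        self.name = name
        self.partitions = [Partition() for _ in range(num_partitions)]


topic = Topic("page-views", num_partitions=2)
print(topic.partitions[0].append("user-1 viewed /home"))     # 0
print(topic.partitions[0].append("user-1 viewed /pricing"))  # 1
print(topic.partitions[1].append("user-2 viewed /home"))     # 0: offsets are per partition
print(topic.partitions[0].read(1))  # [(1, 'user-1 viewed /pricing')]
```

Because offsets are only unique within a partition, a consumer's position in a topic is really a set of offsets, one per partition it reads.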

Pull-based consumption

Unlike many other message brokers that immediately push updates to all subscribers, Kafka sticks to a pull-based consumption approach. To receive messages, a consumer has to initiate the communication by sending a request. After pulling updates and processing them, the consumer asks for the next batch.
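The pull loop can be sketched in a few lines of Python. This is a toy simulation under stated assumptions: `partition_log` stands in for one partition on the broker, and `fetch` and `consume_all` are hypothetical helpers. In the real Java client, the equivalent step is a loop around `KafkaConsumer.poll(...)`. The point is who drives the exchange: the broker only answers requests, and the consumer decides when to ask and how much to take.

```python
from typing import List

# Hypothetical in-memory stand-in for one partition on the broker.
partition_log: List[str] = [f"event-{i}" for i in range(7)]


def fetch(offset: int, max_records: int) -> List[str]:
    """Broker side: answer a request with records starting at `offset` (never pushes)."""
    return partition_log[offset:offset + max_records]


def consume_all(start_offset: int = 0, batch_size: int = 3) -> List[List[str]]:
    """Consumer side: pull a batch, process it, then ask for the next one."""
    offset = start_offset
    batches: List[List[str]] = []
    while True:
        batch = fetch(offset, batch_size)  # the consumer initiates every request
        if not batch:                      # nothing new; a real consumer would poll again later
            break
        batches.append(batch)              # "process" the batch
        offset += len(batch)               # move the cursor past what was read
    return batches


print(consume_all())
# [['event-0', 'event-1', 'event-2'], ['event-3', 'event-4', 'event-5'], ['event-6']]
```

Since the consumer controls `batch_size` and the pace of requests, a slow consumer simply falls behind instead of being flooded.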

Kafka APIs

Kafka delivers its functionality via five main APIs:

- the Producer API for publishing data feeds to Kafka;

- the Consumer API for subscribing to topics and reading updates as they come. It supports batch processing;

- the Streams API for real-time processing and analytics. It can both read data and write it to Kafka;

- the Connect API for direct data streaming between Kafka and external data systems; and

- the Admin API for monitoring and managing topics, brokers, and other Kafka components.

By the way, you can watch our video to understand how APIs work in general.

API principles explained

With these basic concepts in mind, we can proceed to the explanation of Kafka’s strengths and weaknesses.

Kafka advantages

As we said above, Kafka is not the only Pub/Sub platform on the market. Still, it's the number one choice for data-driven companies, and here are some of the reasons why.

Scalability

Scalability is one of Kafka's key selling points. Its very architecture is built around the idea of painless expansion the moment your business needs it. A single cluster can span multiple data centers and cloud facilities, and the partitions of a single topic can be spread across different brokers. This allows for easy horizontal scaling: just add new servers or data centers to your existing infrastructure to handle a greater amount of data.

You can start with as little as one broker for a proof of concept, then scale to three to five brokers for development, and go live with tens or even hundreds of servers. Importantly, expansion doesn't require downtime: the cluster remains online and continues serving clients.

Besides growing a single cluster, you can scale out by deploying several clusters. The benefits of this approach become obvious as the business grows. It enables enterprises to segregate workloads by data type, isolate processes to meet specific security requirements, or tailor configurations to particular use cases. For example, LinkedIn employs over 100 clusters with more than 4,000 brokers. Together, they handle 7 trillion messages a day.

The way Kafka treats clients also contributes to the overall system’s scalability.

Multiple producers and consumers

Kafka is designed to handle numerous clients on both sides. Multiple producers can write streams of messages to the same topic or across several topics. This enables systems using Kafka to aggregate data from many sources and make it consistent.
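When several producers write to one topic, each record still has to land in a specific partition. For records with a key, Kafka's default partitioner hashes the key (using murmur2), so records with the same key go to the same partition and keep their relative order. The sketch below swaps in `zlib.crc32` as a simpler stable hash, and the producers, keys, and helpers (`choose_partition`, `publish`) are hypothetical; only the idea of key-based placement carries over.

```python
import zlib
from collections import defaultdict
from typing import Dict, List, Tuple

NUM_PARTITIONS = 3


def choose_partition(key: str, num_partitions: int = NUM_PARTITIONS) -> int:
    """Map a record key to a partition (crc32 stands in for Kafka's murmur2 hash)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def publish(events: List[Tuple[str, str]]) -> Dict[int, List[Tuple[str, str]]]:
    """Append each (key, value) event to the partition chosen by its key."""
    partitions: Dict[int, List[Tuple[str, str]]] = defaultdict(list)
    for key, value in events:
        partitions[choose_partition(key)].append((key, value))
    return dict(partitions)


# Two hypothetical producers (a web app and a mobile app) writing to the same topic.
web_events = [("user-1", "login"), ("user-2", "search")]
mobile_events = [("user-1", "purchase"), ("user-3", "logout")]
layout = publish(web_events + mobile_events)

# All of user-1's events share one partition, in the order they were sent.
p = choose_partition("user-1")
print([value for key, value in layout[p] if key == "user-1"])  # ['login', 'purchase']
```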

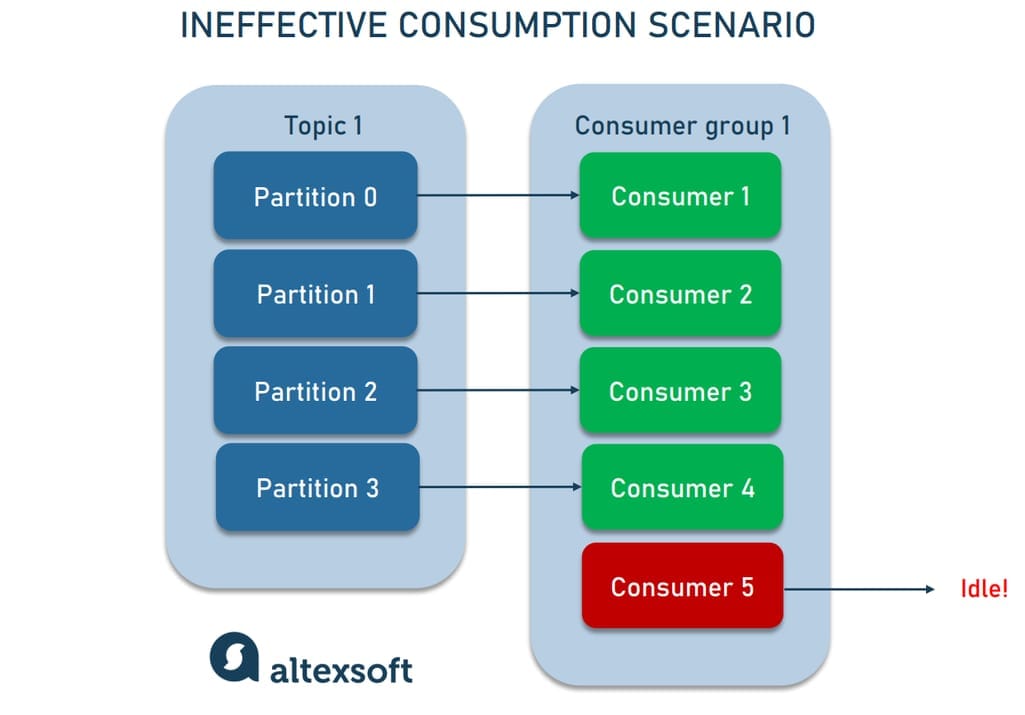

At the same time, the platform allows multiple apps to subscribe to the same topic, and each app receives the full stream. Within an app, consumers can form a consumer group and split the topic's partitions among themselves: each partition is read by only one member of the group. Consumers in a group thus pull messages from different partitions in parallel, sharing the load and increasing throughput.

Note that if a group has more consumers than the topic has partitions, some consumers will remain idle. The rule of thumb here is to design topics with more partitions than you currently need; this way, you'll be able to add more consumers when data volumes grow.

More consumers than partitions lead to idling
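The idling effect follows directly from the rule that each partition goes to exactly one consumer in a group. The toy round-robin assignment below illustrates it; Kafka ships several real assignment strategies (range, round-robin, sticky, and others), and this `assign` function is a simplified, hypothetical stand-in for them. A second consumer group would run its own independent assignment over the same partitions.

```python
from typing import Dict, List


def assign(partitions: List[int], consumers: List[str]) -> Dict[str, List[int]]:
    """Round-robin the partitions of one topic over the consumers of ONE group.

    Each partition is given to exactly one consumer; surplus consumers get nothing.
    """
    assignment: Dict[str, List[int]] = {c: [] for c in consumers}
    for i, partition in enumerate(partitions):
        assignment[consumers[i % len(consumers)]].append(partition)
    return assignment


print(assign([0, 1, 2, 3], ["c1", "c2"]))
# {'c1': [0, 2], 'c2': [1, 3]}
print(assign([0, 1, 2], ["c1", "c2", "c3", "c4"]))
# {'c1': [0], 'c2': [1], 'c3': [2], 'c4': []}  (c4 idles)
```

Creating a topic with, say, 12 partitions up front leaves room to grow a group to 12 active consumers later.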

Support for different consumption needs

Kafka not only lets you scale consumption easily but also caters to the needs and capabilities of different clients.

The pull-based approach enables apps to read data at their own pace and choose how to consume it: in real time or in batches. Kafka also lets you set data retention rules for a particular topic. These rules dictate how long messages are stored on disk, depending on consumer requirements.

Durable retention guarantees that a data stream won't disappear if an app subscribed to it lags or goes offline. The consumer can resume processing later, from the point where it left off. The default retention period is 168 hours (one week), but you can configure it.
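Here is a toy model of time-based retention combined with a consumer resuming from its last committed offset. It simplifies a lot: real Kafka deletes whole log segments rather than single records, and retention is set with real configs such as the topic-level `retention.ms` or the broker-level `log.retention.hours`. The timestamps, records, and helper names (`apply_retention`, `resume`) are hypothetical. Note that offsets are never renumbered when old data expires.

```python
from typing import List, Tuple

RETENTION_HOURS = 168  # Kafka's default retention period (one week)

# Each record: (offset, timestamp_in_hours, value). Offsets never change.
Log = List[Tuple[int, float, str]]


def apply_retention(log: Log, now_hours: float, retention_hours: float = RETENTION_HOURS) -> Log:
    """Drop records older than the retention window (real Kafka drops whole segments)."""
    return [r for r in log if now_hours - r[1] <= retention_hours]


def resume(log: Log, committed_offset: int) -> List[str]:
    """A consumer that went offline continues from the first offset it hasn't processed."""
    return [value for offset, _, value in log if offset >= committed_offset]


log = [(0, 0.0, "a"), (1, 100.0, "b"), (2, 200.0, "c"), (3, 210.0, "d")]
log = apply_retention(log, now_hours=220.0)
print([r[0] for r in log])              # [1, 2, 3]: offset 0 is 220 hours old and expired
print(resume(log, committed_offset=2))  # ['c', 'd']
```

The consumer only loses data if it stays offline longer than the retention window, which is why retention is usually sized to the slowest expected consumer.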

High performance

All of the above results in high performance under heavy load. You can scale producers, consumers, and brokers to transmit large volumes of messages with minimal delay (in real time or near real time).

Performance comes down to two main metrics: throughput and latency. The former measures how much data can be sent per unit of time (the higher, the better). The latter measures how fast the data reaches its destination (the lower, the better).

Kafka was designed with high throughput rather than with low latency in mind. According to the official specifications, “A single Kafka broker can handle hundreds of megabytes of reads and writes per second from thousands of clients.” Yet, you can configure cluster parameters to optimize performance according to your use case.

Give preference to higher throughput for apps that tolerate delays. Set latency as low as possible for real-time processing. Or strike a balance between the two metrics when you aim at both high throughput and low latency.
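In practice, much of this trade-off is controlled on the producer side. The keys below (`bootstrap.servers`, `linger.ms`, `batch.size`, `compression.type`) are real Kafka producer settings, but the values, the broker address, and the `producer_config` helper are assumptions for illustration, not tuning recommendations. Waiting a little longer and sending bigger, compressed batches raises throughput; sending immediately lowers latency.

```python
from typing import Dict

# Shared setting; the broker address is a hypothetical local cluster.
BASE: Dict[str, str] = {"bootstrap.servers": "localhost:9092"}

HIGH_THROUGHPUT: Dict[str, str] = {
    "linger.ms": "50",          # wait up to 50 ms so batches can fill up
    "batch.size": "262144",     # allow larger batches (bytes per partition)
    "compression.type": "lz4",  # fewer bytes on the wire per message
}

LOW_LATENCY: Dict[str, str] = {
    "linger.ms": "0",            # send as soon as a record is ready
    "batch.size": "16384",       # smaller batches
    "compression.type": "none",  # skip compression CPU time
}


def producer_config(profile: Dict[str, str]) -> Dict[str, str]:
    """Merge the shared settings with a throughput- or latency-oriented profile."""
    return {**BASE, **profile}


print(producer_config(HIGH_THROUGHPUT))
```

Python clients such as confluent-kafka accept a dictionary of properties in this style; a balanced setup would land somewhere between the two profiles, validated by benchmarking.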

High availability and fault tolerance

Kafka has proven itself to be an extremely reliable system. It achieves high availability and fault tolerance through replication at the partition level. Put simply, Kafka makes multiple copies of data and hosts them on different brokers. If one broker fails or goes offline, the data isn't lost and remains available to consumers.

That's why a one-broker cluster isn't the best idea: it ensures high performance but lacks reliability. The usual replication factor in production is three, but you can fine-tune it to your needs.
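The sketch below shows why replicas on different brokers survive a single failure while a one-broker cluster does not. It is a simplified model: replica placement is plain round-robin, the first broker in each list plays the leader, and a partition counts as available if any replica lives on a surviving broker. The helper names are hypothetical. Real guarantees also depend on settings such as `acks` and `min.insync.replicas` and on which replicas are in sync.

```python
from typing import Dict, List, Set


def place_replicas(num_partitions: int, brokers: List[int], replication_factor: int) -> Dict[int, List[int]]:
    """Spread each partition's replicas over distinct brokers (simplified round-robin).

    The first broker in each list plays the role of the partition leader.
    """
    if replication_factor > len(brokers):
        raise ValueError("replication factor cannot exceed the number of brokers")
    return {
        p: [brokers[(p + r) % len(brokers)] for r in range(replication_factor)]
        for p in range(num_partitions)
    }


def available_partitions(placement: Dict[int, List[int]], failed: Set[int]) -> List[int]:
    """A partition survives while at least one of its replicas is on a live broker."""
    return [p for p, replicas in placement.items() if any(b not in failed for b in replicas)]


placement = place_replicas(num_partitions=3, brokers=[1, 2, 3], replication_factor=3)
print(placement)                                    # {0: [1, 2, 3], 1: [2, 3, 1], 2: [3, 1, 2]}
print(available_partitions(placement, failed={2}))  # [0, 1, 2]: nothing lost

single = place_replicas(num_partitions=3, brokers=[1], replication_factor=1)
print(available_partitions(single, failed={1}))     # []: one broker down means all data is gone
```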

Kafka Connect for simple integration

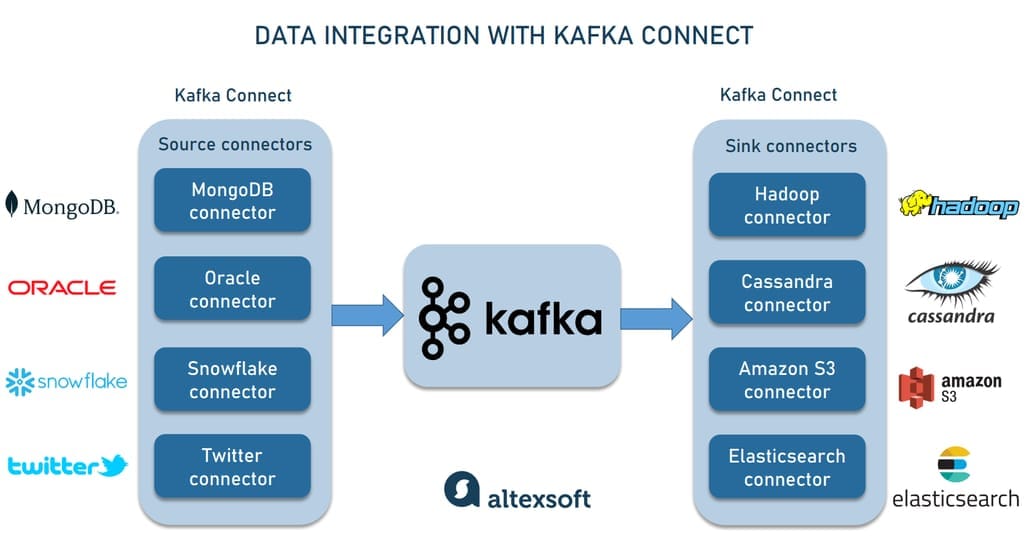

Kafka Connect is a free component of the Kafka ecosystem that enables simple streaming integration between Kafka and external data systems.

Kafka Connect simplifies data pushing and pulling processes

The tool standardizes work with connectors: programs that import data into Kafka from external systems (source connectors) or export it from Kafka to them (sink connectors). There are hundreds of ready-to-use connector plugins maintained by the community or by service providers. You can find off-the-shelf connectors for

- popular SQL and NoSQL database management systems including Oracle, SQL Server, Postgres, MySQL, MongoDB, Cassandra, and more;

- cloud storage services — Amazon S3, Azure Blob, and Google Cloud Storage;

- message brokers such as ActiveMQ, IBM MQ, and RabbitMQ;

- Big Data processing systems like Hadoop; and

- cloud data warehouses — for example, Snowflake, Google BigQuery, and Amazon Redshift.

Kafka Connect simplifies the implementation and management of those connectors as well as the development of new, high-performance, and reliable plugins.
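With Kafka Connect, setting up an integration is mostly configuration rather than code. The payload below follows the shape the Connect REST API expects for creating a connector (a `name` plus a `config` map), using the file source connector from Kafka's quickstart as the example. The connector name, file path, and topic are hypothetical, and the script only builds the JSON without sending it.

```python
import json

# Hypothetical connector name, file path, and topic; the property keys follow
# the FileStreamSource example from Kafka's quickstart.
payload = {
    "name": "local-file-source",
    "config": {
        "connector.class": "org.apache.kafka.connect.file.FileStreamSourceConnector",
        "tasks.max": "1",
        "file": "/tmp/test.txt",
        "topic": "connect-test",
    },
}

body = json.dumps(payload, indent=2)
# Submitting it would be an HTTP POST of `body` to the Connect worker's REST API,
# for example http://localhost:8083/connectors (8083 is the default REST port).
print(body)
```

Swapping in a different plugin mostly means changing `connector.class` and that connector's own properties; Connect handles scheduling, offsets, and restarts.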

Flexibility

Kafka strives to cover as many use cases as possible, and it has succeeded in this not least due to its extreme flexibility.

The platform allows customers to configure brokers and topics according to their unique requirements. Developers, infrastructure engineers, or other experts can decide how much reliability or throughput they need considering other important factors — like latency or hardware costs.

Multi-language environment

Kafka used to work only with Java, and Java is still the only client language shipped with the main Kafka project. However, a range of open-source client libraries lets you build Kafka data pipelines with practically any popular programming language or framework, including C++, Python, Go, .NET, Ruby, Node.js, Perl, PHP, and Swift.

Security

Kafka offers different protection mechanisms, such as encryption of data in transit, authentication, and authorization. So, if your topic contains sensitive information, an unauthorized consumer can't pull it. Another security measure is an audit log to track access. You can also monitor where messages come from and who modifies what, though this option requires some extra coding.
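To illustrate how authorization keeps an unauthorized consumer away from a sensitive topic, here is a toy access-control check. It mirrors two rules of Kafka's ACL model: a matching Deny beats a matching Allow, and with an authorizer enabled (and default settings), a request that matches no ACL is refused. Everything else is a hypothetical simplification: the `Acl` class, the principals, and the topic are made up, and real ACLs also support wildcards, prefixes, hosts, and more resource types. In a real cluster, ACLs are managed with tools such as `kafka-acls.sh` or the Admin API.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Acl:
    principal: str   # e.g. "User:billing-service"
    operation: str   # e.g. "Read" or "Write"
    topic: str
    permission: str  # "Allow" or "Deny"


def is_authorized(acls: List[Acl], principal: str, operation: str, topic: str) -> bool:
    """Toy check: a matching Deny wins; otherwise a matching Allow is required."""
    matching = [a for a in acls
                if a.principal == principal and a.operation == operation and a.topic == topic]
    if any(a.permission == "Deny" for a in matching):
        return False
    return any(a.permission == "Allow" for a in matching)  # no matching ACL means no access


acls = [
    Acl("User:billing-service", "Read", "payments", "Allow"),
    Acl("User:marketing-app", "Read", "payments", "Deny"),
]
print(is_authorized(acls, "User:billing-service", "Read", "payments"))  # True
print(is_authorized(acls, "User:marketing-app", "Read", "payments"))    # False
print(is_authorized(acls, "User:unknown", "Read", "payments"))          # False
```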

Large user community

Similar to other popular open-source technologies, Kafka has a vast community of users and contributors. It’s one of the five most active Apache Software Foundation projects, with hundreds of dedicated conferences globally. Learn about scheduled online and live meetups on the Kafka Events page.

There are over 29,000 questions tagged apache-kafka on Stack Overflow, and more than 79,000 Kafka-related repositories and 2,000 discussions on GitHub. You can join a Kafka community on Reddit or a forum of Kafka experts on the Confluent website. In other words, you won't be left out in the cold with your Kafka problems, should you have any.

Multiple Kafka vendors

Though Kafka remains open-source and free, many vendors have made it part of their commercial offerings. They provide additional features, user-friendly interfaces, ready-to-use integrations, technical support, and other extras that make Kafka implementation and management much easier. Though these services cost money, they save you time and spare your nerves. Either way, you have a choice, which is always better than none.

Among the most reliable Kafka vendors are

- Confluent, founded by Kafka's original creators. The company offers a fully managed Kafka service, continuously contributes to the Kafka ecosystem, maintains the platform, and provides tech support and training. It also nurtures the community of Kafka developers and offers resources to learn Kafka.

- Cloudera, focusing on Big Data analytics. Its hybrid data platform supports numerous Big Data frameworks, including Hadoop, Spark, Flink, Flume, Kafka, and many others. Engineers from Cloudera actively participate in Kafka communities, make improvements to the platform, and work on its security.

- Red Hat, acquired by IBM. Kafka is part of its AMQ product, a messaging platform for real-time integration and building IoT systems.

Keep in mind that those vendors mitigate most challenges businesses commonly face when implementing the “raw” Kafka from the official website.

Rich documentation, guides, and learning resources

The Apache Software Foundation maintains detailed documentation explaining Kafka concepts, use cases, how it works, and how to get started with the Kafka environment. It also provides books, academic papers, and educational videos to explore the technology in more detail.

You can also learn Kafka with Confluent, which offers white papers and guides on different aspects of Kafka along with video courses and tutorials for developers.

Kafka disadvantages

It's pretty hard to criticize Kafka since, for now, it serves as the gold standard in the world of data streaming. But we've made an effort and found a few pitfalls to be aware of.

Steep learning curve and technological complexity

The abundance of documentation, guides, and tutorials doesn’t mean that you’ll easily resolve all technological challenges. Kafka is complex in terms of cluster setup and configuration, maintenance, and data pipeline design. It takes time to master the platform even for people with strong tech backgrounds.

Depending on the type of deployment (cloud or on-premises), cluster size, and the number of integrations, deployment may take anywhere from days to weeks or even months. Many companies end up asking Kafka vendors (mainly Confluent) or other third-party service providers for help.

Need for extra tech experts

You can't just install Kafka and let it run on its own. A couple of in-house experts are needed to continuously monitor what's going on and tune the system. If you don't have the right tech talent in your company, you'll need to hire specialists, which adds to the project cost.

No built-in management and monitoring UI

Apache Kafka doesn't come with a UI for monitoring, though monitoring is critical for running jobs correctly. Tech teams must spend significant time on this part of daily operations, making sure that data streams don't stop and that nothing is lost. There are third-party management and monitoring tools for Kafka, but many developers wish such tools were built into the platform.

ZooKeeper issue

From the very beginning, Kafka relied on an external tool, ZooKeeper, to coordinate brokers inside the cluster. ZooKeeper stores metadata about partitions and brokers and also determines which broker acts as the controller.

The dependency on an external solution irritates many developers, as it adds difficulty to the already complex deployment and configuration of Kafka. To make things worse, ZooKeeper creates a performance bottleneck. For example, it doesn't allow a Kafka cluster to support more than 200,000 partitions, and it significantly slows the system down when a broker joins or leaves the cluster.

The good news is that the dream of Kafka without ZooKeeper has come true. KRaft, Kafka's built-in consensus mechanism, was introduced to replace the outsider, improve scalability, and simplify installation and maintenance. It was initially available for development only, was declared production-ready in Kafka 3.3, and became the only option in Kafka 4.0, which removed ZooKeeper support entirely.
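KRaft replaces ZooKeeper with a small quorum of controller nodes that agree on cluster metadata using the Raft consensus protocol. Like any majority-based quorum, it keeps working only while more than half of its voters are alive. The arithmetic below (with hypothetical helper names) shows why odd-sized controller quorums such as three or five are the usual choice: adding a fourth voter raises the majority without tolerating any extra failures.

```python
def quorum_size(voters: int) -> int:
    """Smallest majority of `voters` controllers."""
    return voters // 2 + 1


def tolerated_failures(voters: int) -> int:
    """How many controllers can fail while a majority is still alive."""
    return voters - quorum_size(voters)


for n in (1, 3, 4, 5):
    print(f"{n} controller(s): majority {quorum_size(n)}, tolerates {tolerated_failures(n)} failure(s)")
# 1 controller(s): majority 1, tolerates 0 failure(s)
# 3 controller(s): majority 2, tolerates 1 failure(s)
# 4 controller(s): majority 3, tolerates 1 failure(s)
# 5 controller(s): majority 3, tolerates 2 failure(s)
```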

Kafka and its alternatives

Because of its broad range of capabilities, Kafka can't be compared with other technologies feature by feature. Still, it has alternatives worth considering for particular use cases.

Kafka vs Hadoop

In some ways, Kafka resembles Hadoop: both store data and process it at a large scale. Hadoop works with batches while Kafka deals with streams, but the similarities are still obvious.

However, these systems serve different use cases. Hadoop fits heavy analytics applications that are not time-critical and generate insights for long-term planning and strategic decisions. Kafka is more about building services that power daily business operations.

Kafka vs ETL

It's quite common to see Kafka as a faster ETL. Yet there are significant differences between them. Extract, Transform, Load (ETL) technologies appeared when all software products interacted directly with databases, and moving information from database to database has always been the key activity for ETL tools. Today, more and more apps deal with streams of events rather than with data stores, which traditional ETL tools can't cope with.

Many companies resolve this problem by introducing two technologies: an ETL tool and a kind of data bus that allows business applications to share updates and workflows. In other words, they maintain two separate data projects.

Kafka, on the contrary, enables you to unify all data flows across different use cases. At the same time, ETL developers can use the data streaming platform for their workloads, especially when real-time transformations are required.

This way, an ETL process becomes Kafka’s producer or consumer rather than a rival.
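A minimal sketch of that idea: an ETL step that consumes from one topic, transforms records, and produces to another. The in-memory `topics` dictionary, the topic names, the record fields, and the `run_etl_step` helper are all hypothetical stand-ins; in a real deployment this role would typically be played by a Kafka Streams application, a Connect pipeline, or a consumer and producer pair.

```python
from typing import Dict, List

# Hypothetical in-memory topics standing in for real Kafka topics.
topics: Dict[str, List[dict]] = {
    "orders-raw": [
        {"id": 1, "amount_cents": 1999, "country": "us"},
        {"id": 2, "amount_cents": 0, "country": "de"},
        {"id": 3, "amount_cents": 4500, "country": "fr"},
    ],
    "orders-clean": [],
}


def transform(order: dict) -> dict:
    """The 'T' of ETL: normalize fields for downstream consumers."""
    return {"id": order["id"], "amount": order["amount_cents"] / 100, "country": order["country"].upper()}


def run_etl_step(source: str, sink: str, start_offset: int = 0) -> int:
    """Consume from `source`, transform, produce to `sink`; return the next offset to read."""
    records = topics[source][start_offset:]        # extract: act as a consumer
    for order in records:
        if order["amount_cents"] > 0:              # filter out empty orders
            topics[sink].append(transform(order))  # load: act as a producer
    return start_offset + len(records)


next_offset = run_etl_step("orders-raw", "orders-clean")
print(next_offset)             # 3
print(topics["orders-clean"])  # [{'id': 1, 'amount': 19.99, 'country': 'US'}, {'id': 3, 'amount': 45.0, 'country': 'FR'}]
```

Because the step returns the next offset, it can be rerun later to pick up only new records, which is the streaming counterpart of an incremental ETL load.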

Kafka vs MQ software

One of the popular use cases for Kafka is messaging. So, it’s often thought of as another message queue (MQ) platform — similar to IBM MQ, ActiveMQ, and RabbitMQ. However, Kafka is faster, more scalable, and capable of handling all data streams in the company. Besides that, Kafka can store data as long as you like and process streams in real time.

Yet this doesn't mean that Kafka is always the better choice. For example, when your daily data volumes are small (say, no more than several thousand messages), it's more reasonable to stay with traditional message queues.

How to get started with Apache Kafka

Although we've mentioned Kafka documentation and tutorials earlier in this article, we decided to collect all the useful links in one place for your convenience.

Apache Kafka official documentation. This is where you can download the latest version of Kafka and get familiar with its main concepts and components, the terminology it uses, and its main APIs.

Apache Kafka Quick Start. The section will help you set up the Kafka environment and begin to work with streams of data.

Ecosystem. Here you can find a list of Kafka tools that don't come pre-packaged with the main product and must be integrated separately.

Books and papers. This is a collection of downloadable books and publications explaining Kafka in general, its specific aspects, and event-driven systems and stream processing.

Best Kafka Summit videos. The Apache Kafka team provides video recordings of the best presentations at Kafka Summits organized by Confluent. The event takes place two to three times a year.

Events. If you want to join meetups and conferences focused on Kafka and its ecosystem, this page will let you know about all events, schedules, and locations.

Learn Apache Kafka. The education hub from Confluent offers a range of video courses covering Kafka basics, internal architecture, security mechanisms, and more specific aspects, such as Kafka Connect, Kafka Streams, or event stream design.

A Confluent podcast about Apache Kafka. Listen to Kris Jenkins, a senior developer advocate for Confluent, talking with his guests from the Kafka community about the latest news, use cases, data streaming patterns, modern IT architectures, and more.

Apache Kafka courses on Udemy. Udemy offers several courses for beginners covering different aspects of working with Kafka — from cluster setup and administration to launching Kafka Connect and real-time stream processing.

This article is part of our "The Good and the Bad" series. If you are interested in other technologies, take a look at our blog posts on

The Good and the Bad of Angular Development

The Good and the Bad of JavaScript Full Stack Development

The Good and the Bad of Node.js Web App Development

The Good and the Bad of React Development

The Good and the Bad of React Native App Development

The Good and the Bad of TypeScript

The Good and the Bad of Swift Programming Language

The Good and the Bad of Selenium Test Automation Tool

The Good and the Bad of Android App Development

The Good and the Bad of .NET Development

The Good and the Bad of Ranorex GUI Test Automation Tool

The Good and the Bad of Flutter App Development

The Good and the Bad of Ionic Development

The Good and the Bad of Katalon Automation Testing Tool

The Good and the Bad of Java Development

The Good and the Bad of Serverless Architecture

The Good and the Bad of Power BI Data Visualization

The Good and the Bad of Hadoop Big Data Framework

The Good and the Bad of Snowflake Data Warehouse

The Good and the Bad of C# Programming

The Good and the Bad of Python Programming