“He seems to have rough skin, muscular limbs, and deep-set beady eyes. I extend my hand to give him a base to walk on, and I swear I feel a tingling in my palm in expectation of his little feet pressing into it. When, a split second later, my brain remembers that this is just an impressively convincing 3-D image displayed in the real space in front of me, all I can do is grin.” So wrote Rachel Metz, a senior editor at MIT Technology Review, in 2015.

She was remarking on an augmented reality (AR) technology that developers from Magic Leap gave the journalist a chance to experience. Specifically, she was looking at a small monster that was neatly superimposed on our boring physical world, projected onto her eyes via a pair of high-tech goggles.

Back then, Magic Leap was an overhyped augmented reality startup to which Wired journalists awarded the adjective “secretive.” Consider this. The team didn’t settle in vibrant yet predictable Silicon Valley. Instead, they opened an office in Florida to avoid tech parties and subsequent information leaks. They managed to raise an unprecedented $1.4 billion in investments from Google and Alibaba without even a prototype. And they showed impressive video teasers of our augmented future.

Rachel Metz was criticized for fueling the hype instead of describing the state of the technology she really saw. Later, when comparing Magic Leap with its less secretive competitor, Microsoft’s HoloLens, she toned down the exuberance: “In that demonstration, 3-D monsters and robots looked amazingly detailed and crisp, fitting in well with the surrounding world, though they were visible only with lenses attached to bulky hardware sitting on a cart.”

Today, Magic Leap faces all sorts of reputational problems that overhyped and secretive startups tend to end up with. The video was fake, made by a team of graphic designers; the technology seems to be way behind HoloLens; and on top of that, the company got involved in a sex discrimination lawsuit. They haven’t yet showcased a prototype either.

On the other hand, the HoloLens team does the right things. They’ve released an SDK with proper documentation, provided the engineering community with a developer kit headset, and given businesses a corporate suite that integrates with the enterprise mobility ecosystem. Microsoft acknowledges the problems and paves the way to the revolution of human-computer interfaces. Another emerging player, ODG (Osterhout Design Group), has been developing augmented reality devices for the military since the 80s and has only recently begun targeting the mass market, which makes the competition even more intriguing. There are many players to consider. Even Apple joined the race this March.

This time we’re looking at the state of technology in augmented reality or mixed reality, whichever way you prefer. What’s done and what’s yet to be done before the last smartphone joins its predecessors in the junk drawer graveyard? It looks inevitable that by that time we’ll put goggles on.

But today, VR and AR should be considered as entirely different branches of engineering that aren’t going to intersect any time soon. The use cases are also different as VR inevitably transitions you into the new environment while AR is always about the combination of physical and virtual reality.

The first thing to note about augmented reality is that the main technological advancements and existing challenges revolve around the optics, particularly, the lenses that make all the magic happen. The way optics are built (and how physics works) defines the set of problems AR engineers struggle to solve:

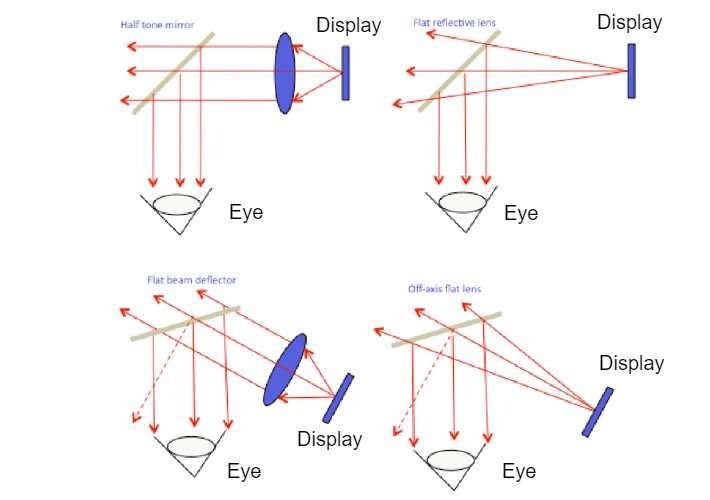

Conventional HMD (head-mounted displays). The combiners of the conventional type are well-established and have been used for decades for military and scientific purposes. The beam of light from the display reaches the surface of a combiner, a semi-transparent mirror, and reflects to the eye. There’s a handful of types within conventional HMDs but the main principle is preserved. Most of the displays are either too bulky or, if they aren’t, provide such a narrow field of view that it can’t be considered a sustainable solution for a consumer-focused AR product.

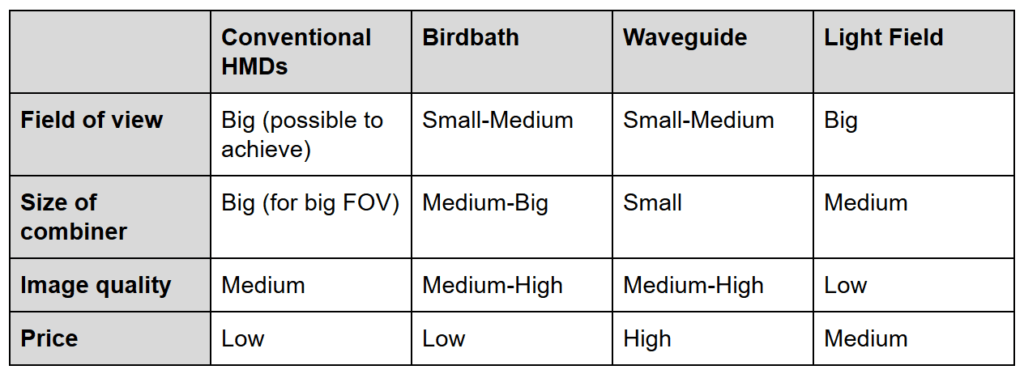

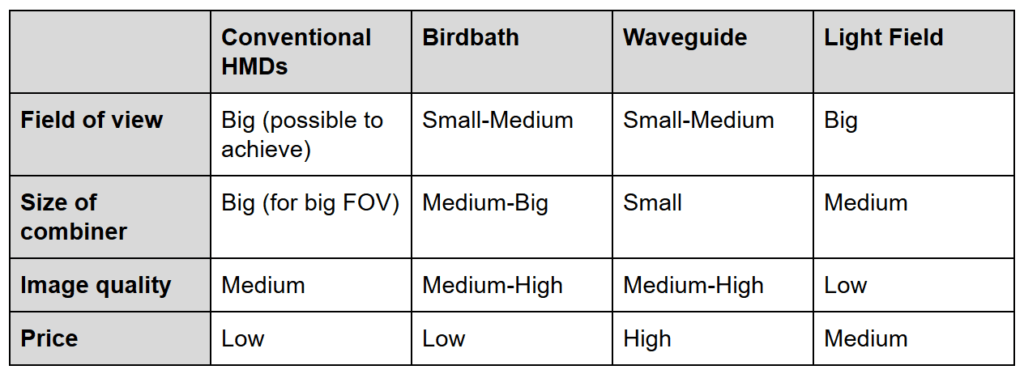

Let’s roughly sum up benefits and trade-offs.

So, here they are – the most common optics currently used in AR devices. So how are the main players using them and what have they achieved so far?

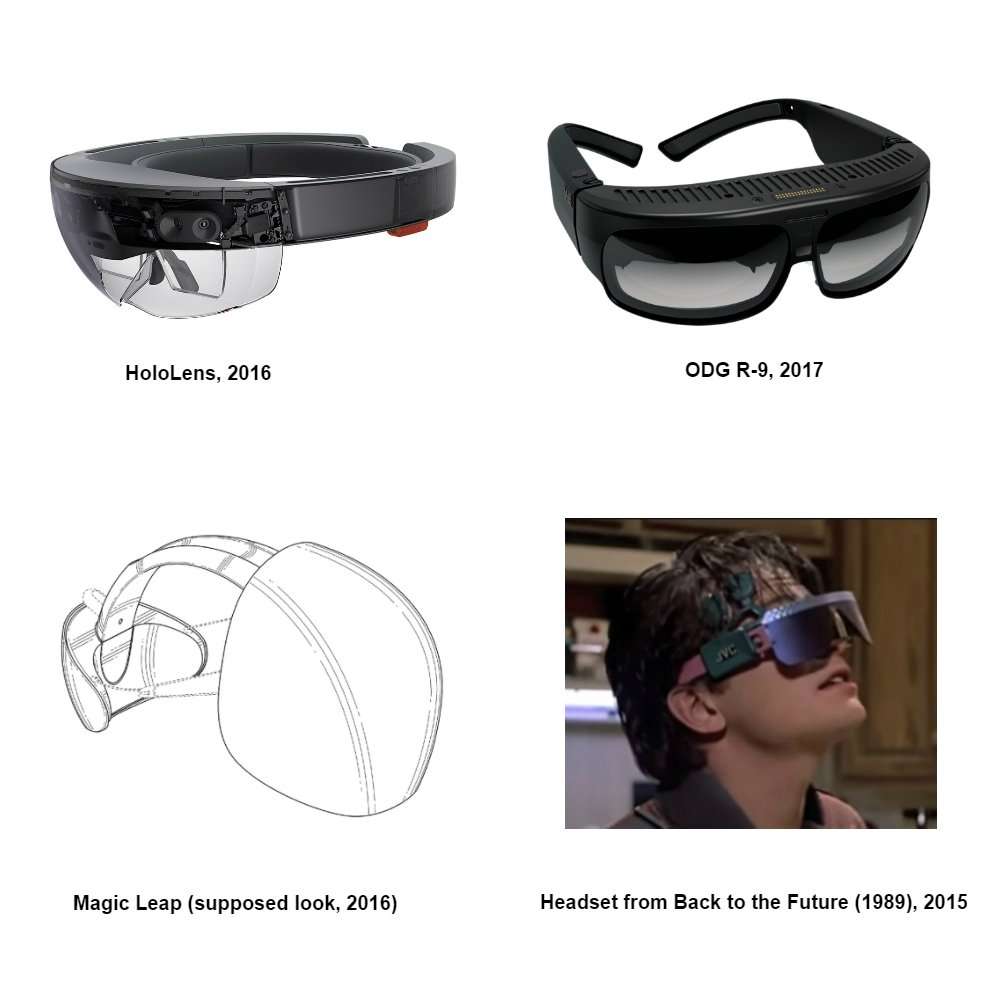

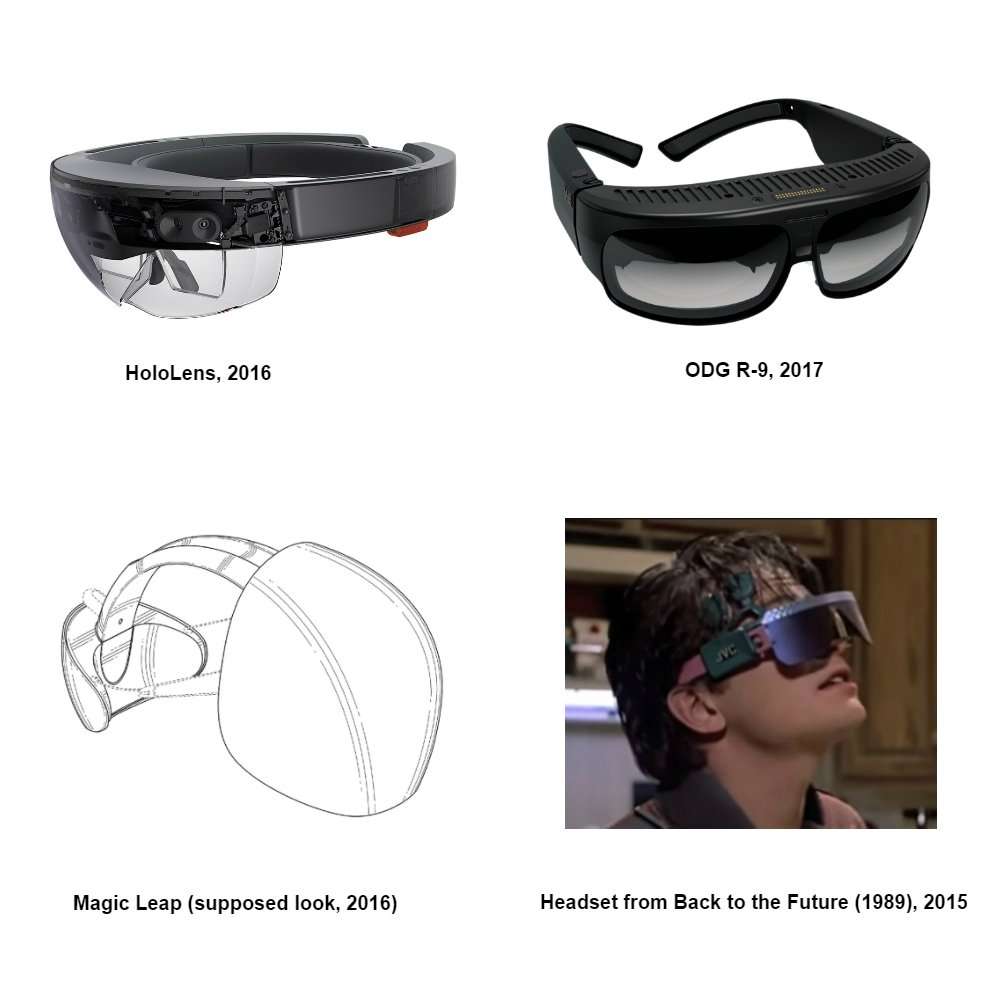

Before they reach mass adoption, headsets should be first accepted by a society, both by the people wearing them and those who aren’t. Modern AR devices look more like bulky retro-futuristic props and less like something that you want to wear when hanging out with friends or buying groceries. If we consider mass adoption, our future AR goggles should look like Snapchat Spectacles.

Perhaps, aesthetics (and a $3K price tag) is the reason why HoloLens today aims mostly at corporate consumers – designers, construction workers, and doctors, rather than mass users. The closest form to that of eyeglasses has been introduced by ODG. But even their headset looks bulky.

Interestingly though, ODG applies a larger birdbath combiner compared to the flat waveguide that HoloLens has. But the form factor is still heavily reliant on other hardware. Engineers need some space to squeeze it all into a headset. The newest version of ODG, for instance, will be powered by the Qualcomm Snapdragon 835 8-core processor, which is currently in the flagship smartphone Samsung Galaxy S8. It also carries 6 gigs of RAM and 128 gigs of internal storage besides 4 cameras, sensors, communicators, speakers, and see-thru displays. These are impressive specs for smartphones, let alone wearables.

Not only must engineers minimize required space, but also figure out the passive cooling system (no fans) that would keep you from burning your head on a daily basis. Another top competitor, Meta 2, solves the hardware problem by making a detour and providing a headset that needs to be tethered to a computer. Are you ready to carry an additional hardware box around?

The form factor problem is still unsolved, but it’s at the point where we can be pretty optimistic considering that Moore's law is still working and we continue to gradually increase computing power of processing units. But it’s very unlikely that we’ll mistake an AR device for sunglasses very soon.

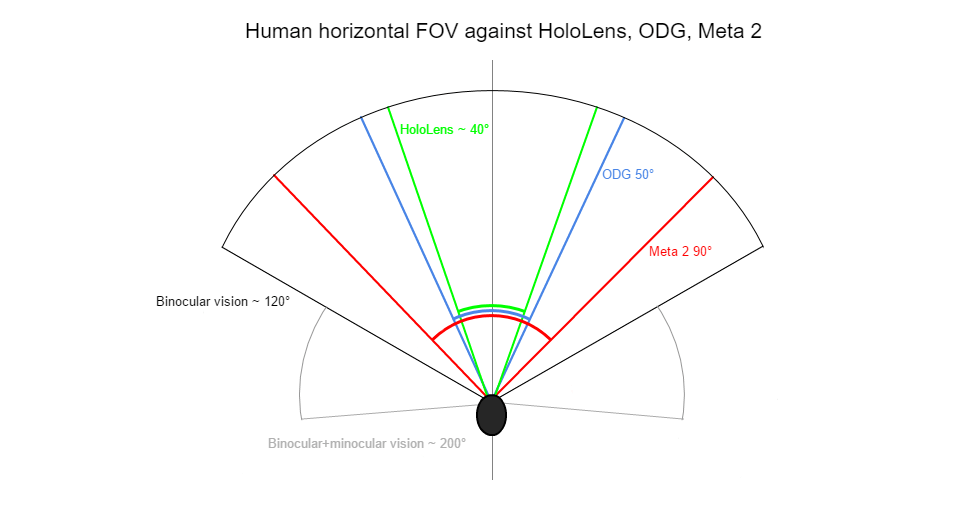

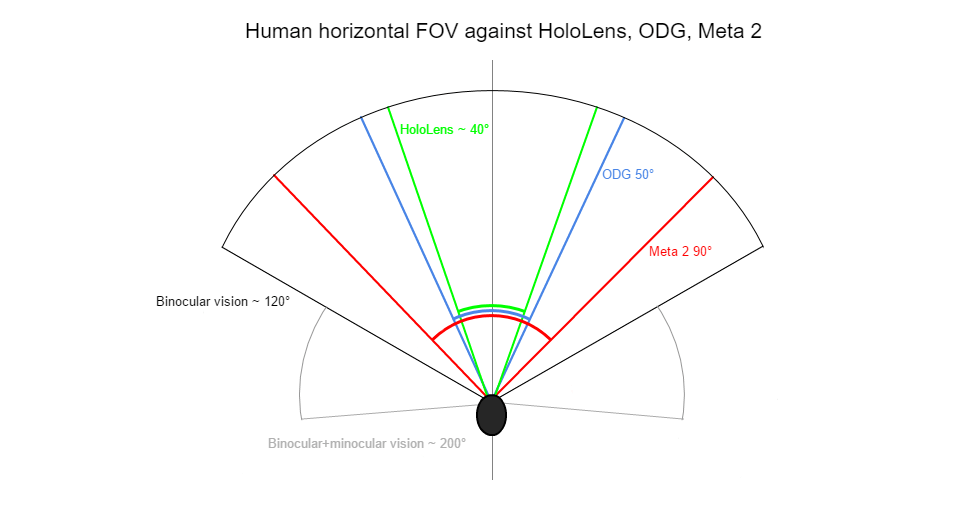

The main complaint about HoloLens is the narrow field of view, just about 40° horizontally and 18° vertically. But Microsoft isn’t leading here. Let’s have a look at horizontal FOV of an average person put against fields of view suggested by HoloLens, ODG, and Meta 2.

Basically, when you wear HoloLens, AR objects are visible in a near-rectangular opening in the middle, just like you’re looking at a desktop monitor. ODG is a bit better and the winner is Meta 2 with 90° FOV, which doesn’t even cover binocular vision. It’s an arguable point which FOV will be enough to get the full immersion. If you’ve ever tried VR headsets Oculus Rift or HTC Vive, their horizontal FOVs are pretty much the same, about 110°, and for many, this is not enough as you still notice borders on peripheries.

Why is it such a problem to make at least a 120° FOV? And, by the way, why are FOVs so different across devices?

The field-of-view problem stems from two factors:

But how did Meta 2 achieve an impressive 90° FOV? Well, they basically sacrificed aesthetics.

As mentioned, HoloLens uses waveguide and ODG has birdbath combiners. The waveguide combiner, a flat and relatively ergonomic one, exceeds conventional HMDs in all parameters except for the field of view. The waveguide architecture has a very limited angular bandwidth, which results in a narrow FOV. And if Microsoft were to scale FOV – and if they could – the price tag for the headset would skyrocket. Today the rig costs $3000.

The birdbath can provide better FOVs, but it remains more space-consuming than waveguide.

We can’t resolve the FOV problem now. We either need large devices that won’t match the sought-after eyeglasses form or we must settle for a very narrow FOV in exchange for good image quality and flat, elegant lenses.

Well, there are some signs from Microsoft that may inspire a bit of optimism. Are you wondering why HoloLens would mount a super expensive waveguide combiner if it doesn’t make much difference in terms of the form factor? Why wouldn’t they use a cheaper birdbath instead? It seems they wanted to stick with waveguides and test the technology now so that they can use it in future generations of AR devices.

In late December 2016, when HoloLens already had the look we’re familiar with, Microsoft patented the technology that combines waveguides with the pinlight (light field) approach. Pinlight produces a very poor image quality compared to waveguide. But it can support a wide FOV.

What does Microsoft suggest? According to the patent, they consider using configurations selectively. If a virtual object appears in the peripheral vision, it can be rendered with low-quality but high FOV pinlight while objects in the middle of the view will be rendered with waveguides.

So how does it work for near-eye displays?

We can’t measure the resolution of an eye with pixels directly. According to estimates, the near-eye displays should have at least 60 pixels per degree of our field of view. Then we’ll achieve a sharp image with no pixels visible. But even for a relatively narrow field of view – that existing AR devices have – our conventional resolutions fall short.

For example, ODG R-9 will have 1920 x 1080 resolution per eye. To reach the level of 60 pixels per degree horizontally with 50° FOV that R-9 has, the display should have 3000 pixels horizontally instead of 1920.
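The arithmetic behind these figures is easy to check. Here is a minimal sketch, assuming pixels are spread evenly across the field of view (the same simplification the estimate above makes); the function names are made up for illustration.

```python
def required_pixels(fov_degrees: float, pixels_per_degree: float = 60.0) -> int:
    """Horizontal pixels needed to reach a target angular resolution across a FOV."""
    return round(fov_degrees * pixels_per_degree)


def angular_resolution(pixels: int, fov_degrees: float) -> float:
    """Pixels per degree that a display delivers across a FOV."""
    return pixels / fov_degrees


if __name__ == "__main__":
    # 50 degree FOV at the 60 pixels-per-degree target
    print(required_pixels(50))
    # A wider, VR-like 110 degree FOV at the same target
    print(required_pixels(110))
    # What a 1920-pixel-wide display gives over 50 degrees
    print(angular_resolution(1920, 50))
```

Under this linear approximation, a 1920-pixel-wide display stretched over 50° delivers roughly 38 pixels per degree, well short of the 60 that the estimate calls for.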

But do we really need 6600 pixels per eye to make a sharp image to cover say 110° FOV?

In fact, our eyes have variable resolution that changes depending on the object we focus on. So, the real FOV where the highest resolution is applied is only about 40° wide. But the problem is that our eyes tend to move, instead of focusing only on the objects in the middle of the FOV. This means two things:

As Karl Guttag, a heads-up and near-eye display devices engineer, says: “I don’t see any technology that will be practical in high volume (or even very expensive at low volume) that is going to simultaneously solve the angular resolution and FOV that some people want.”

The problem with AR devices is that, without multiple focal planes (at least five), the virtual image always stays in focus no matter what distance it is supposed to be at. This will result in eye fatigue and generally will impact the immersion.

Magic Leap claimed that they would use the light field technology to make accommodation possible. If you remember, the pinlight version of light fields breaks the image into individual light rays allowing our eyes to adjust to distances and focus correctly. But the resolution problem of light fields deters companies from using them, with one exception besides Magic Leap.

Avegant, the AR company that just recently introduced the Glyph Light Field headset, claims that they use the light field approach to make multiple digital focus planes. And here’s when things start being a bit enigmatic, because technically they use birdbath optics and wouldn’t say exactly how their focal planes adjust. The only thing that we know is that they’ve achieved impressive accommodation results without adding moving parts and eye tracking.

In other aspects, the Glyph Light Field is a bulky headset tethered to a computer. But hey, they acknowledge it to be a prototype! So maybe Avegant will soon become the main competitor to HoloLens, at least in terms of accommodation.

To place virtual objects into the physical reality, we need to create a virtual copy that the device is going to deal with. Then we must track the position of a person wearing the device to correctly render virtual objects. There are many videos on the web showing how it works. Check the one from HoloLens, for example.

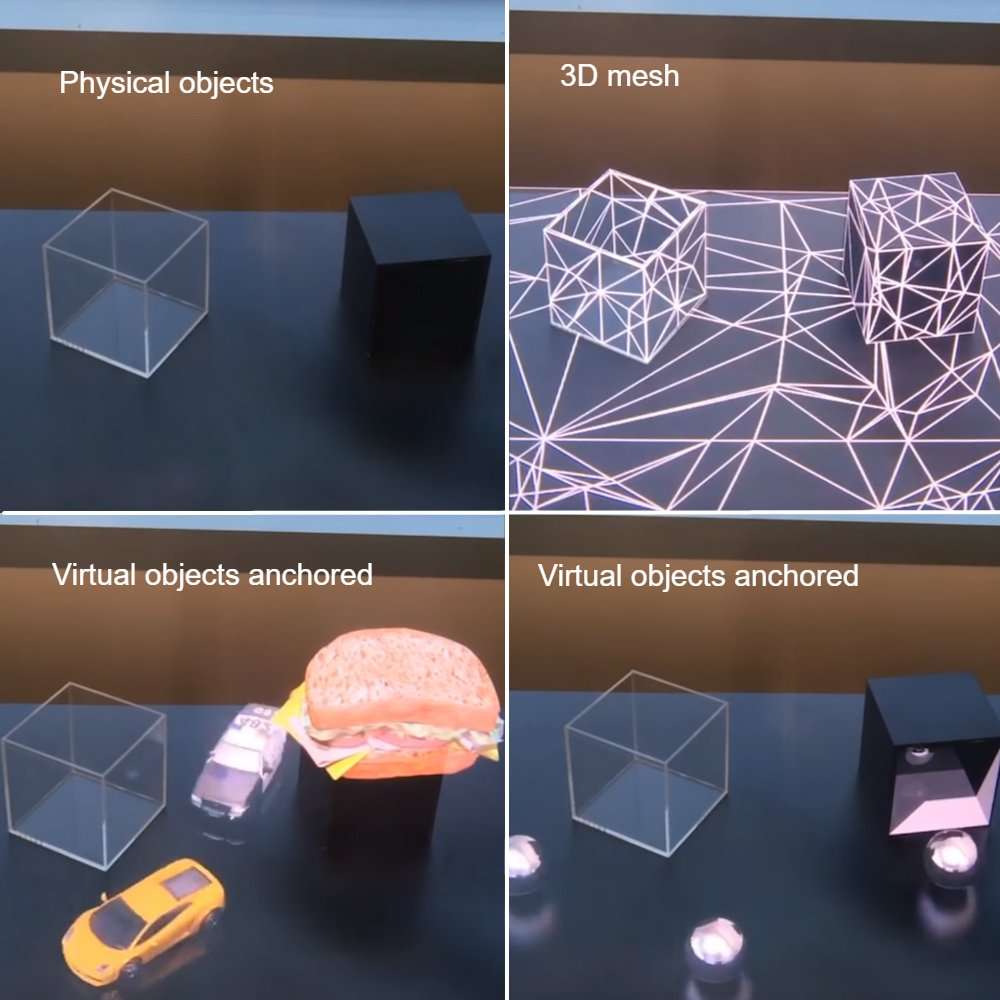

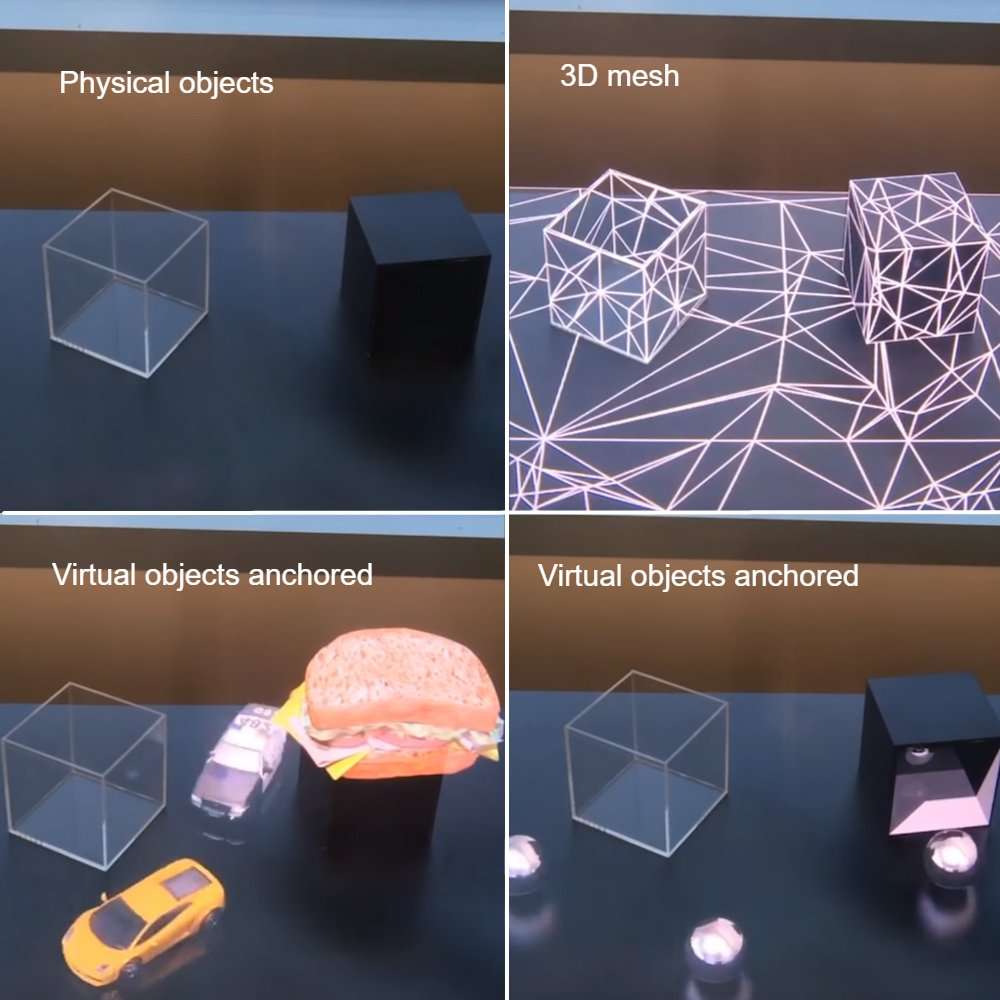

The most common approach to spatial mapping is using depth-sensing cameras. They would capture the environment’s image, sending it to a processing unit to analyze the depths of objects. Then the system would create a mesh, basically, a 3D model of the environment, that would allow for the placing of virtual objects.
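To make the first step of that pipeline a bit more concrete, here is a rough sketch of how a depth image can be turned into 3D points before any mesh is built. It assumes a simple pinhole camera model; the intrinsics (`fx`, `fy`, `cx`, `cy`) and the function name are hypothetical and not taken from any headset SDK.

```python
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]


def depth_to_points(
    depth: Sequence[Sequence[float]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    max_range: Optional[float] = None,
) -> List[Point]:
    """Back-project a depth image (meters per pixel) into camera-space 3D points.

    Assumes a pinhole camera: fx, fy are focal lengths in pixels and
    (cx, cy) is the principal point. Pixels with no reading (depth <= 0)
    or beyond max_range are skipped.
    """
    points: List[Point] = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:
                continue  # no depth reading for this pixel
            if max_range is not None and z > max_range:
                continue  # too far away to trust
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # Tiny 2x2 "depth image"; the 4.0 m pixel is dropped by the range limit.
    tiny_depth = [[0.0, 2.0], [1.0, 4.0]]
    print(depth_to_points(tiny_depth, fx=1.0, fy=1.0, cx=0.5, cy=0.5, max_range=3.1))
```

A real system would then fuse points from many frames and triangulate them into a mesh; that part is left out here.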

If you ever watched Hyper-Reality, the video which portrays the morbid AR future, note that we still can’t do anything like that in terms of mapping. The video shows how the protagonist goes through streets and shops encountering hundreds of virtual objects anchored to the physical environment.

But before placing any virtual objects, the AR device must see the environment in advance to create its 3-D copy. Imagine that you’ve decided to create an action game that takes place on your block. You’d likely want enemies coming at you from all directions. You want them to sit on rooftops, unexpectedly come from around the corner of your house, or say shoot at you from your neighbor's windows. That would be cool, right?

Well, to do this with the existing technology, you first have to explore your neighborhood to the nth degree, in all possible directions to let the device map your playground. If you’re using HoloLens, you’d have to make sure that you approach and look at all objects within 3.1 meters in front of you. If you don’t, the camera won’t capture all details that you want to interact with.

Is there a way around it? Well, yes. Eventually, we may map streets and public places beforehand, just like Google does with Street View, but applying the depth cameras. Or an AR community may contribute to it once the technology reaches mass adoption. So, it’s possible as soon as we deal with the optics problems.

Let's sum up.

The look. There’s still no device that would have an acceptable form, namely looking like glasses. The waveguide optics allow for that but they don’t seem to make a big impact on the device look in the case of HoloLens. It will probably take a few years to make the rest of hardware small enough.

Field of view. Here comes the painful part. There are pathways to a decent FOV, but the trade-offs are too significant to make them useful. It seems that, for now, we must accept what we have in terms of FOV if we want a sharp image.

Resolution. The resolution section above comes down hard on existing devices, saying that current resolutions are nowhere near the desired result, and that things would get even worse if we were to increase FOV. Well, the resolution problem doesn’t seem so problematic, at least for HoloLens reviewers. You may try Oculus Rift or HTC Vive. They also fall short of the desired resolution bar. If you’re okay with them, maybe you’ll be okay with AR as well.

Accommodation. That’s the factor that we don’t know much about. We’ve seen a decent prototype by Avegant, but it leaves many things in the uncertainty area. Will they be able to make the headset smaller and independent of a PC? Will accommodation work as smoothly with third-party applications as it does with demos? Will they be able to make smaller lenses? Too many questions.

Environment mapping. It’s too early to say that we’re entirely through with the mapping question. But it seems that once AR adoption becomes a mass thing, the existing technologies will be enough to support ubiquitous mapping.

Things that we didn’t cover. Obviously, the list of challenges described isn’t exhaustive. For example, engineers still have to figure out how to get occlusion right, i.e. selectively block certain parts of the real image to avoid visible transparency of the virtual ones. The only technology that can handle occlusion right is pinlight. The patent from Microsoft we mentioned also covers this problem, claiming that combined pinlight+waveguide optics could solve the occlusion issue. If you are impressed with ODG R-9, we should mention that it is likely to have a very low see-thru capacity, blocking around 95 percent of real-world light. This could make it very impractical in dark conditions.

And there are many other things blocking AR’s future so far that aren’t so important. But let’s stay optimistic. The high competition among players is inspiring in and of itself. Yes, the price levels and unbaked technology aren’t promising AR to become a mass thing in 10 or so years. But it’s likely that we’ll see corporate adoption soon. Designers, construction workers, and surgeons can already benefit from AR headsets. And they won’t ask for “immersive experiences,” just some necessary and practical basics. So, let’s stick with that for now. It’s an exciting place to be.

Combiners: optical devices behind AR

You may be wondering how virtual reality (VR) relates to augmented reality (AR) as they are usually mentioned together. Virtual reality provides entirely virtual experiences without messing with the physical reality image. Augmented reality tries to mix a physical image with the virtual one, which makes things much more complicated in terms of optics engineering. And if we consider augmented reality as the next generation of interfaces, not only must it render images, it must also make all computational operations within a device so that we can discard our smartphones for wearable AR devices, i.e. glasses.

But today, VR and AR should be considered as entirely different branches of engineering that aren’t going to intersect any time soon. The use cases are also different as VR inevitably transitions you into the new environment while AR is always about the combination of physical and virtual reality.

The first thing to note about augmented reality is that the main technological advancements and existing challenges revolve around the optics, particularly, the lenses that make all the magic happen. The way optics are built (and how physics works) defines the set of problems AR engineers struggle to solve:

- How is the device going to look? Is it going to be a bulky helmet or will they eventually be mistaken for regular glasses?

- What’s the field of view (FOV) going to be? What’s the width and height of the image we can get compared to our natural field of view?

- Is the graphical image going to be sharp enough? We don’t want to see the pixels.

- Will accommodation be available? Will we be able to focus on different graphical objects that are mapped to physical reality at different distances, as we do with the real objects?

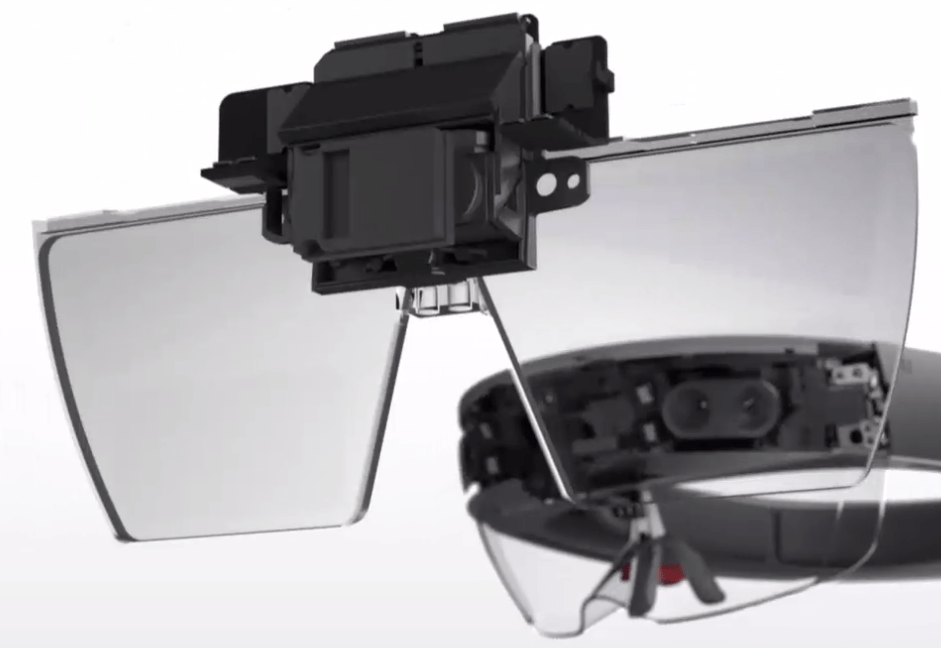

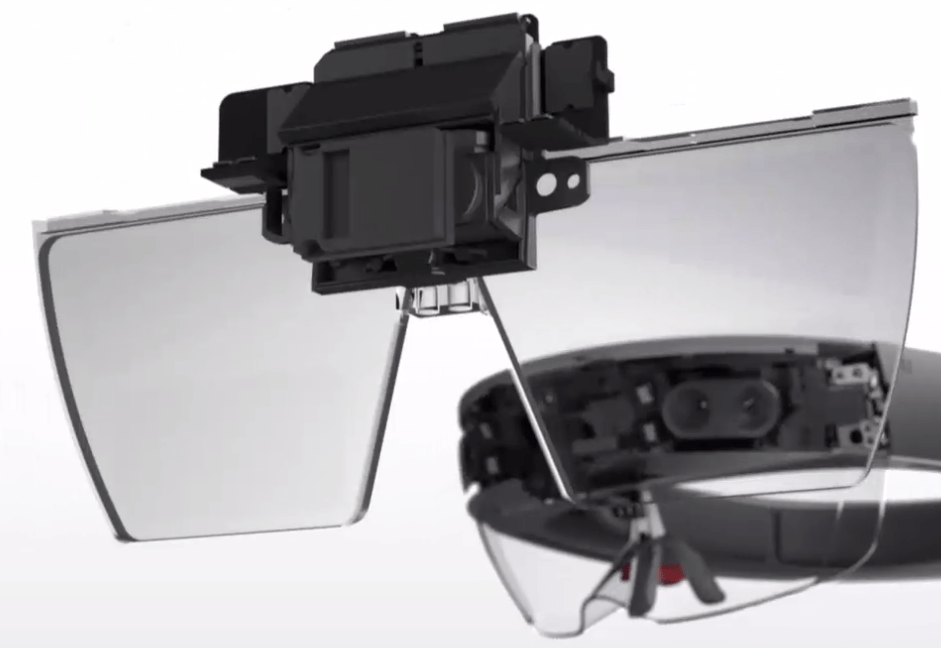

HoloLens combiner Source: HoloLens Hardware

What types of combiners are there? First, there’s no best approach to making combiners. We’ve seen just the first generation of AR devices and all types of them have significant pros and cons. There’s no winner yet, but there are a lot of trade-offs for each type. Let’s outline the main competitors to later see how they perform against the problems mentioned.

Conventional HMD (head-mounted displays). The combiners of the conventional type are well-established and have been used for decades for military and scientific purposes. The beam of light from the display reaches the surface of a combiner, a semi-transparent mirror, and reflects to the eye. There’s a handful of types within conventional HMDs but the main principle is preserved. Most of the displays are either too bulky or, if they aren’t, provide such a narrow field of view that it can’t be considered a sustainable solution for a consumer-focused AR product.

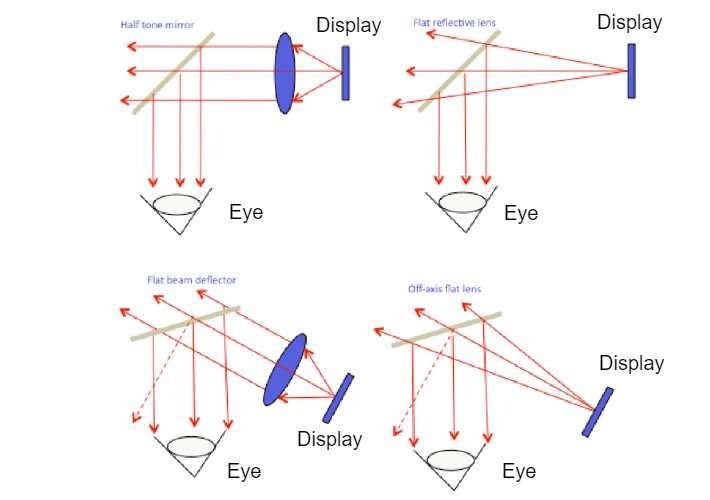

Variations of conventional combiners Source: Diffractive and Holographic Optics as Optical Combiners in Head Mounted Displays

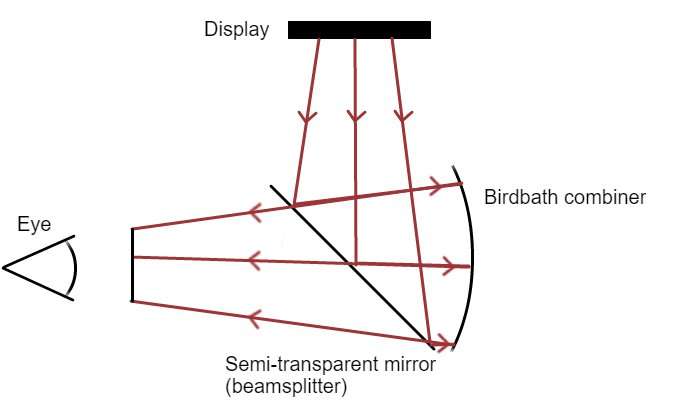

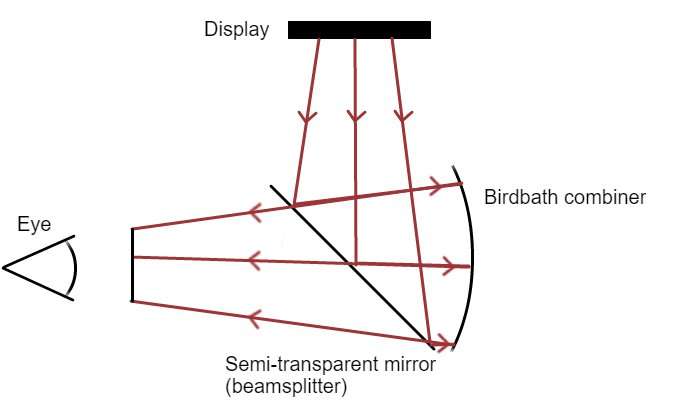

Birdbath combiners. These can also be considered as conventional combiners, but let’s examine this technology as it plays an important role in the world of modern AR. The name for the birdbath combiner comes from the specific shape of the mirror that looks like... a birdbath. According to this design, the light coming from a display first gets redirected to this “birdbath” and then it reflects the light back to the eye. Birdbath combiners also require ample room, and their major drawback is the loss of light that must pass through a semi-transparent mirror (beamsplitter) twice. Look at the picture below. The birdbath design is quite popular because it is technically simple, cheap, and provides slightly better results with FOV than the next one we’ll talk about.

Birdbath combiner
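The light loss in a birdbath can be estimated with a back-of-the-envelope calculation. The sketch below assumes an ideal, lossless beamsplitter that the display light meets twice, once in reflection and once in transmission, and it ignores any losses at the curved mirror itself. The function name is invented for illustration.

```python
def birdbath_display_throughput(reflectance: float) -> float:
    """Fraction of display light that survives two encounters with the beamsplitter.

    Assumes an ideal lossless beamsplitter, so transmittance = 1 - reflectance.
    The light is reflected once and transmitted once on its way to the eye.
    """
    if not 0.0 <= reflectance <= 1.0:
        raise ValueError("reflectance must be between 0 and 1")
    transmittance = 1.0 - reflectance
    return reflectance * transmittance


if __name__ == "__main__":
    for r in (0.3, 0.5, 0.7):
        print(f"reflectance {r:.0%} -> throughput {birdbath_display_throughput(r):.0%}")
```

Under these assumptions the best case is a 50/50 split, and even then only a quarter of the display light reaches the eye.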

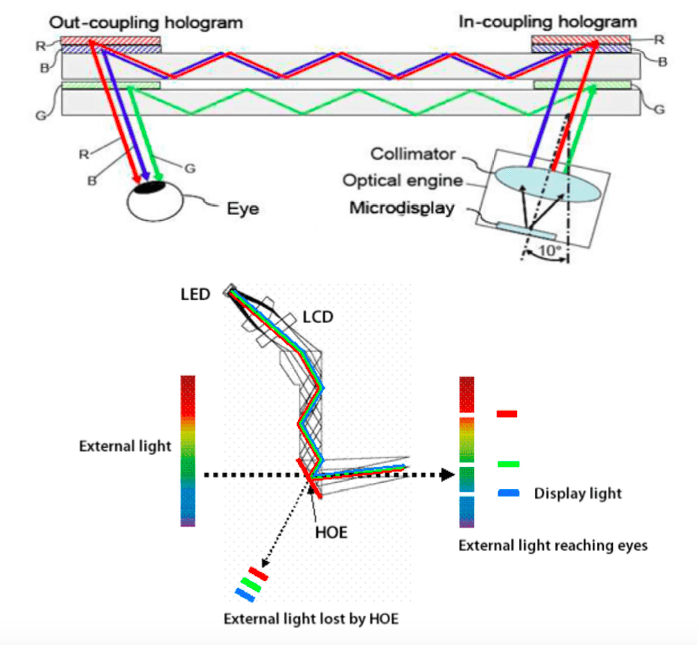

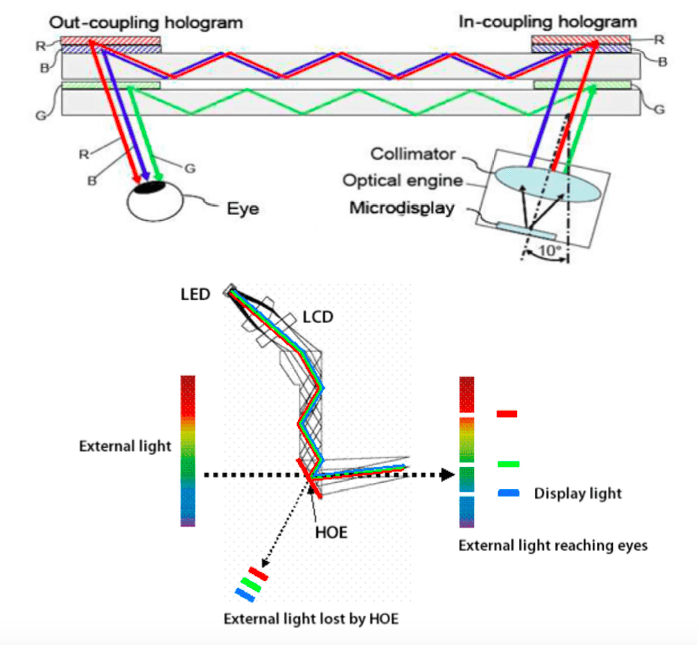

Waveguide combiners. Waveguide combiners are nascent, and today can be considered a cutting-edge technology. Waveguide doesn’t have an established supply chain and costs roughly 10 times more than birdbath. The main player that uses waveguides is HoloLens. The approach differs from conventional combiners. The light beam goes into a waveguide pipe and bounces through it before reaching the eye. This is by far the most compact type, resembling regular glasses. But as we’ll discuss below, it’s hard to achieve high FOV with them.

Waveguide combiners, top: Sony; bottom: Konica Source: Diffractive and Holographic Optics as Optical Combiners in Head Mounted Displays

Light Field displays. This technology stands apart from the rest because it uses an LCD panel stacked with microlenses or another type of splitting device that divides an image into individual light rays. The difference from the previous types is in the fact that the image isn’t reflected through additional optics but is projected directly into the eye. In terms of augmented reality, the most noticeable technique of light fields is called pinlight. To engage in oversimplification, you can say someone looks through a transparent monitor where some pixels appear and some disappear once you need to render a virtual image. To get a better understanding, look at the video explainer. Pinlight technique allows the human eye to accommodate and it also supports a reasonably wide FOV. The main drawback of a pinlight see-thru display, however, is poor image quality. Most AR companies use either conventional combiners or waveguides for this reason.

Let’s roughly sum up benefits and trade-offs.

So, here they are – the most common optics currently used in AR devices. So how are the main players using them and what have they achieved so far?

We need glasses

In 2012, augmented reality explorer Steve Mann was attacked in a Parisian McDonald's for wearing a digital eye headset. The man himself considers the incident to be the first case of an assault against a cyborg, because the set is physically attached to his skull and needs special instruments to be removed. And this is not the only case. Multiple incidents were reported in which people reacted aggressively to seeing augmented reality devices such as Google Glass.

Before they reach mass adoption, headsets should be first accepted by a society, both by the people wearing them and those who aren’t. Modern AR devices look more like bulky retro-futuristic props and less like something that you want to wear when hanging out with friends or buying groceries. If we consider mass adoption, our future AR goggles should look like Snapchat Spectacles.

Perhaps, aesthetics (and a $3K price tag) is the reason why HoloLens today aims mostly at corporate consumers – designers, construction workers, and doctors, rather than mass users. The closest form to that of eyeglasses has been introduced by ODG. But even their headset looks bulky.

Interestingly though, ODG applies a larger birdbath combiner compared to the flat waveguide that HoloLens has. But the form factor is still heavily reliant on other hardware. Engineers need some space to squeeze it all into a headset. The newest version of ODG, for instance, will be powered by the Qualcomm Snapdragon 835 8-core processor, which is currently in the flagship smartphone Samsung Galaxy S8. It also carries 6 gigs of RAM and 128 gigs of internal storage besides 4 cameras, sensors, communicators, speakers, and see-thru displays. These are impressive specs for smartphones, let alone wearables.

Not only must engineers minimize required space, but also figure out the passive cooling system (no fans) that would keep you from burning your head on a daily basis. Another top competitor, Meta 2, solves the hardware problem by making a detour and providing a headset that needs to be tethered to a computer. Are you ready to carry an additional hardware box around?

The form factor problem is still unsolved, but it’s at the point where we can be pretty optimistic considering that Moore's law is still working and we continue to gradually increase computing power of processing units. But it’s very unlikely that we’ll mistake an AR device for sunglasses very soon.

Field of view (FOV)

You’d be surprised to know how wide a human’s field of view is. Our binocular and monocular vision combined reach about 200° horizontally and 140° vertically. And we don’t look like pigeons! The binocular horizontal view is about 120°. That excludes the monocular view, the degrees that each eye covers separately on its own side.

The main complaint about HoloLens is the narrow field of view, just about 40° horizontally and 18° vertically. But Microsoft isn’t leading here. Let’s have a look at horizontal FOV of an average person put against fields of view suggested by HoloLens, ODG, and Meta 2.

Basically, when you wear HoloLens, AR objects are visible in a near-rectangular opening in the middle, just like you’re looking at a desktop monitor. ODG is a bit better and the winner is Meta 2 with 90° FOV, which doesn’t even cover binocular vision. It’s an arguable point which FOV will be enough to get the full immersion. If you’ve ever tried VR headsets Oculus Rift or HTC Vive, their horizontal FOVs are pretty much the same, about 110°, and for many, this is not enough as you still notice borders on peripheries.
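To put the horizontal numbers side by side, here is a small sketch that expresses each device’s FOV as a share of human vision. The device figures are the ones quoted in this article (with 50° for the ODG R-9). Comparing plain horizontal angles is a simplification, since it ignores vertical FOV and lens shape, and the names in the code are illustrative only.

```python
HUMAN_TOTAL_DEG = 200      # binocular + monocular, horizontal
HUMAN_BINOCULAR_DEG = 120  # binocular only, horizontal

# Horizontal FOV figures as quoted in the article
DEVICE_FOV_DEG = {
    "HoloLens": 40,
    "ODG R-9": 50,
    "Meta 2": 90,
    "Oculus Rift / HTC Vive": 110,
}


def coverage(device_fov_deg: float, human_fov_deg: float) -> float:
    """Share of a human horizontal FOV that a device covers, capped at 100%."""
    return min(device_fov_deg / human_fov_deg, 1.0)


if __name__ == "__main__":
    for name, fov in DEVICE_FOV_DEG.items():
        binocular = coverage(fov, HUMAN_BINOCULAR_DEG)
        total = coverage(fov, HUMAN_TOTAL_DEG)
        print(f"{name:<24} {fov:>4}°  binocular: {binocular:>4.0%}  total: {total:>4.0%}")
```

By this rough measure, HoloLens covers about a third of our binocular view, and Meta 2 about three quarters.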

Why is it such a problem to make at least a 120° FOV? And, by the way, why are FOVs so different across devices?

The field-of-view problem stems from two factors:

- The form

- The type of combiner

But how did Meta 2 achieve an impressive 90° FOV? Well, they basically sacrificed aesthetics.

Meta 2 being worn Source: Wikipedia

Meta 2 uses a conventional HMD (head-mounted display) combiner that is good at FOV but fails in many other aspects, including form factor, image resolution, and others.

As mentioned, HoloLens uses waveguide and ODG has birdbath combiners. The waveguide combiner, a flat and relatively ergonomic one, exceeds conventional HMDs in all parameters except for the field of view. The waveguide architecture has a very limited angular bandwidth, which results in a narrow FOV. And if Microsoft were to scale FOV – and if they could – the price tag for the headset would skyrocket. Today the rig costs $3000.

The birdbath can provide better FOVs, but it remains more space-consuming than waveguide.

We can’t resolve the FOV problem now. We either need large devices that won’t match the sought-after eyeglasses form or we must settle for a very narrow FOV in exchange for good image quality and flat, elegant lenses.

Well, there are some signs from Microsoft that may inspire a bit of optimism. Are you wondering why HoloLens would mount a super expensive waveguide combiner if it doesn’t make much difference in terms of the form factor? Why wouldn’t they use a cheaper birdbath instead? It seems they wanted to stick with waveguides and test the technology now so that they can use it in future generations of AR devices.

In late December 2016, when HoloLens already had the look we’re familiar with, Microsoft patented the technology that combines waveguides with the pinlight (light field) approach. Pinlight produces a very poor image quality compared to waveguide. But it can support a wide FOV.

What does Microsoft suggest? According to the patent, they consider using configurations selectively. If a virtual object appears in the peripheral vision, it can be rendered with low-quality but high FOV pinlight while objects in the middle of the view will be rendered with waveguides.
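As a toy illustration of that selection logic, and not of anything the patent actually specifies, the choice could look like the sketch below. The 20° threshold is simply half of the roughly 40° horizontal FOV quoted for HoloLens; both the threshold and the function name are assumptions.

```python
def choose_optics(angle_from_center_deg: float,
                  waveguide_half_fov_deg: float = 20.0) -> str:
    """Pick an optical path for an object at a horizontal angle from the display center.

    The default threshold is an assumption: half of a ~40 degree waveguide FOV.
    """
    if abs(angle_from_center_deg) <= waveguide_half_fov_deg:
        return "waveguide"  # sharp image, narrow FOV
    return "pinlight"       # poorer image, wide FOV


if __name__ == "__main__":
    for angle in (0, 15, -20, 35, -60):
        print(f"{angle:>4}° -> {choose_optics(angle)}")
```

Note that the angle here is measured from the center of the display, not from where the eye is actually looking.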

Sadly, many patents look more like something out of science fiction than of real technical documentation. So far, it’s safer to stay skeptical until we see at least a prototype. And there’s another problem that we are just about to discuss.

Resolution

We’re used to high resolution on our displays, high enough to never notice pixels. If you were to peek into an iPhone 6 or 7, you wouldn’t be able to distinguish separate dots. For example, iPhone 6 Plus has the resolution of 1920 x 1080 pixels. And we usually look at our smartphones from a reasonable distance, while screens themselves cover just a few degrees of our FOV.

So how does it work for near-eye displays?

We can’t measure the resolution of an eye with pixels directly. According to estimates, the near-eye displays should have at least 60 pixels per degree of our field of view. Then we’ll achieve a sharp image with no pixels visible. But even for a relatively narrow field of view – that existing AR devices have – our conventional resolutions fall short.

For example, ODG R-9 will have 1920 x 1080 resolution per eye. To reach the level of 60 pixels per degree horizontally with 50° FOV that R-9 has, the display should have 3000 pixels horizontally instead of 1920.

But do we really need 6600 pixels per eye to make a sharp image to cover say 110° FOV?

In fact, our eyes have variable resolution that changes depending on the object we focus on. So, the real FOV where the highest resolution is applied is only about 40° wide. But the problem is that our eyes tend to move, instead of focusing only on the objects in the middle of the FOV. This means two things:

- Yes, we really need displays with 6600 pixels per eye.

- The possible combination of waveguide and pinlight technologies in one device from Microsoft, described in the previous section, would penalize our eye movement. We would still have to look at the middle of our FOV to get the best picture.

As Karl Guttag, a heads-up and near-eye display devices engineer, says: “I don’t see any technology that will be practical in high volume (or even very expensive at low volume) that is going to simultaneously solve the angular resolution and FOV that some people want.”

Accommodation

One of the major advancements that Magic Leap bragged about is the accommodation-friendly system. Basically, accommodation means that we can focus our vision on objects at various distances. Our eyes solve this task reasonably well, adjusting our focal point in a split second. The problem with AR devices is that the virtual image stays at one fixed focus unless the device has multiple focal planes, at least five. This results in eye fatigue and generally hurts immersion.

Magic Leap claimed that they would use light field technology to make accommodation possible. If you remember, the pinlight version of light fields breaks the image into individual light rays, allowing our eyes to adjust to distances and focus correctly. But the resolution problem of light fields deters companies from using them, with one exception besides Magic Leap.

Avegant, the AR company that just recently introduced the Glyph Light Field headset, claims that they use the light field approach to make multiple digital focus planes. And here’s where things get a bit enigmatic, because technically they use birdbath optics and won’t say exactly how their focal planes adjust. The only thing we know is that they’ve achieved impressive accommodation results without adding moving parts or eye tracking.

In other aspects, the Glyph Light Field is a bulky headset tethered to a computer. But hey, they acknowledge it to be a prototype! So maybe Avegant will soon become the main competitor to HoloLens, at least in terms of accommodation.

Environment mapping

Let’s touch on something beyond optics and something that we’re competent at. Environment mapping, or spatial mapping, is needed for augmented reality devices to anchor objects to physical places. To place virtual objects into physical reality, we need to create a virtual copy of the environment for the device to work with. Then we must track the position of the person wearing the device to correctly render virtual objects. There are many videos on the web showing how it works. Check the one from HoloLens, for example.

The most common approach to spatial mapping is using depth-sensing cameras. They would capture the environment’s image, sending it to a processing unit to analyze the depths of objects. Then the system would create a mesh, basically, a 3D model of the environment, that would allow for the placing of virtual objects.
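To give a feel for the first step, here is a minimal Python sketch that turns a depth image into 3D points using the standard pinhole camera model. It is not how HoloLens or any particular device does it; the camera parameters `fx`, `fy`, `cx`, and `cy` are placeholders, and a real pipeline would go on to fuse many frames and build a mesh from the points.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth: List[List[float]],
                    fx: float, fy: float,
                    cx: float, cy: float) -> List[Point]:
    """Back-project a depth image (meters) into camera-space 3D points.

    fx, fy: focal lengths in pixels; cx, cy: principal point in pixels.
    Pixels with zero or negative depth are treated as "no reading".
    """
    points: List[Point] = []
    for v, row in enumerate(depth):      # v: pixel row
        for u, z in enumerate(row):      # u: pixel column
            if z <= 0:
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


# Tiny 2x2 "depth image" with one missing reading and made-up intrinsics.
cloud = depth_to_points([[1.0, 0.0], [2.0, 2.0]],
                        fx=1.0, fy=1.0, cx=0.0, cy=0.0)
print(cloud)
```

Each valid pixel becomes one point in space; the mesh is what you get after connecting neighboring points into triangles.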

ODG mapping. Source: ODG 4K Augmented Reality Review, better than HoloLens?

The current state of technology allows us to create the mesh almost instantaneously as the depth-sensing camera captures the environment. But this should also be taken with a grain of salt. If you ever watched Hyper-Reality, the video that portrays a morbid AR future, note that we still can’t do anything like that in terms of mapping. The video shows the protagonist going through streets and shops, encountering hundreds of virtual objects anchored to the physical environment.

But before placing any virtual objects, the AR device must see the environment in advance to create its 3-D copy. Imagine that you’ve decided to create an action game that takes place on your block. You’d likely want enemies coming at you from all directions. You want them to sit on rooftops, unexpectedly come from around the corner of your house, or, say, shoot at you from your neighbor’s windows. That would be cool, right?

Well, to do this with the existing technology, you first have to explore your neighborhood to the nth degree, in all possible directions to let the device map your playground. If you’re using HoloLens, you’d have to make sure that you approach and look at all objects within 3.1 meters in front of you. If you don’t, the camera won’t capture all details that you want to interact with.

Is there a way around it? Well, yes. Eventually, we may map streets and public places beforehand, just like Google does with Street View, but using depth cameras. Or an AR community may contribute to it once the technology reaches mass adoption. So, it’s possible as soon as we deal with the optics problems.

So, what do we have?

Although this story is only the second in our Reality Check series (if you missed it, read our AI Reality Check), it’s becoming a tradition to look at overhyped technologies with skepticism. But you see, hype is good. It makes us invest financially and emotionally in tech’s bright future. If we didn’t, we wouldn’t have what we have today. Hype has its place. Let’s sum up.

The look. There’s still no device that has an acceptable form, namely one that looks like glasses. Waveguide optics allow for that, but they don’t seem to make a big impact on the device’s look in the case of HoloLens. It will probably take a few years to make the rest of the hardware small enough.

Field of view. Here comes the painful part. There are pathways to a decent FOV, but the trade-offs are too significant to make them useful. It seems that for now we must accept what we have in terms of FOV if we want a sharp image.

Resolution. The resolution section above comes down hard on existing devices, saying that current resolutions are nowhere near the desired result, and that things would get even worse if we were to increase FOV. Well, the resolution problem doesn’t seem so problematic, at least for HoloLens reviewers. You may try Oculus Rift or HTC Vive. They also fall short of the desired resolution bar. If you’re okay with them, maybe you’ll be okay with AR as well.

Accommodation. That’s the factor that we don’t know much about. We’ve seen a decent prototype by Avegant, but it leaves many things uncertain. Will they be able to make the headset smaller and independent of a PC? Will accommodation work as smoothly with third-party applications as it does with demos? Will they be able to make smaller lenses? Too many questions.

Environment mapping. It’s too early to say that we’re entirely through with the mapping question. But it seems that once AR adoption becomes a mass thing, the existing technologies will be enough to support ubiquitous mapping.

Things that we didn’t cover. Obviously, the list of challenges described isn’t exhaustive. For example, engineers still have to figure out how to get occlusion right, i.e. selectively block certain parts of the real image so that virtual objects don’t look transparent. The only technology that can handle occlusion right is pinlight. The patent from Microsoft we mentioned also covers this problem, claiming that combined pinlight+waveguide optics could solve the occlusion issue. If you are impressed with ODG R-9, we should mention that the glasses are likely to have a very low see-through capacity, blocking around 95 percent of real-world light. This could make them very impractical in dark conditions.

And there are many other, less important things standing in the way of AR’s future. But let’s stay optimistic. The high competition among players is inspiring in and of itself. Yes, the price levels and unbaked technology don’t promise that AR will become a mass thing within 10 or so years. But it’s likely that we’ll see corporate adoption soon. Designers, construction workers, and surgeons can already benefit from AR headsets. And they won’t ask for “immersive experiences,” just some necessary and practical basics. So, let’s stick with that for now. It’s an exciting place to be.