Data doesn’t move itself. It needs instructions: what to do, when to do it, and what to wait for. Without them, you end up with operations that break, reports that pull incomplete numbers, and teams that don’t trust the information they’re given.

This is where data orchestration comes in. It acts as the conductor of your data ecosystem, managing the sequence, timing, and logic behind your data workflows. Instead of chasing down failures or manually kicking off processes, you let orchestration automate the flow from source to destination, cleanly and reliably.

This article explores what data orchestration is, how it works, its core components and steps, and the tools that can help you orchestrate your data more efficiently.

Let’s get into it.

What is data orchestration?

Data orchestration is how you manage, schedule, and coordinate data tasks so they run in the right order, with the right logic, and can recover gracefully if something fails. It’s like putting a conductor in front of your data stack to direct each step and make sure everything works smoothly from start to finish.

You decide what should happen, when, and under what conditions. The orchestration system handles the rest, whether that’s kicking off a data process, retrying a failed task, or sending you an alert when something breaks.

Key data orchestration terms you should know

If you're new to data engineering, you may encounter some unfamiliar terms while reading. To help you out, here’s a brief overview of some key concepts and buzzwords so you can easily follow along.

- Data task: an individual unit of work in a workflow. Ideally, each task does one thing, like renaming columns in a dataset or checking whether a file exists.

- Data pipeline: a set of tools and activities for collecting, modifying, and moving data from one system to another, where it can be stored and managed differently.

- DAG (Directed Acyclic Graph): a mathematical abstraction of a data pipeline that defines the order of operations. It's "directed" because each edge points one way, from an upstream task to the downstream task that depends on it. It's "acyclic" because no path loops back to an earlier task, so work only moves forward. Some tools can visualize DAGs, but they are typically defined in code.

- DAG run: an instance of a DAG being executed. Each run has its own status and results based on the input and schedule.
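To make these terms concrete, here is a minimal sketch in plain Python. It uses the standard library only and is not any orchestrator's API.

It assumes a DAG can be represented as a mapping from each hypothetical task to the set of upstream tasks it depends on. `graphlib.TopologicalSorter` then produces an order that respects every dependency, and it rejects graphs that loop back on themselves. The `DagRun` class is an illustrative stand-in for a single execution that tracks its own per-task status.

```python
from dataclasses import dataclass, field
from graphlib import CycleError, TopologicalSorter

# A DAG as plain data: each (hypothetical) task maps to the set of
# upstream tasks that must finish before it can start.
dag = {
    "extract": set(),
    "clean": {"extract"},
    "load": {"clean"},
}


def execution_order(graph: dict[str, set[str]]) -> list[str]:
    """Return an order in which every task comes after its upstream tasks.

    Raises graphlib.CycleError if the graph loops back on itself,
    i.e. if it is not acyclic.
    """
    return list(TopologicalSorter(graph).static_order())


@dataclass
class DagRun:
    """One execution of a DAG, with its own per-task status."""
    run_id: str
    status: dict[str, str] = field(default_factory=dict)


if __name__ == "__main__":
    run = DagRun(run_id="2024-05-01")
    for task in execution_order(dag):
        run.status[task] = "success"  # a real run would execute the task here
    print(run)

    try:
        execution_order({"a": {"b"}, "b": {"a"}})
    except CycleError:
        print("rejected: the graph contains a cycle")
```

Real orchestrators add scheduling, persistence, and a UI on top of this. Ordering tasks by their dependencies is still the core idea behind a DAG.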

With that, we’re all caught up on some essential terms and concepts around data orchestration.

Difference between data orchestration, ETL, data integration, and data ingestion

Data orchestration is often confused with other concepts in the data ecosystem. While they’re all related, they have their differences.

Data orchestration vs data integration. Data integration involves combining data from multiple systems into a unified dataset, which can be used for analytics or business intelligence. It’s more about centralizing access to data than controlling the timing or sequence of how it gets processed. Orchestration, however, helps you manage when and how integration happens.

Data orchestration vs ETL/ELT. ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) are two types of data integration pipelines. Data orchestration sits a level above ETL/ELT, coordinating when and how ETL/ELT jobs run.
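The difference between ETL and ELT is the order of the same kinds of steps. The orchestration layer sits above both and decides which jobs run and when.

The sketch below illustrates that split. Its step functions are hypothetical placeholders that only record their names; a real step would talk to a source system or warehouse.

```python
# Hypothetical step functions; each just records its name so the order is visible.
def extract(log: list[str]) -> None:
    log.append("extract")


def transform(log: list[str]) -> None:
    log.append("transform")


def load(log: list[str]) -> None:
    log.append("load")


# ETL and ELT use the same kinds of steps in a different order.
ETL_JOB = [extract, transform, load]
ELT_JOB = [extract, load, transform]


def run_job(steps) -> list[str]:
    """Run one ETL/ELT job's steps in sequence and return what happened."""
    log: list[str] = []
    for step in steps:
        step(log)
    return log


def orchestrate(jobs):
    """The orchestration layer: decides which jobs run, and in what order."""
    return {name: run_job(steps) for name, steps in jobs}


if __name__ == "__main__":
    print(orchestrate([("nightly_etl", ETL_JOB), ("raw_elt", ELT_JOB)]))
```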

Data orchestration vs data ingestion. Data ingestion is the process of bringing raw data into your system. This could include streaming from Kafka, pulling files from S3 (a cloud object storage service from AWS), or syncing data from external APIs. Orchestration makes ingestion repeatable and reliable. It triggers ingestion tasks on a schedule or in response to events, and it ensures that ingestion finishes before downstream processes, such as validation or transformation, start.

When do you need data orchestration?

While data orchestration is often used to manage integration and ingestion operations, it's not limited to those use cases. It’s also a way to automate

- data migration,

- data synchronization across distributed systems,

- reporting,

- machine learning pipelines, and more.

At the same time, data orchestration might be overkill if you’re working with a single data source or on a simple schedule. Here are some signs it makes sense to bring in data orchestration.

You have many moving parts. If you’re ingesting data from multiple sources—APIs, databases, enterprise data warehouses, data lakes, etc.—and processing it, orchestration helps you coordinate how that data moves through your pipelines.

Order and timing matter. Certain tasks can’t run in parallel and must wait for others to finish. For example, you can’t run data transformation jobs before extraction is complete. Data orchestration helps you define those dependencies and manage them properly.

You want to automate and scale recurring workflows. Data orchestration can schedule and automate repetitive processes by running them at set intervals. It also helps you scale workflows by handling increased data volume, adding new sources or steps, and keeping everything running in the right order as complexity grows.

How does data orchestration work?

Let’s break down the data orchestration process to understand how it works behind the scenes. We’ll use a daily customer analytics pipeline as a real-world example.

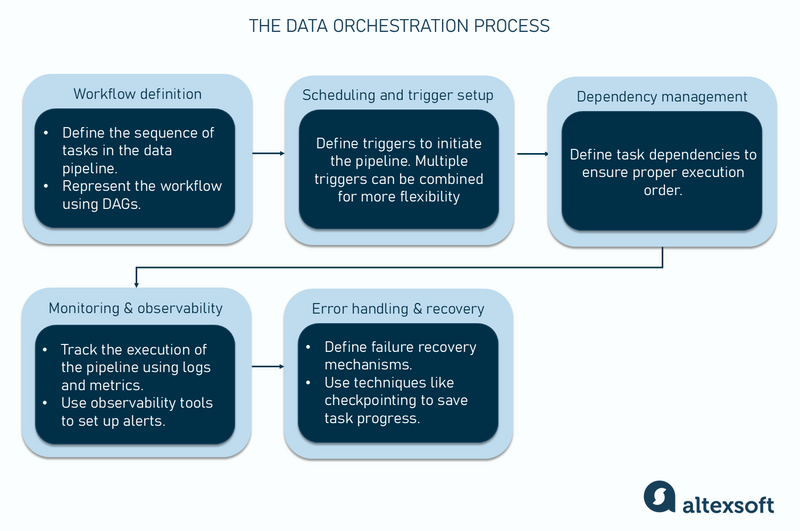

Workflow definition and setup

First, define the sequence of steps your pipeline should follow. This involves breaking the entire process into smaller, manageable tasks.

In our example, the customer analytics pipeline includes the following sequence.

- Pull raw customer data from multiple sources (a CRM such as Salesforce, a product database, and so on).

- Clean data to remove duplicates, handle missing values, and standardize formats.

- Join and enrich the data with additional fields like user location and lifetime value.

- Load the processed data into a data warehouse.

- Make the final dataset available to business intelligence (BI) tools for daily reporting and insights.

This sequence of steps can be represented as a DAG, which tools like Apache Airflow define in a Python script. Some platforms also offer built-in visual editors that let you design DAGs through a UI without writing a single line of code.
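Here is a rough, framework-free sketch of the five steps as ordinary Python functions. Several things in it are assumptions for illustration:

- The sample records are made up.
- The lifetime-value lookup is hypothetical.
- An in-memory SQLite database stands in for the data warehouse.
- A final query stands in for the BI step.

In an orchestrator, each function would become its own task.

```python
import sqlite3


def pull_raw_customer_data():
    """Hypothetical records standing in for a CRM export and a product database."""
    crm = [{"id": 1, "email": "A@EXAMPLE.COM"}, {"id": 1, "email": "a@example.com"}]
    product_db = [{"id": 2, "email": None}]
    return crm + product_db


def clean(rows):
    """Drop duplicate customer ids, fill missing emails, and lowercase emails."""
    seen, out = set(), []
    for row in rows:
        if row["id"] in seen:
            continue
        seen.add(row["id"])
        out.append({"id": row["id"], "email": (row["email"] or "unknown").lower()})
    return out


def enrich(rows, lifetime_value):
    """Join in an extra field: lifetime value keyed by customer id (hypothetical)."""
    return [{**row, "ltv": lifetime_value.get(row["id"], 0.0)} for row in rows]


def load(rows, conn):
    """Load rows into a warehouse table (an in-memory SQLite DB stands in here)."""
    conn.execute("CREATE TABLE IF NOT EXISTS customers (id INTEGER, email TEXT, ltv REAL)")
    conn.executemany("INSERT INTO customers VALUES (:id, :email, :ltv)", rows)
    conn.commit()


def run_pipeline(conn):
    # The call order encodes the DAG: each step consumes the previous step's output.
    rows = pull_raw_customer_data()
    rows = clean(rows)
    rows = enrich(rows, lifetime_value={1: 120.0})
    load(rows, conn)
    # Stand-in for the BI step: query the final dataset.
    return conn.execute("SELECT id, email, ltv FROM customers ORDER BY id").fetchall()


if __name__ == "__main__":
    print(run_pipeline(sqlite3.connect(":memory:")))
```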

Scheduling and trigger setup

Next, you configure one or more triggers to determine when the pipeline should run. Each time a trigger fires and activates the pipeline, it creates a DAG run. We can use different types of triggers to initiate the customer analytics pipeline.

Time-based triggers execute pipelines at specific intervals. For example, we can set the customer analytics pipeline to run daily at 4:00 a.m., so the dashboard is up to date before the workday begins. Time-based triggers are ideal for routine tasks like daily reports or hourly data syncs.
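A daily time-based trigger boils down to computing the next moment the schedule should fire. Many orchestrators also accept this schedule as the cron expression `0 4 * * *`.

The sketch below is a simplified illustration that assumes naive local times and ignores time zones and daylight saving. It is not how any particular scheduler is implemented.

```python
from datetime import datetime, time, timedelta

RUN_AT = time(hour=4, minute=0)  # daily at 4:00 a.m.


def next_run(now: datetime, run_at: time = RUN_AT) -> datetime:
    """Return the next datetime at which a daily schedule should fire.

    If today's run time has already passed (or is exactly now),
    the next run is tomorrow at the same time.
    """
    candidate = datetime.combine(now.date(), run_at)
    if candidate <= now:
        candidate += timedelta(days=1)
    return candidate


if __name__ == "__main__":
    print(next_run(datetime(2024, 5, 1, 3, 30)))
    print(next_run(datetime(2024, 5, 1, 9, 15)))
```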

Event-based triggers initiate the pipeline in response to specific events. For example, a new CSV file landing in a data lake can trigger the pipeline to process that file immediately.
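One simple way to approximate an event-based trigger is to poll a landing location and fire once per file that hasn't been seen before. This is a minimal sketch under two assumptions:

- A local directory stands in for the data lake.
- Polling stands in for the storage event notifications a production setup would more likely use.

The `trigger` callback is hypothetical and would start a DAG run in practice.

```python
import tempfile
from pathlib import Path


def new_csv_files(landing_dir: Path, seen: set[str]) -> list[Path]:
    """Return CSV files that appeared since the last check and mark them as seen."""
    fresh = sorted(p for p in landing_dir.glob("*.csv") if p.name not in seen)
    seen.update(p.name for p in fresh)
    return fresh


def poll_once(landing_dir: Path, seen: set[str], trigger) -> int:
    """One polling cycle: call `trigger` once per new file; return how many fired."""
    files = new_csv_files(landing_dir, seen)
    for path in files:
        trigger(path)  # e.g. start a DAG run that processes this file
    return len(files)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        landing = Path(tmp)
        seen: set[str] = set()
        (landing / "crm_export.csv").write_text("id,email\n1,a@example.com\n")
        print(poll_once(landing, seen, lambda p: print("trigger run for", p.name)))
        print(poll_once(landing, seen, lambda p: print("trigger run for", p.name)))
```

Because the set of seen files persists between polls, each upload fires exactly one run, even if the folder is checked many times.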

Manual triggers let you start the pipeline on demand, such as during testing or for one-off tasks. You can usually do this from a command-line interface or from the orchestration tool's UI.

It's possible to combine different triggers, depending on your needs. For example, you can schedule the pipeline to run every day at 4:00 a.m. and also set up an event-based trigger that runs it whenever a new CRM export file is uploaded.
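Combining triggers means that on each check, the orchestrator may start a scheduled run, event runs, or both. The sketch below models one such check with a hypothetical `runs_to_start` helper. Each tuple it returns corresponds to one DAG run, and the list of new file names is assumed to come from a separate file-detection step.

```python
from datetime import date, datetime, time
from typing import Optional

DAILY_RUN_AT = time(4, 0)  # the 4:00 a.m. schedule from the example


def runs_to_start(now: datetime, last_scheduled: Optional[date], new_files: list[str]):
    """Decide which DAG runs one orchestrator check should start.

    Returns one ("schedule", date) entry if today's 4:00 a.m. run is due and
    hasn't started yet, plus one ("event", filename) entry per new upload.
    """
    runs = []
    if now.time() >= DAILY_RUN_AT and last_scheduled != now.date():
        runs.append(("schedule", now.date().isoformat()))
    runs.extend(("event", name) for name in new_files)
    return runs


if __name__ == "__main__":
    print(runs_to_start(datetime(2024, 5, 1, 4, 5), date(2024, 4, 30), ["crm_export.csv"]))
```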

Dependency management

After scheduling, you need to manage the task dependencies in the pipeline. Every stage of the analytics workflow relies on the successful completion of the stage before it. For instance, you can't clean data that hasn’t been extracted yet, and you shouldn’t load incomplete or incorrectly formatted data into your data warehouse.

A good orchestration system lets you define these relationships clearly, whether tasks run in parallel or in strict sequence. For example, if CRM and product database extractions don’t depend on each other, they can run in parallel to speed up the process.

This layer of orchestration ensures everything happens in the right order, reducing the chance of errors or incomplete data reaching your reports.
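The dependency rules above can be sketched with the standard library. `graphlib.TopologicalSorter` reports which tasks are ready, and a thread pool runs ready tasks concurrently.

The task names and `run_task` callback are hypothetical, and this is a simplified scheduler loop rather than any specific orchestrator's implementation. The two extractions have no dependency on each other, so they start together. If a task raises, its exception propagates and downstream tasks are never started.

```python
from concurrent.futures import FIRST_COMPLETED, ThreadPoolExecutor, wait
from graphlib import TopologicalSorter

# Hypothetical dependencies: the two extractions are independent and may run in
# parallel; every other task waits for all of its upstream tasks.
DEPENDENCIES = {
    "extract_crm": set(),
    "extract_product_db": set(),
    "clean": {"extract_crm", "extract_product_db"},
    "enrich": {"clean"},
    "load_warehouse": {"enrich"},
}


def run_dag(dependencies, run_task, max_workers=4):
    """Run each task as soon as all of its upstream tasks have finished.

    Returns task names in completion order. If a task raises, the exception
    propagates and no downstream task is started.
    """
    sorter = TopologicalSorter(dependencies)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a loop
    finished = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        running = {}  # future -> task name
        while sorter.is_active():
            for task in sorter.get_ready():
                running[pool.submit(run_task, task)] = task
            done, _ = wait(running, return_when=FIRST_COMPLETED)
            for future in done:
                task = running.pop(future)
                future.result()  # re-raises the task's exception, if any
                sorter.done(task)
                finished.append(task)
    return finished


if __name__ == "__main__":
    print(run_dag(DEPENDENCIES, lambda task: print("running", task)))
```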

Monitoring

As the workflow runs, you must keep an eye on it. Your orchestrator provides logs and metrics so you can see

- which tasks succeeded or failed,

- how long each task took, and

- where slowdowns or failures occurred.

For example, if the Salesforce API is down, the extraction task will be marked as “failed,” with an error message in the logs. Or say the transformation step takes 20 minutes instead of 2. That may signal that something in the input data has changed: maybe the volume spiked, or an edge case is tripping up your logic.
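The two situations above, a failed task and an unusually slow one, can be captured in a per-task record. This is a minimal sketch, not a real orchestrator's logging API; the record fields and the 5× slowdown threshold are assumptions.

For scale, 20 minutes against a 2-minute baseline is a 10× jump, which this threshold would flag.

```python
import time


def run_and_record(name, func, baseline_seconds, slowdown_factor=5.0):
    """Run one task and return a record of its status, duration, and any warning.

    A run slower than `slowdown_factor` times its usual duration gets a warning.
    """
    record = {"task": name, "status": "success", "error": None, "warning": None}
    start = time.perf_counter()
    try:
        func()
    except Exception as exc:  # a real orchestrator would also log the traceback
        record["status"] = "failed"
        record["error"] = str(exc)
    record["duration_s"] = time.perf_counter() - start
    if record["duration_s"] > slowdown_factor * baseline_seconds:
        record["warning"] = (
            f"{name} took {record['duration_s']:.1f}s, "
            f"over {slowdown_factor}x its ~{baseline_seconds}s baseline"
        )
    return record


if __name__ == "__main__":
    print(run_and_record("transform", lambda: time.sleep(0.2), baseline_seconds=0.01))
```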

Monitoring tools allow you to set alerts, like “if any task fails, send a Slack notification.” This helps you respond quickly before teams open their dashboards and see missing data.
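An alert rule like "if any task fails, send a Slack notification" can be sketched as a function over task records. The sketch assumes a Slack incoming webhook, which accepts a JSON body with a `text` field; the webhook URL you would pass is hypothetical and deployment-specific. Delivery is injected as a `send` callback so the rule can be tested without network access.

```python
import json
import urllib.request


def post_to_webhook(url: str, body: str) -> None:
    """POST a JSON body to a webhook URL, such as a Slack incoming webhook."""
    req = urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10):
        pass


def alert_on_failures(dag_id, task_records, send):
    """Send one JSON message per failed task and return the messages sent."""
    sent = []
    for rec in task_records:
        if rec["status"] == "failed":
            body = json.dumps({"text": f"{dag_id}: task {rec['task']} failed: {rec['error']}"})
            send(body)
            sent.append(body)
    return sent
```

In a real setup, `send` might be `lambda body: post_to_webhook(WEBHOOK_URL, body)`, where `WEBHOOK_URL` is your own webhook address.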

Error handling and recovery

Finally, let’s talk about failure. In any real-world pipeline, things sometimes go wrong. Your orchestrator lets you define what happens at those times.

- If the enrichment process fails, retry up to 3 times with a 5-minute delay.

- If the transformation fails, alert the data team and pause downstream tasks.

- If the final load to the BI tool fails, backfill the data when the issue is resolved.
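The first rule above ("retry up to 3 times with a 5-minute delay") can be expressed as a small wrapper. It reads "up to 3 times" as 3 retries after the first attempt, so 4 attempts in total; that interpretation is an assumption. The `sleep` parameter is injectable so the policy can be exercised without actually waiting.

```python
import time


def run_with_retries(task, retries=3, delay_seconds=300, sleep=time.sleep):
    """Run `task`, retrying up to `retries` times with a fixed delay between attempts.

    Defaults mirror the example policy: 3 retries (4 attempts in total),
    300 seconds (5 minutes) apart. Re-raises the last error if every attempt fails.
    """
    for attempt in range(retries + 1):
        try:
            return task()
        except Exception:
            if attempt == retries:
                raise
            sleep(delay_seconds)
```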

To avoid starting from scratch when a failure occurs, use techniques like checkpointing. Checkpointing saves the intermediate state or progress of a task or workflow so that it can resume from that point if something fails.
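Here is a minimal checkpointing sketch. It records which named steps have completed in a JSON file, so a rerun after a failure skips finished steps and resumes at the first unfinished one.

It assumes each step is safe to rerun if it failed partway. It also saves only progress, not the data each step produced; a fuller version would persist intermediate results as well. The file location is hypothetical.

```python
import json
import tempfile
from pathlib import Path


def run_with_checkpoint(steps, checkpoint: Path):
    """Run (name, func) steps in order, skipping steps recorded in `checkpoint`.

    After each successful step, the list of completed step names is written
    to disk, so a rerun resumes at the first step that hasn't finished.
    """
    done = json.loads(checkpoint.read_text()) if checkpoint.exists() else []
    for name, func in steps:
        if name in done:
            continue
        func()
        done.append(name)
        checkpoint.write_text(json.dumps(done))
    return done


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        steps = [("extract", lambda: print("extracting")), ("load", lambda: print("loading"))]
        print(run_with_checkpoint(steps, Path(tmp) / "checkpoint.json"))
```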

Data orchestration tools and platforms

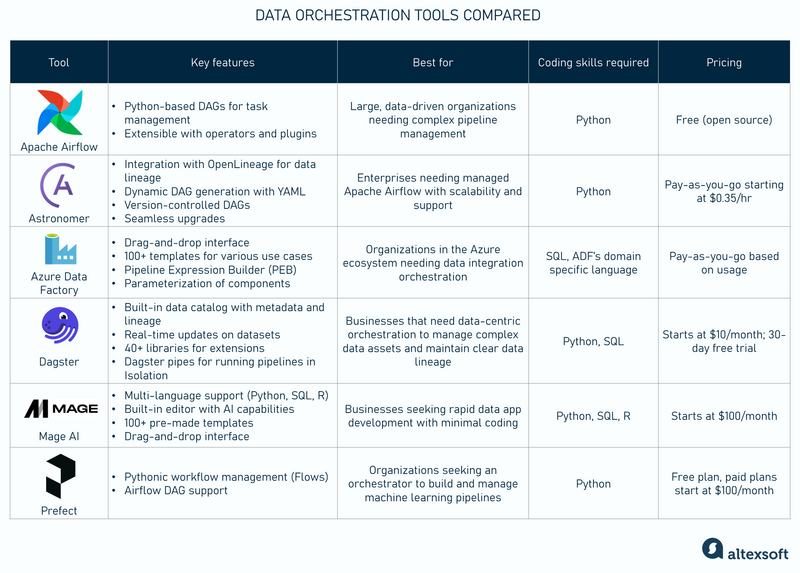

When it comes to choosing a data orchestration tool, one size doesn’t fit all. In this overview, we highlight six open-source and commercial solutions.

We shortlisted these platforms based on their popularity among data professionals.

Note: We do not promote any of the tools listed here. They are selected purely for educational purposes.

First, a brief look at the core functionality common to most data orchestration tools.

- Workflow automation streamlines the execution of multi-step processes by triggering tasks in the right order.

- Task scheduling allows you to define when operations should run (at regular intervals, specific times, or in response to certain events).

- Monitoring and logging let you view task statuses, failures, and logs to troubleshoot and optimize workflows.

- Built-in integrations ensure smooth connections with various tools and platforms.

With these foundational capabilities in mind, let's dive into specific tools, highlighting what sets each apart.

Apache Airflow: manage complex workflows using Python DAGs

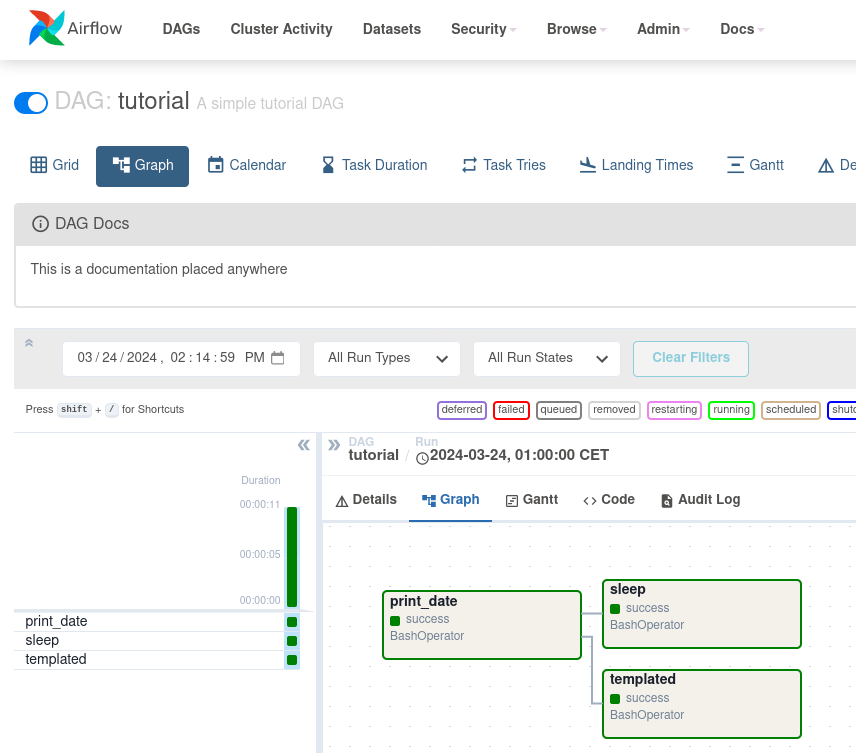

Apache Airflow is a workflow management platform for programmatically creating, scheduling, and monitoring data pipelines. It relies on DAGs that describe the sequence, timing, and logic of tasks in your data operations. Each DAG in Apache Airflow is defined in a Python script that the platform parses and runs.

Airflow is widely used, has a large ecosystem, and has become a standard for managing data workflows. Many cloud platforms and orchestration services are built on top of or designed to support it.

Among other things, Apache Airflow

- supports dynamic workflow creation, making it easy to update pipelines;

- allows you to extend its core components for greater customization; and

- includes a rich library of operators and plugins.

Keep in mind that you need Python programming skills to work with the tool.

Best for: large, data-driven organizations that need a robust and flexible orchestration tool for managing complex pipelines. It is trusted by companies like Airbnb, Lyft, and Shopify.

Pricing: Apache Airflow is open source and free to use. However, costs may arise from infrastructure, maintenance, and scaling requirements.

Learn more about DAGs and Apache Airflow in our dedicated article.

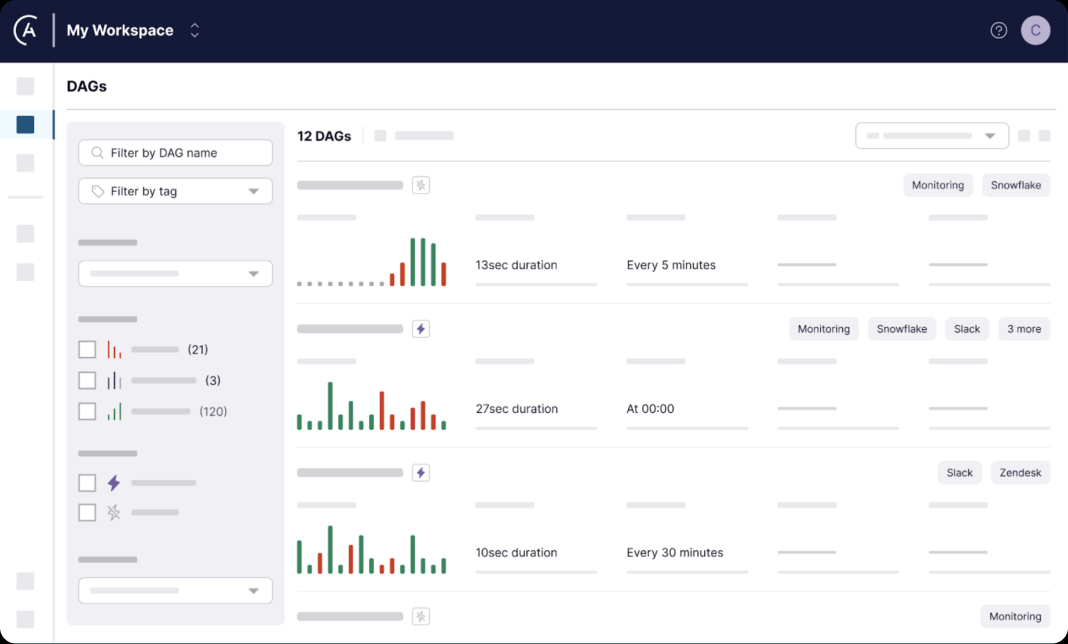

Astronomer: managed Apache Airflow service

Astronomer is a managed service that runs Apache Airflow at scale. It takes the complexity out of setting up and maintaining Airflow environments. With Astronomer, you get cloud-native features like monitoring, version control, and easy deployments. That makes it a good fit for teams that want the power of Airflow without the overhead.

You’ll need Python programming skills to define DAGs and tasks, just like in standard Apache Airflow. There’s also a Python SDK, which simplifies the coding process.

Astronomer’s core features include

- integration with OpenLineage to capture and visualize data lineage metadata;

- the Astronomer Registry, which gives you access to 117 providers (packages that include prebuilt hooks, operators, and sensors for external tools), 1,904 modules, and 115 example DAGs for exploring supported integrations and reusable components;

- the DAG factory library, which lets you generate DAGs dynamically from YAML configuration files; and

- shared workspaces and automatic support for version-controlled DAGs, enhancing team collaboration.
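The idea behind config-driven DAG generation is that pipelines are declared as data and turned into DAGs by code. The sketch below is not the DAG factory library's API or config schema, and the config content is hypothetical. The real library reads YAML; JSON is used here only so the sketch needs nothing beyond the standard library.

```python
import json
from graphlib import TopologicalSorter

# Hypothetical pipeline declaration (JSON instead of YAML to stay stdlib-only).
CONFIG = """
{
  "customer_analytics": {
    "schedule": "0 4 * * *",
    "tasks": {
      "extract": {"depends_on": []},
      "clean": {"depends_on": ["extract"]},
      "load": {"depends_on": ["clean"]}
    }
  }
}
"""


def build_dags(config_text):
    """Turn each config entry into a schedule plus a dependency-respecting task order."""
    dags = {}
    for dag_id, spec in json.loads(config_text).items():
        graph = {name: set(task["depends_on"]) for name, task in spec["tasks"].items()}
        order = list(TopologicalSorter(graph).static_order())
        dags[dag_id] = {"schedule": spec["schedule"], "order": order}
    return dags


if __name__ == "__main__":
    print(build_dags(CONFIG))
```

Adding a pipeline then means adding a config entry rather than writing a new DAG file.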

With Astronomer, you can upgrade to newer versions of Airflow directly within your existing environment, without moving DAGs, data, or settings. Upgrading Airflow means getting the latest features, performance improvements, and security updates.

Best for: organizations seeking a managed solution for Apache Airflow with enterprise-level support and scalability. It is used by businesses like Adobe, Marriott International, and Ford.

Pricing: a pay-as-you-go model, with deployments starting at $0.35/hr. Final costs depend on cluster configuration, deployment size, worker compute resources, and network usage. Custom pricing is available for larger teams or enterprise needs.

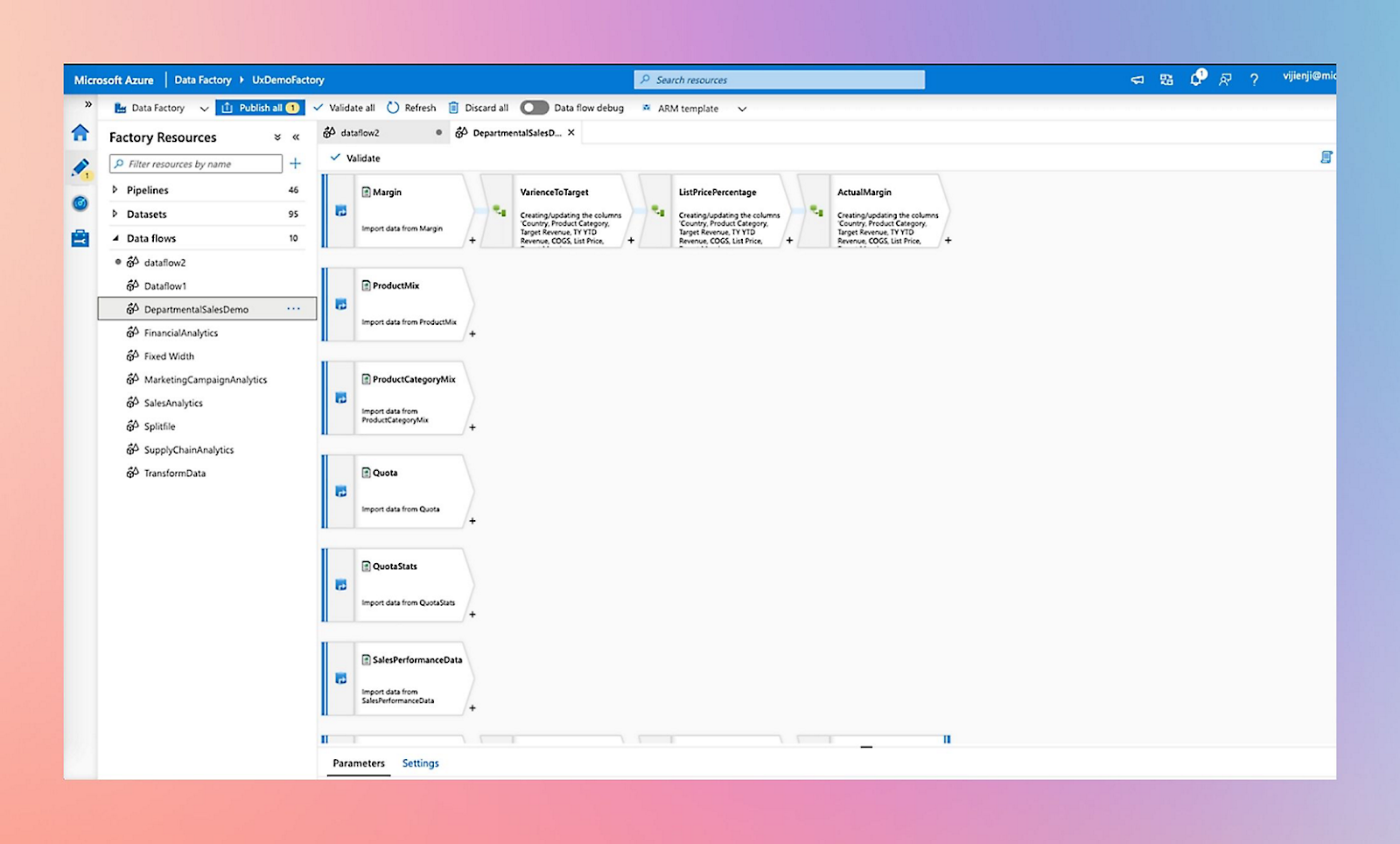

Azure Data Factory (ADF): Microsoft's cloud data integration service

Azure Data Factory is Microsoft’s data integration service for creating, scheduling, and orchestrating data pipelines across cloud and on-premises environments. It is built for enterprise data movement and transformation and works well with other Azure services.

ADF offers a drag-and-drop interface for low-code operations. Where you need greater control, ADF provides an expression language, which you write in its pipeline expression builder, for defining data transformation and control flow logic within your pipelines.

Key features include

- the Workflow Orchestration Manager, which allows you to define workflows using Apache Airflow’s Python-based DAGs;

- data compression, reducing the amount of bandwidth used during data transfer to the destination;

- parameterization of ADF components, so you can pass dynamic values at runtime without creating duplicate pipelines for similar processes; and

- a gallery of 100+ official and community-generated templates covering various use cases.
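Parameterization means one pipeline definition with placeholders that are filled in at runtime, instead of near-identical copies per region or date. The sketch below shows the concept in plain Python using `string.Template`. It does not use ADF's expression syntax, and the template fields and parameter names are hypothetical.

```python
from string import Template

# One hypothetical pipeline definition whose source and sink are placeholders.
PIPELINE_TEMPLATE = {
    "copy_source": Template("sales/$region/$run_date.csv"),
    "copy_sink": Template("warehouse.sales_$region"),
}


def resolve(template, **params):
    """Fill runtime parameters into every templated field.

    Template.substitute raises KeyError if a required parameter is missing.
    """
    return {key: tmpl.substitute(**params) for key, tmpl in template.items()}


if __name__ == "__main__":
    print(resolve(PIPELINE_TEMPLATE, region="emea", run_date="2024-05-01"))
    print(resolve(PIPELINE_TEMPLATE, region="apac", run_date="2024-05-01"))
```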

Best for: organizations invested in the Microsoft Azure ecosystem seeking a managed service for orchestrating ETL and data integration tasks. It is used by PZ Cussons, T-Mobile, and American Airlines, among others.

Pricing: a pay-as-you-go model, with costs based on data movement, pipeline orchestration, and data flow activities.

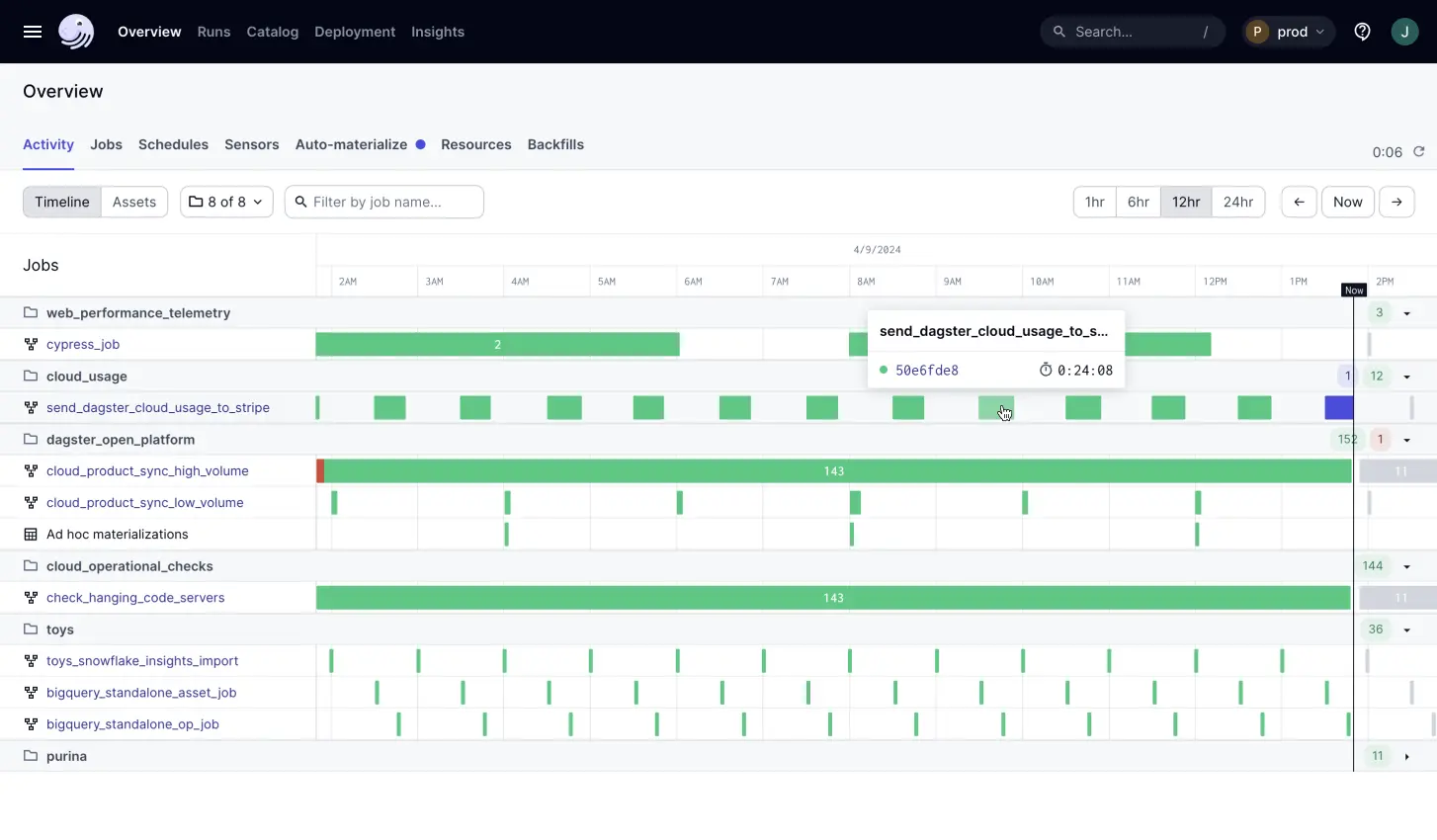

Dagster: orchestrator built for data engineers

Dagster takes a different approach from other data orchestration tools. Instead of focusing solely on task execution, it emphasizes data assets, treating them as first-class citizens and making it easier to track what data is being produced, where it comes from, and how it’s used across pipelines.
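To illustrate the asset-centric idea, here is a sketch in plain Python. It is not Dagster's API: the `asset` decorator, the registry, and the sample data are hypothetical stand-ins.

Each function produces a named data asset, and its parameter names declare which upstream assets it is derived from. That gives both an execution order and a lineage map for free.

```python
import inspect
from graphlib import TopologicalSorter

ASSETS = {}  # asset name -> function that produces it


def asset(func):
    """Register a function as a data asset; its parameter names are its upstream assets."""
    ASSETS[func.__name__] = func
    return func


@asset
def raw_customers():
    return [{"id": 1, "country": "DE"}, {"id": 2, "country": "US"}]


@asset
def customers_by_country(raw_customers):
    counts = {}
    for row in raw_customers:
        counts[row["country"]] = counts.get(row["country"], 0) + 1
    return counts


def lineage():
    """Map each asset to the set of assets it is derived from."""
    return {name: set(inspect.signature(f).parameters) for name, f in ASSETS.items()}


def materialize_all():
    """Compute every asset after its upstream assets, passing results in by name."""
    values = {}
    for name in TopologicalSorter(lineage()).static_order():
        func = ASSETS[name]
        values[name] = func(**{dep: values[dep] for dep in inspect.signature(func).parameters})
    return values


if __name__ == "__main__":
    print(lineage())
    print(materialize_all())
```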

Working with Dagster requires proficiency in Python for defining assets and workflows and SQL for data transformation and querying tasks.

Key capabilities include

- a data catalog, which offers a centralized, searchable view of all data assets in an organization. It includes metadata, definitions, and lineage information, and helps you understand how your data is used and what it represents;

- real-time updates on data assets and their transformations;

- a collection of 40+ libraries for extending the platform’s core functionality with tools like pandas;

- Dagster Pipes, which allow you to run parts of your pipeline in separate, isolated environments (like containers or notebooks) while keeping visibility and control in the Dagster UI; and

- various starter templates tailored to diverse use cases and project needs.

Best for: businesses that need data-centric orchestration to manage complex data assets, ensure data quality, and maintain clear data lineage. This is particularly relevant in sectors like finance, healthcare, and retail, where compliance and data reliability are critical. Companies like Shell and Flexport use Dagster to manage their data operations.

Pricing: starts at $10 per month; a 30-day free trial is available.

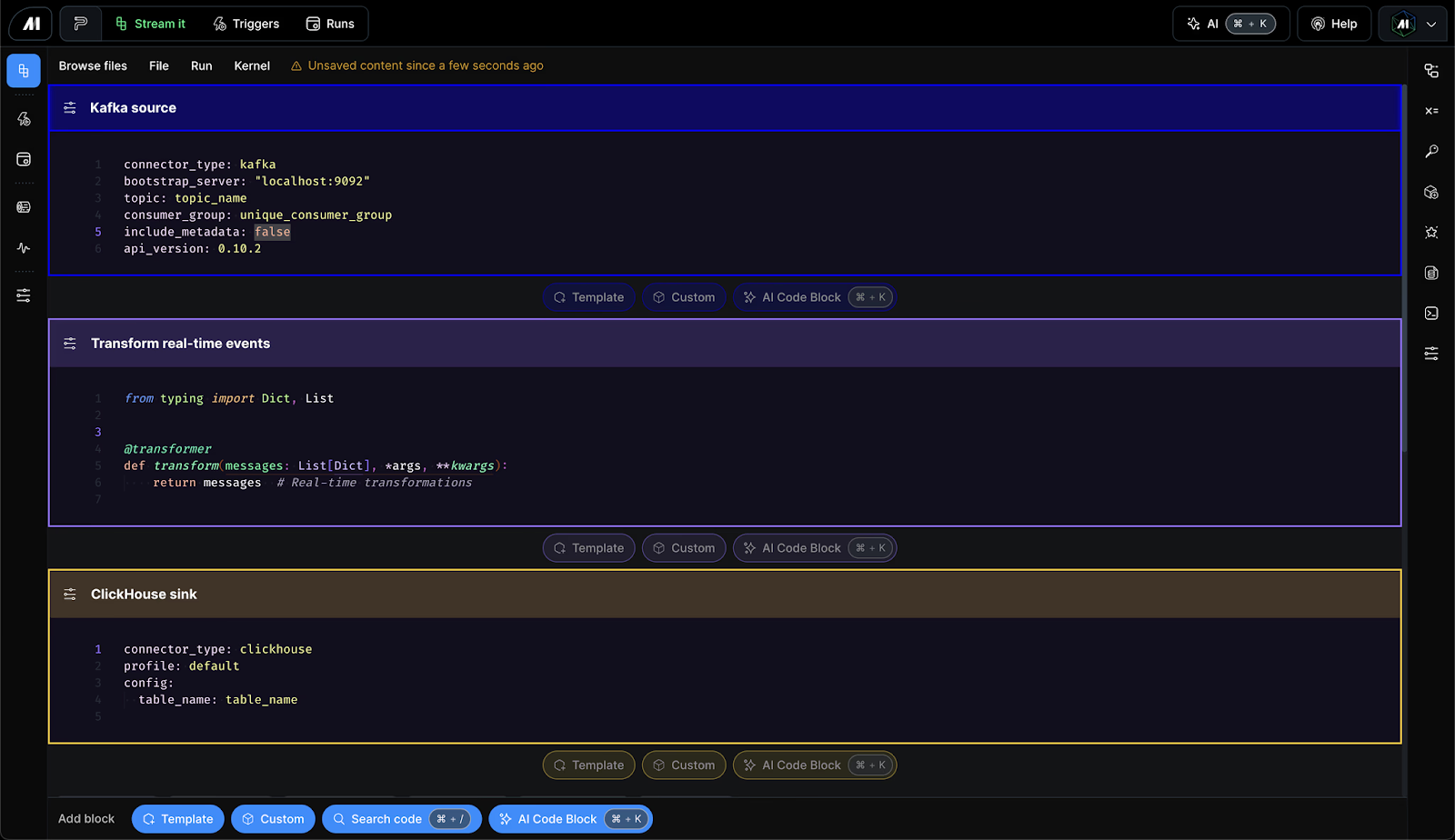

Mage AI: AI-powered pipeline builder

Mage AI is an AI-powered data engineering platform for building, running, and managing data pipelines. It allows you to write data transformation scripts in Python, SQL, or R.

Among other features, Mage AI offers

- a built-in editor with real-time commenting, live collaboration, and generative AI capabilities;

- access to 100+ premade templates for loading, transforming, and exporting data; and

- a simple drag-and-drop interface for building pipelines, with visual graphs to show the flow of data.

Best for: businesses looking to rapidly develop and deploy data applications with minimal coding effort.

Pricing: starts at $100 per month.

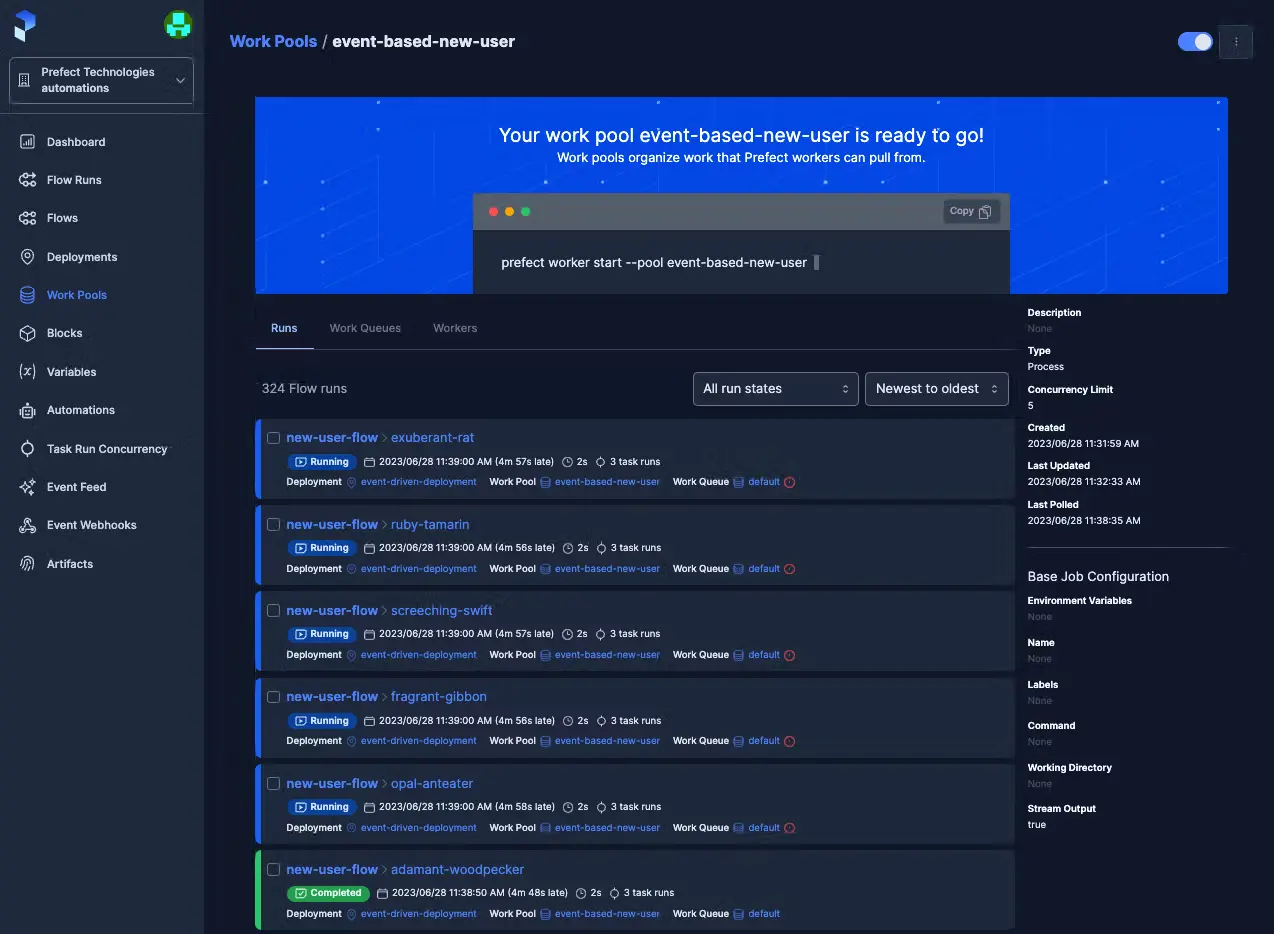

Prefect: workflow orchestration for machine learning pipelines

Prefect is a Python-based tool for building, managing, and monitoring machine learning pipelines and other complex workflows. You can also use it to automate tasks like data ingestion, preprocessing, model training, evaluation, and deployment, which helps keep ML models accurate and effective over time.

Prefect offers both an open-source version and a cloud platform, so you can choose the option that works best.

Among other things, Prefect

- has a collection of example code for a quick start;

- replaces the concept of DAGs with flows. This switch lets you write more Pythonic code that is cleaner, more maintainable, and more flexible than DAGs; and

- allows you to observe Airflow DAGs on its platform. This incremental approach can help you test the waters ahead of a full, disruption-free migration from Apache Airflow to Prefect.
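The practical difference with flows is that ordinary Python control flow, such as an `if`, decides at runtime what runs next. A static graph has no such step. The sketch below shows that idea with plain functions. Prefect itself provides `flow` and `task` decorators; they are left out here so the code runs without installing anything. The validation rule and `min_samples` threshold are hypothetical.

```python
def validate(rows):
    """Keep only rows that have a label."""
    return [r for r in rows if r.get("label") is not None]


def train(rows):
    """Stand-in for model training."""
    return {"model": "baseline", "n_samples": len(rows)}


def training_flow(rows, min_samples=3):
    """A 'flow' as a plain function: the branch is decided at runtime with an if."""
    clean_rows = validate(rows)
    if len(clean_rows) < min_samples:
        return {"status": "skipped", "reason": f"{len(clean_rows)} labeled row(s), need {min_samples}"}
    return {"status": "trained", **train(clean_rows)}


if __name__ == "__main__":
    print(training_flow([{"label": 1}, {"label": 0}, {"label": 1}]))
    print(training_flow([{"label": None}, {"label": 1}]))
```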

Best for: organizations seeking an orchestrator to build and manage machine learning pipelines, with an easy migration path from Airflow.

Pricing: offers a free plan for personal and small-scale projects. The paid subscription starts at $100/month.

Factors to consider when choosing a data orchestration platform

Beyond the tools covered here, we encourage you to research other data orchestration platforms that could meet your needs. As you explore your options, keep these factors in mind.

Ease of use. Look for a user-friendly tool with clear documentation, especially if your team lacks extensive technical expertise. Data orchestration platforms with low-code or no-code interfaces would be a good fit for such scenarios. However, if your team is highly technical, consider using platforms that allow for greater programmatic control.

Cost. The cost of data orchestration tools varies. Open-source options like Apache Airflow are free to use but come with infrastructure and maintenance costs. In addition to the tool's price, factor in integration, training, and onboarding expenses.

The complexity of your data workflows. The nature of your workflow will determine the type of orchestration tool you need. Complex, multistep workflows require a solution that offers greater control, while straightforward, simpler workflows may not.

Integration requirements. Consider the systems and tools you need to integrate with. The solution you pick should be compatible with your existing systems.

With a software engineering background, Nefe demystifies technology-specific topics—such as web development, cloud computing, and data science—for readers of all levels.
