Imagine receiving a carton of apples, only to find that all but three are rotten. Gross, right? Luckily, it’s easy to spot a bad apple – you can see it, cut it open, and immediately know it doesn’t belong in your strudel.

Now take data. In most cases, you can’t just glance at a dataset and instantly spot inconsistencies, missing values, or duplicates. Instead, bad data sneaks into reports, analytics, and decision-making, only revealing its impact when things start going wrong – misleading insights, inaccurate forecasts, or a failed marketing campaign. And that’s not just gross – it’s expensive to fix.

This is where data profiling comes in. Just like you wouldn’t bake a pie without first checking your ingredients, you shouldn’t make business decisions based on unverified data. So how do you profile data?


What is data profiling?

Data profiling is the process of analyzing and assessing data to understand its quality, structure, and content.

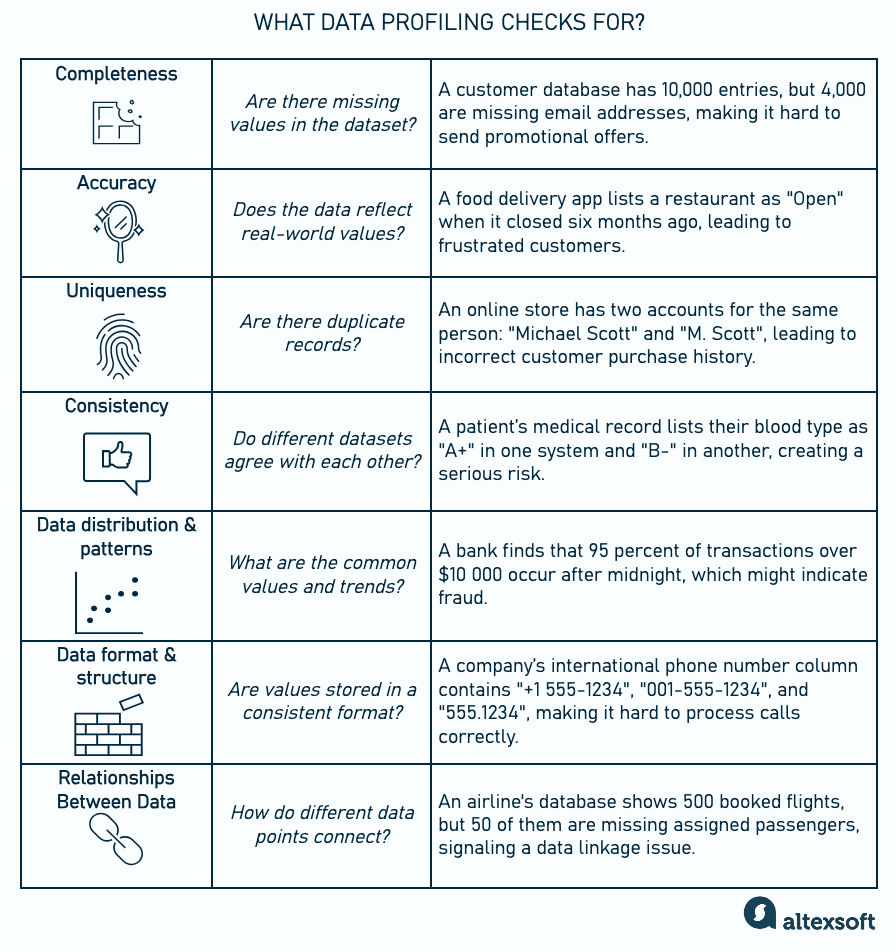

Key elements that are analyzed during data profiling

Data profiling helps organizations answer important questions.

- Is the data complete?

- Are there errors or inconsistencies?

- Does it follow expected patterns?

By examining data at a deeper level, businesses can ensure they are working with accurate, reliable information before using it for reports, decision-making, or AI models. Here are the issues data profiling checks for.

Completeness. Are there missing values in the dataset? For example, if a customer database is missing phone numbers for half of the entries, this could be a problem for marketing teams.

Accuracy. Does the data reflect real-world values? If an inventory system lists a product as available in a store, but it's actually out of stock, that’s an accuracy issue.

Uniqueness. Are there any duplicate records? If the same customer appears multiple times in a database with slight variations in their name (say, "Michael Scott" and "M. Scott"), it could lead to errors in reporting or personalization.

Consistency. Do different datasets agree with each other? If a customer’s birthdate is listed as "11/01/1991" in one system and "01/11/1991" in another, there’s an inconsistency to be addressed.

Data distribution and patterns. What are the common values and trends? Profiling might reveal that 90 percent of transactions occur in a specific region, or that most customer names follow a certain format. This helps identify outliers or errors.

Data format and structure. Are values stored in the correct format? For example, if a phone number column contains a mix of "(123) 456-7890" and "123-456-7890", standardizing the format is necessary for consistency.

Relationships between data. How do different data points connect? If an eCommerce company has separate databases for customers and orders, profiling can confirm whether every order is linked to a valid customer.
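To make a couple of these checks concrete, here is a minimal sketch in plain Python. The `customers` records and the helper names `completeness` and `exact_duplicates` are made up for illustration; a real profiling tool would compute many more metrics.

```python
# Hypothetical customer records; None marks a missing value.
customers = [
    {"name": "Michael Scott", "phone": "(123) 456-7890"},
    {"name": "Pam Beesly", "phone": None},
    {"name": "Michael Scott", "phone": "(123) 456-7890"},
    {"name": "Jim Halpert", "phone": None},
]

def completeness(rows, column):
    """Share of rows where the column is filled in."""
    filled = sum(1 for row in rows if row[column] not in (None, ""))
    return filled / len(rows)

def exact_duplicates(rows):
    """Number of rows that repeat an earlier row exactly."""
    seen = set()
    repeats = 0
    for row in rows:
        key = tuple(sorted(row.items()))
        if key in seen:
            repeats += 1
        seen.add(key)
    return repeats

phone_completeness = completeness(customers, "phone")
duplicate_rows = exact_duplicates(customers)
print(f"phone completeness: {phone_completeness:.0%}")  # 50%
print(f"exact duplicate rows: {duplicate_rows}")        # 1
```

Note that this only catches exact repeats. Variations like "M. Scott" need the fuzzier techniques discussed later.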

These elements are usually checked during one of the three main types of data profiling, which we’ll cover later in this article.

When does data profiling happen?

Data profiling typically occurs after data collection at the beginning of any data engineering project. Businesses must ensure their data is accurate and trustworthy to make informed decisions, build reports, or train AI models.

Overview of a complete data engineering process

Data profiling explores the raw data to spot issues like missing values, duplicate records, or inconsistent formats. If found, such problems can be fixed early, saving time and effort down the line.

What are the use cases for doing data profiling?

Data profiling is needed whenever you want to ensure data quality before using it, which is pretty much every time you work with large or complex datasets. This may include:

- data migration. When moving data from one system to another, you need to ensure it’s clean and consistent;

- data integration. If your company is combining data from different departments or external sources, data profiling helps uncover what requires correction at the transformation stage of the ETL/ELT process;

- data analysis and reporting. If you’re making business decisions based on data, you need to trust its accuracy. Profiling helps ensure that reports and analytics are based on complete, error-free data, preventing misleading insights;

- data governance and specifically compliance with laws. Many industries have strict regulations about how data must be handled, such as GDPR or HIPAA. Profiling helps organizations identify sensitive information, ensuring security and adherence to privacy laws;

- AI model training. AI models are only as good as the data they’re trained on. Profiling checks if the data is clean, well-structured, and free from biases or inconsistencies that could affect model performance.

Now, let’s review who exactly is responsible for this process.

Who typically performs data profiling?

In smaller companies, a single data analyst or engineer might handle data profiling. In larger organizations, multiple teams work together to maintain data quality.


The specific roles involved depend on the organization’s size, structure, and use case, but data profiling is typically handled by:

- data analysts,

- data engineers,

- data scientists,

- database administrators,

- ETL developers, and

- business intelligence (BI) teams.

Later in this article, we will review tools for automated profiling that require minimal technical knowledge, so even non-data people can do it.

Data profiling vs data cleaning

While data profiling and data cleaning are closely related, they are not the same thing. Data profiling doesn’t fix anything per se. Its only mission is to diagnose the data and provide you with a report on everything that’s wrong (and right) with your data. Data cleaning then is the process of taking action based on the results of data profiling – or curing the data if you will.

Our article on data cleaning goes very deep into the topic, so make sure to check it out.

Data profiling vs data mining

Since both data mining and data profiling involve examining data, some may confuse the two. But in reality, data mining serves an entirely different purpose: it’s about extracting patterns, trends, and useful insights from large datasets. It uses advanced techniques like machine learning and statistical analysis to find hidden relationships within data that weren’t immediately obvious. The goal of data mining is to discover patterns that can be used to make data-driven decisions. Data profiling, by contrast, assesses the quality, structure, and content of the data itself before anyone tries to draw insights from it.

Data profiling types and techniques

Data profiling is usually categorized into three main types, each focusing on different aspects of data quality and structure. These types align with the specific elements examined during profiling.

Structure profiling – Does data follow the expected format?

Structure profiling assesses the layout and arrangement of the data. It helps check how data is organized, formatted, and structured.

Metadata profiling. This technique involves analyzing metadata, or the structural information of a dataset, such as column names, data types, relationships, and constraints. For example, it can reveal that the “Product_ID” in the Sales table is missing primary key constraints, leading to potential issues with duplicate entries.

Cardinality analysis. It assesses the uniqueness and distribution of values within a column and helps identify how many distinct values exist and how often they repeat. This is crucial for understanding the role of a column in a dataset: whether it is a primary key, a foreign key, or an attribute with duplicated values. It also highlights problems within a specific column. For example, if an "Employee_ID" is supposed to have only unique values, but duplicates appear, it's a cardinality issue that needs fixing.
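As a rough illustration of cardinality analysis, the sketch below counts distinct and repeated values in a hypothetical "Employee_ID" column using only the Python standard library. The sample IDs are invented.

```python
from collections import Counter

# Hypothetical Employee_ID column that is supposed to be unique.
employee_ids = ["E01", "E02", "E03", "E02", "E04"]

counts = Counter(employee_ids)
distinct = len(counts)                          # number of distinct values
repeated = {value: n for value, n in counts.items() if n > 1}
uniqueness_ratio = distinct / len(employee_ids)

print(distinct)          # 4
print(repeated)          # {'E02': 2}
print(uniqueness_ratio)  # 0.8
```

A ratio of 1.0 suggests the column could serve as a key, while anything lower means some values repeat and deserve a closer look.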

Here’s the breakdown of what else structure profiling typically checks.

Data types and format. Each column in the dataset is checked to confirm that it adheres to its expected data type and formatting rules.

Examples of issues:

- Numeric data stored as text (for example, a price column contains numeric values that are stored as text).

- Date fields with inconsistent formats ("MM/DD/YYYY" vs "DD-MM-YYYY").

- Improperly formatted cells (inconsistent case usage in names).
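Checks like these are easy to sketch in code. The example below assumes two hypothetical columns, `prices` and `dates`, and flags numbers stored as text as well as the date format each value matches. The helper names and the list of accepted formats are assumptions for illustration.

```python
from datetime import datetime

def looks_numeric(value):
    """True if the value can be parsed as a number."""
    try:
        float(value)
        return True
    except (TypeError, ValueError):
        return False

def matching_date_format(value, formats=("%m/%d/%Y", "%d-%m-%Y")):
    """Return the first format the value parses with, or None."""
    for fmt in formats:
        try:
            datetime.strptime(value, fmt)
            return fmt
        except ValueError:
            continue
    return None

prices = ["19.99", "5", "n/a"]
dates = ["03/25/2024", "25-03-2024", "2024.03.25"]

# Strings that are really numbers, i.e. numeric data stored as text.
text_numbers = [p for p in prices if isinstance(p, str) and looks_numeric(p)]
date_formats = {d: matching_date_format(d) for d in dates}

print(text_numbers)  # ['19.99', '5']
print(date_formats)
```

A column where `date_formats` contains more than one distinct format (or `None`) is a candidate for standardization.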

Field length. Correct field length limits, both minimum and maximum, ensure that no data is lost or truncated.

Examples of issues:

- A "Description" field with a fixed length of 50 characters might truncate longer text, losing valuable information.

- A “Phone_number” field that allows for more than 10 digits creates problems when trying to analyze numbers uniformly.

Column names. Consistent and well-structured column names make the data easier to understand, use, and maintain.

Examples of issues:

- Inconsistent naming conventions (using both "Product_id" and "ProductID" for different columns).

- Ambiguous names that do not clearly describe the data ("Sum" instead of "Order_amount").

Missing data patterns. Structure profiling aims at understanding how and where the missing data occurs within the table’s organization.

Examples of issues:

- Key columns like "Order_amount" or "Invoice_number" being null in many records.

- Entire rows missing in a dataset, causing data gaps that can affect analysis.

Overall, a well-structured dataset is not only easier to work with but also supports data integrity, consistency, and quality, which are crucial for making accurate business decisions and building reliable models.

Content profiling – Is data accurate and complete?

Content profiling is a deeper examination of the actual values stored in a dataset. Unlike structure profiling, which looks at the format and organization of data, content profiling focuses on what’s inside the data, checking for errors, inconsistencies, and patterns that might indicate issues.

So, what techniques are commonly used for content profiling?

Data rule validation. Data rule validation ensures that data adheres to predefined rules or constraints. It is primarily a content profiling technique but can also apply to structure profiling when checking format constraints. For example, a rule might state that all email addresses must contain "@" and a valid domain, such as "@gmail.com." If any records violate this rule, they are flagged for review.
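Here is a minimal sketch of such a rule in Python. The regular expression is a deliberately simple assumption (something before "@", then a domain containing a dot), not a full email validator, and the sample addresses are invented.

```python
import re

# Simplified rule: text, "@", then a domain with at least one dot.
EMAIL_RULE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

emails = [
    "pam@gmail.com",
    "jim.halpert",
    "dwight@schrute",
    "angela@dundermifflin.com",
]

# Records that break the rule are flagged for review, not fixed.
violations = [e for e in emails if not EMAIL_RULE.match(e)]
print(violations)  # ['jim.halpert', 'dwight@schrute']
```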

Inconsistent categorical data. If values within categories aren’t standardized, it becomes difficult to filter, analyze, or generate accurate reports.

Examples of issues:

- A "Country" column contains: "USA," "United States," "US," "America."

- A "Department" column includes: "HR," "Human Resources," "HR Dept."

Outliers and unusual values. Outliers can be caused either by data entry errors or by genuine cases of extremely high or low values that require further investigation.

Examples of issues:

- An "Age" column includes values: 25, 30, 35, 250.

- A "Temperature" field records: 98.6°F, 99.1°F, -300°F.
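One possible way to flag values like these is the modified z-score, which is based on the median and the median absolute deviation (MAD) and holds up better than the mean on small samples. This is just one technique among many; the 3.5 cutoff is a commonly used rule of thumb, and the function name `mad_outliers` is made up.

```python
import statistics

def mad_outliers(values, threshold=3.5):
    """Flag values whose modified z-score exceeds the threshold."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # no spread around the median, nothing to score
    return [v for v in values if 0.6745 * abs(v - median) / mad > threshold]

ages = [25, 30, 35, 250]
temperatures_f = [98.6, 99.1, -300.0]

age_outliers = mad_outliers(ages)
temperature_outliers = mad_outliers(temperatures_f)
print(age_outliers)          # [250]
print(temperature_outliers)  # [-300.0]
```

A flagged value is only a candidate for review: whether it is an error or a genuine extreme still takes human judgment.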

Missing or null values. Missing data can impact customer engagement, marketing efforts, and overall data reliability.

Examples of issues:

- An "Address" field contains placeholders like "N/A" or "Unknown."
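Placeholders are tricky because a plain null check doesn't see them. A simple sketch, assuming a hand-picked set of placeholder strings (the `PLACEHOLDERS` set below is an assumption you would adapt to your data):

```python
# Assumed placeholder spellings, compared case-insensitively.
PLACEHOLDERS = {"", "n/a", "na", "unknown", "null", "none", "-"}

def is_missing(value):
    """Treat None, blanks, and known placeholders as missing."""
    return value is None or str(value).strip().casefold() in PLACEHOLDERS

addresses = ["12 Elm St", "N/A", None, "Unknown", " ", "1725 Slough Ave"]

missing = [a for a in addresses if is_missing(a)]
missing_rate = len(missing) / len(addresses)
print(missing)  # ['N/A', None, 'Unknown', ' ']
print(f"{missing_rate:.0%}")
```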

Duplicate data entries. Duplicates can lead to incorrect customer counts, redundant marketing efforts, and skewed analytics.

Examples of issues:

- A customer list includes the same person identified as "Michael Scott," "M. Scott," "Michael G. Scott."

- A product catalog has three records for the same product: "iPhone 13 Pro Max," "iPhone 13 ProMax," and "iPhone13Pro Max."
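For variants that differ only in spacing, case, or punctuation, a cheap first pass is to group records by a normalized key. The sketch below uses a hypothetical `match_key` helper that keeps only lowercase letters and digits; it won't catch abbreviations like "M. Scott", which need fuzzy matching.

```python
import re
from collections import defaultdict

def match_key(name):
    """Lowercase the name and drop everything except letters and digits."""
    return re.sub(r"[^a-z0-9]", "", name.casefold())

products = [
    "iPhone 13 Pro Max",
    "iPhone 13 ProMax",
    "iPhone13Pro Max",
    "Galaxy S22",
]

groups = defaultdict(list)
for product in products:
    groups[match_key(product)].append(product)

# Keys shared by more than one record point to suspected duplicates.
suspected = {key: names for key, names in groups.items() if len(names) > 1}
print(suspected)
```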

Unusual or invalid data in fields. Some values may not even resemble the required ones, indicating potential data corruption or entry errors.

Examples of issues:

- An "IP_address" field contains "192.168.1.1," "300.400.500.600" (invalid), "localhost."

- A "ZIP_code" field contains "00000" or "99999."
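For well-defined formats, an existing parser is often the most reliable validator. As a small sketch, Python's standard `ipaddress` module can separate valid IP addresses from everything else (the helper name `is_valid_ip` is ours):

```python
import ipaddress

def is_valid_ip(value):
    """True if the value parses as an IPv4 or IPv6 address."""
    try:
        ipaddress.ip_address(value)
        return True
    except ValueError:
        return False

values = ["192.168.1.1", "300.400.500.600", "localhost"]
invalid = [v for v in values if not is_valid_ip(v)]
print(invalid)  # ['300.400.500.600', 'localhost']
```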

Even well-structured data can be full of errors, inconsistencies, or missing values. Without content profiling, businesses risk making decisions based on inaccurate, misleading, or incomplete information.

Relationship profiling – How does data connect across different datasets?

Relationship profiling is the process of analyzing how different tables relate to each other. While structure profiling ensures that data is stored correctly and content profiling checks the accuracy of individual values, relationship profiling focuses on connections between data points, ensuring that linked data makes logical sense.

In relationship profiling, the following techniques are normally applied.

Cross-column profiling. This technique identifies dependencies between columns and how they relate to each other. It helps ensure that relationships between data fields are logically consistent. For example, if a dataset contains a "City" column and a "Zip_code" column, cross-column profiling can verify that each zip code corresponds to the right city.
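A simple version of this check looks for zip codes that map to more than one city inside the dataset itself. The rows below are made up, and note the limitation: this only detects internal conflicts. Confirming that a zip code points to the right city would require an external reference list.

```python
from collections import defaultdict

# Hypothetical address rows.
rows = [
    {"zip": "18503", "city": "Scranton"},
    {"zip": "18503", "city": "Scranton"},
    {"zip": "18503", "city": "Stamford"},
    {"zip": "06901", "city": "Stamford"},
]

cities_by_zip = defaultdict(set)
for row in rows:
    cities_by_zip[row["zip"]].add(row["city"])

# A zip code linked to several cities breaks the expected dependency.
conflicts = {z: sorted(c) for z, c in cities_by_zip.items() if len(c) > 1}
print(conflicts)  # {'18503': ['Scranton', 'Stamford']}
```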

Key integrity. This technique checks whether primary and foreign keys in a dataset maintain their relationships properly. It’s crucial for databases that rely on referential integrity. Say, it's an eCommerce database, where every "Order_ID" should be linked to a valid "Customer_ID." If an order exists without a corresponding customer, it signals a key integrity issue.

Below are types of typical relationship profiling issues.

Orphaned records. These are records that reference a nonexistent or missing entity. They break data integrity, making reporting unreliable and causing errors in applications that rely on linked data.

Examples of issues:

- An "Orders" table contains purchases linked to customer IDs that don’t exist in the "Customers" table.

- An "Employees" table lists department IDs that no longer exist in the "Departments" table.
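Orphaned records are commonly found with a left join that keeps only the rows without a match. The sketch below uses an in-memory SQLite database with made-up `customers` and `orders` tables, so table and column names are assumptions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER);
    INSERT INTO customers VALUES (1001, 'Michael Scott'), (1002, 'Pam Beesly');
    INSERT INTO orders VALUES (1, 1001), (2, 1002), (3, 1005);
""")

# Orders whose customer_id has no matching row in customers.
orphaned = conn.execute("""
    SELECT o.order_id, o.customer_id
    FROM orders AS o
    LEFT JOIN customers AS c ON c.customer_id = o.customer_id
    WHERE c.customer_id IS NULL
""").fetchall()
conn.close()

print(orphaned)  # [(3, 1005)]
```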

Duplicated relationships. Conflicting relationships cause confusion, redundancy, and reporting errors, leading to incorrect business insights.

Examples of issues:

- A "Product_catalogue" links the same product ID to multiple, slightly different product descriptions.

- An "Employees" table assigns the same manager ID to multiple departments, creating conflicting hierarchies.

Circular references. They occur when relationships between data form a loop, making it unclear which entity depends on the other.

Examples of issues:

- A "Tasks" table contains dependencies where Task 1 depends on Task 2, but Task 2 also depends on Task 1.

- A "Managers" table lists Employee A as Employee B’s manager, but Employee B is also listed as Employee A’s manager.
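Loops like these can be detected by following each reference chain and checking whether it ever returns to a record it has already visited. A minimal sketch, assuming each employee has at most one manager and using a hypothetical `find_cycle` helper:

```python
def find_cycle(manager_of):
    """Return one reporting loop as a list of IDs, or None if there is none."""
    for start in manager_of:
        path = []
        seen = set()
        current = start
        # Walk up the chain until it ends or revisits someone.
        while current in manager_of and current not in seen:
            seen.add(current)
            path.append(current)
            current = manager_of[current]
        if current in seen:
            return path[path.index(current):] + [current]
    return None

# Hypothetical employee -> manager mapping.
looped = {"A": "B", "B": "A", "C": "A"}
healthy = {"A": "B", "B": "C"}

loop_found = find_cycle(looped)
no_loop = find_cycle(healthy)
print(loop_found)  # ['A', 'B', 'A']
print(no_loop)     # None
```

Task dependencies, where one task can depend on several others, would need a general graph traversal instead of this single-chain walk.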

Mismatched data types between related fields. The problem occurs when linked fields in different tables store data in incompatible formats.

Examples of issues:

- A "Customers" table stores customer IDs as text (“1001”), but an "Orders" table stores customer IDs as numbers (1001).
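The effect of such a mismatch is easy to reproduce. In the sketch below (with invented ID values), comparing text IDs against numeric IDs finds no matches at all, even though one customer clearly exists in both lists.

```python
# Hypothetical key columns from two tables.
customer_ids = ["1001", "1002"]    # stored as text
order_customer_ids = [1001, 1003]  # stored as numbers

customer_types = {type(v).__name__ for v in customer_ids}
order_types = {type(v).__name__ for v in order_customer_ids}
types_match = customer_types == order_types

# "1001" != 1001, so a naive comparison silently finds nothing.
naive_matches = set(customer_ids) & set(order_customer_ids)
# Casting both sides to the same type recovers the real match.
cast_matches = set(customer_ids) & {str(v) for v in order_customer_ids}

print(types_match)    # False
print(naive_matches)  # set()
print(cast_matches)   # {'1001'}
```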

Each type of profiling addresses a different set of problems your dataset may have. Structure profiling reports how data is formatted, content profiling pinpoints errors within data, and relationship profiling discovers whether different datasets connect properly. Together, they provide a full picture of data quality, helping businesses trust their data before making decisions.

Data profiling process

Data profiling can involve both manual checks and automated processes, depending on the dataset size and complexity. While automation significantly speeds up profiling, some manual validation is often necessary to ensure accuracy and contextual understanding. Often automated tools handle large-scale scanning while analysts perform targeted manual checks to verify findings and interpret context-specific issues. So here’s an overview of a typical data profiling process.

Step 1. Understand data sources

Before diving into the data, gather key details.

Some of this can be automated using tools, but manual review helps ensure accuracy.

What can be automated?

Metadata extraction. Tools automatically retrieve metadata such as column names, information on data types, and constraints (limitations defining allowable formats, values and conditions for data within a particular column).

What should be reviewed manually?

- Verify that the data structure aligns with the business use case.

- Determine whether key fields are named clearly and logically.

Example: A tool extracts metadata from a sales database and identifies a column named "ID_123" as a primary key. During manual review, you realize the name is unclear and should be changed to "Order_ID" for better readability and consistency.
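To give a feel for what automated metadata extraction looks like, here is a small sketch against an in-memory SQLite database. It relies on SQLite's `PRAGMA table_info`, so other databases would need their own catalog queries; the `sales` table is invented to mirror the example above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE sales (ID_123 INTEGER PRIMARY KEY, amount REAL NOT NULL, note TEXT)"
)

# PRAGMA table_info returns: cid, name, type, notnull, dflt_value, pk.
columns = [
    {"name": name, "type": col_type, "not_null": bool(notnull), "primary_key": bool(pk)}
    for _cid, name, col_type, notnull, _default, pk in conn.execute(
        "PRAGMA table_info(sales)"
    )
]
conn.close()

for column in columns:
    print(column)
```

The output tells you that "ID_123" is the primary key, but deciding that "Order_ID" would be a clearer name remains a manual call.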

Step 2. Conduct structure, content, and relationship profiling when needed

Data profiling isn’t a one-size-fits-all process. Depending on the type of data and the business goals, you may use different profiling types.

Structure profiling is commonly used when integrating data with different formats from multiple sources and before migrating data to a new system.

Content profiling is also done before data integration, as well as before using data for reporting, analytics, or machine learning models, and whenever a dataset shows unexpected trends or anomalies.

Relationship profiling is essential when troubleshooting discrepancies between different tables.

Step 3. Document findings and fix the issues

Automated tools generate reports highlighting missing values, duplicates, and anomalies, but human oversight is necessary to determine which fixes to apply.

What can be automated?

Data cleansing suggestions. An AI-based tool can suggest transformations for inconsistent values.

What can be reviewed manually?

- Decide which fixes should be automated and which require human input (for example, merging duplicate records).

- Create data quality rules for future automation.

Example: A tool suggests merging duplicate clients based on similar names. You manually verify and confirm that some should be merged, but others are separate entities.

Step 4. Continuous monitoring and reprofiling

Data profiling isn’t a one-time task – it’s an ongoing process that ensures data quality over time. As businesses continuously generate, collect, and update data, new issues can emerge, making reprofiling and monitoring essential.

What can be automated?

Real-time monitoring. Tools like Talend or Ataccama can continuously profile new data as it flows into your system. This is especially relevant for data streaming tasks.


Alerts and notifications. Set up automatic alerts when issues arise (for example, a sudden increase in duplicate records or missing values).

Automated fixes. Some tools can apply predefined rules to correct common issues, like reformatting dates or filling in missing values based on patterns.

What can be reviewed manually?

Investigate recurring issues. If a certain problem keeps appearing, it might indicate a deeper data entry or system issue.

Refining business rules. Over time, profiling insights help improve validation rules, preventing errors at the source.

Auditing automated fixes. Ensure critical data changes (for example, merging customer records) are reviewed before being applied.

Example: A profiling tool detects a spike in missing ZIP codes in customer addresses. The system automatically fills in missing values using external reference data but flags records where confidence is low, requiring manual review.
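The detection part of that scenario can be sketched as a threshold check on each new batch. Everything here is an assumption for illustration: the function names, the baseline rate, and the tolerance would all come from your own monitoring setup.

```python
def missing_rate(rows, column):
    """Share of rows where the column is absent, None, or empty."""
    missing = sum(1 for row in rows if not row.get(column))
    return missing / len(rows)

def check_batch(rows, column, baseline_rate, tolerance=0.05):
    """Raise an alert flag if the missing rate exceeds baseline + tolerance."""
    rate = missing_rate(rows, column)
    return {
        "column": column,
        "missing_rate": rate,
        "alert": rate > baseline_rate + tolerance,
    }

# Hypothetical batch of newly arrived customer records.
new_batch = [
    {"customer": "A", "zip": "18503"},
    {"customer": "B", "zip": ""},
    {"customer": "C", "zip": None},
    {"customer": "D", "zip": "06901"},
    {"customer": "E"},
]

result = check_batch(new_batch, "zip", baseline_rate=0.10)
print(result)  # missing_rate is 0.6, so alert is True
```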

Now, let’s do a deeper review of popular data profiling tools.

Data profiling tools: commercial and open source

Selecting the best data profiling tool depends on your data complexity, business needs, and technical environment. Below are key factors to consider.

Cost and licensing model. Open-source tools are often a good fit for small projects, while enterprise solutions usually offer more automation and vendor support. Additionally, some products have subscription-based payment or flexible pricing based on usage. Also, factor in costs for training, implementation, and ongoing support.

Features and capabilities. Although most tools are capable of comprehensive profiling, you may want to keep in mind the need for continuous profiling. In this case, choose a tool that offers ongoing data quality checks and alerts.

Ease of use. If your team lacks technical expertise, look for a tool with simple interfaces and a no-code option. There are also solutions that generate easy-to-read insights on data quality issues.

Scalability and performance. If you have millions of records, ensure the tool can process them without performance slowdowns. If you work with big data or need remote access, consider a cloud-based solution. Besides, the tool should connect seamlessly with other components of your data infrastructure — be it a database, data hub, data warehouse, data lake, or BI tools.

AI-driven insights. Depending on your needs, consider advanced tools that use machine learning to detect hidden patterns in data.

Brief comparison of data profiling tools

Here, we will review a few popular tools on the market, but definitely do your own research as well.

Ataccama: AI-driven all-in-one tool for data quality management

Ataccama ONE is an all-in-one data quality, governance, and management platform that uses AI-driven automation to profile and clean data at scale. It’s designed for enterprises needing continuous monitoring and deep insights into data structure, relationships, and quality.

Key profiling features include:

- smart rule generation and automated rule suggestions,

- continuous monitoring,

- high-performance processing to handle billions of records, and

- seamless integration with relational and NoSQL databases, cloud sources, file storage, and streaming services.

Ataccama also has a cloud version to simplify installation and operations.

Best for: AI-driven data profiling and data governance at an enterprise level.

Data Ladder: code-free tool for complete data quality lifecycle

Data Ladder is a provider of DataMatch Enterprise – comprehensive software for code-free profiling, cleansing, matching, and deduplication that’s easy to use both for seasoned data analysts and novices. It comes with full-fledged data profiling functionality, and some of its features include:

- bulk profiling for multiple data sources,

- custom filters,

- map charts for location address values,

- scheduling for automatic profiling.

DataMatch Enterprise has a 30-day trial of fully functional software with no credit card required.

Best for: businesses needing data matching, deduplication, and profiling.

IBM InfoSphere Information Analyzer: profiling for data integration and migration

IBM InfoSphere Information Analyzer is a component of IBM InfoSphere Information Server, a data integration platform for data cleaning and transformation. It’s a go-to tool for data integration and data migration projects, and it makes it easy to export analysis results for use in other IBM InfoSphere products.

Some of its key features are:

- scenarios for different types of information analysis projects,

- recommendations on best choices for data structure,

- its lightweight browser-based client,

- publishing and transferring analysis results, and

- developing applications using an API to access Information Analyzer content.

Pricing for the cloud version of IBM InfoSphere starts at $31,000 a month. On-premises pricing is available on request.

By the way, we have a separate article reviewing IBM InfoSphere if you’re interested.

Best for: enterprise-level data profiling and governance for IBM InfoSphere users.

OpenRefine: free and perfect for small teams

OpenRefine is a free, open-source tool designed for data cleaning and transformation. While it includes some basic data profiling features, its real strength lies in managing messy, inconsistent datasets. It’s particularly useful for journalists, researchers, and analysts who work with CSV files, spreadsheets, and JSON data.

Among its main features are:

- faceting or using facets to drill through large datasets,

- fixing inconsistencies by merging values (aka clustering),

- matching datasets to external databases, and

- rewinding to previous dataset states.

OpenRefine has detailed documentation, but its learning curve may be too steep for newcomers. Note that its automation capabilities are limited and it doesn’t support real-time monitoring.

Best for: individuals and small teams cleaning and profiling messy data.

Talend Data Fabric: complete profiling with graphical representation

Talend Data Fabric is a complete data integration and governance platform that includes data profiling, cleansing, and transformation capabilities. It automates data quality checks, anomaly detection, and real-time monitoring, making it ideal for organizations handling large-scale and complex datasets.

Among its data quality features are:

- graphical representation of data statistics and reports,

- a built-in trust score to help you know which datasets require additional cleansing, and

- ML-based recommendations for addressing data quality issues.

Talend has a free trial with pricing available on request.

Best for: large enterprises needing a full-suite data integration and quality management platform.

Soda Core: free tool for quality checks, testing and monitoring

Soda Core is an open-source Python library and CLI tool for data quality and monitoring. It’s especially useful for teams that want to integrate data testing directly into their pipelines with SQL-based checks. Checks for Soda Core are written exclusively in SodaCL (Soda Checks Language), a YAML-based, domain-specific language that’s easy to write and read.

Soda Core’s features include:

- the ability to connect to over a dozen data sources at a time,

- building programmatic scans that can be used with orchestration tools like Apache Airflow,

- writing data quality checks using SodaCL.

Soda Core has a paid extension called Soda Library offering check suggestion prompts and check template creation.

Best for: developers and data engineers needing open-source data monitoring and testing.

YData profiling: free Python library for quick data exploration

YData profiling (previously Pandas profiling) is a Python-based data profiling library that generates detailed HTML reports with summary statistics, missing values, correlations, and more. It’s an excellent tool for data scientists, engineers, and analysts working in Jupyter Notebooks or Python environments.

Its key characteristics:

- generating detailed exploratory data analysis (EDA) reports with a few lines of code,

- working directly with Pandas DataFrames,

- reports integrated as a widget in a Jupyter Notebook,

- supporting large datasets, and

- customizing the report’s appearance.

Like any popular open-source package, YData profiling has helpful online communities and detailed documentation.

Best for: Python users who need quick exploratory data analysis.

Hopefully, this has given you a sufficient overview to start your own research into data profiling software.

Final data profiling tips

Before you go, here are some less obvious but highly useful data profiling tips.

Start with a small sample, then scale. Instead of profiling your entire dataset upfront, begin with a representative sample. This helps you identify key issues quickly and adjust your profiling strategy before processing large volumes of data.
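A simple random sample with a fixed seed is one easy way to start, since it makes your first profiling pass reproducible. The sketch below uses a hypothetical `sample_rows` helper; if some groups are rare, a stratified sample may represent them better than a purely random one.

```python
import random

def sample_rows(rows, k, seed=42):
    """Draw up to k rows at random; the fixed seed keeps runs repeatable."""
    rng = random.Random(seed)
    return rng.sample(rows, min(k, len(rows)))

# Hypothetical dataset of 1,000 order records.
rows = [{"order_id": i} for i in range(1000)]

sample = sample_rows(rows, 50)
print(len(sample))  # 50
```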

Look for hidden duplicates. Standard duplicate checks may miss slight variations in names, addresses, or product codes. Use fuzzy matching techniques to find near-duplicates, such as "Jon Snow" vs "John Snow" or "100 Main St." vs "100 Main Street."
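As a minimal sketch of fuzzy matching, Python's standard `difflib.SequenceMatcher` can score how similar two strings are. The 0.8 threshold and the `similarity` helper are assumptions; dedicated matching tools use more sophisticated algorithms, and comparing every pair gets slow on large datasets.

```python
from difflib import SequenceMatcher
from itertools import combinations

def similarity(a, b):
    """Similarity between 0 and 1, ignoring case."""
    return SequenceMatcher(None, a.casefold(), b.casefold()).ratio()

names = ["Jon Snow", "John Snow", "Arya Stark"]

# Compare every pair and keep those above the assumed threshold.
near_duplicates = [
    (a, b) for a, b in combinations(names, 2) if similarity(a, b) >= 0.8
]
print(near_duplicates)  # [('Jon Snow', 'John Snow')]
```

Pairs flagged this way are candidates for review rather than automatic merges.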

Check for hidden bias in data distribution. If 80 percent of your customer base comes from one demographic or region, your models might be biased. Profiling should reveal these imbalances so they can be addressed in decision-making.
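A quick way to surface such an imbalance is to measure the share of the most common value in a column. In the sketch below, the region data, the `dominant_share` helper, and the 80 percent cutoff are all illustrative assumptions.

```python
from collections import Counter

def dominant_share(values):
    """Return the most common value and the share of rows it covers."""
    counts = Counter(values)
    value, count = counts.most_common(1)[0]
    return value, count / len(values)

# Hypothetical customer regions.
regions = ["North"] * 8 + ["South", "West"]

top_region, share = dominant_share(regions)
is_skewed = share >= 0.8
print(top_region, share, is_skewed)  # North 0.8 True
```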

Maryna is a passionate writer with a talent for simplifying complex topics for readers of all backgrounds. With 7 years of experience writing about travel technology, she is well-versed in the field. Outside of her professional writing, she enjoys reading, video games, and fashion.
