Today's organizations deal with immense volumes of data that comes in different formats, lacks consistency, and often contains errors or missing values. These discrepancies become critical when a business wants to make informed decisions backed by analytical insights, because the accuracy of those insights depends on the quality of the data behind them. That’s why it's crucial to understand how to transform raw data into organized and accurate material.

This article will explore data wrangling, explain its importance, and outline the key steps. Additionally, we'll review five popular tools and present real-world use cases that exemplify data wrangling applications in various industries.

What is data wrangling (data munging)?

Data wrangling, also referred to as data munging, is the process of taking raw data and transforming it into a clean and structured format. The process occurs after data collection and before any data analysis takes place.

Data processing workflow

Data wrangling is essential for a few reasons.

Improved data accuracy and consistency. Standardizing disorganized or faulty data ensures that the material used for analysis is reliable and accurate. This also maximizes the trustworthiness of insights that can be later derived from it. Without proper wrangling, the insights drawn from the data may be inaccurate or incomplete, leading to flawed conclusions.

Easier access and collaboration. Through data wrangling, raw data is transformed into a format that is easier to understand and share. By simplifying and organizing data in a consistent manner, it becomes convenient for different departments or individuals to work with the same dataset. This promotes collaboration and knowledge sharing, as even non-experts can interpret the data efficiently.

Enhanced decision-making. Data wrangling results in reliable data insights, improving the effectiveness of decision-making processes within an organization. Clean data reduces the risk of taking actions based on inaccurate or incomplete information.

With data wrangling explained, let's break down its key stages next.

Data wrangling steps

While the specifics may vary, there are standard procedures that guide data wrangling.


This section will provide a comprehensive overview of the six key steps, each responsible for distinct and equally crucial operations.

Discovering

The first step of data wrangling is often referred to as discovery or exploration. Here, you prepare for subsequent stages. This involves comprehensively examining the dataset to understand its structure, content, and quality.

Here are some questions data scientists might ask at this stage:

- What is the source of the data?

- Are there additional data sources that could enhance the analysis?

- What are the specific business questions we aim to address with this data?

- How relevant is the data to the current business situation?

- How can we categorize the data to facilitate analysis?

- Are there any trends or correlations worth exploring?

Overall, during the discovery step, you explore the data, establish criteria for categorization, conceptualize potential uses, and ensure the data can address business needs.
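A quick profile of the raw data often answers several of these questions at once. The sketch below is a minimal illustration using only Python's standard library. The CSV content, the column names, and the `profile` helper are hypothetical and exist only to show the idea.

```python
import csv
import io

# Hypothetical raw export; columns and values are made up for illustration.
RAW_CSV = """customer_id,country,age,signup_date
1,US,34,2023-01-15
2,us,,2023-02-01
3,DE,forty,15/03/2023
4,,29,2023-04-10
"""

def profile(rows):
    """Count missing, numeric, and distinct values for every column."""
    report = {}
    for column in rows[0].keys():
        values = [row[column].strip() for row in rows]
        present = [value for value in values if value]
        report[column] = {
            "missing": len(values) - len(present),
            "numeric": sum(value.isdigit() for value in present),
            "distinct": len(set(present)),
        }
    return report

rows = list(csv.DictReader(io.StringIO(RAW_CSV)))
summary = profile(rows)
for column, stats in summary.items():
    print(column, stats)
```

Even this small report surfaces typical discovery findings: a missing country, an age stored as text, and country codes that differ only in letter case.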

Structuring

The structuring step of data wrangling involves transforming the raw data into a suitable format for analysis. Raw data is typically incomplete or misformatted for its intended application. For example, a field intended for numerical values may also include text entries. This phase includes categorizing data and standardizing data fields to ensure that they are appropriately labeled and reflect their content accurately.

Structuring also involves creating a single, unified dataset when data comes from multiple sources. While the specifics of the structuring stage may vary for structured and unstructured data, it is a crucial step in the data wrangling process for both. A well-structured dataset enables more efficient data manipulation.
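As a rough sketch of what structuring can look like in code, the example below maps two sources with different layouts onto one schema and coerces a numeric field that contains text. The source names, field names, and helper functions are assumptions made for illustration.

```python
# Two hypothetical sources describing customers with different layouts.
crm_records = [
    {"CustomerID": "101", "Full Name": "Ana Ruiz", "Spend": "1200.50"},
    {"CustomerID": "102", "Full Name": "Li Wei", "Spend": "n/a"},
]
web_records = [
    {"id": 103, "name": "Sam Okoro", "total_spend_usd": 310},
]

def to_float(value):
    """Convert a value to float, or return None when it is not numeric."""
    try:
        return float(value)
    except (TypeError, ValueError):
        return None

def from_crm(record):
    # Map CRM column names onto the unified schema.
    return {
        "customer_id": int(record["CustomerID"]),
        "name": record["Full Name"],
        "spend_usd": to_float(record["Spend"]),
        "source": "crm",
    }

def from_web(record):
    # Map web-shop column names onto the same unified schema.
    return {
        "customer_id": int(record["id"]),
        "name": record["name"],
        "spend_usd": to_float(record["total_spend_usd"]),
        "source": "web",
    }

unified = [from_crm(r) for r in crm_records] + [from_web(r) for r in web_records]
for row in unified:
    print(row)
```

Keeping a `source` field is one possible design choice: it makes it easier to trace a value back to its origin during later steps.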

Cleaning

Data cleaning is often confused with data wrangling. Data cleaning focuses on identifying and correcting errors, inconsistencies, and inaccuracies within the dataset, whereas data wrangling encompasses a broader set of steps, including this one.

Data cleaning includes data profiling, which is the process of reviewing the content and quality of a dataset to detect potential issues or anomalies. The tasks include ensuring consistency in data formats, units of measurement, and terminology; deleting empty cells or rows as they can skew analysis results; and removing outliers—data points that significantly deviate from the rest.

This phase is essential for ensuring data quality, reliability, and integrity before proceeding with analysis. Check our article on dataset preparation to learn more.
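The cleaning tasks described above can be sketched in a few lines of Python. This is a minimal illustration with made-up order records. The alias table and the 1.5 × IQR outlier rule are assumptions chosen for the example, not the only reasonable options.

```python
import statistics

# Hypothetical order records; field names and values are illustrative only.
orders = [
    {"country": "US", "amount": 25.0},
    {"country": "usa", "amount": 30.0},
    {"country": "U.S.", "amount": 27.5},
    {"country": "DE", "amount": None},
    {"country": "de", "amount": 22.0},
    {"country": "DE", "amount": 31.0},
    {"country": "US", "amount": 28.0},
    {"country": "US", "amount": 950.0},
]

# Assumed mapping from spelling variants to one canonical code.
COUNTRY_ALIASES = {"us": "US", "usa": "US", "u.s.": "US", "de": "DE"}

def clean(records):
    # 1. Standardize terminology so each country has a single spelling.
    standardized = [
        {**r, "country": COUNTRY_ALIASES.get(r["country"].lower(), r["country"])}
        for r in records
    ]
    # 2. Drop rows whose amount is missing.
    complete = [r for r in standardized if r["amount"] is not None]
    # 3. Remove outliers that fall outside 1.5 * IQR of the quartiles.
    amounts = [r["amount"] for r in complete]
    q1, _, q3 = statistics.quantiles(amounts, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [r for r in complete if low <= r["amount"] <= high]

cleaned = clean(orders)
for row in cleaned:
    print(row)
```

Whether an extreme value is an error or a genuine observation depends on the business context, so in practice it is worth reviewing flagged rows before discarding them.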

Enriching

The step of enriching is optional and occurs if you decide that you need additional data. You can add more context for analysis by incorporating internal (generated or owned by your organization) and external data sources (public databases or third-party vendors) to add new values.

By enriching the dataset, you can identify new connections, dependencies, or patterns and provide a more comprehensive understanding of the underlying data.
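In code, enrichment often amounts to a lookup or join against a reference table. The sketch below assumes a hypothetical internal bookings list and an external airport reference. The names and the `enrich` helper are illustrative only.

```python
# Hypothetical internal dataset.
bookings = [
    {"booking_id": 1, "airport": "JFK", "fare": 420.0},
    {"booking_id": 2, "airport": "CDG", "fare": 310.0},
    {"booking_id": 3, "airport": "XXX", "fare": 150.0},
]

# Hypothetical external reference data keyed by airport code.
airport_reference = {
    "JFK": {"city": "New York", "country": "US"},
    "CDG": {"city": "Paris", "country": "FR"},
}

def enrich(records, reference, key, new_fields):
    """Add reference fields to each record; unmatched keys get None values."""
    empty = dict.fromkeys(new_fields)
    return [{**record, **reference.get(record[key], empty)} for record in records]

enriched = enrich(bookings, airport_reference, "airport", ["city", "country"])
for row in enriched:
    print(row)
```

Records without a match are kept rather than dropped, which mirrors a left join and leaves the decision about unmatched rows to a later step.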

Verifying

The fifth step of data wrangling, known as validating or verifying, aims to ensure the accuracy and reliability of the transformed data and to confirm that it is ready for analysis. It involves thorough checks and assessments to confirm the data's completeness, correctness, and consistency. These checks include tasks like verifying that data adheres to predefined formatting rules and cross-referencing data with external sources.

Validated datasets instill confidence in the analysis results, mitigate the risk of inaccurate conclusions, and support the credibility of future data-driven insights.
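Checks for completeness, correctness, and consistency can be expressed as explicit rules. The following is a minimal sketch. The field names, the date format, and the age range are assumptions picked for the example.

```python
import re

# Assumed formatting rule: dates must look like YYYY-MM-DD.
DATE_PATTERN = re.compile(r"^\d{4}-\d{2}-\d{2}$")

def validate(records):
    """Return (row_index, message) tuples; an empty list means all checks passed."""
    problems = []
    seen_ids = set()
    for index, record in enumerate(records):
        customer_id = record.get("customer_id")
        # Completeness and uniqueness of the identifier.
        if customer_id is None:
            problems.append((index, "missing customer_id"))
        elif customer_id in seen_ids:
            problems.append((index, "duplicate customer_id"))
        else:
            seen_ids.add(customer_id)
        # Adherence to the predefined date format.
        if not DATE_PATTERN.match(str(record.get("signup_date", ""))):
            problems.append((index, "signup_date is not YYYY-MM-DD"))
        # Plausibility of numeric values.
        age = record.get("age")
        if not isinstance(age, int) or not 0 <= age <= 120:
            problems.append((index, "age outside 0-120"))
    return problems

records = [
    {"customer_id": 1, "signup_date": "2023-01-15", "age": 34},
    {"customer_id": 1, "signup_date": "15/03/2023", "age": 29},
    {"customer_id": 3, "signup_date": "2023-04-10", "age": 240},
]
issues = validate(records)
for row_index, message in issues:
    print(row_index, message)
```

Returning a list of problems instead of raising on the first failure makes it possible to review every issue in one pass.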

Publishing

The sixth and final step of data wrangling is publishing, where you prepare the processed dataset and make it available to stakeholders for sharing, analysis, and decision-making, whether within the organization or for the broader public. This phase focuses on putting the data in a preferred format that is understandable and easy to navigate.

It's a good practice to create documentation that provides essential context, definitions, and instructions for using the dataset effectively. It may include data dictionaries defining the meaning and characteristics of data fields and attributes, as well as metadata that contains information about the dataset’s source, creation date, and other relevant aspects.
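One lightweight way to publish a dataset with its documentation is to export the data as CSV and ship a small metadata file alongside it. The sketch below produces both as strings. The `publish` helper, the field descriptions, and the source label are hypothetical.

```python
import csv
import io
import json
from datetime import date

def publish(records, field_descriptions, source):
    """Return the dataset as CSV text plus a JSON data dictionary with metadata."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=list(field_descriptions), lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)
    metadata = {
        "source": source,
        "created": date.today().isoformat(),
        "row_count": len(records),
        "fields": field_descriptions,  # the data dictionary
    }
    return buffer.getvalue(), json.dumps(metadata, indent=2)

records = [
    {"customer_id": 101, "country": "US", "spend_usd": 1200.5},
    {"customer_id": 103, "country": "DE", "spend_usd": 310.0},
]
field_descriptions = {
    "customer_id": "Unique customer identifier",
    "country": "ISO 3166-1 alpha-2 country code",
    "spend_usd": "Total spend in US dollars",
}
csv_text, metadata_json = publish(records, field_descriptions, "hypothetical CRM export")
print(csv_text)
print(metadata_json)
```

In a real pipeline the two strings would typically be written to files or uploaded to a shared location so that the data and its description travel together.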

Data wrangling tools

Data professionals traditionally rely on Python and R to prepare data for analysis. But today, even people without coding skills can perform data wrangling tasks using off-the-shelf tools. They decrease the need for manual intervention, help prepare data faster, and allow organizations to focus their resources and expertise on more strategic aspects like generating insights and interpreting results.

Data wrangling tools overview

Here, we review five of the most popular data wrangling tools, highlighting their key features and functionalities. Note that the pricing for each tool is custom and provided upon request.

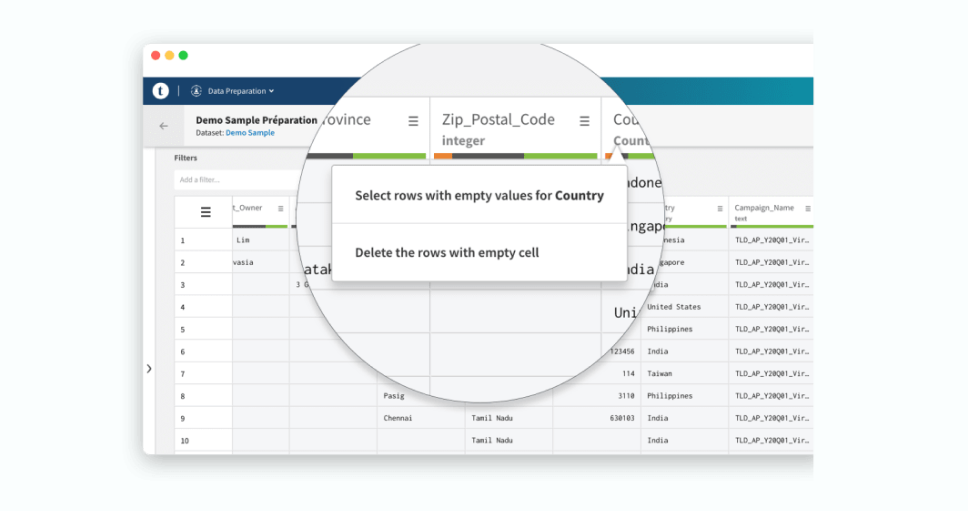

Talend: Custom data wrangling rules and flexible workflows

Talend Data Preparation, a part of the Talend platform covering the entire data management cycle, is a browser-based tool for self-service data profiling. Users can define rules or criteria for how their data should be cleaned or enriched to ensure consistency. It also allows you to set role-based access controls (restricting access to data based on users' roles or permissions) and workflow-based curation (defining processes for managing and approving changes to data).

Talend Data Preparation feature. Source: Talend

Talend partners with leading cloud service providers, data warehouses, and analytics platforms, including Amazon Web Services, Microsoft Azure, Google Cloud Platform, Snowflake, and Databricks.

Talend also offers a Data Integration feature that leverages over 1,000 connectors to different cloud and on-premises data sources. This enables you to explore information outside your business systems and enrich your datasets. The platform embeds quality measures throughout the data integration process, checking for issues as data moves.

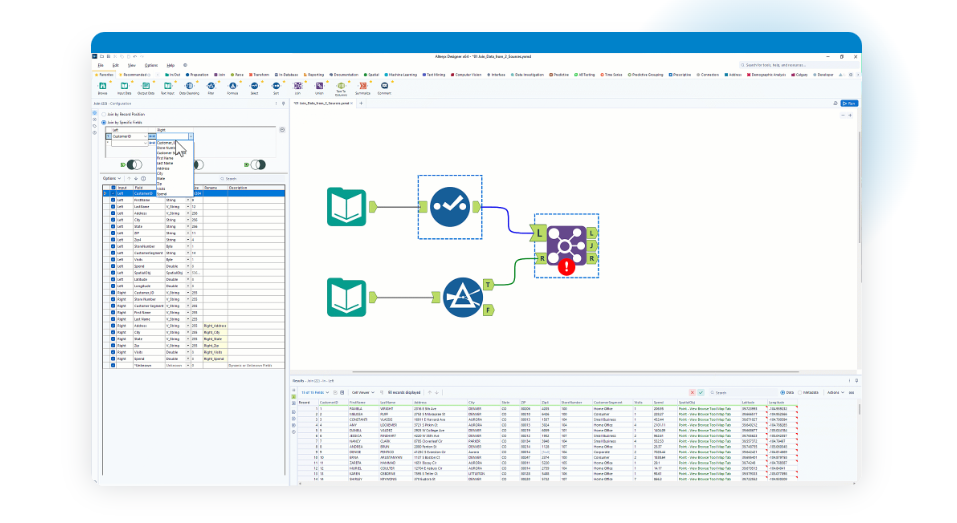

Alteryx: Centralizing data and tracking progress with a visual canvas

Alteryx offers comprehensive on-prem and cloud solutions for data wrangling tasks.

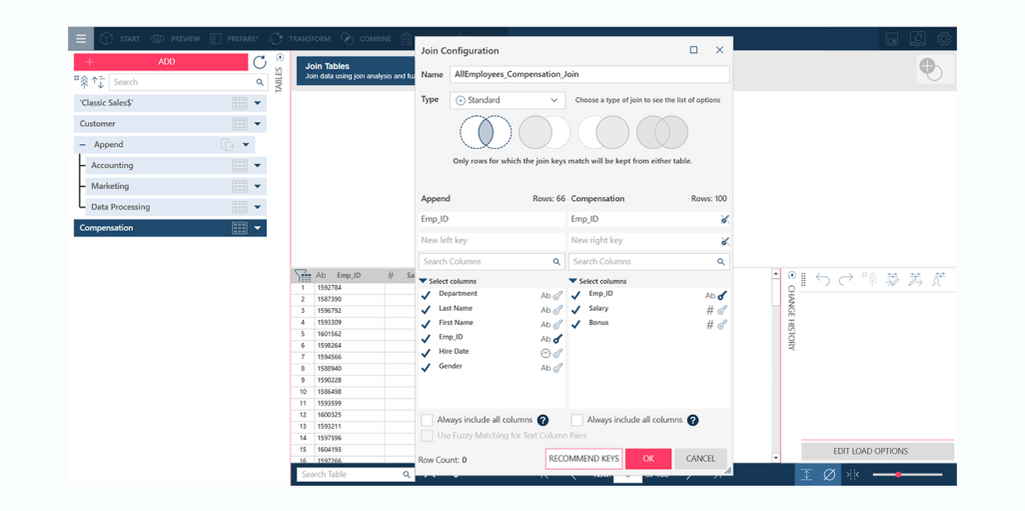

Designer is an on-premises drag-and-drop tool that allows users to bring disparate data together and blend it into a unified dataset. It also covers data analysis and monitors each step of the analytic process with a visual canvas. The platform connects to over 80 data sources, including cloud-based platforms Databricks and Snowflake.

Alteryx Designer Cloud (formerly Trifacta Wrangler) performs similar tasks but has unlimited scalability and built-in data governance. It also uses AI-driven suggestions to lead you through the data wrangling process.

There are other useful tools, such as Server, for sharing your activities and scaling them organization-wide, and Intelligence Suite, which can categorize and extract text from PDFs and images, leveraging Google Tesseract’s OCR (Optical Character Recognition) capabilities.

Alteryx Designer interface. Source: Alteryx

Additionally, Alteryx offers an Auto Insights feature that applies machine learning algorithms to analyze your data, detect important patterns, and explain them in simple and understandable language.

The Alteryx AI Platform for Enterprise Analytics leverages an AI engine called AiDIN to generate text-based reports, predictions, and recommendations to inform critical business decisions.

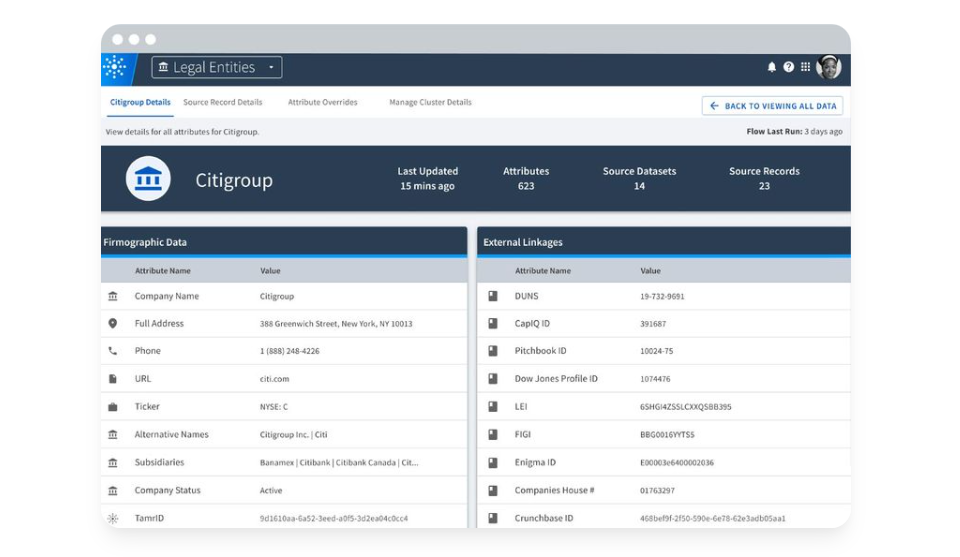

Tamr: Automatic error handling and data matching

Tamr provides templates that streamline the process of consolidating messy or disparate source data into clean and analytics-ready datasets. These templates offer user-friendly interfaces that were designed for use by both humans and machines and are accessible for analytical systems across the organization.

Tamr’s data product template interface. Source: Tamr

Tamr's templates come with pre-defined schemas that serve as starting points for defining the structure of your data, providing a standardized framework that helps ensure consistency and alignment across different datasets.

Tamr leverages AI and machine learning models to track changes, automatically identify errors, and resolve duplicates. The platform also uses AI to compare attributes from different sources so that matching records are identified accurately. This is especially advantageous when dealing with extensive and complex datasets, as manually matching data attributes is time-consuming and prone to errors.
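To give a feel for what duplicate resolution involves, here is a deliberately simple sketch based on string similarity from Python's standard library. It is not Tamr's method or API. A plain similarity ratio stands in for the machine learning models described above, and the supplier names and the 0.85 threshold are assumptions.

```python
from difflib import SequenceMatcher

def normalize(name):
    """Lowercase, drop periods and commas, and collapse whitespace."""
    return " ".join(name.lower().replace(".", "").replace(",", "").split())

def similarity(a, b):
    """Similarity ratio between two normalized names, from 0.0 to 1.0."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()

def find_duplicates(names, threshold=0.85):
    """Return pairs of names whose similarity meets the assumed threshold."""
    pairs = []
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            score = similarity(names[i], names[j])
            if score >= threshold:
                pairs.append((names[i], names[j], round(score, 2)))
    return pairs

suppliers = ["Acme Corp.", "ACME Corp", "Acme Corporation", "Globex Inc", "Initech LLC"]
duplicates = find_duplicates(suppliers)
for pair in duplicates:
    print(pair)
```

With this threshold, "Acme Corporation" is not matched to the other Acme entries. Cases like this are where hand-written rules reach their limits and learned matching models become attractive. The pairwise comparison also grows quadratically with the number of records, so it only suits small lists.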

Astera: Standardizing data from numerous unstructured sources with AI

Astera offers two data wrangling tools: Astera Centerprise and Astera ReportMiner.

Astera Centerprise empowers users to cleanse raw data and transform it into a refined, standardized, enterprise-ready format. You can create custom validation rules for advanced data profiling and debugging.

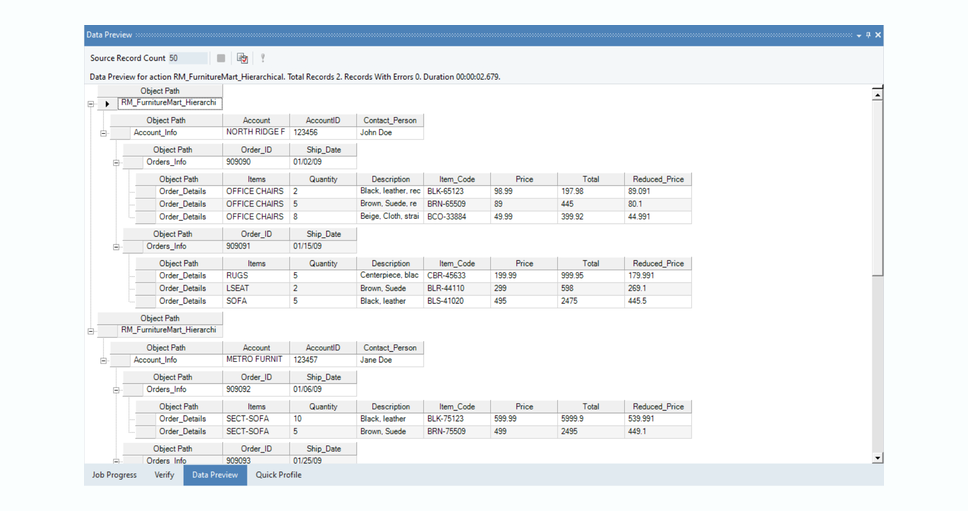

Data Preview window displays the extracted data before exporting. Source: Astera ReportMiner

Astera ReportMiner extracts data from various unstructured sources, making it suitable for businesses handling numerous invoices, purchase orders, tax documents, and other files. ReportMiner supports PDF, text, RTF, and PRN formats. The tool leverages AI to automatically generate extraction templates and capture valuable information from unstructured files. Additionally, users can export the extracted data to other databases, BI tools, and ERP and CRM systems.

Altair: Over 80 functions for quick data wrangling

Altair offers a platform with data wrangling capabilities called Altair Monarch.

Altair Monarch interface. Source: Altair

Altair Monarch is a desktop-based self-service solution that can quickly convert disparate data formats into standardized rows and columns ready for analysis. It has over 80 functions to transform messy data into usable datasets. Monarch connects to multiple data sources, including structured and unstructured, cloud-based, and big data.

Apart from providing data wrangling solutions, Altair's software suite includes a diverse range of products—from data visualization to generative AI tools.

Data wrangling examples

In this section, we'll look at how different industries apply data wrangling to address problems.

Travel companies often collect demographic, behavioral, and transactional data about their customers. Data wrangling allows them to integrate this data to create customer segments based on travel preferences, booking history, loyalty status, and spending patterns. Travel companies also gather information on historical pricing, competitor fares, demand patterns, and market trends. Analyzing such data allows businesses to adjust prices dynamically and optimize their revenue management strategies.
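As an illustration of the segmentation idea, the sketch below aggregates hypothetical booking records into customer profiles and applies simple rules. The thresholds and segment names are invented for the example. Real segmentation would typically use more attributes, such as loyalty status and travel preferences.

```python
from collections import defaultdict

# Hypothetical transactional data: one record per booking.
bookings = [
    {"customer_id": "C1", "amount": 900.0},
    {"customer_id": "C1", "amount": 800.0},
    {"customer_id": "C1", "amount": 700.0},
    {"customer_id": "C2", "amount": 150.0},
    {"customer_id": "C3", "amount": 100.0},
    {"customer_id": "C3", "amount": 120.0},
    {"customer_id": "C3", "amount": 90.0},
]

def build_profiles(records):
    """Aggregate bookings into per-customer trip counts and total spend."""
    profiles = defaultdict(lambda: {"trips": 0, "spend": 0.0})
    for record in records:
        profile = profiles[record["customer_id"]]
        profile["trips"] += 1
        profile["spend"] += record["amount"]
    return dict(profiles)

def segment(profile, min_trips=3, min_spend=2000.0):
    """Assign a segment label using assumed, illustrative thresholds."""
    if profile["trips"] >= min_trips:
        if profile["spend"] >= min_spend:
            return "frequent high-value"
        return "frequent budget"
    return "occasional"

segments = {cid: segment(p) for cid, p in build_profiles(bookings).items()}
print(segments)
```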

Healthcare organizations deal with vast amounts of patient data from electronic health records (EHRs), medical imaging systems, wearable devices, laboratory test results, and more. Data wrangling is essential for integrating disparate data sources to create comprehensive patient profiles for better diagnosis, treatment planning, and overall decision-making.

Financial institutions rely on data wrangling to transform and organize data from multiple sources, such as transaction records from banking systems, customer information databases, and regulatory reports. Clean and consolidated data can then be analyzed to identify potential risks, prevent fraudulent activities, and evaluate investment opportunities.

Retailers collect data from multiple sources, such as point-of-sale (POS) systems, online sales platforms, customer loyalty programs, and supply chain operations. Data wrangling helps combine and standardize this data to gain insights into customer behavior, preferences, and purchasing patterns. Retailers can optimize inventory levels, reduce stockouts, and minimize excess inventory carrying costs. Businesses can also target specific customer segments with relevant promotions and recommendations by combining data from CRM systems and online interactions.
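A small sketch of the retail scenario: sales from a POS system and an online store use different field names and inconsistent product codes, so they are standardized, combined, and compared with stock levels. All data, field names, and the stockout rule are hypothetical.

```python
from collections import Counter

# Hypothetical sales from two channels with different layouts.
pos_sales = [
    {"sku": "A-100", "qty": 4},
    {"sku": "B-200", "qty": 1},
    {"sku": "A-100", "qty": 3},
]
online_sales = [
    {"product_code": "a-100", "quantity": 5},
    {"product_code": "C-300", "quantity": 2},
]
# Hypothetical units currently in stock per SKU.
inventory = {"A-100": 10, "B-200": 25, "C-300": 8}

def combined_demand(pos, online):
    """Sum units sold per SKU across both channels, using uppercase SKUs."""
    demand = Counter()
    for sale in pos:
        demand[sale["sku"].upper()] += sale["qty"]
    for sale in online:
        demand[sale["product_code"].upper()] += sale["quantity"]
    return demand

demand = combined_demand(pos_sales, online_sales)
# Flag SKUs where recent demand exceeds the stock on hand.
at_risk = sorted(sku for sku, units in demand.items() if units > inventory.get(sku, 0))
print(dict(demand))
print(at_risk)
```

Without the code standardization step, "a-100" and "A-100" would be counted as separate products and the stockout risk would go unnoticed.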

In these industries, data wrangling is crucial for transforming raw, unstructured data into clean, organized datasets that support better analytics, decision-making, and business insights.