The competition between Databricks and Snowflake, the titans in the big data analytics space, has become as dramatic as it used to be between Apple and Microsoft. They even came up with new marketing terms to distinguish themselves: Databricks promotes itself as a "data lakehouse," and Snowflake positions itself as the "AI data cloud."

Indeed, Snowflake is a cloud data warehouse, and Databricks is an analytical platform built on top of Apache Spark that combines the capabilities of a data lake and data warehouse. However, as both companies evolve, their offerings are increasingly overlapping—Snowflake expands into data science and machine learning, while Databricks enhances its SQL and, thus, business intelligence capabilities, blurring the lines between their traditional roles.

In this article, we’ll explore the actual differences between the two platforms to help you select the best solution for your business.

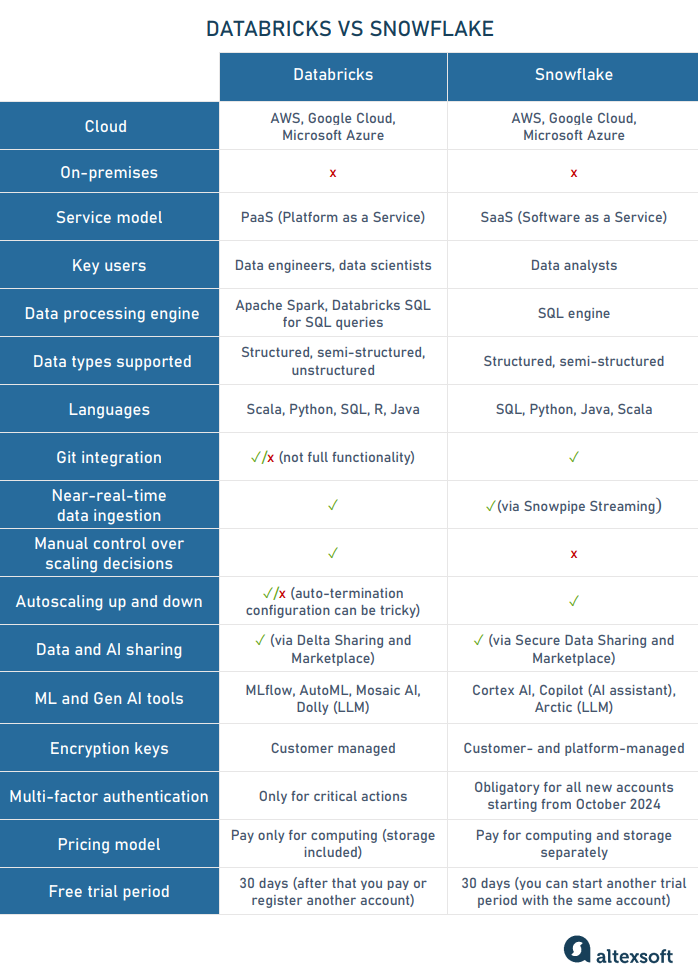

Databricks vs Snowflake key features compared

But before we compare these data solutions in detail, we’ll provide a few examples of how they can be used.

What Snowflake and Databricks are used for

Both the cloud-based data warehouse and the analytics platform can serve various purposes. Here are some of the key use cases.

Business intelligence and data analytics

Data analytics and business intelligence are primary use cases for both Snowflake and Databricks due to their ability to consolidate large volumes of data from multiple sources. This centralization allows for efficient querying, reporting, and analysis, enabling organizations to generate actionable insights and make data-driven decisions.

The Australian health insurance fund nib group adopted Snowflake as their cloud data warehouse solution due to its comprehensive out-of-the-box BI capabilities. It seamlessly integrates with the company’s existing cloud infrastructure, efficiently handles large volumes of data, and dynamically scales to meet fluctuating business demands. By directly querying Snowflake, the team can swiftly compute KPIs in Tableau for various metrics spanning claims, sales, policies, and customer behavior.

In turn, one of the world's biggest pharmaceutical and biotechnology companies, Bayer, used Databricks to develop ALYCE, an advanced analytics platform specifically designed for the clinical data environment. ALYCE facilitates the analysis of extensive and complex clinical data while ensuring compliance with regulatory standards. The platform employs business intelligence and machine learning to speed up clinical trial data reviews.

IoT streaming data insights

Although Databricks and Snowflake were originally intended for batch ingestion, they can handle data streaming and near-real-time or real-time analytics.

Using Snowflake, San Francisco International Airport (SFO) successfully manages extensive data from various sources, including third-party applications that continuously transmit real-time data. For instance, the Transportation Network Companies (TNC) app tracks rideshare movements within the airport and streams events like entry, drop-off, pick-up, and exit into a Snowflake table. This data is crucial for operational optimization, compliance, investigating and handling incidents, and revenue auditing.

Another use case is a whole different ballgame. A US Major League Baseball team, the Texas Rangers, uses Databricks to capture data at hundreds of frames per second to analyze player mechanics in near real-time. It allows immediate insights for making quick, informed decisions on personnel management and injury prevention.

Building custom Gen AI models

Cloud data platforms offer scalable, high-performance infrastructure for storing, processing, and managing large datasets, providing seamless access to compute power and data for training machine learning algorithms, including generative AI models. This is where Databricks excels.

Workday, an American platform for finance and HR, collaborated with Databricks to create a large language model (LLM) to transform inputs like job titles, company names, and required skills into new job descriptions for the site.

Another example is the creative platform Shutterstock, which launched Shutterstock ImageAI. Powered by Databricks Mosaic AI (the main Databricks tool for AI and ML), this image-generating model creates high-quality, photorealistic pictures. Because the AI model was trained only on Shutterstock’s licensed images, the outputs are legally compliant and meet professional standards. Businesses can integrate ImageAI into their applications and fine-tune it to meet specific brand requirements.

Databricks Lakehouse vs Snowflake: data storage capabilities and data types supported

Databricks and Snowflake are both available on AWS, Microsoft Azure, and Google Cloud Platform, using their cloud object storage. This technology accommodates massive volumes of unstructured data (images, audio, video, texts) and serves as the foundation for data lakes. Benefitting from almost unlimited scalability, Databricks and Snowflake follow their specific ways of organizing and handling data.

Databricks relies on Delta Lake, a tabular storage layer on top of the existing data lake. It guarantees reliability and consistency of operations but, at the same time, supports all types of data: structured, semi-structured, and unstructured. This gives Databricks a significant advantage in managing data variety, allowing users to extract insights from unstructured sources.

Snowflake stores information in its internal columnar tables divided into micro-partitions — small units containing between 50 and 500 MB of data. Unlike Databricks, Snowflake automatically compresses and organizes data for better scaling, faster SQL querying, and storage efficiency.
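To get a feel for the numbers, here is a back-of-the-envelope sketch of how many micro-partitions a table might be split into, given the 50 to 500 MB range above. The 1 TB table and the decimal TB-to-MB conversion are assumptions for illustration; the real layout is decided by Snowflake.

```python
import math

# Micro-partition size bounds quoted in the text above, in megabytes.
MIN_PARTITION_MB = 50
MAX_PARTITION_MB = 500


def micro_partition_range(table_size_mb: float) -> tuple[int, int]:
    """Return (fewest, most) micro-partitions a table of this size could need."""
    fewest = math.ceil(table_size_mb / MAX_PARTITION_MB)
    most = math.ceil(table_size_mb / MIN_PARTITION_MB)
    return fewest, most


# Hypothetical 1 TB table, using 1 TB = 1,000,000 MB for simplicity.
fewest, most = micro_partition_range(1_000_000)
print(f"Between {fewest:,} and {most:,} micro-partitions")
```

Under these assumptions, a 1 TB table lands somewhere between 2,000 and 20,000 micro-partitions.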


Besides structured data, Snowflake supports semi-structured data. After loading JSON or XML files, you can turn them into a table on the fly just by specifying which keys from the JSON file should become columns. There’s no need to parse or transform the data first; Snowflake builds a table, and you can work with it immediately.
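As a rough illustration of "specifying which keys should become columns," the sketch below builds a query using Snowflake's `column:key::TYPE` notation for semi-structured data. The helper function, the `raw_events` table, and the `payload` column are hypothetical names made up for this example, and the helper does no escaping, so it is not meant for untrusted input.

```python
def build_json_select(table: str, variant_col: str, columns: dict[str, str]) -> str:
    """Build a SELECT that turns chosen JSON keys into typed columns.

    Each key is addressed as `variant_col:key` and cast with `::TYPE`.
    """
    projections = [
        f"{variant_col}:{key}::{sql_type} AS {key}"
        for key, sql_type in columns.items()
    ]
    return f"SELECT {', '.join(projections)} FROM {table}"


# Hypothetical table `raw_events` whose `payload` column holds the loaded JSON.
query = build_json_select(
    "raw_events",
    "payload",
    {"user_id": "NUMBER", "event_type": "STRING"},
)
print(query)
```

The resulting statement can be run in a worksheet or wrapped in a view, so analysts see ordinary columns instead of raw JSON.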

Programming languages supported

As Databricks is based on Spark, its primary language is Scala. But the platform also supports Python, Java, R, and SQL. All these options except Java are available directly in Databricks notebooks. For Java code, you can use a remote Databricks workspace in IntelliJ IDEA or Eclipse.

Snowflake was originally limited to SQL. However, Snowpark API, introduced in 2022, allows users to query and process data in Snowflake using Python, Java, and Scala. You can create Snowflake-based applications with Java and Scala in IntelliJ IDEA or use Visual Studio Code and Jupyter Notebook for Scala and Python development. Python coding is also supported by internal tools — Snowflake worksheets and notebooks.

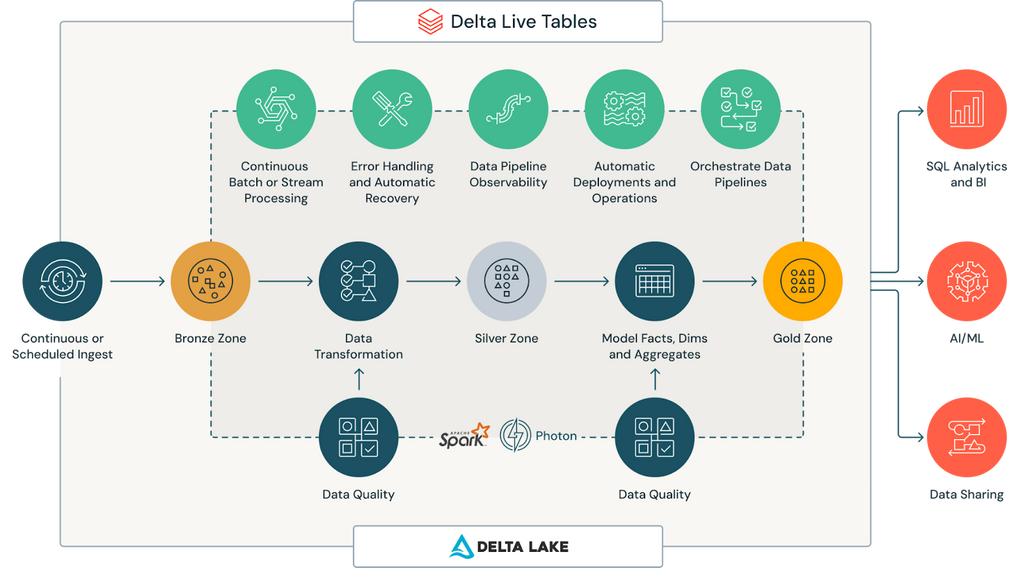

ETL/ELT support

Both platforms support ETL/ELT with a range of tools and features. They are pre-connected with popular ETL tools like Informatica, Talend, and Fivetran and with Apache Airflow for orchestration.

Databricks also offers its own Jobs feature for orchestrating tasks within data pipelines.

Delta Live Tables – a declarative ETL framework. Source: Databricks

Additionally, Delta Live Tables (DLT), launched in 2022, enables users to simplify ETL processes: you can declaratively define how data should flow and be transformed through the pipeline. It automatically manages the underlying processes (orchestration, cluster management, data quality checks, tracking data lineage, error handling, and recovery).

In Snowflake, you can build automated ETL/ELT with Snowflake tasks, organizing them into a sequence with task graphs. A task graph flows only in one direction and has a limit of 1000 tasks. Dynamic tables are another internal tool to simplify ETL/ELT design. They join and aggregate source tables, transforming the data into the required format for final consumption and analysis.
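The two task graph constraints mentioned above (one direction only, at most 1000 tasks) can be checked with a few lines of plain Python. This is only a sketch of the idea using the standard-library `graphlib` module, not Snowflake's own validation; the task names are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

MAX_TASKS = 1000  # limit stated in the text above


def validate_task_graph(dependencies: dict[str, set[str]]) -> list[str]:
    """Return a valid run order, or raise ValueError if a rule is broken.

    `dependencies` maps each task name to the set of tasks it must run after.
    """
    all_tasks = set(dependencies)
    for predecessors in dependencies.values():
        all_tasks |= predecessors
    if len(all_tasks) > MAX_TASKS:
        raise ValueError(f"{len(all_tasks)} tasks exceeds the limit of {MAX_TASKS}")
    try:
        # A topological order exists only if the graph has no cycles.
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as exc:
        raise ValueError("task graph contains a cycle") from exc


# Hypothetical three-step pipeline: load -> transform -> publish.
order = validate_task_graph({
    "transform": {"load"},
    "publish": {"transform"},
})
print(order)
```

A graph where two tasks depend on each other would be rejected, which is what "flows only in one direction" means in practice.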


User interface

In Databricks, you have several ways to interact with data:

- an SQL editor to write and run SQL queries;

- AI/BI dashboards to create, view, change, and share visualizations; and

- Databricks Notebooks, a development environment for data engineers, data scientists and analysts. Though it supports SQL, Python, R, and Scala, the debugger is not available for Scala and R.

All Databricks objects and interfaces are accessible via a Databricks workspace, where you can search for, organize, and manage datasets, notebooks, ML experiments, SQL queries, dashboards, and more.

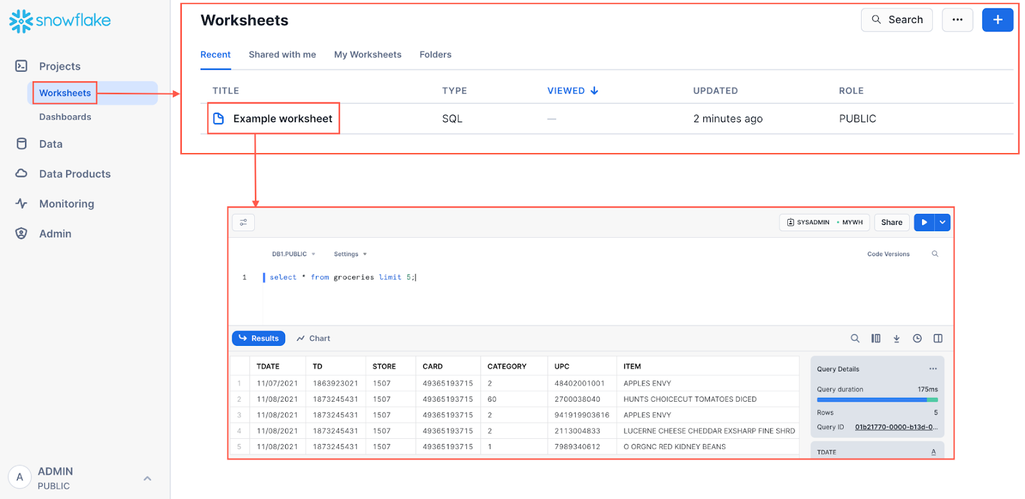

Snowflake's interface, called Snowsight, is much more user-friendly and easy to grasp for analysts and other non-tech people. You can:

- write and run queries in a worksheet — an interactive interface that provides an environment to analyze data using SQL or Python and view results;

- visualize results in charts and dashboards;

- use Snowflake Copilot (an LLM-powered assistant that accepts natural language requests and can generate and refine SQL queries) to help with analysis; and, finally

- write and execute SQL and Python code in notebooks.

Snowflake’s Worksheets. Source: Snowflake

Data governance

Databricks offers governance for data and AI through the Unity Catalog. It provides a user-friendly interface for managing data, notebooks, machine learning models, dashboards, and other assets. You can define specific access policies for individual users and groups.

Snowflake’s data governance key functionalities include data quality and data integrity monitoring, which utilizes system and user-defined metrics. The platform also allows for tagging columns that contain sensitive information for regulatory compliance and provides a historical view of access. Users can track policies and report on their implementation through intuitive dashboards in Snowsight.

Security and data protection

Databricks and Snowflake both prioritize data security but approach it differently.

Databricks emphasizes transparency so that users can manage their own encryption keys. The platform sticks to AES-256 encryption for data at rest, and TLS 1.2 for data in transit. It uses short-lived tokens for most actions and multi-factor authentication (MFA) only for critical ones.

Snowflake offers built-in, multi-layered security, including AES-256 encryption, role-based access control down to the row and column level, IP whitelisting, and private connectivity. It uses platform-managed encryption keys and also supports customer-managed keys, but only in combination with Snowflake’s (this feature is called Tri-Secret Secure). Since October 2024, Snowflake has enforced multi-factor authentication by default for all new user accounts. It also strongly recommends OAuth for external applications working with Snowflake.

Data sharing

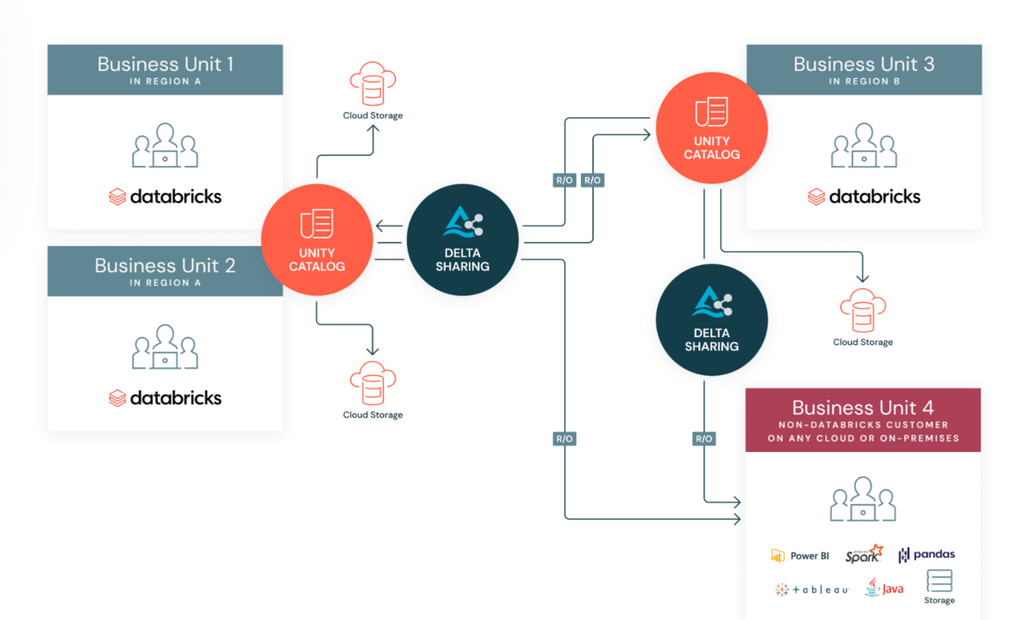

Databricks enabled real-time data collaboration in 2021 by launching Delta Sharing, an open protocol designed for secure data exchange. It allows organizations to seamlessly share information with customers and partners across cloud platforms. Due to native integration with the Unity Catalog, you can maintain consistent data governance, regardless of the cloud infrastructure or tools in use.

Delta Sharing use cases. Source: Databricks

In 2022, Databricks also presented Databricks Marketplace, a centralized platform where providers can publicly list and share data products or services with other Databricks users.

However, it's worth noting that Databricks was playing catch-up in this regard. One of Snowflake’s standout features from the very beginning was its capability to securely share data without replication, all within a scalable, GDPR-compliant framework. You can grant other Snowflake users controlled read-only access to a specific dataset, allowing them to query and analyze the shared data.

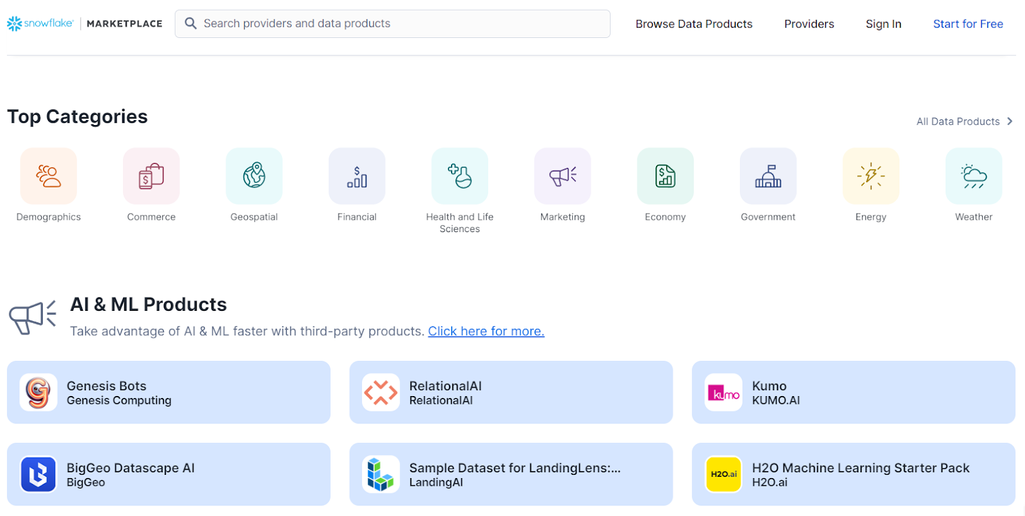

You can also share data, apps, AI assets, and more via Snowflake Marketplace with multiple consumers at once rather than managing a separate share for each of them.

Snowflake Marketplace. Source: Snowflake.

The marketplace also lets you monetize your data products and access datasets and tools from multiple third-party providers for business intelligence, data science, or other purposes.

Scalability

How far Databricks scales depends on how much you can afford to invest in the infrastructure (or on how much your cloud provider allows). A Databricks compute cluster consists of a main driver node and one or more worker nodes. Each node runs on a cloud virtual machine. You can dynamically adjust the number of worker nodes to handle varying data processing workloads.

The platform allows for manual control over scaling decisions. Users can specify minimum and maximum cluster sizes, decide how long clusters should remain active, and set policies for auto-termination after idle periods. This flexibility is valuable for scenarios with complex, variable workloads, like real-time analytics or machine learning.

On the other hand, successfully scaling a Databricks cluster requires a certain level of technical expertise to configure and manage it effectively. For example, if auto-termination thresholds are not properly configured or workload predictions are inaccurate, clusters may remain active longer than necessary, leading to higher costs.
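To make the cost risk concrete, here is a small sketch. The field names in `cluster_spec` follow the Databricks Clusters API (`autoscale`, `min_workers`, `max_workers`, `autotermination_minutes`), but every value, the cluster name, and the per-node hourly rate are hypothetical, and the cost formula is a deliberate simplification.

```python
# Field names follow the Databricks Clusters API; all values are hypothetical.
cluster_spec = {
    "cluster_name": "nightly-etl",
    "autoscale": {"min_workers": 2, "max_workers": 8},
    "autotermination_minutes": 30,
}


def idle_cost(idle_minutes_per_day: float, nodes: int,
              rate_per_node_hour: float, days: int = 30) -> float:
    """Estimate what idle-but-running nodes cost over a billing period."""
    idle_hours = idle_minutes_per_day / 60 * days
    return idle_hours * nodes * rate_per_node_hour


# One driver plus the minimum number of workers stays up while the cluster idles.
idle_nodes = 1 + cluster_spec["autoscale"]["min_workers"]
RATE = 0.50  # assumed cost per node-hour, for illustration only

tight = idle_cost(cluster_spec["autotermination_minutes"], idle_nodes, RATE)
loose = idle_cost(120, idle_nodes, RATE)  # same cluster with a 2-hour threshold
print(f"30-minute threshold: ${tight:.2f}/month, 120-minute threshold: ${loose:.2f}/month")
```

Even in this toy scenario, where the cluster goes idle once a day, the looser threshold costs four times as much for work that never happens.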

As for Snowflake, scaling decisions are handled automatically: The platform starts or stops virtual warehouses based on their activity. A user, though, has control over the size of a warehouse. Each warehouse has one cluster, with the size ranging from X-Small (1 CPU node) to 6X-Large (512 CPU nodes). Isn’t it cute that they used a t-shirt size system to make it more fun?

Scaling a warehouse out means adding clusters of the same size. Let's say you selected a Large warehouse, have reached its capacity limit, and are just a little bit short. Only another cluster of the same Large size can be added automatically, which is an expensive way to cover a small shortfall.
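The jump in capacity described above can be shown with simple arithmetic. The sketch assumes that the node count doubles with each size step, which matches the endpoints quoted earlier (1 node for X-Small, 512 for 6X-Large); the helper functions are made up for this example.

```python
# Warehouse sizes from smallest to largest.
SIZES = ["X-Small", "Small", "Medium", "Large", "X-Large",
         "2X-Large", "3X-Large", "4X-Large", "5X-Large", "6X-Large"]


def nodes_per_cluster(size: str) -> int:
    """Assumed rule: every step up the size ladder doubles the node count."""
    return 2 ** SIZES.index(size)


def total_nodes(size: str, clusters: int) -> int:
    """Nodes in a warehouse made of `clusters` clusters of the same size."""
    return nodes_per_cluster(size) * clusters


before = total_nodes("Large", clusters=1)
after = total_nodes("Large", clusters=2)
print(f"Large warehouse: {before} -> {after} nodes (+{(after - before) / before:.0%})")
```

In other words, being "a little bit short" on a Large warehouse is answered with a doubling of compute, because the only available increment is another Large cluster.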

The good thing is that users can create multiple warehouses that operate independently, allowing for flexible scaling based on workload requirements.

By the way, it was Snowflake’s creators who had the brilliant idea to decouple compute and storage resources, enabling their independent scalability. Naturally, you process less data than you store. This innovation revolutionized data warehousing, allowing for more efficient handling of large-scale workloads.

Data streaming and real-time analytics

Databricks is built on top of the Apache Spark engine that provides a Structured Streaming component for near-real-time data processing. The Spark-based architecture ensures low latency and scalability, making it ideal for time-sensitive analytics, monitoring, and event-driven applications. You can use Structured Streaming with Unity Catalog to add data governance to streaming workloads.

There are also other methods to organize near-real-time data streaming. Auto Loader automatically detects new data files and incrementally loads and processes them. Delta Live Tables, in turn, support incremental data processing, including both ingestion (which can be handled by Auto Loader) and the subsequent transformation.
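The core idea behind incremental loading, processing only the files that arrived since the last run, can be sketched in plain Python. This is not the Auto Loader API, just a toy stand-in for the pattern: the class, the directory, and the file names are hypothetical, and the "checkpoint" is an in-memory set rather than durable state.

```python
import tempfile
from pathlib import Path


class IncrementalLoader:
    """Toy loader that processes each file in a directory only once."""

    def __init__(self, source_dir: Path) -> None:
        self.source_dir = source_dir
        self._seen: set[str] = set()  # checkpoint of already-loaded file names

    def load_new_files(self) -> list[str]:
        """Return the contents of files that appeared since the last call."""
        rows = []
        for path in sorted(self.source_dir.glob("*.json")):
            if path.name not in self._seen:
                rows.append(path.read_text())
                self._seen.add(path.name)
        return rows


with tempfile.TemporaryDirectory() as tmp:
    landing = Path(tmp)
    loader = IncrementalLoader(landing)

    (landing / "batch1.json").write_text('{"clicks": 3}')
    first = loader.load_new_files()   # picks up batch1.json

    (landing / "batch2.json").write_text('{"clicks": 5}')
    second = loader.load_new_files()  # picks up only batch2.json

print(first, second)
```

A production tool has to do the same bookkeeping at scale and survive restarts, which is the part a managed service takes off your hands.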

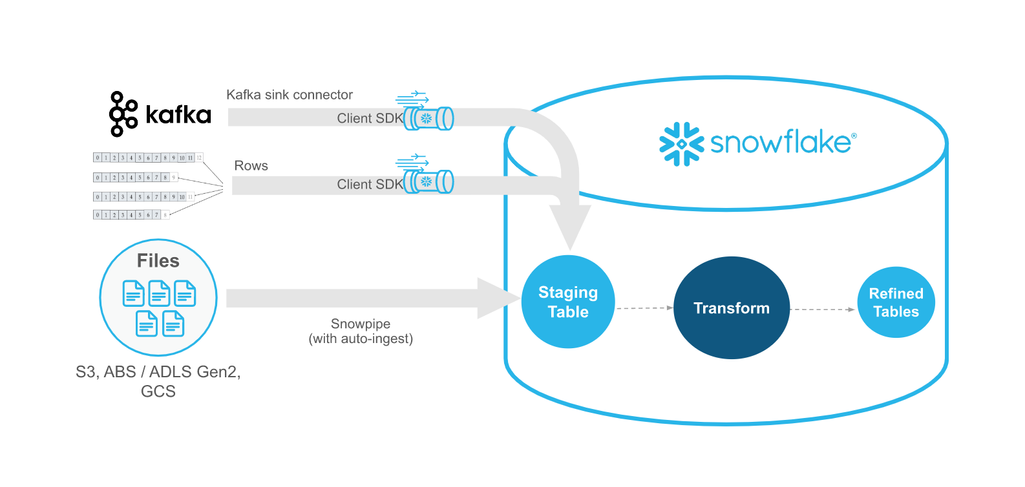

Snowflake supports continuous data ingestion through Snowpipe, which loads data in micro-batches for near-instant availability. In 2023, Snowflake introduced the Snowpipe Streaming API, pre-connected with Kafka, which enables real-time processing.

Snowpipe Streaming schema. Source: Snowflake.

To summarize, both platforms are suitable for high-volume, real-time analytics tasks. But if the data is simple (for instance, clicks and likes on a social media platform) and you need real-time access without transforming it too much, just to see the statistics, then it makes sense to use a smaller and less complex storage solution, like MongoDB.

Ecosystem: tools and extensions

Databricks leans heavily on open-source technology for flexibility and customizability. It is complex but offers greater control, enabling users to design highly customized data pipelines and environments tailored to specific needs, especially in AI and machine learning tasks. Databricks offers native support for frameworks like TensorFlow, PyTorch, Hugging Face, DeepSpeed, and MLlib; it also allows for graph processing.

Recently, Databricks has been successfully closing the gap between itself and Snowflake, which initially had a much wider range of pre-integrated tools. Now, Databricks has integrations with established data vendors, data governance tools, data warehouses, and data lakehouse platforms.

Snowflake’s strong suit is user-friendly, pre-packaged solutions, enabling organizations to quickly set up analytics with minimal effort. It also offers connectors to 105 data products, allowing you to:

- easily bring data from external sources like medical, weather, demographic, financial, and geospatial data hubs;

- sync or ingest data directly from Google Analytics, GitLab, Jira, Salesforce, Amazon Ads, and Google Ads;

- push and pull from MySQL and PostgreSQL; and more.

In general, it’s fair to say that Snowflake offers a highly controlled commercial ecosystem.

AI and ML capabilities

Although both platforms are now well-suited for machine learning, Databricks was the first in this realm. It is built with a strong focus on data science and machine learning. It simplifies designing end-to-end ML pipelines with tools for data engineering, model training, and deployment, making it ideal for collaborative machine learning workflows.

MLflow, an open-source tool developed by Databricks, is widely used for MLOps: It enables easy tracking, versioning, and deployment of models. Additionally, AutoML functionality allows for low-code, faster model deployment, making it accessible to non-experts.

Mosaic AI, a key component of Databricks' AI capabilities, is designed to optimize and streamline the entire machine learning lifecycle. This multitool unifies data, model training, and production environments in a single solution. Mosaic AI provides the distributed computation required for generative AI models, which can have billions of parameters. In 2023, Databricks released Dolly, one of the first open-source instruction-following LLMs licensed for commercial use, which is available for free download.

Machine learning functionality in Snowflake is not as mature as Databricks'. It relies more on third-party integrations for advanced ML tasks, including popular machine learning platforms like DataRobot and Amazon SageMaker.

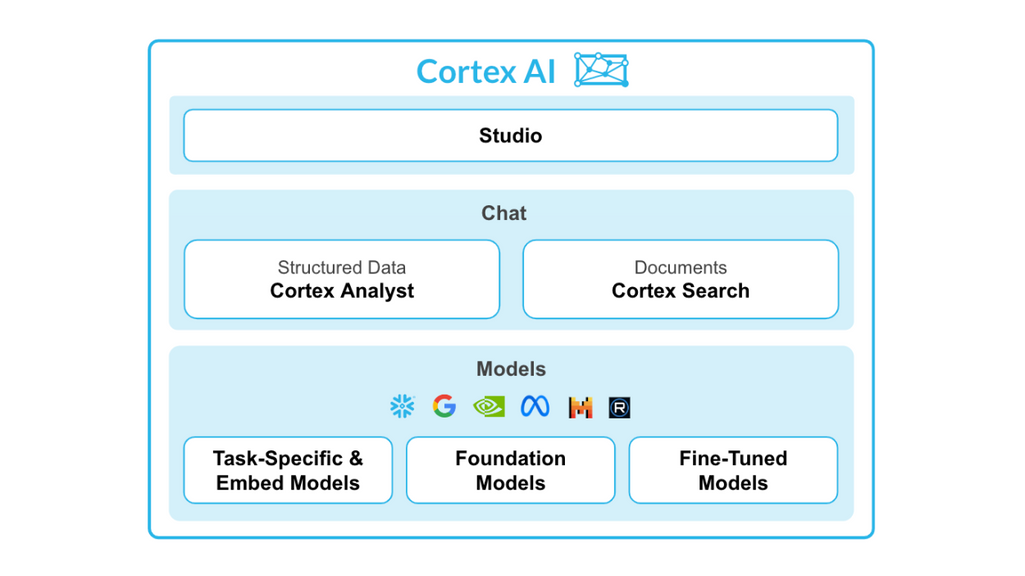

In 2023, Snowflake launched Cortex AI, a new service that simplifies data exploration and analysis by enabling businesses to make queries using natural language. It also allows for building AI-driven applications.

Cortex AI structure. Source: Snowflake

Cortex AI provides access to AI models, including industry-leading LLMs developed by Mistral, Reka, Meta, and Google, enabling you to customize them for your needs. In 2024, Snowflake followed the example of Databricks and presented its own LLM called Arctic, which can be downloaded from Hugging Face.

Pricing model

Databricks pricing is based on the compute usage. Storage, networking, and other related costs are included and vary depending on the tools you choose and the cloud service provider.

The Databricks pricing model is not that transparent, and it's not easy to find out how much you will effectively pay for some processes.

As for the trial period, Databricks only allows one 30-day trial per email, after which you either have to pay or use a different email.

Snowflake's architecture separates the storage and the compute layers, and they are priced separately as well. The platform charges a monthly fee for data storage based on a flat rate per terabyte. You also pay for compute usage each time the warehouse starts. This means you need to carefully manage how often you run processes; solutions that run continuously can become expensive in Snowflake.
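A quick calculation shows why run frequency matters. The sketch below adds a flat storage fee to compute billed per warehouse start. All rates are hypothetical, and the 60-second minimum charge per start is an assumption added here, not something stated above; check current pricing before relying on any of these numbers.

```python
def monthly_cost(storage_tb: float, storage_rate_per_tb: float,
                 runs_per_month: int, seconds_per_run: float,
                 credits_per_hour: float, price_per_credit: float) -> float:
    """Estimate a monthly bill as flat-rate storage plus usage-based compute."""
    # Assumption: each warehouse start is billed for at least 60 seconds.
    billed_seconds = max(seconds_per_run, 60) * runs_per_month
    compute = billed_seconds / 3600 * credits_per_hour * price_per_credit
    storage = storage_tb * storage_rate_per_tb
    return round(storage + compute, 2)


# Hypothetical rates: $23 per TB per month, 1 credit per hour, $3 per credit.
hourly_job = monthly_cost(5, 23, runs_per_month=720, seconds_per_run=90,
                          credits_per_hour=1, price_per_credit=3)
always_on = monthly_cost(5, 23, runs_per_month=1, seconds_per_run=30 * 24 * 3600,
                         credits_per_hour=1, price_per_credit=3)
print(f"Hourly 90-second job: ${hourly_job:,.2f}; always-on warehouse: ${always_on:,.2f}")
```

With identical storage, the always-on warehouse in this example costs more than ten times as much as the hourly job, because compute dominates the bill.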

As with any usage-based cloud platform, costs can escalate rapidly if not carefully managed or monitored. Snowflake has an advantage here since its pricing model is easy to understand, with separate charges for storage and computing. Snowflake’s detailed documentation allows you to estimate costs based on data size, job frequency, and duration. Moreover, you see spending in the admin panel almost on the go. You can also analyze costs by user roles, making it simple to track expenses for different teams, such as developers or analysts. This real-time cost insight helps with budget management and project planning.

Snowflake offers trial versions that you can repeatedly use with the same email, allowing you to test and thoroughly learn the platform without having to pay.

Databricks or Snowflake: How to choose between the two data titans

Overall, Snowflake and Databricks are both strong data platforms for BI and analytics. Snowflake is a reliable option for standard data transformation and analysis, especially for SQL users. Databricks seems a better choice for machine learning, AI, and data science, particularly due to its support for all types of data.

However, the type of data storage is no longer a crucial distinction when selecting between these two data giants since Snowflake can easily connect to external data lakes and databases (including Databricks).

The best choice for your business depends on your data strategy, use cases, data needs, and workload scale. These questions can help you decide.

- How complex are your data transformations? Do you have in-house expertise in Python, Scala, or other programming languages, or will you rely mostly on SQL-based workflows? Obviously, Snowflake is better for non-tech users.

- Does your business rely on machine learning and Gen AI? Then, Databricks will probably be a better option—at least so far.

- Do you need robust business intelligence? Snowflake wins this category.

However, the most essential factor is the team's skill set. Switching to Snowflake is far easier for data analysts accustomed to relational databases, while Databricks is more complex.

On the other hand, if you have a team of developers and data engineers who've written services and applications and used more versatile tools than only SQL, it will be easier for them to grasp Databricks.

Of course, you can always find new people with new skills, but it takes time. So, look at what you have on board and make the most of it.

Olga is a tech journalist at AltexSoft, specializing in travel technologies. With over 25 years of experience in journalism, she began her career writing travel articles for glossy magazines before advancing to editor-in-chief of a specialized media outlet focused on science and travel. Her diverse background also includes roles as a QA specialist and tech writer.
