You’ve certainly noticed that the image above is generated by AI—specifically, Midjourney. However, text-to-image translation is just one of many examples of what generative AI models do nowadays. These digital creators are helping with tedious tasks, designing, writing, and building everything from websites to music tracks faster than we can spell algorithm.

Neural nets can create images, audio, and video content that not every person could produce.

Generative AI's impact on businesses in different fields is huge and continues to grow. According to a recent Gartner survey, business owners reported measurable value from GenAI innovations: on average, a 16 percent revenue increase, 15 percent cost savings, and a 23 percent productivity improvement.

It would be a big mistake on our part to not pay due attention to the topic. So, this post will explain generative AI models, how they work, and their practical applications in different areas.

Generative AI explained in less than 15 minutes

What is generative AI?

Generative AI refers to unsupervised and semi-supervised machine learning algorithms that enable computers to use existing content like text, audio and video files, images, and even code to create new possible content. The main idea is to generate completely original artifacts that would look like the real deal.

Put simply, generative AI allows computers to abstract the underlying patterns in the input data so that the model can generate new content.

Several generative AI models are widely used today, and we’re going to examine four of them.

- Generative Adversarial Networks, or GANs, are technologies that can create visual and multimedia artifacts from both imagery and textual input data.

- Transformer-based models comprise technologies such as Generative Pre-trained Transformer (GPT) language models that can translate and use information gathered on the Internet to create textual content.

- Variational Autoencoders (VAEs) are used in tasks like image generation and anomaly detection.

- Diffusion models excel in creating realistic images and videos from random noise (random sets of data points).

To understand the idea behind generative AI, we need to examine the distinctions between discriminative and generative modeling.

How does gen AI work: discriminative vs generative modeling

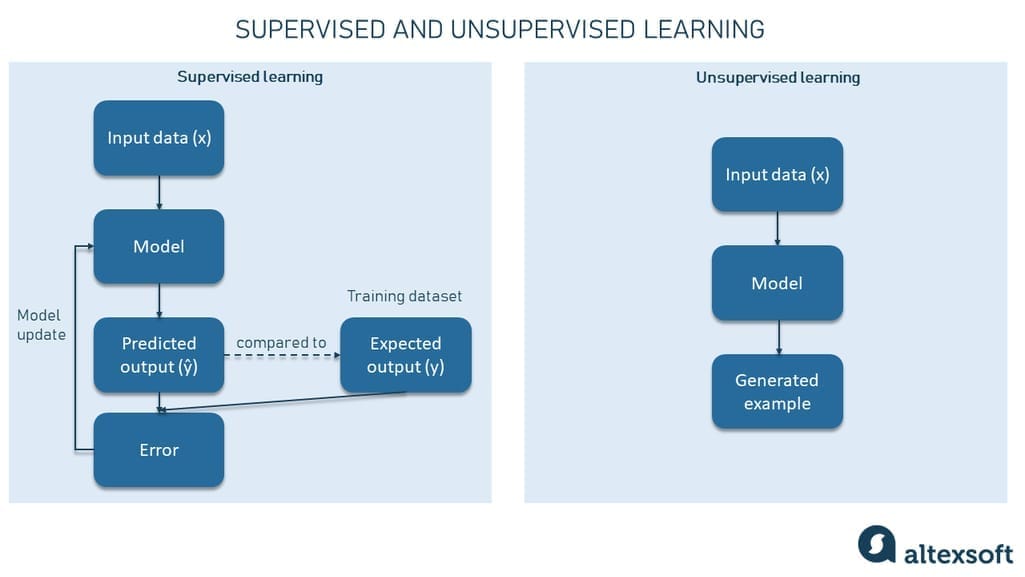

Discriminative modeling is used to classify existing data points (e.g., images of cats and guinea pigs into respective categories). It mostly belongs to supervised machine learning tasks.

Generative modeling tries to understand the dataset structure and generate similar examples (e.g., creating a realistic image of a guinea pig or a cat). It mostly belongs to unsupervised and semi-supervised machine learning tasks.

The more neural networks intrude on our lives, the more the discriminative and generative modeling areas grow. Let’s discuss each in more detail.

Discriminative modeling

Most machine learning models are used to make predictions. Discriminative algorithms try to classify input data given some set of features and predict a label or a class to which a certain data example (observation) belongs.

Say we have training data that contains multiple images of cats and guinea pigs. These are also called samples. Each sample comes with a set of features (X) and a class label (Y), which, in our case, can be "cats" or "guinea pigs." We also have a neural net aiming to understand how to discriminate between the two classes and predict the probability of a given sample belonging to one of them.

During training, each prediction (ŷ) is compared to the actual label (Y). Based on the difference between the two values, the model gradually learns the relationships between features and classes and corrects its results.

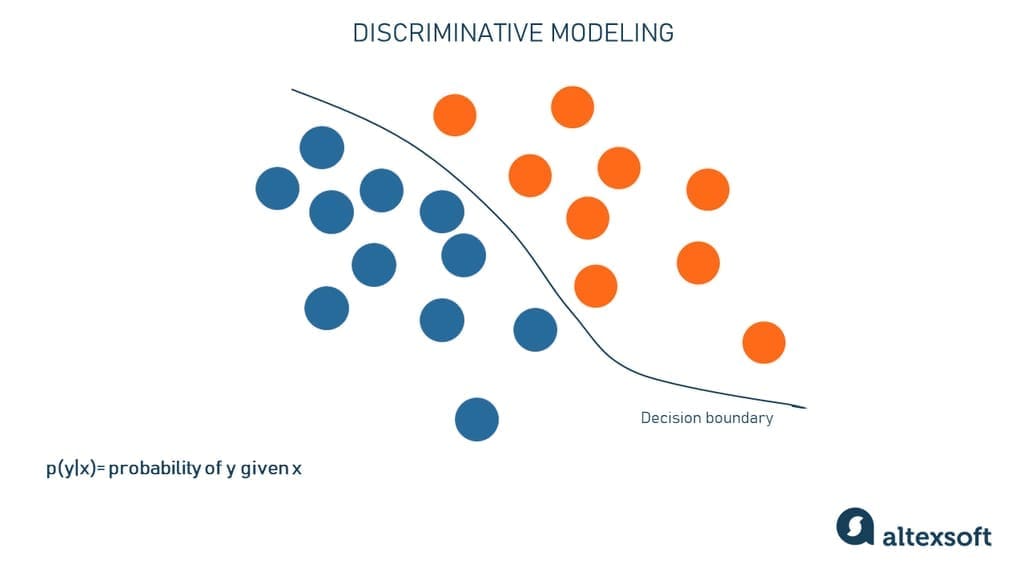

Let’s limit the difference between cats and guinea pigs to just two features in the feature set X (for example, “the presence of the tail” and “the shape of the ears”). Since each feature is a dimension, it’ll be easy to present them in a 2-dimensional data space. In the viz above, the blue dots are guinea pigs, and the orange dots are cats. The line depicts the decision boundary that the discriminative model learned to separate cats from guinea pigs based on those features.

When this model is already trained, it literally checks on which side of a decision boundary a new picture falls. To do it, the model, in some sense, just “recalls” what the object looks like from what it has already seen.

To recap, the discriminative model kind of compresses information about the differences between cats and guinea pigs, without trying to understand what a cat is and what a guinea pig is.
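To make the idea concrete, here is a minimal sketch of a discriminative model: a logistic regression trained by gradient descent on two features. The data, the feature meanings, and the hyperparameters are all made up for illustration, and the sketch uses only the Python standard library to stay dependency-free.

```python
import math
import random

random.seed(0)

# Hypothetical toy data: two numeric features per animal (say, a "tail" score
# and an "ear shape" score, in made-up units). Label 1 = cat, 0 = guinea pig.
def make_samples(center, label, n=50):
    return [([random.gauss(center[0], 0.5), random.gauss(center[1], 0.5)], label)
            for _ in range(n)]

data = make_samples((3.0, 3.0), 1) + make_samples((1.0, 1.0), 0)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_proba(w, b, x):
    """Predicted probability (y-hat) that sample x is a cat."""
    return sigmoid(w[0] * x[0] + w[1] * x[1] + b)

# Stochastic gradient descent on the cross-entropy loss.
w, b, lr = [0.0, 0.0], 0.0, 0.1
for epoch in range(200):
    for x, y in data:
        error = predict_proba(w, b, x) - y  # difference between y-hat and Y
        w[0] -= lr * error * x[0]
        w[1] -= lr * error * x[1]
        b -= lr * error

# The learned decision boundary is the line w[0]*x1 + w[1]*x2 + b = 0.
accuracy = sum((predict_proba(w, b, x) > 0.5) == (y == 1) for x, y in data) / len(data)
print(f"training accuracy: {accuracy:.2f}")
```

Note that the model stores only three numbers (two weights and a bias). That is enough to tell which side of the line a new sample falls on, but not enough to describe what a cat looks like.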

Generative modeling

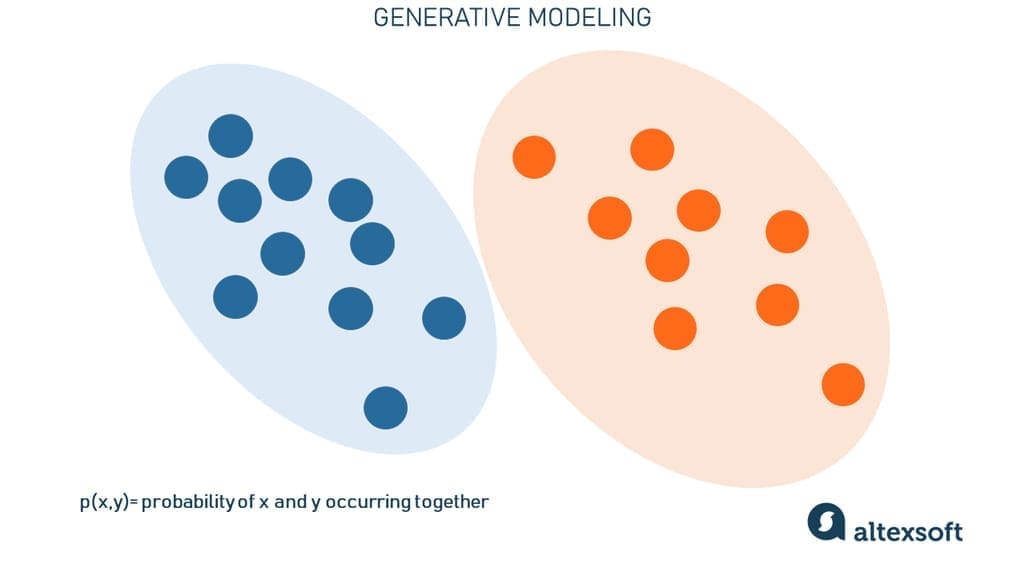

Generative algorithms do the complete opposite: instead of predicting a label given some features, they try to predict features given a certain label. Discriminative algorithms care about the relations between X and Y; generative models care about how you get X from Y.

Mathematically, generative modeling allows us to capture the probability of x and y occurring together. It focuses on learning features and their relations to get an idea of what makes cats look like cats and guinea pigs look like guinea pigs. As a result, such algorithms not only distinguish the two animals but also recreate or generate their images.
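As a rough illustration, the sketch below fits one of the simplest generative models: a prior p(Y) plus an independent Gaussian per feature for p(X | Y), so that p(X, Y) = p(Y) · p(X | Y). The toy data and the Gaussian assumption are ours, not something the models discussed later rely on. The same fitted model can both classify a sample and generate new feature values for a chosen label.

```python
import math
import random

random.seed(1)

# Hypothetical training set: (features, label) with two made-up features.
train = (
    [([random.gauss(3.0, 0.5), random.gauss(3.0, 0.5)], "cat") for _ in range(100)]
    + [([random.gauss(1.0, 0.5), random.gauss(1.0, 0.5)], "guinea pig") for _ in range(100)]
)

def fit(samples):
    """Estimate p(y) and a per-feature Gaussian p(x | y) for each class."""
    model = {}
    for label in {y for _, y in samples}:
        rows = [x for x, y in samples if y == label]
        stats = []
        for column in zip(*rows):
            mean = sum(column) / len(column)
            var = sum((v - mean) ** 2 for v in column) / len(column)
            stats.append((mean, math.sqrt(var)))
        model[label] = {"prior": len(rows) / len(samples), "stats": stats}
    return model

def log_joint(model, x, label):
    """log p(x, y) = log p(y) + sum over features of log p(x_i | y)."""
    total = math.log(model[label]["prior"])
    for value, (mean, std) in zip(x, model[label]["stats"]):
        total += -0.5 * math.log(2 * math.pi * std ** 2) - (value - mean) ** 2 / (2 * std ** 2)
    return total

def classify(model, x):
    """Pick the label with the highest joint probability."""
    return max(model, key=lambda label: log_joint(model, x, label))

def generate(model, label):
    """Sample new features X for a given label Y."""
    return [random.gauss(mean, std) for mean, std in model[label]["stats"]]

model = fit(train)
new_cat = generate(model, "cat")
print(classify(model, [2.8, 3.1]), new_cat)
```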

You may wonder, “Why do we need discriminative algorithms at all?” The fact is that they are easier to monitor and more explainable — in other words, you can understand why the model comes to a certain conclusion.

Besides, it doesn’t matter how the data was generated in many cases — we only need to know the category it belongs to, and that’s exactly where discriminative models excel. Think of sentiment analysis in hotel reviews — its goal is to detect whether a comment is positive or negative, not to generate fake reviews. Discriminative models are still the go-to option for image recognition, document classification, fraud detection, and many other daily business tasks.

Generative AI has business applications beyond those covered by discriminative models. Let’s see what general models there are to use for a wide range of problems that get impressive results.

Generative AI models and algorithms

Various algorithms and related models have been developed and trained to create new, realistic content from existing data. Some of the models, each with distinct mechanisms and capabilities, are at the forefront of advancements in fields such as image generation, text translation, and data synthesis. Some, like GANs, are already a bit outdated but still in use.

Generative adversarial networks

A generative adversarial network, or GAN, is a machine learning framework that pits two neural networks, a generator and a discriminator, against each other, hence the “adversarial” part. The contest between them is a zero-sum game, where one agent's gain is another agent's loss.

GANs were invented by Ian Goodfellow and his colleagues at the University of Montreal in 2014. They described the GAN architecture in a paper titled “Generative Adversarial Networks.” Since then, there has been a lot of research and practical application. GANs were the most popular generative AI algorithm until the recent success of diffusion-based models and transformers (you’ll read about them below).

GAN architecture.

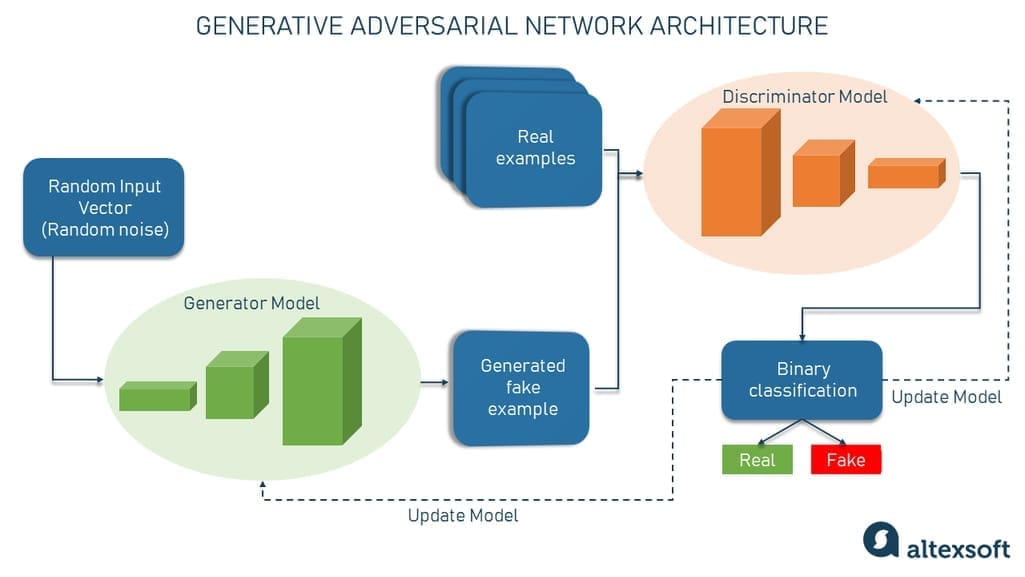

In their architecture, GANs have two deep learning models:

- generator: a neural net whose job is to create fake samples from a random vector (a list of randomly sampled numbers); and

- discriminator: a neural net whose job is to take a given sample and decide if it’s a fake from a generator or a real observation.

The discriminator is basically a binary classifier that returns a probability, a number between 0 and 1. The closer the result is to 0, the more likely the sample is fake. Conversely, numbers closer to 1 indicate a higher likelihood that the sample is real.

Both a generator and a discriminator are often implemented as CNNs (Convolutional Neural Networks), especially when working with images.

So, the adversarial nature of GANs lies in a game theoretic scenario in which the generator network must compete against the adversary. The generator network directly produces fake samples. Its adversary, the discriminator network, attempts to distinguish between samples drawn from the training data and those drawn from the generator. In this scenario, there’s always a winner and a loser. Whichever network fails is updated while its rival remains unchanged.

GANs will be considered successful when a generator creates a fake sample that is so convincing that it can fool a discriminator and humans. But the game doesn't stop there; it’s time for the discriminator to be updated and get better. Repeat.
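The tug-of-war can be expressed with two loss functions computed from the discriminator's probabilities. The sketch below uses binary cross-entropy, one common formulation; the probability values are hypothetical numbers chosen for illustration, not outputs of a trained network.

```python
import math

def bce(prob, target):
    """Binary cross-entropy for one prediction; prob is clipped to avoid log(0)."""
    prob = min(max(prob, 1e-7), 1 - 1e-7)
    return -(target * math.log(prob) + (1 - target) * math.log(1 - prob))

def discriminator_loss(d_real, d_fake):
    """Low when real samples score near 1 and generated samples score near 0."""
    real_part = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_part = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_part + fake_part

def generator_loss(d_fake):
    """Low when the discriminator scores generated samples near 1 (it is fooled)."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs at two points in training.
early = {"d_real": [0.9, 0.8, 0.95], "d_fake": [0.1, 0.2, 0.05]}
late = {"d_real": [0.6, 0.55, 0.5], "d_fake": [0.5, 0.45, 0.55]}

for name, outputs in (("early", early), ("late", late)):
    print(name,
          round(discriminator_loss(outputs["d_real"], outputs["d_fake"]), 3),
          round(generator_loss(outputs["d_fake"]), 3))
```

In the "early" case the discriminator spots fakes easily, so its loss is low and the generator's is high. In the "late" case the scores for fakes hover around 0.5, meaning the discriminator is close to guessing.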

Transformer-based models

First described in a 2017 Google paper, the transformer architecture is a machine learning framework that is highly effective for natural language processing (NLP) tasks. It learns to find patterns in sequential data like written text or spoken language. Based on the context, the model can predict the next element of the series, for example, the next word in a sentence. It’s perfect for translation and text generation.

Transformer-based architecture.

Let's take a step-by-step look at how a transformer-based model works.

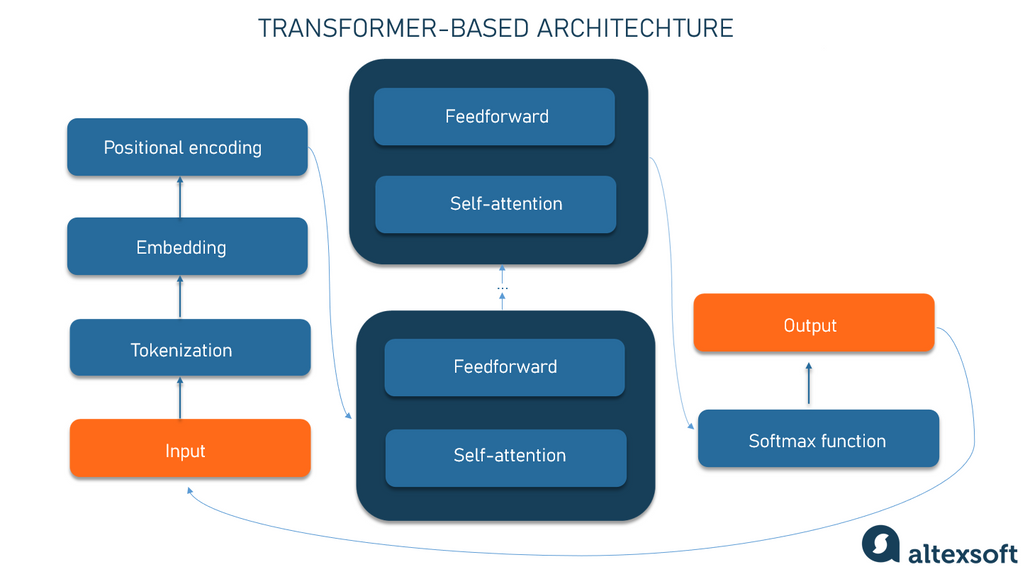

Tokenization. The input (let's say a phrase) is broken down into tokens (words or subwords, like “unbeliev” from “unbelievable”).

Embedding. Input tokens are converted into numerical vectors called embeddings. Each token is represented by a unique vector (a set of real-valued numbers). A vector represents the semantic characteristics of a word, with similar words having vectors that are close in value. For example, the word crown might be represented by the vector [3,103,35], while apple could be [6,7,17], and pear might look like [6.5,6,18]. Of course, these vectors are just illustrative; the real ones have many more dimensions.
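To see what "close in value" means, the short sketch below compares the illustrative vectors from the paragraph above using two standard measures, cosine similarity and Euclidean distance. Real systems apply the same measures to vectors with hundreds or thousands of dimensions.

```python
import math

# The illustrative three-dimensional vectors from the text.
embeddings = {
    "crown": [3, 103, 35],
    "apple": [6, 7, 17],
    "pear": [6.5, 6, 18],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def euclidean_distance(a, b):
    """Straight-line distance between two vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

for other in ("pear", "crown"):
    print("apple vs", other,
          round(cosine_similarity(embeddings["apple"], embeddings[other]), 3),
          round(euclidean_distance(embeddings["apple"], embeddings[other]), 2))
```

By both measures, apple sits much closer to pear than to crown, which matches the intuition that the two fruits are semantically related.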

Read our article about vector databases to learn more about embedding.

Positional encoding. To understand the text, the order of words in a sentence is as important as the words themselves. So, at this stage, information about the position of each token within a sequence is added in the form of another vector, which is summed with the input embedding. The result is a vector reflecting the word's initial meaning and position in the sentence.
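One well-known way to build the positional vector is the sinusoidal scheme from the original transformer paper; other models learn positional vectors instead. The sketch below assumes the sinusoidal variant and a hypothetical 4-dimensional token embedding, and adds the two element-wise.

```python
import math

def positional_encoding(position, d_model):
    """Sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    encoding = []
    for i in range(d_model):
        angle = position / (10000 ** ((2 * (i // 2)) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding

def add_position(embedding, position):
    """Element-wise sum of a token embedding and its positional vector."""
    pe = positional_encoding(position, len(embedding))
    return [e + p for e, p in zip(embedding, pe)]

# Hypothetical embedding of one token, placed at two different positions.
token = [0.2, -0.1, 0.4, 0.3]
at_start = add_position(token, 0)
at_third = add_position(token, 2)
print(at_start)
print(at_third)
```

The same token ends up with different input vectors depending on where it appears, which is how the network can tell word order apart.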

It’s then fed to the transformer neural network, which consists of two blocks.

The self-attention mechanism computes contextual relationships between tokens by weighing the importance of each element in a series and determining how strong the connections between them are. Mathematically, the relations between words in a phrase look like distances and angles between vectors in a multidimensional vector space. This mechanism is able to detect the subtle ways in which even distant data elements in a series influence and depend on each other.

For example, in the sentences “I poured water from the pitcher into the cup until it was full” and “I poured water from the pitcher into the cup until it was empty,” a self-attention mechanism can distinguish the meaning of “it”: in the former case, the pronoun refers to the cup; in the latter, to the pitcher.
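The core computation can be sketched as scaled dot-product attention. To keep it short, this version uses the input vectors directly as queries, keys, and values; a real transformer first passes them through learned projection matrices and runs several attention heads in parallel. The 2-dimensional token vectors are hypothetical and were picked by hand so that "it" lies near "cup".

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product attention with queries = keys = values = inputs."""
    scale = math.sqrt(len(vectors[0]))
    weights, outputs = [], []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / scale for key in vectors]
        row = softmax(scores)
        weights.append(row)
        # Each output is a weighted average of all token vectors.
        outputs.append([sum(w * v[d] for w, v in zip(row, vectors))
                        for d in range(len(query))])
    return weights, outputs

tokens = ["pitcher", "cup", "it"]
vectors = [[2.0, 0.0], [0.0, 2.0], [0.2, 1.8]]  # hand-picked for illustration
weights, outputs = self_attention(vectors)
it_weights = dict(zip(tokens, weights[2]))
print(it_weights)
```

Here the token "it" assigns more weight to "cup" than to "pitcher" simply because their vectors point in similar directions. In a trained model, such affinities are learned from data rather than set by hand.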

The feedforward network refines token representations using knowledge about the word it learned from training data (but to be honest, it’s challenging even for scientists to explain exactly what it does).

The self-attention and feedforward stages are repeated multiple times through stacked layers, allowing the model to capture increasingly complex patterns before generating the final output.

The softmax function is used at the end to calculate the likelihood of different outputs and choose the most probable option.

Then the generated output is appended to the input, and the whole process repeats itself.
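The last two steps (softmax, then feeding the output back in) can be sketched as a loop. The "model" below is a hypothetical lookup table of raw scores keyed by the last token only; an actual transformer computes those scores from the entire sequence. Picking the single most probable token, as done here, is called greedy decoding.

```python
import math

def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical stand-in for a trained network: raw scores (logits) over a
# tiny vocabulary, keyed by the most recent token.
VOCAB = ["the", "cup", "is", "full", "<end>"]
TOY_LOGITS = {
    "the": [0.1, 2.5, 0.3, 0.2, 0.0],
    "cup": [0.2, 0.1, 2.8, 0.4, 0.1],
    "is": [0.3, 0.2, 0.1, 2.2, 0.5],
    "full": [0.1, 0.1, 0.1, 0.1, 3.0],
}

def next_token(sequence):
    """Choose the most probable next token."""
    probs = softmax(TOY_LOGITS[sequence[-1]])
    best = max(range(len(VOCAB)), key=lambda i: probs[i])
    return VOCAB[best]

def generate(prompt, max_new_tokens=10):
    sequence = list(prompt)
    for _ in range(max_new_tokens):
        token = next_token(sequence)
        if token == "<end>":
            break
        sequence.append(token)  # the output becomes part of the next input
    return sequence

result = generate(["the"])
print(result)  # ['the', 'cup', 'is', 'full']
```

Production systems often sample from the probability distribution instead of always taking the top option, which makes the output less repetitive.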

Diffusion models

The diffusion model is a generative model that creates new data, such as images or sounds, by mimicking the data on which it was trained.

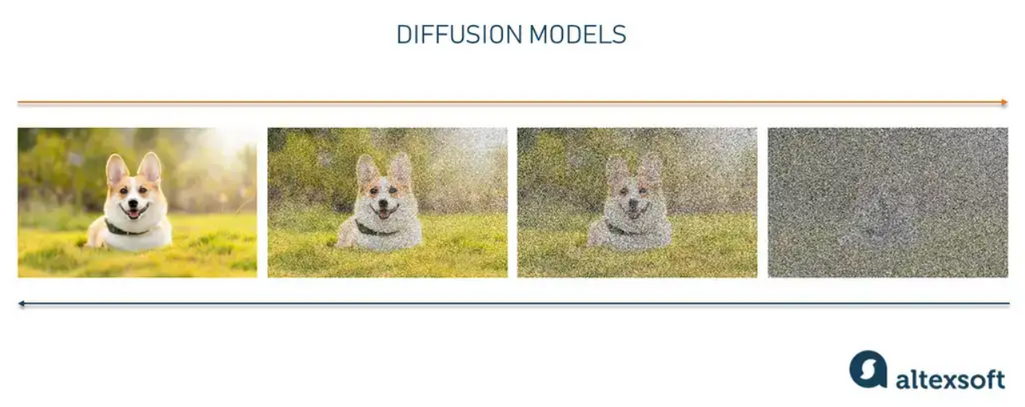

A corgi turns into random noise… and back into a corgi.

Think of the diffusion model as an artist-restorer who studied paintings by old masters and now can paint their canvases in the same style. The diffusion model does roughly the same thing in three main stages.

Direct (forward) diffusion gradually introduces noise into the original image until the result is simply a chaotic set of pixels. The process is akin to physical diffusion, from which the model gets its name.

If we return to our analogy of the artist-restorer, direct diffusion is handled by time, covering the painting with a network of cracks, dust, and grease; sometimes, the painting is reworked, adding certain details and removing others.
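In code, the noising process is a simple loop. The sketch below assumes Gaussian noise and a linearly increasing noise schedule, which is one common choice; the 8-value "image", the number of steps, and the schedule endpoints are illustrative assumptions.

```python
import math
import random

random.seed(0)

def forward_diffusion(pixels, betas):
    """Return the list of progressively noisier versions of `pixels`."""
    steps = [list(pixels)]
    for beta in betas:
        previous = steps[-1]
        # Shrink the signal slightly and mix in a little fresh Gaussian noise.
        noisy = [math.sqrt(1 - beta) * p + math.sqrt(beta) * random.gauss(0, 1)
                 for p in previous]
        steps.append(noisy)
    return steps

T = 1000  # number of noising steps (assumed)
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]  # linear schedule

image = [0.0, 0.25, 0.5, 0.75, 1.0, 0.75, 0.5, 0.25]  # hypothetical 8-pixel "image"
steps = forward_diffusion(image, betas)

# Fraction of the original signal that survives all T steps.
signal_kept = math.prod(math.sqrt(1 - beta) for beta in betas)
print(f"signal kept after {T} steps: {signal_kept:.4f}")
```

After all the steps, less than one percent of the original signal remains, so the final version is practically indistinguishable from pure noise.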

The learning stage is like studying a painting to grasp the old master's original intent. The model carefully analyzes how the added noise alters the data. It meticulously traces the path from the original image to its chaotic version, learning to distinguish between the original and the distorted data at each step. This understanding allows the model to effectively reverse the process later on.

After learning, the model can reconstruct the distorted data via a process called reverse diffusion. It starts from a noise sample and removes the noise step by step, the same way our artist gets rid of contaminants and later paint layers. The result is new data that is close to the original: say, a picture of a dog, but not exactly the same dog as in the original image.

This technique enables diffusion models to generate realistic images, sounds, and other data types. Midjourney and DALL-E are two well-known image-generation tools based on diffusion models.

Variational Autoencoders (VAEs)

VAE-based models were first introduced in 2013 by Diederik P. Kingma and Max Welling and have since become a popular type of generative model.

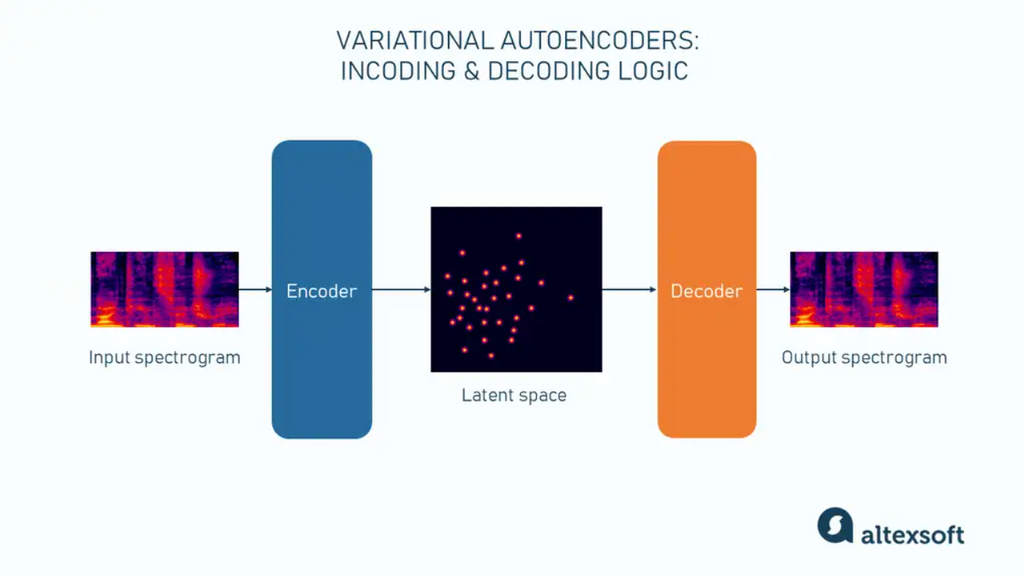

How a VAE works.

A variational autoencoder is an unsupervised neural network consisting of two parts: an encoder and a decoder. During the training stage, the encoder learns to compress input data into a simplified representation (the so-called latent space, which is lower-dimensional than the original data) that captures only the essential features of the initial input. Each data point isn't represented by a single value but by a probability distribution over values. This built-in randomness is what gives the autoencoder its "variational" characteristic.

Think of latent representations as the DNA of an organism. DNA holds the core instructions needed to build and maintain a living being. Similarly, latent representations contain the fundamental elements of data, allowing the model to regenerate the original information from this encoded essence. But if you change the DNA molecule just a little bit, you get a completely different organism. For example, did you know that human and chimpanzee DNA is 98-99 percent identical?

A decoder takes latent representation as input and reverses the process. But it doesn’t reconstruct the exact input; instead, it creates something new resembling typical examples from the dataset.
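The data flow can be sketched as follows. The encoder and decoder here are hypothetical fixed arithmetic standing in for trained neural networks, so only the structure is meaningful: the encoder outputs a mean and a variance per latent dimension, a latent vector is sampled from that distribution, and the decoder expands it back to the input size.

```python
import math
import random

random.seed(0)

def encode(x):
    """Hypothetical encoder: maps a 4-value input to a 2-D latent distribution.

    A trained VAE would compute the mean and log-variance with a neural net.
    """
    mean = [(x[0] + x[1]) / 2, (x[2] + x[3]) / 2]
    log_var = [-2.0, -2.0]  # small fixed variance, assumed for illustration
    return mean, log_var

def sample_latent(mean, log_var):
    """Draw z = mean + std * eps, with eps taken from a standard normal."""
    return [m + math.exp(0.5 * lv) * random.gauss(0, 1)
            for m, lv in zip(mean, log_var)]

def decode(z):
    """Hypothetical decoder: expands the 2-D latent vector back to 4 values."""
    return [z[0], z[0], z[1], z[1]]

x = [0.9, 1.1, 0.1, -0.1]
mean, log_var = encode(x)
samples = [decode(sample_latent(mean, log_var)) for _ in range(3)]
for s in samples:
    print([round(v, 2) for v in s])
```

Each run through the sampling step gives a slightly different output that still resembles the input, which is the behavior the paragraph above describes.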

VAEs excel in tasks like image and sound generation, as well as image denoising.

Types of generative AI applications with examples and use cases

Generative AI has a plethora of practical applications in different domains such as computer vision where it can enhance the data augmentation technique. The potential of generative model use is truly limitless. Below you will find a few prominent use cases that already present mind-blowing results. Or watch our video on the topic.

How businesses use generative AI

Image generation

The most prominent use case of generative AI is creating fake images that look like real ones. For example, in 2017, Tero Karras — a Distinguished Research Scientist at NVIDIA Research — published “Progressive Growing of GANs for Improved Quality, Stability, and Variation.”

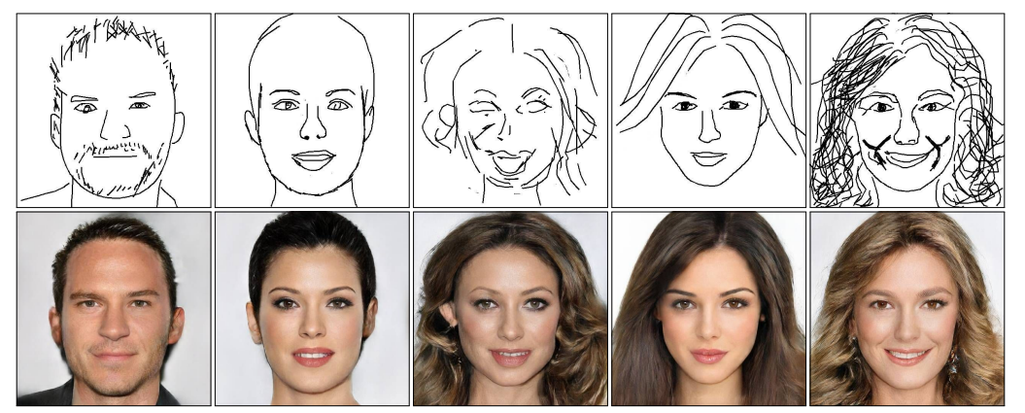

Generated realistic images of people that don’t exist. Source: Progressive Growing of GANs for Improved Quality, Stability, and Variation, 2017

In this paper, he demonstrated the generation of realistic photographs of human faces. The model was trained on the input data containing real pictures of celebrities and then it produced new realistic photos of people's faces that had some features of celebrities, making them seem familiar. Say, the girl in the second top right picture looks a bit like Beyoncé but, at the same time, we can see that it’s not the pop singer.

Image-to-image translation

As the name suggests, generative AI transforms one type of image into another. There is an array of image-to-image translation variations.

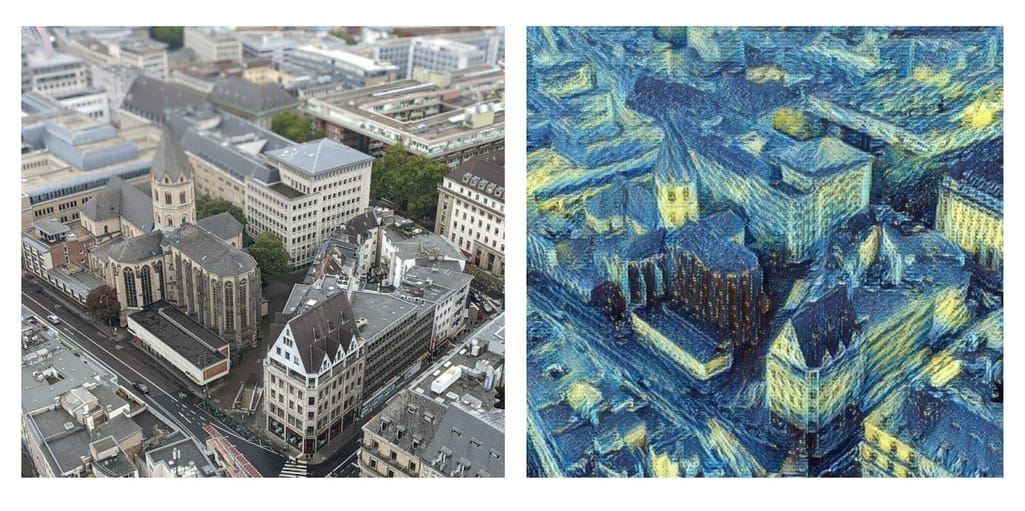

Style transfer. This task involves extracting the style from a famous painting and applying it to another image. For example, we can take a real picture we made in Cologne, Germany, and convert it into the Van Gogh painting style.

Sketches-to-realistic images. Here, a user starts with a sparse sketch and the desired object category, and the network then recommends its plausible completion(s) and shows a corresponding synthesized image.

One of the papers discussing this technology is “DeepFaceDrawing: Deep Generation of Face Images from Sketches.” It was published in 2020 by a team of researchers from China. It describes how simple portrait sketches can be transformed into realistic photos of people.

MRI into CT scans. In healthcare, one example is transforming an MRI image into a CT scan, because some therapies require images of both modalities. But CT, especially when high resolution is needed, requires a fairly high dose of radiation to the patient. Therefore, you can perform only an MRI and synthesize the CT image from it.

Text-to-image translation

This approach implies producing various images (realistic, painting-like, etc.) from textual descriptions of simple objects. Remember our featured image? That’s an example of text-to-image translation. The most popular AI image generators are the aforementioned Midjourney, DALL-E from OpenAI, and Stable Diffusion.

To make the picture you see below, we provided Stable Diffusion with the following word prompts: a dream of time gone by, oil painting, red blue white, canvas, watercolor, koi fish, and animals. The result isn’t perfect, yet quite impressive, taking into account that we didn’t have access to the original beta version with a wider set of features but used a third-party tool.

The results of all these programs are pretty similar. However, some users note that, on average, Midjourney draws a little more expressively, and Stable Diffusion follows the request more clearly at default settings.

Text-to-speech (TTS)

Researchers have also used GANs to produce synthesized speech from text input. Advanced deep learning technologies like Amazon Polly and models developed by DeepMind synthesize natural-sounding human speech. Such models operate directly on character or phoneme input sequences and produce raw speech audio outputs.

This ability to generate lifelike speech is a foundational layer for AI agents that need to communicate verbally with users. For example, an agent managing a smart home can use TTS to notify users of schedule changes or security alerts.

Audio generation

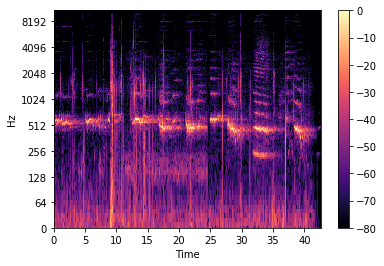

Generative AI can also process audio data. To do this, you first need to convert audio signals to image-like 2-dimensional representations called spectrograms. This allows us to use algorithms specifically designed to work with images like CNNs for our audio-related task.

A spectrogram example. Source: Towards Data Science

Using this approach, you can transform people's voices or change the style/genre of a piece of music. For example, you can “transfer” a piece of music from a classical to a jazz style.
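For a sense of what the conversion involves, here is a minimal sketch that builds a magnitude spectrogram with a naive Fourier transform over short, overlapping frames. The test tone, sample rate, and frame sizes are illustrative assumptions; real pipelines use an optimized FFT and often a mel frequency scale.

```python
import cmath
import math

def spectrogram(signal, frame_size=64, hop=32):
    """Magnitude spectrogram: rows are time frames, columns are frequency bins."""
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = signal[start:start + frame_size]
        # A Hann window reduces leakage at the frame edges.
        windowed = [s * (0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1)))
                    for n, s in enumerate(frame)]
        # Naive discrete Fourier transform, keeping non-negative frequencies.
        bins = []
        for k in range(frame_size // 2 + 1):
            total = sum(w * cmath.exp(-2j * math.pi * k * n / frame_size)
                        for n, w in enumerate(windowed))
            bins.append(abs(total))
        frames.append(bins)
    return frames

# Hypothetical test tone: one second sampled at 512 Hz, playing 64 Hz for the
# first half and 128 Hz for the second half.
sample_rate = 512
signal = [math.sin(2 * math.pi * (64 if n < 256 else 128) * n / sample_rate)
          for n in range(sample_rate)]

spec = spectrogram(signal)
# Strongest frequency bin in each frame; each bin spans sample_rate / 64 = 8 Hz.
peak_bins = [max(range(len(row)), key=lambda k: row[k]) for row in spec]
print(peak_bins)
```

The result is a 2-dimensional grid (time by frequency) in which the jump from the lower to the higher tone shows up as a shift of the brightest bin, exactly the kind of pattern an image model can pick up.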

In 2022, Apple acquired the British startup AI Music to enhance Apple’s audio capabilities. The technology developed by the startup allows for creating soundtracks using free public music processed by the AI algorithms of the system. The main task is to perform audio analysis and create "dynamic" soundtracks that can change depending on how users interact with them. For example, the music may change according to the atmosphere of a game scene or the intensity of the user's workout in the gym.

Read our article on sound generation to learn more.

Video generation

Video is a set of moving visual images. So, logically, videos can also be generated and converted in much the same way as images. While 2023 was marked by breakthroughs in LLMs and a boom in image generation technologies, 2024 has seen significant advancements in video generation. At the beginning of 2024, OpenAI introduced a really impressive text-to-video model called Sora.

Sora is a diffusion-based model that generates video from static noise. It’s able to craft complex scenes with multiple characters, specific motions, and accurate details of both subject and background. Similar to GPT models, Sora also uses a transformer architecture to work with text prompts. Besides generating videos from text, Sora can animate existing still images.

Image and video resolution enhancement

If we have a low-quality image, we can use a GAN to create a better version: the model predicts the missing pixel-level detail and produces a higher-resolution image.

It’s totally fine if you feel like this right now (BTW, the meme resolution has also been upscaled using Generative AI)

We can enhance images from old movies by upscaling them to 4k and beyond, generating more frames per second (e.g., 60 fps instead of 23), and adding color to black-and-white movies.

Synthetic data generation

While we live in a world that is overflowing with data generated continuously and in great amounts, the problem of getting enough data to train ML models remains. Acquiring enough high-quality samples for training is a time-consuming, costly, and often impossible task. The solution to this problem can be synthetic data produced by generative AI.

As mentioned, NVIDIA is making many breakthroughs in generative AI technologies. One of them is a neural network trained on videos of cities to render urban environments.

NVIDIA's Interactive AI Rendered Virtual World

Such synthetically created data can help develop self-driving cars as they can use generated virtual world training datasets for pedestrian detection, for example.

Read our article on the best AI tools for business to learn more. We also recommend our dedicated article about LLM API integration for different use cases.

The dark side of generative AI: Is it that dark?

Whatever the technology, it can be used for both good and bad. Of course, generative AI is no exception. At the moment, a couple of challenges exist.

Pseudo-images and deep fakes. Initially created for entertainment purposes, deep fake technology has already gotten a bad reputation. Available publicly to all users via software like FakeApp, Reface, and DeepFaceLab, deep fakes have been employed by people not only for fun but also for malicious activities.

For example, in March 2022, a deep fake video of Ukrainian President Volodymyr Zelensky telling his people to surrender was broadcast on hacked Ukrainian news. Though it could be seen with the naked eye that the video was fake, it spread on social media and was widely used for manipulation.

The risk of losing control. When we say this, we do not mean that tomorrow, machines will rise against humanity and destroy the world. Let's be honest, we're pretty good at it ourselves. However, since generative AI can self-learn, its behavior is difficult to control. The outputs provided can often be far from what you expect.

But as we know, technology would be incapable of developing and growing without challenges. Besides, responsible AI makes it possible to avoid or substantially reduce the drawbacks of innovations like generative AI.

By the way, don't worry: The post you have just read wasn’t generated by AI.

Or was it?