Over the past few years, Kubernetes has become an integral part of cloud-native computing and the gold standard for container orchestration at scale. In 2022, its annual growth rate in the cloud hit 127 percent, with Google, Spotify, Pinterest, Airbnb, Amadeus, and other global companies relying on the technology to run their software in production.

What’s behind this massive popularity? Read this article to learn how top organizations benefit from Kubernetes, what it can do, and when its magic may fail.

What is Kubernetes?

Kubernetes, or K8s for short, is an open-source platform for deploying and orchestrating large numbers of containers: packages of software bundled with all the dependencies, libraries, and other elements needed to execute it, no matter the environment.

Containers have become the preferred way to run microservices: independent, portable software components, each responsible for a specific business task (say, adding new items to a shopping cart). Modern apps include dozens to hundreds of individual modules running across multiple machines. For example, eBay uses nearly 1,000 microservices.

Clearly, there must be a mechanism to coordinate the work of such complex distributed systems, and that’s exactly what Kubernetes was designed for by Google back in 2014. The unusual name came from an ancient Greek word for helmsman — someone who steers a ship. A ship loaded with containers, in our case. That explains the ship’s wheel on the Kubernetes logo. What is K8s then? Count letters between “K” and “s” to get a clue.

To better understand how the digital vessel pilot controls the fleet of software packages, let’s briefly explore its main components.

Kubernetes cluster

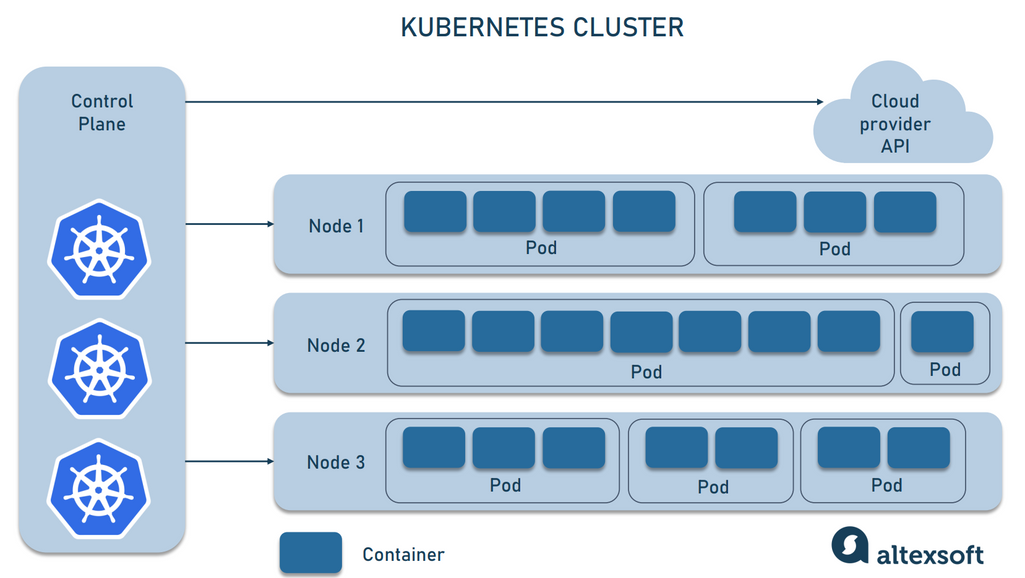

A key concept of Kubernetes is the cluster: a set of physical or virtual machines, or nodes, that execute the containerized software. For testing purposes, a cluster may have a single node, but on average it uses five nodes with 16 to 32 GB of memory each in public clouds and nine nodes with 32 to 64 GB when deployed on-premises.

Components of a Kubernetes cluster.

Nodes host pods, which are the smallest deployable units in Kubernetes. Each pod, in turn, holds one or several containers that share storage and networking resources and together make up a single microservice.

The orchestration layer in Kubernetes is called the Control Plane, previously known as a master node. It handles internal and external requests, schedules pod activities, aligns the cluster with the desired state, detects events, and responds to them.
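Aligning the cluster with the desired state can be pictured as a reconciliation loop that compares what should be running with what actually is. The Python sketch below is a simplified illustration under that assumption, not the Control Plane's real implementation; the `reconcile` function and the service names are hypothetical.

```python
def reconcile(desired: dict[str, int], actual: dict[str, int]) -> list[str]:
    """Return the actions that move the actual pod counts toward the desired ones."""
    actions = []
    # Look at every service that appears in either the desired or the actual state.
    for service in sorted(set(desired) | set(actual)):
        want = desired.get(service, 0)
        have = actual.get(service, 0)
        if have < want:
            actions.append(f"start {want - have} pod(s) of {service}")
        elif have > want:
            actions.append(f"stop {have - want} pod(s) of {service}")
    return actions


if __name__ == "__main__":
    desired_state = {"cart": 3, "checkout": 2}              # hypothetical services
    actual_state = {"cart": 1, "checkout": 2, "legacy": 1}
    for action in reconcile(desired_state, actual_state):
        print(action)
```

In this toy model, a service missing from the desired state is treated as one that should have zero pods, so its leftovers get stopped.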

You can scale your cluster up or down by adding or removing machines. But how large can it actually be? Officially, Kubernetes supports

- no more than 110 pods per node,

- no more than 5,000 nodes per cluster,

- no more than 150,000 pods in total, and

- no more than 300,000 containers in total.
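To see how these limits interact, here is a minimal Python sketch that checks a planned cluster against the four numbers above. The `ClusterPlan` class and the `limit_violations` function are hypothetical names used only for illustration, and the sketch assumes every node runs the same number of pods.

```python
from dataclasses import dataclass

# Upper bounds taken from the list above.
MAX_PODS_PER_NODE = 110
MAX_NODES = 5_000
MAX_PODS_TOTAL = 150_000
MAX_CONTAINERS_TOTAL = 300_000


@dataclass
class ClusterPlan:
    nodes: int
    pods_per_node: int
    containers_per_pod: int


def limit_violations(plan: ClusterPlan) -> list[str]:
    """List every limit the planned cluster would exceed (empty list means it fits)."""
    total_pods = plan.nodes * plan.pods_per_node
    total_containers = total_pods * plan.containers_per_pod
    problems = []
    if plan.pods_per_node > MAX_PODS_PER_NODE:
        problems.append(f"{plan.pods_per_node} pods per node exceeds {MAX_PODS_PER_NODE}")
    if plan.nodes > MAX_NODES:
        problems.append(f"{plan.nodes} nodes exceeds {MAX_NODES}")
    if total_pods > MAX_PODS_TOTAL:
        problems.append(f"{total_pods} pods in total exceeds {MAX_PODS_TOTAL}")
    if total_containers > MAX_CONTAINERS_TOTAL:
        problems.append(f"{total_containers} containers in total exceeds {MAX_CONTAINERS_TOTAL}")
    return problems


if __name__ == "__main__":
    print(limit_violations(ClusterPlan(nodes=5_000, pods_per_node=30, containers_per_pod=2)))
    print(limit_violations(ClusterPlan(nodes=5_000, pods_per_node=110, containers_per_pod=1)))
```

Note that the limits apply simultaneously: 5,000 nodes with 110 pods each would mean 550,000 pods, far above the total pod limit, so the largest clusters have to run fewer pods per node.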

Speaking of the containers that Kubernetes manages, in most cases they are created with another bright star in the containerization sky: Docker. Now, let’s see how organizations use K8s and what it can do.

What is Kubernetes used for

Though the orchestrator can work on-premises, according to the Kubernetes in the wild report 2023, it became the number-one technology for moving software to the cloud. Another obvious trend is the growing range of use cases. Initially, companies utilized Kubernetes mainly for running containerized microservices. Now, the ratio of application to non-application (auxiliary) workloads is 37 to 63 percent.

What auxiliary processes do companies entrust to the orchestrator? Most often, Kubernetes is engaged to run:

- open-source observability tools (mostly, Prometheus) that continuously trace data generated by a cluster to identify problems and optimize its performance (77 percent of organizations);

- databases (71 percent, with Redis leading in Kubernetes deployments);

- messaging and event streaming with Kafka, RabbitMQ, or ActiveMQ (44 percent);

- continuous integration and delivery or CI/CD tools (39 percent);

- Big Data analytics processes (36 percent);

- security instruments (34 percent); and

- service mesh tools to automate service-to-service communication over the network.

The shift to non-application jobs driven by the ability to support various types of workloads turns Kubernetes into a universal platform for almost everything and a de-facto operating system for cloud-native software.

Kubernetes advantages

The 2021 Annual Survey by the Cloud Native Computing Foundation (CNCF) shows that 96 percent of organizations are already using or at least evaluating Kubernetes. The fact that this free technology is backed by Google and the CNCF makes it a hands-down go-to. But there are other pros worth mentioning.

Kubernetes load balancer to optimize performance and improve app stability

The goal of load balancing is to evenly distribute incoming traffic across machines, enabling an app to remain stable and easily handle a large number of client requests. Kubernetes leverages two types of tools for this task:

- internal load balancers for routing traffic from services inside the same virtual network; and

- external load balancers that act as middlemen between users and your app, directing external HTTP requests to individual pods of your cluster.

The embedded load-balancing instruments help you optimize app performance, maximize availability, and improve fault tolerance since traffic is automatically redirected from failed nodes to working ones.
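The redirection of traffic away from failed targets can be sketched in a few lines of Python. This example assumes a plain round-robin strategy, which is only one of several possible balancing algorithms; the `RoundRobinBalancer` class and the pod names are hypothetical and are not part of any Kubernetes API.

```python
class RoundRobinBalancer:
    """Hands out backends in turn, skipping the ones marked as failed."""

    def __init__(self, backends: list[str]) -> None:
        self.backends = list(backends)
        self.healthy = set(backends)
        self._next = 0  # index of the backend to try next

    def mark_failed(self, backend: str) -> None:
        self.healthy.discard(backend)

    def mark_healthy(self, backend: str) -> None:
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self) -> str:
        # Try each backend at most once per call, starting where we left off.
        for _ in range(len(self.backends)):
            candidate = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    balancer = RoundRobinBalancer(["pod-a", "pod-b", "pod-c"])
    print([balancer.pick() for _ in range(4)])
    balancer.mark_failed("pod-b")  # simulate a failure
    print([balancer.pick() for _ in range(3)])
```

After `pod-b` is marked as failed, requests keep flowing to the remaining pods without any change on the client side, which is the effect described above.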

Self-healing to minimize downtime

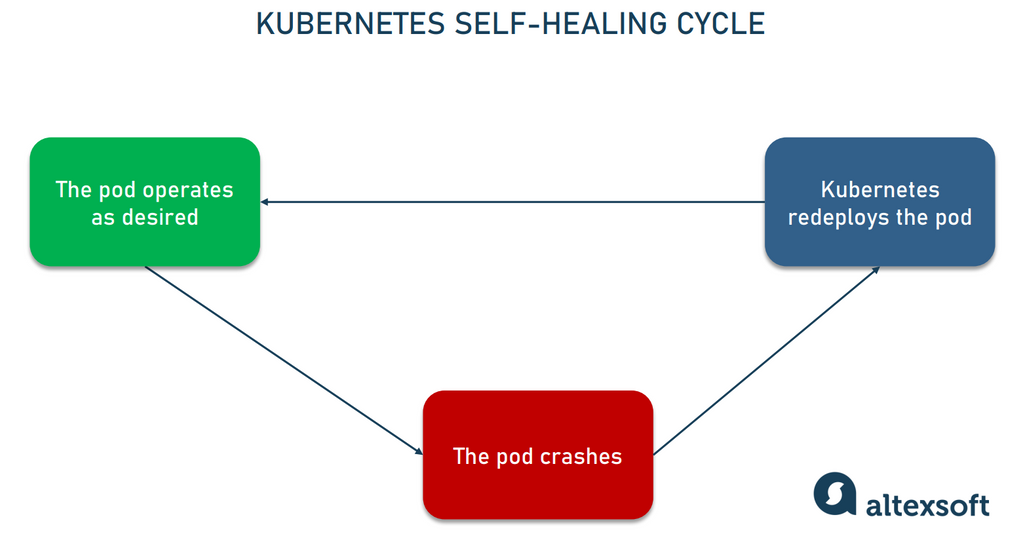

Kubernetes comes with a default self-healing mechanism that constantly checks the running status of containers and their ability to respond to client requests. It takes up to five minutes to detect an issue.

How Kubernetes heals itself.

Depending on the type of failure spotted, Kubernetes

- redeploys crashed containers as soon as possible;

- replaces outdated containers with new versions; or

- disables ("kills") unresponsive containers.

The problem containers remain inaccessible for users or other packages until they “get healthy”.
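The decision logic described above can be summarized as a small Python sketch. It is a simplified model built on the three cases listed in this section, not the actual Kubernetes mechanism; `ContainerStatus`, `healing_action`, and `is_reachable` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ContainerStatus:
    name: str
    running: bool      # False: the container process has crashed
    responsive: bool   # False: the container fails its health check
    version: str       # version currently deployed


def healing_action(status: ContainerStatus, desired_version: str) -> str:
    """Pick the action matching the type of failure spotted."""
    if not status.running:
        return "redeploy"   # crashed container
    if not status.responsive:
        return "kill"       # unresponsive container
    if status.version != desired_version:
        return "replace"    # outdated container
    return "none"


def is_reachable(status: ContainerStatus) -> bool:
    """Only running, responsive containers are exposed to users and other services."""
    return status.running and status.responsive


if __name__ == "__main__":
    hung = ContainerStatus("cart", running=True, responsive=False, version="1.2")
    print(healing_action(hung, desired_version="1.2"), is_reachable(hung))
```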

The self-healing feature enables companies to run their operations around the clock, with no need for human help, and roll out updates without downtime. It also minimizes unplanned outages, which may disrupt businesses dependent on event streaming and real-time analytics.

Portability to avoid vendor lock-in

Designed with portability in mind, raw Kubernetes is vendor-agnostic. It enables you to choose among clouds and types of deployments: on-premises, in a single cloud, across several cloud services, or in a hybrid cloud — a mix of environments, including public and private clouds and onsite data centers that use Windows or Linux. You’ll avoid vendor lock-in, even if you opt for a closed or proprietary infrastructure.

Deployment and scaling automation to boost CI/CD

Kubernetes has become an important part of a DevOps toolchain. It enables DevOps and site reliability engineer (SRE) teams to automate deployments, updates, and rollbacks. The orchestrator also has an autoscaling feature that periodically adjusts the number of nodes and pods to the current load. This enables your CPU and memory utilization to stay aligned with customer demand that can dramatically change throughout the day.
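To make the autoscaling idea concrete, the sketch below applies the formula documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up. The function name, the replica bounds, and the sample numbers are assumptions for illustration; a real autoscaler also handles missing metrics, pod readiness, and stabilization windows.

```python
import math


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    min_replicas: int = 1,    # assumed bounds, normally set per workload
    max_replicas: int = 10,
    tolerance: float = 0.1,   # skip scaling when the ratio is this close to 1.0
) -> int:
    """Compute ceil(current_replicas * current_metric / target_metric), kept within bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        wanted = current_replicas  # close enough to the target, no change
    else:
        wanted = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    # Four pods at 90 percent average CPU with a 60 percent target.
    print(desired_replicas(4, current_metric=90, target_metric=60))
    # The same four pods when the load drops to 20 percent.
    print(desired_replicas(4, current_metric=20, target_metric=60))
```

The first call scales out to six pods and the second scales in to two, which is how the replica count follows demand as it changes during the day.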

“I never have to write a script to orchestrate a rolling deployment ever again. I’ll never have to touch Puppet. I no longer need to use Terraform to scale out a service. Couldn’t be happier about this change!” says a DevOps engineer describing the benefits of migrating from virtual machines to Google Kubernetes Engine on Hacker News.

Large community to improve Kubernetes and help you out with any problem

Kubernetes has gathered a large community of users and contributors who drive its growth. Strong support from both independent developers and big players (like CNCF, Google, Amazon, Microsoft, and others) means that the platform gets constant improvements and the technology won’t become outdated any time soon. The number of open-source tools to enhance its work is also increasing. Currently, there are nearly 2,400 Kubernetes contributors registered on GitHub.

Another advantage is that you can find answers to a wide range of questions or share your ideas on related forums. Stack Overflow has amassed over 54,200 Kubernetes-tagged discussions, the Kubernetes community on Reddit amounts to 99,000 participants, and the community on Slack has over 171,800 members, from novices to seasoned Kubernetes developers.

Kubernetes documentation to understand the technology

The open-source project boasts detailed and comprehensive technical documentation that includes

- an overview of its main concepts and components;

- user manuals on how to deploy an application on Kubernetes; and

- instructions on how to set up a Kubernetes cluster in learning and production environments.

There are also many other useful materials on the official Kubernetes Documentation page — such as examples of common Kubernetes tasks and a glossary of commonly-used terms.

Kubernetes disadvantages

Kubernetes takes care of many container-related tasks, making the management of large microservice-based apps much easier. But it’s not that simple to reap all the benefits promised. Let’s see what common challenges organizations face when using the technology.

Steep learning curve

Kubernetes is definitely not for IT newcomers. To get started with it, you have to be closely familiar with microservices-based architecture, containers, networking, cloud-native technologies, continuous integration and delivery practices, infrastructure as code, and more.

But even a seasoned software developer or DevOps engineer can find Kubernetes intimidating in the beginning. “After six years learning CI/CD pipelines, it still feels like I’m drinking from the firehose”, “I’ve started and gave up several times”, “I was overwhelmed by its scope, which made it difficult to know what to focus on and what's ok to ignore,” are typical comments from DevOps folks about their experience with their introduction to K8s.

Overall complexity

Even with sufficient knowledge, Kubernetes is difficult to set up and configure since it has many moving parts and no graphical user interface to conveniently manage them. Google itself recognized that the orchestrator is incredibly complex. “What we’ve seen… is a lot of enterprises are embracing Kubernetes but then they run headlong into a difficulty,” admitted Drew Bradstock, product lead for Google Kubernetes Engine (GKE).

No wonder 73 percent of Kubernetes workloads in the cloud run on commercial platforms that simplify adopting and maintaining the orchestrator (we’ll talk about them later), and only 27 percent are handled by companies on their own. This means that the majority of organizations have to pay extra for managed Kubernetes services.

Expensive talent and unpredictable cloud spends

Kubernetes-related bills of enterprises hit a million dollars and more per month, while expenses of mid-to-large organizations may range from thousands to hundreds of thousands of dollars monthly. Even startups spend up to $10,000 monthly to run Kubernetes.

Suppose you don’t pay for managed services and opt for vanilla Kubernetes, which is free. That still doesn’t mean it’s cheap to use. Due to the orchestrator’s complexity and steep learning curve, you need to invest heavily in training your developers or hire experienced Kubernetes engineers, whose services are quite expensive. Besides that, cloud computing costs can be unpredictable and rise quickly.

A 2021 survey conducted by the CNCF and FinOps Foundation revealed that 68 percent of participants saw a rise in Kubernetes bills, with most spending falling on computing and memory resources. The researchers arrived at the conclusion that money losses are often driven by automated deployment and scaling.

These features are highly appreciated by developers as they accelerate product launches and prevent performance problems. But if you don’t constantly track what’s going on in your Kubernetes environment, your cluster can eat up resources that are not actually used, leading to unexpected cost spikes. The good news is that most companies can cut Kubernetes spending by up to 40 percent if they introduce cost-monitoring practices and tools.
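One simple cost-monitoring practice is to compare the resources each workload reserves with what it actually consumes. The Python sketch below estimates the cost of idle CPU under that approach; the `Workload` class, the `idle_cost` function, the price per core-hour, and the usage figures are all hypothetical, and real tools also account for memory, storage, and network.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    name: str
    cpu_requested: float  # cores reserved for the workload
    cpu_used: float       # average cores actually consumed


def idle_cost(
    workloads: list[Workload],
    price_per_core_hour: float,
    hours: float = 730.0,  # roughly one month
) -> dict[str, float]:
    """Estimate the money spent on reserved but unused CPU for each workload."""
    report = {}
    for workload in workloads:
        # A workload using more than it requested has no idle reservation.
        idle_cores = max(workload.cpu_requested - workload.cpu_used, 0.0)
        report[workload.name] = round(idle_cores * price_per_core_hour * hours, 2)
    return report


if __name__ == "__main__":
    fleet = [
        Workload("api", cpu_requested=4.0, cpu_used=1.0),
        Workload("batch", cpu_requested=2.0, cpu_used=2.5),
    ]
    # Hypothetical price of $0.04 per core-hour.
    for name, cost in idle_cost(fleet, price_per_core_hour=0.04).items():
        print(f"{name}: ${cost} of idle CPU per month")
```

Workloads with the largest idle cost are the first candidates for lower resource requests or tighter autoscaling limits.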

Kubernetes alternatives

Frankly speaking, Kubernetes doesn’t have true rivals in the market, since most of the alternatives are either enhanced variations of vanilla K8s (its distributions) or tools to streamline Kubernetes operations.

Kubernetes distributions can be lighter-weight versions of the original orchestrator or fully managed services (Kubernetes as a Service, shortened to KaaS) with proprietary features and extensions to meet specific business needs. KaaS products are typically provided in the public cloud but sometimes are available for on-premises deployment. They offer more user-friendly interfaces, tech support, and other perks that simplify the handling of containers for enterprises.

Note that distributions can be tied to the vendor's platform, so you may not be able to use them in other environments without extra components and licenses.

Kubernetes vs Docker vs Docker Swarm

Docker is a widely-used open-source technology that provides a standard for software packages, while Kubernetes’ mission is to control them in production. In other words, Docker creates isolated, executable pieces of the app, and Kubernetes works as their supervisor after the launch.

Both tools facilitate the deployment and management of microservices-based applications and are commonly used together. According to the 2022 Developer Survey by Stack Overflow, Docker and Kubernetes are the two most loved and wanted tools outside of programming languages, databases, cloud platforms, web frameworks, and libraries.

Docker leverages its own orchestration tool called Docker Swarm to make “swarms” of microservices work together smoothly, as a single unit. It lags behind Kubernetes in terms of customization and automation capabilities. On the other hand, Docker Swarm is more lightweight and beginner-friendly while Kubernetes is known for its excessive complexity.

Learn more about Docker architecture, advantages, and alternatives from our article The Good and the Bad of Docker Containers.

OpenShift vs Kubernetes

Besides Docker Swarm, there are many other containerization tools. One of them is Red Hat OpenShift, a platform that enables you to build Docker containers and, after deployment, manage them with Kubernetes. Unlike Docker and K8s, it’s a commercial product that includes dedicated tech support, additional capabilities, and enhanced security. The open-source version of OpenShift comes with self-support only — yet, it offers almost the same set of features as a paid subscription. Though based on the upstream Kubernetes, OpenShift can be installed on the Linux versions by Red Hat only.

Other Kubernetes distributions and Kubernetes as a Service platforms

Other examples of Kubernetes distributions and managed services are

- K3s, built specifically to run Kubernetes on the IoT edge;

- Rancher, a flexible enterprise-grade platform compatible with any Linux-based OS and deployable in any public cloud or on-premises;

- Amazon Elastic Kubernetes Service (EKS) for working with the orchestrator in the AWS cloud and on-premises data centers;

- Google Kubernetes Engine (GKE), an automated service for managing containers on the Google Cloud Platform;

- Azure Kubernetes Service (AKS), Microsoft’s answer to Amazon EKS and GKE tailored for deployments in Azure Cloud, on-premises, or at the edge; and

- IBM Cloud Kubernetes Service to deploy containers on IBM Cloud.

Look through the full list of certified Kubernetes-based products here. All of them support the same APIs as the open-source version which lies at their very core.

How to start with Kubernetes

For those who think that the advantages of Kubernetes outweigh its shortcomings, here’s a list of useful resources and certifications that will help you or your team start with Kubernetes, master it, and confirm your expertise.

Kubernetes website: documentation, training courses, and more

These pages of the official Kubernetes website are a good starting point for getting familiar with the technology.

- Getting started introduces you to different ways to set up Kubernetes.

- Concepts explains what Kubernetes is, what it can do, and how different parts of the system work.

- Tasks will teach you how to perform basic operations with Kubernetes.

- Tutorials will show you how to accomplish multi-step goals that are more complex than tasks.

- Reference contains links to useful resources — such as a Glossary of Kubernetes-related terms, API documentation, client libraries, and more.

Visit the Training page to learn about available Kubernetes courses. One of the officially recommended options is Introduction to Kubernetes, available free on the edX platform.

Kubernetes certifications and courses from the Linux Foundation

The Linux Foundation offers several training courses coupled with professional certifications that verify your knowledge of Kubernetes. You can take courses and exams independently of each other, but the course and exam bundle will cost you less: $595 instead of $694 when paying $299 for a course and $395 for an exam separately.

Kubernetes Fundamentals is ideal for IT administrators or those who want to start a career in the cloud. It introduces students to key concepts of Kubernetes and teaches them how to install and configure Kubernetes clusters in production.

Related certification: Certified Kubernetes Administrator (CKA)

Kubernetes Security Essentials suits specialists with CKA certification who are interested in security practices. It gives an overview of security issues in cloud production environments. The course includes labs to design, install, and monitor Kubernetes clusters and log security events.

Related certification: Certified Kubernetes Security Specialist (CKS)

Kubernetes and Cloud Native Essentials explains the basics of cloud-native technologies and how to implement and monitor them with Kubernetes. It is aimed at software developers, system administrators, and architects who work with legacy systems and are new to cloud environments and container orchestration.

Related certification: Kubernetes and Cloud Native Associate (KCNA)

Kubernetes for Developers is designed for software and cloud engineers who want to build Kubernetes apps. Students should be able to work with the Linux command line, code using Python, Node.js, or Go, and be familiar with concepts of cloud-native apps and architectures. The program covers such practical skills as containerizing, hosting, deploying, and configuring an app in a multi-node cluster.

Related certification: Certified Kubernetes Application Developer (CKAD)

Other courses to consider

You can learn Kubernetes on multiple other platforms as well — depending on your professional level, previous expertise, and cloud technologies and other tools you’re going to use. Here are several resources worth looking at.

Getting Started With Google Kubernetes Engine (GKE) on Coursera. The course offered by Google Cloud focuses on GKE, comparing its functionality with vanilla Kubernetes. At the end of the training, you’ll be able to create containers, and deploy, monitor, and manage a Kubernetes cluster with GKE and other Google Cloud services.

Introduction to Containers with Docker, Kubernetes, and OpenShift on Coursera. This course is designed by IBM as part of the preparation for an IBM professional certificate (Backend Development, Applied DevOps Engineering, DevOps and Software Engineering, or Full Stack Software Developer). It explains Docker and Kubernetes concepts and enables you to build and manage cloud-native apps with Docker, Kubernetes, and OpenShift.

Docker & Kubernetes: The Practical Guide on Udemy. The course instructs you on how to install Docker, create Docker containers, and deploy Docker applications manually, with raw Kubernetes and with managed services.

Kubernetes Certified Application Developer (CKAD) with Tests on Udemy. This course prepares you for CKAD certification. It requires basic knowledge of system administration, Python, Linux virtual machines, and Kubernetes. The set of skills you’ll get includes developing cloud-first apps, deploying them in a Kubernetes cluster, scheduling jobs, troubleshooting, rolling updates, and more.

This post is a part of our “The Good and the Bad” series. For more information about the pros and cons of the most popular technologies, see the other articles from the series:

The Good and the Bad of Docker Containers

The Good and the Bad of Apache Airflow

The Good and the Bad of Apache Kafka Streaming Platform

The Good and the Bad of Hadoop Big Data Framework

The Good and the Bad of Snowflake

The Good and the Bad of C# Programming

The Good and the Bad of .Net Framework Programming

The Good and the Bad of Java Programming

The Good and the Bad of Swift Programming Language

The Good and the Bad of Angular Development

The Good and the Bad of TypeScript

The Good and the Bad of React Development

The Good and the Bad of React Native App Development

The Good and the Bad of Vue.js Framework Programming

The Good and the Bad of Node.js Web App Development

The Good and the Bad of Flutter App Development

The Good and the Bad of Xamarin Mobile Development

The Good and the Bad of Ionic Mobile Development

The Good and the Bad of Android App Development

The Good and the Bad of Katalon Studio Automation Testing Tool

The Good and the Bad of Selenium Test Automation Software

The Good and the Bad of Ranorex GUI Test Automation Tool

The Good and the Bad of the SAP Business Intelligence Platform

The Good and the Bad of Firebase Backend Services

The Good and the Bad of Serverless Architecture