This article explores the most popular large language models and their integration capabilities for building chatbots, natural language search, and other LLM-based products. We’ll explain how to choose the right LLM for your business goals and look into real-world use cases, including AltexSoft's experience.

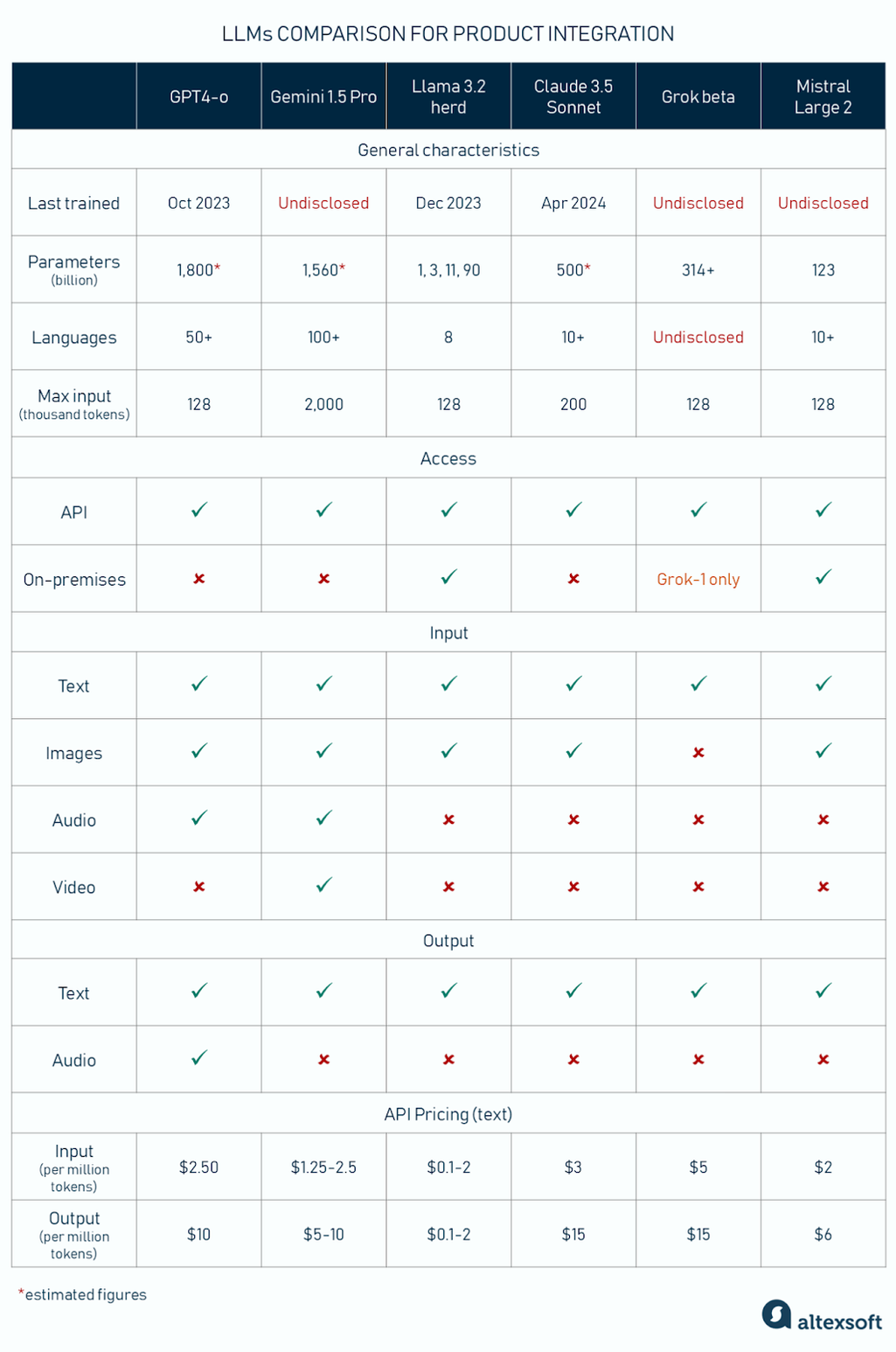

Flagship LLMs comparison for product integration: main features and capabilities

LLM capabilities: key metrics and features

Understanding the core metrics and features is essential when building a business application on top of a large language model. Here, we explore the key attributes that can impact both performance and user experience.

Size in parameters. Parameters are the variables that a generative AI model learns during training. Their number indicates the model's capacity to understand human language and other data: bigger models can capture more intricate patterns and nuances. When is a model considered large? The definition is vague, but one of the first models recognized as an LLM was BERT (110M parameters). Modern LLMs have hundreds of billions of parameters.

Number of languages supported. Some models work with only four or five languages, while others are true polyglots. This matters if, for example, you want to offer multilingual customer support.

Context window (max. input). This is the amount of text or other data (images, code, audio) that a language model can process when generating a response. A bigger context window allows users to input more custom, relevant data (for example, project documentation), so the model can consider the full context and give a more precise answer.

For textual data, a context window is counted in tokens. Tokens are chunks of text, such as whole words, parts of words, or punctuation marks; as a rule of thumb, 1,000 tokens correspond to about 750 English words.
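The 750-words-per-1,000-tokens ratio is only a rule of thumb for English; real counts depend on each model's tokenizer. As a rough sketch under that assumption, you can estimate whether a document fits into a context window before sending it. The function names and the 90,000-word sample are illustrative.

```python
import math

# Rule of thumb for English text: about 1,000 tokens per 750 words.
# Real counts depend on each model's tokenizer, so treat results as estimates.
TOKENS_PER_750_WORDS = 1000


def estimate_tokens(text: str) -> int:
    """Estimate how many tokens an English text will use, rounding up."""
    word_count = len(text.split())
    return math.ceil(word_count * TOKENS_PER_750_WORDS / 750)


def fits_in_context(text: str, context_window: int, reserved_for_output: int = 0) -> bool:
    """Check whether the text, plus room for the model's reply, fits in the window."""
    return estimate_tokens(text) + reserved_for_output <= context_window


doc = "word " * 90_000  # stand-in for a 90,000-word document
print(estimate_tokens(doc))                   # 120000
print(fits_in_context(doc, 128_000, 4_000))   # True: 124,000 <= 128,000
print(fits_in_context(doc, 128_000, 10_000))  # False: 130,000 > 128,000
```

For exact numbers, count tokens with the tokenizer of the specific model you plan to use.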

Access. You can integrate with most of the popular LLMs via API. However, some of them are available for download and can be deployed on-premises.

Input and output modality. Modality is the type of data the model can process. LLMs primarily handle text and code, but multimodal ones can take images, video, and audio as input; the output is mainly text.

Fine-tuning. Through fine-tuning, an LLM can gain specific domain knowledge and become more effective for your business tasks. This option is typically available for downloadable models, but some providers also let users customize their LLMs in the cloud, with a set maximum size for the fine-tuning dataset. In any case, the process requires investment and well-prepared data.

Pricing. Prices are typically quoted per million tokens; images and other non-text inputs can also be converted into tokens or billed per unit or per second. Here is a pricing calculator comparing the most popular LLMs. The cheapest model in the list is the Llama 3.2 11B Vision Instruct API, and the most expensive is the GPT-4o Realtime API, which supports voice generation.
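Because prices are quoted per million tokens, estimating a bill is simple arithmetic. The sketch below uses the GPT-4o rates quoted later in this article ($2.50 per million input tokens and $10 per million output tokens); the workload is hypothetical, and real bills also depend on modalities, caching, and discounts.

```python
def token_cost(input_tokens: int, output_tokens: int,
               input_price_per_m: float, output_price_per_m: float) -> float:
    """Cost in USD when input and output tokens are priced per million."""
    return (input_tokens / 1_000_000 * input_price_per_m
            + output_tokens / 1_000_000 * output_price_per_m)


# Hypothetical workload: 1,000 requests, each with 2,000 input and 500 output tokens,
# priced at the GPT-4o rates quoted in this article ($2.50 and $10 per 1M tokens).
requests = 1_000
total = token_cost(requests * 2_000, requests * 500,
                   input_price_per_m=2.50, output_price_per_m=10.00)
print(f"${total:.2f}")  # $10.00
```

Note that output tokens are usually priced several times higher than input tokens, so verbose answers can dominate the cost.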

We have only mentioned the LLM's main characteristics here. Now, let's discuss the most popular models in more detail.

GPT by OpenAI

OpenAI released its first GPT model in 2018, and since then, the GPT family has set the industry standard for performance in complex language tasks. It remains among the leaders in performance, reasoning skills, and ease of fine-tuning. The current flagship model is GPT-4o, which has a smaller, faster, and cheaper version called GPT-4o mini.

Both versions understand over 50 languages and are multimodal: they take text and image input and generate text output, including code, mathematical equations, and JSON data. In addition, with the Realtime API, introduced in October 2024, you can feed audio into GPT-4o and have the model respond in text, audio, or both.

Recently, OpenAI introduced a new series of o1 models (o1 and o1-mini), currently in beta. They are trained with reinforcement learning, which enables deeper reasoning and the ability to tackle more complex tasks, especially in science, coding, and math. However, for most common use cases, GPT-4o will remain the more capable option in the near term, as the new models lack many of its features (like browsing the Internet).

Besides LLMs, OpenAI offers the DALL-E image models and the Whisper and TTS audio models. To learn more about how they work, read our article on AI image generators and another one explaining sound, music, and voice generation.

Parameters and context window

GPT-4o is estimated to have hundreds of billions of parameters; some sources claim 1.8 trillion, although exact details are proprietary. In both versions, the context window can hold up to 128,000 tokens, which is the equivalent of 300 pages of text.

Access

GPT models are only available as a cloud service; you can't deploy them on-premises. They are accessible via the OpenAI APIs, with official libraries for Python, Node.js, and .NET, or through Azure OpenAI Service, which also supports C#, Go, and Java. You can call the API from other languages as well, thanks to community libraries.

Below, we list the API products offered directly by OpenAI.

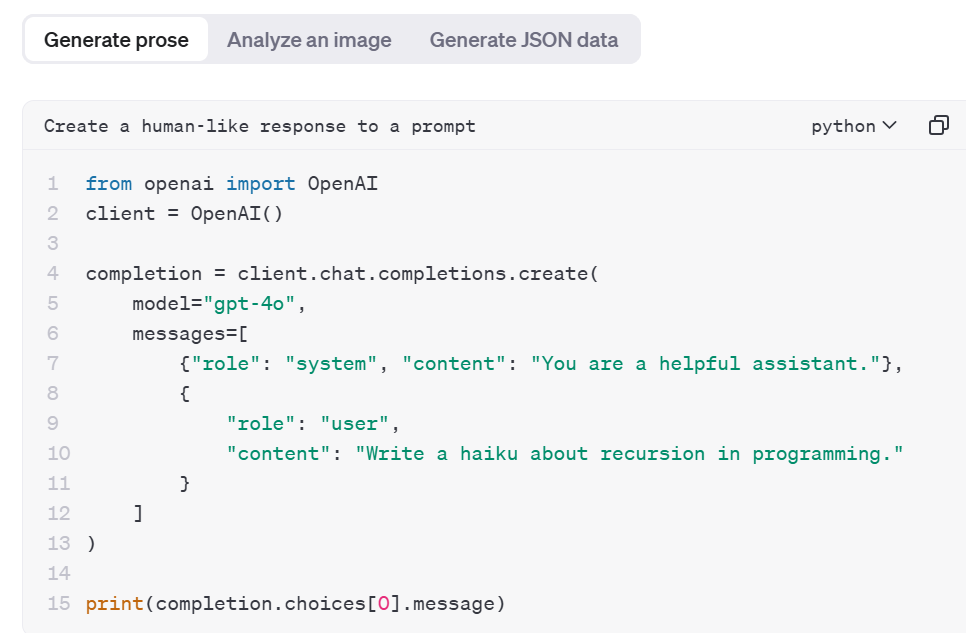

Chat completions API allows you to quickly embed text generation capabilities into your app, chatbot, or other conversational interface.

Generating prose using Python with the chat completions endpoint. Source: OpenAI Platform
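To make the request structure concrete without extra dependencies, here is a minimal sketch that builds a Chat Completions call with Python's standard library instead of the official SDK. The endpoint and the `model`/`messages` fields follow OpenAI's public API; the model choice, the prompts, and reading the key from an `OPENAI_API_KEY` environment variable are illustrative assumptions. The request is only built here, not sent.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_chat_request(prompt: str, model: str = "gpt-4o-mini") -> urllib.request.Request:
    """Build (but do not send) a Chat Completions HTTP request."""
    body = {
        "model": model,
        "messages": [
            # The system message sets the assistant's behavior for the whole conversation.
            {"role": "system", "content": "You are a concise travel assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
        },
        method="POST",
    )


req = build_chat_request("Suggest a three-day itinerary for Lisbon.")
print(req.get_method(), req.full_url)

# Sending it requires a valid key and network access:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

The generated text comes back in `choices[0].message.content` of the JSON response; the official SDKs wrap the same request and response in typed objects.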

Assistants API (in beta testing mode) is for designing powerful virtual assistants. It comes with built-in tools like file search to retrieve relevant content from your documents and a code interpreter, which helps solve complex math and code problems. The API can access multiple tools in parallel.

Batch API is perfect for tasks that don't require immediate responses, like sentiment analysis for hotel reviews or large-scale text processing. A single batch may include up to 50,000 requests, and a batch input file shouldn’t be bigger than 100 MB. Batch API costs 50 percent less compared to synchronous APIs.
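A batch job starts with a JSONL input file in which each line is one self-contained request. The sketch below prepares such a file for the hotel-review sentiment task; the line structure (`custom_id`, `method`, `url`, `body`) follows OpenAI's Batch API input format, while the reviews, model name, prompt, and function name are made up. It also checks the 50,000-request and 100 MB limits mentioned above before anything is uploaded.

```python
import json

MAX_REQUESTS = 50_000               # per-batch request limit cited above
MAX_FILE_BYTES = 100 * 1024 * 1024  # the 100 MB file limit cited above (treated here as MiB)


def build_batch_jsonl(reviews: list[str], model: str = "gpt-4o-mini") -> str:
    """Turn hotel reviews into Batch API input lines, one request per review."""
    lines = []
    for i, review in enumerate(reviews):
        request = {
            "custom_id": f"review-{i}",  # used to match each result to its input
            "method": "POST",
            "url": "/v1/chat/completions",
            "body": {
                "model": model,
                "messages": [
                    {"role": "system",
                     "content": "Classify the sentiment as positive, negative, or neutral."},
                    {"role": "user", "content": review},
                ],
            },
        }
        lines.append(json.dumps(request))
    payload = "\n".join(lines) + "\n"
    if len(lines) > MAX_REQUESTS or len(payload.encode("utf-8")) > MAX_FILE_BYTES:
        raise ValueError("Too large for one batch: split the reviews into several batches.")
    return payload


jsonl = build_batch_jsonl(["Great view, friendly staff.", "The room was noisy."])
print(len(jsonl.splitlines()))  # 2
```

Results come back in a separate output file, so the `custom_id` is what lets you join each sentiment label to its review.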

Realtime API (in beta testing mode) supports text and audio as both input and output, which means you can build a low-latency speech-to-speech, text-to-speech, or speech-to-text chatbot that will support audio conversations with clients. You can choose one of six male or female voices. The virtual interlocutor will use any tone you like, such as warm, engaging, or thoughtful; “he” or “she” can even laugh and whisper.

Fine-tuning options

To customize GPT-4o or GPT-4o mini, you have to prepare a dataset that contains at least 10 example conversations, though OpenAI recommends starting with 50 training examples. The fine-tuning dataset must be in JSONL (JSON Lines) format and up to 1 GB in size (though you don't need a set that large to see improvements). It can also contain images. To upload the dataset, use the Files API, or the Uploads API for files larger than 512 MB.
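OpenAI's chat fine-tuning data is a JSONL (JSON Lines) file in which each line holds one example conversation under a `messages` key. Here is a minimal sketch of preparing and sanity-checking such data; the airline support conversations and helper names are invented, and the checks only cover the 10-example minimum and the presence of an assistant reply, not every rule OpenAI's own validator applies.

```python
import json

MIN_EXAMPLES = 10  # OpenAI's minimum; it recommends starting with about 50


def to_training_line(question: str, answer: str) -> str:
    """Serialize one example conversation as a single JSONL line."""
    example = {
        "messages": [
            {"role": "system", "content": "You are a polite support agent for an airline."},
            {"role": "user", "content": question},
            # The assistant turn is the behavior the model should learn to reproduce.
            {"role": "assistant", "content": answer},
        ]
    }
    return json.dumps(example)


def validate_dataset(lines: list[str]) -> None:
    """Raise ValueError if there are too few examples or one lacks an assistant reply."""
    if len(lines) < MIN_EXAMPLES:
        raise ValueError(f"Need at least {MIN_EXAMPLES} examples, got {len(lines)}.")
    for n, line in enumerate(lines, start=1):
        roles = [message["role"] for message in json.loads(line)["messages"]]
        if "assistant" not in roles:
            raise ValueError(f"Example {n} has no assistant message.")


# Hypothetical Q&A pairs; real data should cover varied, representative requests.
pairs = [(f"Can I change booking #{i}?", "Yes, via Manage Booking for a small fee.")
         for i in range(12)]
dataset = [to_training_line(q, a) for q, a in pairs]
validate_dataset(dataset)
jsonl_text = "\n".join(dataset) + "\n"  # write this to a .jsonl file, then upload it
```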

Fine-tuning can be performed in the OpenAI web UI or programmatically, using the OpenAI SDK. Azure OpenAI currently supports only text-to-text fine-tuning.

Pricing

GPT-4o API usage for business costs $2.50/1M input tokens and $10/1M output tokens, while GPT-4o mini is much cheaper: $0.15/1M input tokens and $0.60/1M output tokens.

o1-preview costs $15.00/1M input tokens and $60.00/1M output tokens.

You can find the detailed pricing information here.

Gemini by Google DeepMind

The Gemini model family (the chatbot built on it was formerly called Bard) is optimized for high-level reasoning and for understanding not only text but also image, video, and audio data.

The model's name was inspired by NASA's groundbreaking Project Gemini, part of its early moonshot program. It is also associated with the Gemini astrological sign, since people born under it are said to be highly adaptable, connect effortlessly with diverse individuals, and naturally view situations from multiple perspectives.

The flagship products are Gemini 1.5 Pro and Gemini 1.5 Flash. Flash is a mid-size multimodal model optimized for a wide range of reasoning tasks. Pro can handle large amounts of data. Both models support over 100 languages.

Gemini 1.5 Pro on the Vertex AI platform. Source: Vertex AI

Parameters and context window

Estimates suggest Gemini models operate with 1.56 trillion parameters. Gemini 1.5 Pro has an unprecedented context window of two million tokens, which allows it to fit 10 Harry Potter novels (well, the existing seven plus three fan-dreamed ones) in one prompt, or one Harry Potter movie (2 hours of video), or 19 hours of audio. The context window of Gemini 1.5 Flash is one million tokens.

Access

Gemini models are cloud-based only. Google provides two ways to access its LLMs — Google AI and Vertex AI (Google’s end-to-end AI development platform). Both APIs support function calling.

Google AI Gemini API provides a fast way to explore the LLM capabilities and get started with prototyping and creating simple chatbots. It supports mobile devices and natively connects with Firebase, a Google platform for the development of AI-powered web, iOS, and Android apps. The API can be consumed with Python, Node.js, and Go. SDKs for Flutter, Android, Swift, and JavaScript are recommended for prototyping only. If you want to launch your app written in those languages to production, migrate to Vertex AI.

The Vertex AI Gemini API allows for building complex, enterprise-grade applications. With Vertex AI, you can leverage a range of additional services, such as MLOps tools for model validation, versioning, and monitoring; an Agent Building console for creating virtual assistants; and security features critical for production environments.

Overall, it makes sense to start building your Gemini-based app with Google AI API and, as your project matures, move to Vertex AI API.

Fine-tuning options

With Google AI, fine-tuning is available only for 1.5 Flash and only for text; it doesn't cover chat-style conversations. You can start with a dataset of 20 examples (each no longer than 40,000 characters), but the optimal size is between 100 and 500 examples. Tuned models don't support JSON inputs or texts longer than 40,000 characters.

Vertex AI enables supervised fine-tuning of 1.5 Flash and Pro, with text, image, audio, and document inputs. The recommended size of a training dataset is 100 examples, each no longer than 32,000 tokens.

You can create a supervised fine-tuning job using the Gemini REST API, the Vertex AI section of the Google Cloud console, the Vertex AI SDK for Python, or Colab Enterprise (a collaborative, managed notebook environment in Google Cloud).

Pricing

Rates vary based on the model, input type, and prompt size (inputs and outputs over 128k tokens cost twice as much). Text input and output are billed per million tokens (or per thousand characters on Vertex AI), audio and video inputs per second, and image inputs per image.
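The long-context surcharge is easy to underestimate, so here is a small sketch. It assumes, following the description above, that both input and output rates double once the prompt exceeds 128,000 tokens; the base rates are placeholders rather than Google's actual prices, so check the pricing page before relying on the numbers.

```python
LONG_PROMPT_THRESHOLD = 128_000  # tokens; longer prompts are billed at the higher tier


def gemini_cost(prompt_tokens: int, output_tokens: int,
                input_rate_per_m: float, output_rate_per_m: float) -> float:
    """Estimate request cost in USD, doubling both rates for long prompts (assumed rule)."""
    multiplier = 2 if prompt_tokens > LONG_PROMPT_THRESHOLD else 1
    base = (prompt_tokens * input_rate_per_m + output_tokens * output_rate_per_m) / 1_000_000
    return multiplier * base


# Placeholder base rates, NOT Google's real prices: $1 per 1M input, $4 per 1M output tokens.
short_cost = gemini_cost(100_000, 1_000, 1.00, 4.00)  # (100,000 + 4,000) / 1M = $0.104
long_cost = gemini_cost(200_000, 1_000, 1.00, 4.00)   # 2 x (200,000 + 4,000) / 1M = $0.408
print(f"${short_cost:.3f} vs ${long_cost:.3f}")
```

Under this assumption, doubling the prompt from 100k to 200k tokens nearly quadruples the cost, because the request also crosses the threshold.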

The Google AI Gemini API offers a free tier for testing purposes, limited to 15 requests per minute, 1,500 requests per day, and 1M tokens per minute. Vertex AI provides a batch mode with a 50 percent discount.
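Staying within a free tier's request limits is usually handled on the client side. Below is a minimal sliding-window limiter sketch, assuming the 15-requests-per-minute cap quoted above; the class name is hypothetical, and production code should also back off and retry when the API responds with an HTTP 429 (rate limit) error.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Allow at most `max_requests` calls in any rolling `window_s`-second period."""

    def __init__(self, max_requests: int = 15, window_s: float = 60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_s = window_s
        self.clock = clock           # injectable so the logic can be tested without waiting
        self.timestamps = deque()    # send times of requests still inside the window

    def try_acquire(self) -> bool:
        """Record a request and return True if it fits in the window, else return False."""
        now = self.clock()
        # Drop timestamps that have slid out of the window.
        while self.timestamps and now - self.timestamps[0] >= self.window_s:
            self.timestamps.popleft()
        if len(self.timestamps) < self.max_requests:
            self.timestamps.append(now)
            return True
        return False


# Simulated clock: 16 requests at t=0 s, then one more at t=60 s.
fake_now = [0.0]
limiter = SlidingWindowLimiter(clock=lambda: fake_now[0])
results = [limiter.try_acquire() for _ in range(16)]
print(results.count(True))    # 15: the 16th call is rejected
fake_now[0] = 60.0
print(limiter.try_acquire())  # True: the window has rolled over
```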

More details are available on the Google Cloud pricing page.

Llama by Meta

Llama (short for Large Language Model Meta AI) is a family of open-source, highly customizable language models. The latest release, Llama 3.2, has two collections of models:

- Lightweight (1B and 3B): text-only, 2-3 times faster than their bigger counterparts, and ideal for on-device and edge deployment; and

- Vision (11B and 90B): support image inputs and excel at image understanding.

All versions work with eight languages: English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

Be aware that the EU's AI Act, which will go into effect in the summer of 2025, creates regulatory complexity for open-source AI. The multimodal Llama 3.2 models are currently restricted from the European market due to concerns about GDPR compliance.

Llama API service on the Vertex AI platform. Source: Vertex AI

Parameters and context window

Llama 3.2 is presented in 1, 3, 11, and 90 billion parameter sizes. They all support a context length of 128,000 tokens. The 11B and 90B models have vision capabilities: They can extract details from images, analyze sales graphs, interpret maps to answer geographical queries, etc.

Access and deployment

Llama can be deployed on-premises, which gives companies control over data security and privacy. The models are available for download on llama.com and Hugging Face.

In September 2024, Meta presented Llama Stack, the company's first framework for efficiently deploying Llama models across various environments: on-premises, in the cloud, and on local devices.

It contains a set of APIs, such as

- Inference API for generating responses and processing complex inputs;

- Safety API to moderate outputs for avoiding harmful or biased content;

- Memory API that allows models to retain and reference past conversations, which is useful in customer support chatbots;

- Agentic System API for function calling;

- and more.

Client SDKs for working with Llama Stack include Python, Node.js, Swift, and Kotlin, among others.

Companies can also access Llama on multiple partner platforms, such as AWS (via Amazon Bedrock, a service that offers a choice of models through a single API), Databricks, Dell, Google Cloud, Groq, IBM, Intel, Microsoft Azure, NVIDIA, Oracle Cloud, Snowflake, and more.

Fine-tuning

You can customize your Llama model using different environments. Below are several options.

- Hugging Face with the Axolotl library allows you to fine-tune all Llama 3.2 versions;

- The PyTorch-native library torchtune supports the end-to-end fine-tuning lifecycle for Llama 3.2 (1B, 3B, and 11B only);

- Azure AI Studio offers fine-tuning for Llama 3.2 1B and 3B, with other versions coming soon; and

- Amazon Bedrock supports customizing Llama 3.1 models but not the later generation.

Learn more about Llama fine-tuning from the official documentation here.

Pricing

Llama is open-source but still governed by a license that restricts its use in certain cases. Businesses with more than 700 million monthly active users must request a special license from Meta. Downloading Llama is free. However, to use it as a service, you'll have to pay the chosen cloud provider or API platform, and the price will depend on many factors, including the additional features the vendor offers.

For example, Amazon Bedrock's pricing depends on the region: North America is split into two price zones, Europe into three. In the US East zone, Bedrock charges $0.10/M input or output tokens for Llama 3.2 1B and $2/M input or output tokens for Llama 3.2 90B.

The Llama 3.2 1B price on the Groq platform is four times higher: $0.40/M input or output tokens.

Claude by Anthropic

Claude is a family of models that can process text, code, and image input and generate text and code. The latest generation includes two state-of-the-art models: the flagship Claude 3.5 Sonnet, specializing in complex tasks, coding, and creative writing, and the smaller, faster Claude 3.5 Haiku. The most powerful model of the previous generation is Claude 3 Opus, which is strong in math and coding. The models support 10+ languages.

While all major LLMs are trained not to give dangerous, discriminatory, or toxic responses, Claude's creators place particular emphasis on ethics and responsibility. They have conceptualized and published a manifesto for Constitutional AI based on universal human values and principles. Under this approach, the model is trained to evaluate its own outputs and choose the safer, less harmful one.

Regarding technical attributes, the company recently unveiled a breakthrough feature: computer use. The set of tools allows the model to work with machines as humans do, interacting with screens and software. For example, you can instruct the model to “Fill out this form with data from my computer and the Internet.” The system will search for the files on your PC, open the browser, navigate the web, etc. The feature is now in beta testing mode.

Parameters and context window

The parameters are undisclosed, though they are rumored to be about 500 billion. The context window is 200k+ tokens (500 pages of text or 100 images) for all Claude 3.5 versions.

Access and deployment

The model is cloud-hosted only. There are three first-party Anthropic APIs:

- Text Completions API is a legacy API that won't be supported in the future.

- Messages API is the new main API, and migrating from the Text Completions API to the Messages API is recommended. It can be used for either single queries or stateless multi-turn conversations.

- Message Batches API (in beta) processes multiple Messages API requests asynchronously in a single batch, costing 50 percent less than standard API calls.

Anthropic API console. Source: Anthropic

You can call the APIs directly or use the official Python and TypeScript SDKs.
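Because the Messages API is stateless, your application keeps the conversation history and sends it in full with every request. A minimal standard-library sketch follows; the endpoint, the `x-api-key` and `anthropic-version` headers, and the `model`/`max_tokens`/`messages` body fields follow Anthropic's public API reference, while the model choice, the refund dialogue, and the `ANTHROPIC_API_KEY` environment variable are illustrative. The request is built but not sent.

```python
import json
import os
import urllib.request

API_URL = "https://api.anthropic.com/v1/messages"


def build_messages_request(history: list[dict],
                           model: str = "claude-3-5-sonnet-20241022") -> urllib.request.Request:
    """Build (but do not send) a Messages API request that carries the full history."""
    body = {"model": model, "max_tokens": 1024, "messages": history}
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "x-api-key": os.environ.get("ANTHROPIC_API_KEY", ""),
            "anthropic-version": "2023-06-01",
            "content-type": "application/json",
        },
        method="POST",
    )


# The client, not the API, remembers earlier turns: resend them every time.
history = [
    {"role": "user", "content": "What is your refund policy?"},
    {"role": "assistant", "content": "Refunds are available within 30 days."},
    {"role": "user", "content": "Does that apply to sale items?"},
]
req = build_messages_request(history)
print(len(json.loads(req.data)["messages"]))  # 3

# After sending, append the reply so the next request includes it:
# reply = json.load(urllib.request.urlopen(req))["content"][0]["text"]
# history.append({"role": "assistant", "content": reply})
```

Since the whole history is billed as input on every turn, long conversations get progressively more expensive unless you trim or summarize older turns.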

The Claude 3.5 and Claude 3 families are also available through the Amazon Bedrock and Vertex AI APIs.

Fine-tuning options

Anthropic’s strict information security requirements were a stumbling block when it came to model customization since the tuning data is user-controlled. So far, fine-tuning is only possible for Claude 3 Haiku in Amazon Bedrock.

Pricing

Claude 3.5 Sonnet usage costs $3/M input tokens and $15/M output tokens; Claude 3 Haiku is $0.25/M input tokens and $1.25/M output tokens. The Batches API provides a 50 percent discount. The detailed pricing info is here.

Grok by xAI

Grok, Elon Musk's brainchild, is integrated with X (formerly Twitter) and incorporates information from posts and comments on X into its answers. Grok-1 is open-source; you can download the code from GitHub.

The latest generation of the Grok family includes the commercial Grok-2; Grok-2 mini is to be released soon. Currently, only the beta version is accessible on the xAI console.

According to the website, Grok-2 Beta supports many languages, but the exact number is undisclosed. English, obviously, is among them.

The word grok was coined by sci-fi author Robert A. Heinlein in his 1961 novel Stranger in a Strange Land. To grok is to understand deeply and empathize. In this regard, Grok is a work in progress. A test conducted on six different models showed that Grok is the easiest to manipulate into giving instructions like how to make a bomb or how to seduce a child. It takes some effort and tricky prompting techniques, but it's possible, which means Grok still lacks the safety measures that older models have.

So, if you're looking for a customer service application, this probably shouldn't be at the top of your list. But it's good at coding and math calculations.

Parameters and context window

Grok-1 has 314 billion declared parameters and an 8,000-token context window. Parameters for Grok-2 are unknown so far, but the Grok beta version, which belongs to the Grok-2 family, has a 128,000-token context window.

Grok-2 is multimodal. The model can generate high-resolution images on the X social network and understand input images, even explaining the meaning of a meme. However, it’s not publicly available yet.

To learn more about AI image generation, its applications, and limitations, read our comprehensive explainer.

Access and deployment

Grok-1 can be downloaded and deployed on-premises.

Initially, Grok was used only on the X platform, as a chatbot for premium X users. However, in October 2024, xAI launched its first REST API service for enterprises, currently in public beta. The API is accessible via the xAI Console, which provides tools to create API keys, manage teams, handle billing, compare models, track usage, and access API documentation.

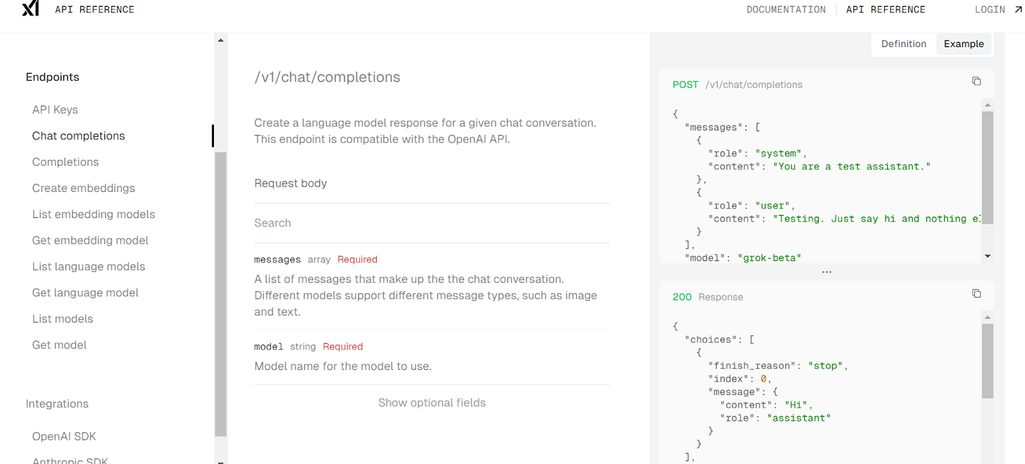

Chat completions example in the xAI Console. Source: xAI

Currently, only the Grok-beta model—offering comparable performance to Grok 2 with enhanced efficiency, speed, and capabilities—is accessible via API on the console.

The xAI API is fully compatible with both the OpenAI SDK and the Anthropic SDK. Once you update the base URL, you can use these SDKs to call Grok models with your xAI API key.
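Compatibility here means the request format stays the same and only the base URL, API key, and model name change. The sketch below illustrates that with the standard library rather than either SDK; `https://api.x.ai/v1` is xAI's documented base URL and `grok-beta` is the model named above, while the provider table, the helper function, and the environment variable names are assumptions made for the example.

```python
import json
import os
import urllib.request

# Hypothetical provider table: OpenAI-compatible APIs differ mainly in these three values.
PROVIDERS = {
    "openai": {"base_url": "https://api.openai.com/v1", "key_env": "OPENAI_API_KEY",
               "model": "gpt-4o-mini"},
    "xai": {"base_url": "https://api.x.ai/v1", "key_env": "XAI_API_KEY",
            "model": "grok-beta"},
}


def build_request(provider: str, prompt: str) -> urllib.request.Request:
    """Build the same chat completions request for whichever provider is configured."""
    cfg = PROVIDERS[provider]
    body = {"model": cfg["model"], "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        f"{cfg['base_url']}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ.get(cfg['key_env'], '')}",
        },
        method="POST",
    )


for name in PROVIDERS:
    print(name, build_request(name, "Explain this meme in one sentence.").full_url)
```

With the official OpenAI Python SDK, the same switch amounts to passing `base_url="https://api.x.ai/v1"` and your xAI key when creating the `OpenAI` client.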

Fine-tuning options

Although Grok-1 is downloadable, the model cannot be fine-tuned.

Pricing

Grok-beta costs $5/M input tokens and $15/M output tokens. You can download the earlier version, Grok-1, for free.

Mistral by Mistral AI

Mistral AI is a French startup founded in 2023 by former Meta employees Timothée Lacroix and Guillaume Lample, together with former DeepMind researcher Arthur Mensch. The company offers a mix of open-source and commercial generative models.

The premium (commercial) product range includes six LLMs: Mistral Large 2 for high-complexity tasks, Codestral for AI coding, the small but fast Ministral 3B and 8B, and more. Mistral Large 2 can be used and modified free of charge for research and non-commercial purposes.

In addition to commercial solutions, there are seven free models, such as Mathstral 7B and the multilingual Mistral NeMo.

Most Mistral models are fluent in five major European languages; Mistral NeMo supports eleven; Mistral Large 2 "speaks" dozens of languages, including French, German, Spanish, Italian, Portuguese, Arabic, Hindi, Russian, Chinese, Japanese, and Korean, along with 80+ programming languages.

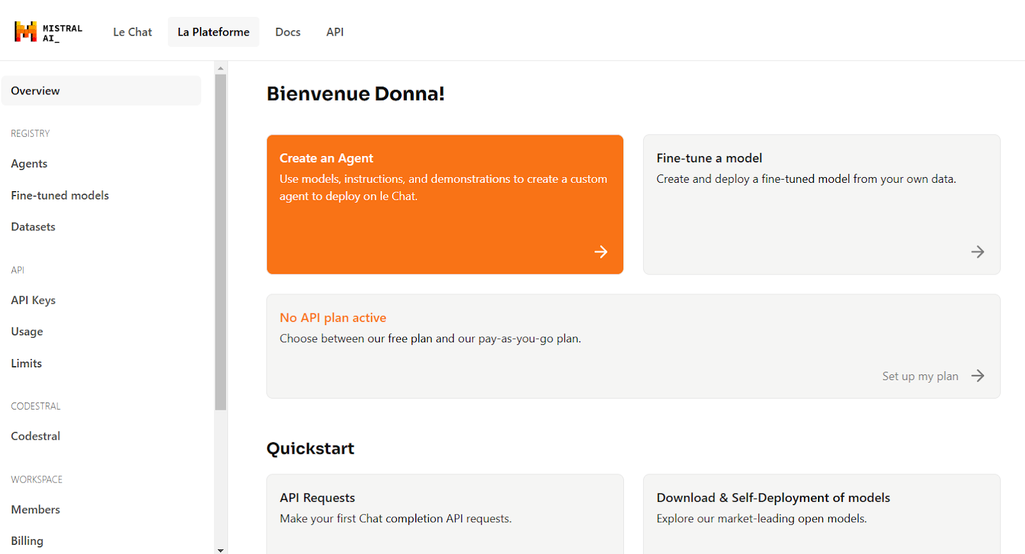

Mistral AI SDK. Source: Mistral AI

Parameters and context window

Mistral Large 2 operates with 123 billion parameters.

Mistral Large 2, Ministral 3B, and Ministral 8B have a context window of 128,000 tokens.

Access and deployment

First, you can access any model on La Plateforme, a developer platform hosted on Mistral's infrastructure, paying as you go for commercial use.

Open-source models can be downloaded from Hugging Face and used on your device or in your private cloud infrastructure, but you'll have to buy a commercial license (to get the price list, contact the team via the Mistral AI website). They are also accessible via cloud partners GCP, AWS, Azure, IBM, Snowflake, NVIDIA, and OUTSCALE, with a pay-as-you-go pricing model.

Mistral Large 2 is available on Azure AI Studio, Amazon Bedrock, Google Cloud Model Garden, IBM watsonx, and Snowflake.

The Mistral AI API includes a set of APIs dedicated to specific tasks: the Chat Completion API for generating responses, the Embeddings API for representing text as vectors (needed for text classification, sentiment analysis, and more), the Files API for uploading files that can be used in various endpoints, etc.

Fine-tuning options

All models are open to experiments and customization. You can use a dedicated fine-tuning API at La Plateforme to create the fine-tuning pipeline. An open-source codebase on GitHub can be customized, too.

While the maximum size for an individual training data file is 512MB, the number of files you can upload is unlimited.
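Since individual files are capped at 512 MB but the number of files is not, a large JSONL training set can simply be split across several files. A minimal sketch follows; the function name is hypothetical, 512 MB is treated as MiB, and each example stays on a single line so that no file ends mid-record.

```python
def shard_jsonl(lines: list[str], max_bytes: int = 512 * 1024 * 1024) -> list[str]:
    """Pack JSONL lines into file contents, each at most max_bytes, never splitting a line."""
    shards, current, size = [], [], 0
    for line in lines:
        record = line.rstrip("\n") + "\n"
        record_size = len(record.encode("utf-8"))
        if record_size > max_bytes:
            raise ValueError("A single example exceeds the file size limit.")
        # Close the current shard if this record would push it over the limit.
        if current and size + record_size > max_bytes:
            shards.append("".join(current))
            current, size = [], 0
        current.append(record)
        size += record_size
    if current:
        shards.append("".join(current))
    return shards


# Demo with a tiny 25-byte limit so the split is visible: five 10-byte records.
demo = shard_jsonl(['{"a": 12}'] * 5, max_bytes=25)
print([len(shard) for shard in demo])  # [20, 20, 10]
```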

Fine-tuning is also available via Amazon Bedrock.

Pricing

Mistral Large 2 costs $2/1M for input tokens and $6/1M for output tokens. A recent price cut makes it one of the most cost-effective frontier models. Ministral 3B is $0.04/1M for input/output tokens.

A free API tier comes with rate limitations, allowing most models, including Mistral Large 2, a maximum of 500K tokens per minute and a monthly cap of 1M tokens.

Choosing an LLM for your business: what else to consider?

As you've probably noticed, almost every well-known LLM manufacturer offers both small and large models, and over the last year, most models have become remarkably uniform in technical capabilities and performance.

“Big cloud-hosted LLMs perform similarly and have more or less the same capabilities,” confirms Glib Zhebrakov, head of the Center of Engineering Excellence at AltexSoft.

Since the capabilities of the models are comparable, Glib suggests taking into account some other factors.

Existing infrastructure. If a business already uses a specific cloud provider (e.g., Azure, AWS, Google Cloud), selecting the LLM service from the same platform ensures easier integration. Besides, keeping all data flow within the same cloud infrastructure without exposure to the public Internet is essential for data-sensitive applications.

Local deployment possibility. This is required not only for security reasons but also for fine-tuning if a provider doesn’t offer cloud-based fine-tuning services.

Streaming or token-by-token decoding allows users to see each word as it's generated in real time. This feature is important for conversational applications where instant feedback enhances the user experience. Many LLMs, including GPT, Claude, and Gemini, have streaming, but smaller LLMs often lack this functionality and deliver responses as a single output.
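Under the hood, streaming APIs typically deliver the answer as a sequence of server-sent events. The sketch below assumes OpenAI's chat completions streaming format, where each `data:` line carries a JSON chunk whose `choices[0].delta` may contain a piece of text and the stream ends with `data: [DONE]`; the sample chunks are hard-coded for illustration instead of coming from a live connection, and other providers use different event shapes.

```python
import json

# Hard-coded sample of a streamed response; a real client reads these lines from HTTP.
SAMPLE_STREAM = [
    'data: {"choices": [{"delta": {"role": "assistant", "content": ""}}]}',
    'data: {"choices": [{"delta": {"content": "Hello"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": ", traveler"}}]}',
    'data: {"choices": [{"delta": {"content": "!"}}]}',
    'data: {"choices": [{"delta": {}}]}',
    "data: [DONE]",
]


def iter_text(lines):
    """Yield text fragments from server-sent event lines as they arrive."""
    for line in lines:
        if not line.startswith("data: "):
            continue  # skip blank separator lines and other event fields
        data = line[len("data: "):]
        if data == "[DONE]":
            break  # end-of-stream marker
        delta = json.loads(data)["choices"][0]["delta"]
        if delta.get("content"):
            yield delta["content"]


for piece in iter_text(SAMPLE_STREAM):
    print(piece, end="", flush=True)  # render each fragment immediately
print()
```

Because `iter_text` is a generator, the interface can display each fragment the moment it is parsed instead of waiting for the complete answer.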

Function calling. If an LLM API supports function calling, it means the model can interact with external tools to perform a range of tasks, from fetching the most recent data (like weather forecasts) to doing math calculations.

For example, if a client tells a company chatbot that they are going to terminate their contract and want to know its status, the chatbot can call functions that retrieve the contract from an internal database and, at the same time, notify a human client manager.
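In practice, the model doesn't run anything itself: it returns the name of a function and JSON arguments, your code executes the call, and the result goes back to the model in a follow-up message. The sketch below simulates that loop for the contract example. The tool-call shape (`tool_calls`, `function.name`, `function.arguments` as a JSON string, and a `tool`-role reply with `tool_call_id`) follows OpenAI's Chat Completions format; the functions `get_contract_status` and `notify_manager`, the contract data, and the model reply are hypothetical.

```python
import json

# Hypothetical internal systems the chatbot is allowed to use.
CONTRACTS = {"C-1042": {"status": "active", "ends": "2025-06-30"}}
notifications = []


def get_contract_status(contract_id: str) -> dict:
    """Look up a contract in the (mock) internal database."""
    return CONTRACTS.get(contract_id, {"status": "not found"})


def notify_manager(contract_id: str, reason: str) -> dict:
    """Alert a human client manager (mocked as an in-memory list)."""
    notifications.append((contract_id, reason))
    return {"notified": True}


TOOLS = {"get_contract_status": get_contract_status, "notify_manager": notify_manager}

# A hard-coded model reply requesting two tool calls, in Chat Completions format.
assistant_message = {
    "role": "assistant",
    "content": None,
    "tool_calls": [
        {"id": "call_1", "type": "function",
         "function": {"name": "get_contract_status",
                      "arguments": '{"contract_id": "C-1042"}'}},
        {"id": "call_2", "type": "function",
         "function": {"name": "notify_manager",
                      "arguments": '{"contract_id": "C-1042", "reason": "client plans to terminate"}'}},
    ],
}


def run_tool_calls(message: dict) -> list[dict]:
    """Execute each requested function and build the 'tool' messages to send back."""
    results = []
    for call in message.get("tool_calls", []):
        func = TOOLS[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"])  # arguments arrive as a JSON string
        results.append({"role": "tool", "tool_call_id": call["id"],
                        "content": json.dumps(func(**args))})
    return results


for tool_message in run_tool_calls(assistant_message):
    print(tool_message["content"])
```

In a real integration, you would also describe these functions (name, description, and JSON Schema parameters) in the request's `tools` field, then send the assistant message and the tool messages back so the model can phrase the final answer.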

Caching. Context caching refers to storing parts of a conversation history to reduce the need for reprocessing repeated or unchanged information. It lowers computational costs and latency.

Batching. If you plan to use an LLM for tasks like bulk data analysis or content generation, it makes sense to choose an API with batching capabilities. Requests are then accumulated and processed in groups, with results delivered later (say, within a day) instead of instantly. Tokens processed in batch mode typically cost half as much as those processed through the standard, real-time API.

The size matters, but…

If you're going to download an LLM, don't be too tempted by the enormous models. Large size is both a blessing and a curse: it requires significant investment in hardware or cloud GPUs. If, for example, you rent infrastructure from AWS, it would cost approximately $1,500-2,000/month.
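A quick way to see why large downloadable models are expensive to host is to estimate the memory needed just to hold their weights: parameters multiplied by bytes per parameter. The sketch below uses standard precisions (2 bytes per parameter for 16-bit weights, 1 byte for 8-bit, 0.5 bytes for 4-bit quantization) and the Llama 3.2 sizes mentioned earlier; it ignores activations, the KV cache, and framework overhead, so real requirements are higher.

```python
# Approximate storage per parameter at common precisions.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billions: float, precision: str = "fp16") -> float:
    """GB needed just for the weights: billions of params x bytes per param (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


for size in (1, 3, 11, 90):  # Llama 3.2 sizes
    row = ", ".join(f"{p}: {weight_memory_gb(size, p):.1f} GB" for p in BYTES_PER_PARAM)
    print(f"Llama 3.2 {size}B -> {row}")
# For example, 90B in fp16 needs about 180 GB for weights alone, more than a
# single 80 GB GPU can hold, before counting activations and the KV cache.
```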

In many cases, a smaller, properly instructed or fine-tuned model is more cost-effective and can outperform a larger LLM.

For instance, in AltexSoft's experience, an insurance company developed a model to predict insurance risks in nursing homes. Eventually, each home received a unique small model tailored to its specific conditions and claim types, as each had specialized needs and risk factors.

The size of the context window also has a flip side.

I always prefer keeping a prompt concise and to the point: adding too much data to one prompt can cause AI hallucinations.

This is where Retrieval-Augmented Generation (RAG) comes in. Domain- and company-specific documents are split into chunks and stored in a vector database that can be searched for contextually matching information. When a prompt arrives, the system retrieves the matching chunks and adds them to the model's context, so the response draws on them. Building a RAG system requires engineers.
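The retrieval step can be illustrated with a toy example. The sketch below ranks document chunks by cosine similarity to the query and pastes the best matches into the prompt. The three-dimensional vectors are hand-made stand-ins for real embeddings, which would come from an embeddings model (such as the embedding APIs mentioned earlier) and live in a vector database; the documents, the query vector, and the helper names are hypothetical.

```python
import math

# Toy "embeddings": real ones come from an embeddings model and have hundreds
# or thousands of dimensions.
CHUNKS = {
    "Refunds are issued within 14 days of cancellation.": [0.9, 0.1, 0.0],
    "Pets are allowed in ground-floor rooms only.": [0.0, 0.2, 0.9],
    "Cancellations are free up to 48 hours before arrival.": [0.8, 0.3, 0.1],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def retrieve(query_vec: list[float], k: int = 2) -> list[str]:
    """Return the k chunks whose vectors are most similar to the query vector."""
    ranked = sorted(CHUNKS, key=lambda text: cosine(query_vec, CHUNKS[text]), reverse=True)
    return ranked[:k]


def build_prompt(question: str, query_vec: list[float]) -> str:
    """Put the retrieved chunks into the prompt so the model answers from them."""
    context = "\n".join(f"- {chunk}" for chunk in retrieve(query_vec))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Pretend [0.85, 0.2, 0.05] is the embedding of this question.
print(build_prompt("Can I get my money back if I cancel?", [0.85, 0.2, 0.05]))
```

Only the two relevant chunks reach the model, which keeps the prompt short, in line with the advice above about not overloading a single prompt.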

Of course, people matter too

Be aware that the most challenging part is not API integration per se but prompt engineering—crafting instructions for the model so that it produces answers relevant to your goals. At this stage, success depends on the skills and experience of an ML engineering team.

If you are considering hiring a specialist for this task, or you are an engineer exploring a new profession, read our article on the role of a prompt engineer, their responsibilities, and the skills required.

If you want to fine-tune a model, consider hiring experts with backgrounds in data science, data engineering, and/or ML engineering. Properly done, it may significantly improve the results. SK Telecom, one of South Korea’s leading companies, customized the Claude model to enhance customer support workflows and improve service experiences by integrating industry-specific knowledge. The deployment of a fine-tuned Claude model boosted positive feedback on agent responses by 73 percent.

LLM integration: when do you need it?

LLM integration has recently become increasingly popular in most industries. From customer support to data analysis—here are the most popular use cases.

Improving customer service

LLM-powered chatbots understand the nuances of human language, providing personalized and empathetic responses to customer inquiries. Models can support more engaging, knowledgeable, and efficient conversations, boosting customer satisfaction and loyalty.

An Australian travel startup partnered with AltexSoft to build a booking platform that automatically combines tours from available flights and hotels. Customers can choose from a variety of vacation packages and tailor their holiday. One of the key features is a search bar integrated with OpenAI API to access the GPT language model.

Thanks to the GPT integration, travelers can describe their ideal holiday in plain words and search, even if they don't know the exact destination. For instance, a user can type "Where to eat a bowl of perfect pho," "I want to find a cozy mountain cabin for a ski trip," or "Take the family on a relaxing beach getaway to a scenic island."

By introducing GPT-powered search, our partner enhanced the customer experience with natural language interactions and increased the platform's conversion rate.

Automating content creation

From drafting marketing copy to generating lengthy reports, LLMs can streamline content creation processes, freeing up your team's time to tackle more complex tasks. For example:

Duolingo, an internationally recognized language-learning app, uses an LLM to create exercises for its lessons.

Multinational e-commerce platform Shopify created a GPT-powered digital assistant that generates and proofreads compelling, SEO-friendly product descriptions.

Enhancing data analysis

LLMs can analyze massive volumes of text data, uncovering valuable insights and patterns that would be challenging or impossible to detect manually. This data-driven intelligence can inform strategic decision-making and optimize business operations.

Irish-American multinational financial services company Stripe employs GPT to understand customer needs and how each business uses the Stripe platform. Many clients are small businesses, like nightclubs, that maintain very simple websites, making it difficult to gather the necessary information. GPT scans these sites and provides summaries that are more informative than those written by humans.

Personalizing user experience

LLMs can tailor recommendations, content, and interactions based on individual preferences and behavior. This level of personalization can lead to higher engagement and improved conversion rates.

Amazon developed the LLM-based framework COSMO to improve its recommendation engine. With COSMO, LLMs analyze customer interaction data to uncover hidden relations, like the connection between slip-resistant shoes and pregnant women. COSMO built a knowledge graph that links products in 18 categories to relevant human contexts (for example, the query "shoes for pregnant women" should result in offering slip-resistant shoes), refining recommendations for various audiences and uses. Customer reviewers ensure these insights are accurate and relevant.

Remember that the above are just the most prominent examples of successful LLM applications; there are many more. The Austrian philosopher Ludwig Wittgenstein wrote: "The limits of my language mean the limits of my world." It might be time to let large language models expand these boundaries for your business.

Olga is a tech journalist at AltexSoft, specializing in travel technologies. With over 25 years of experience in journalism, she began her career writing travel articles for glossy magazines before advancing to editor-in-chief of a specialized media outlet focused on science and travel. Her diverse background also includes roles as a QA specialist and tech writer.
