“AI systems (will) take decisions that have ethical grounds and consequences.”

Prof. Dr. Virginia Dignum from Umeå University

On March 23, 2016, Microsoft released its AI-based chatbot Tay on Twitter. The bot was designed to learn from its interactions with users and generate responses accordingly. But there was a catch. Users started posting offensive tweets at the bot, and Tay began replying in kind. The bot basically turned into a racist, sexist hate machine. Less than a day after the release, Microsoft took Tay offline and issued an official apology for the bot’s controversial tweets.

Tay is an example of the dark side of AI use. One of many, to be honest. The world has seen quite a few cases where AI went wrong.

Though it’s not Darth Vader, AI has its dark side

To prevent potential negative outcomes, companies must regulate their artificial intelligence programming and set a clear governance framework in advance. They need to implement responsible AI.

In this post, we explain what responsible AI is, what its principles are, and which approaches help ensure AI projects are implemented with responsibility in mind.

What is responsible AI and why does it matter?

Responsible AI (sometimes referred to as ethical or trustworthy AI) is a set of principles and normative declarations used to document and regulate how artificial intelligence systems should be developed, deployed, and governed to comply with ethics and laws. In other words, organizations attempting to deploy AI models responsibly first build a framework with pre-defined principles, ethics, and rules to govern AI.

Why is responsibility in AI programming important?

As AI advances, more and more companies use various machine learning (ML) models to automate and improve tasks that used to require human intervention.

An ML model is an algorithm (e.g., Decision Trees, Random Forests, or Neural Networks) that has been trained on data to generate predictions and help a computer system, a human, or their tandem make decisions. The decisions can include anything from telling whether a transaction is fraudulent to accepting/declining loan applications to detecting brain tumors on MRIs and working as a diagnosis support tool for doctors.
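To make "trained on data to generate predictions" concrete, here is a minimal sketch of the simplest possible decision tree: a single rule (a "decision stump") that learns an amount threshold for flagging transactions. The class name, the toy data, and the single `amount` feature are illustrative assumptions; real fraud models use many features and far more data.

```python
from dataclasses import dataclass


@dataclass
class DecisionStump:
    """A one-rule decision tree: flag a transaction when amount > threshold."""
    threshold: float = 0.0

    def fit(self, amounts, labels):
        # Candidate thresholds are the midpoints between neighbouring amounts.
        values = sorted(set(amounts))
        candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]
        best_errors = None
        for t in candidates:
            # Count training examples this threshold would misclassify.
            errors = sum((x > t) != y for x, y in zip(amounts, labels))
            if best_errors is None or errors < best_errors:
                best_errors, self.threshold = errors, t
        return self

    def predict(self, amount):
        return amount > self.threshold


# Toy training data (hypothetical): transaction amounts and fraud labels.
amounts = [12.0, 30.0, 45.0, 60.0, 900.0, 1200.0, 2500.0]
is_fraud = [False, False, False, False, True, True, True]

model = DecisionStump().fit(amounts, is_fraud)
print(model.threshold)       # learned from the data, not hand-coded
print(model.predict(700.0))  # prediction for a new, unseen transaction
```

Note that the rule comes entirely from the training data: feed the same code skewed or unrepresentative examples and it will learn a skewed rule.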

The models and data they are trained on are far from perfect. They may introduce intended and unintended negative outcomes, not only positive ones. Remember Tay? That’s what we’re talking about.

By taking a responsible approach, companies will be able to

- create AI systems that are efficient and compatible with regulations;

- ensure that development processes consider all the ethical, legal, and societal implications of AI;

- track and mitigate bias in AI models;

- build trust in AI;

- prevent or minimize negative effects of AI; and

- get rid of ambiguity about “whose fault it is” if something in AI goes wrong.

All of this will help organizations pursuing AI power prevent potential reputational and financial damage down the road.

Responsible AI isn’t something that exists only in theory. Three major tech giants — Google, Microsoft, and IBM — have called for artificial intelligence to be regulated and built their own governance frameworks and guidelines. Google CEO Sundar Pichai declared the importance of developing international regulatory principles saying, “We need to be clear-eyed about what could go wrong with AI.”

This brings us to the heart and soul of responsible artificial intelligence — its principles.

Key principles of responsible AI

Responsible AI frameworks aim at mitigating or eliminating the risks and dangers machine learning poses. For this, companies should make their models transparent, fair, secure, and reliable, to say the least.

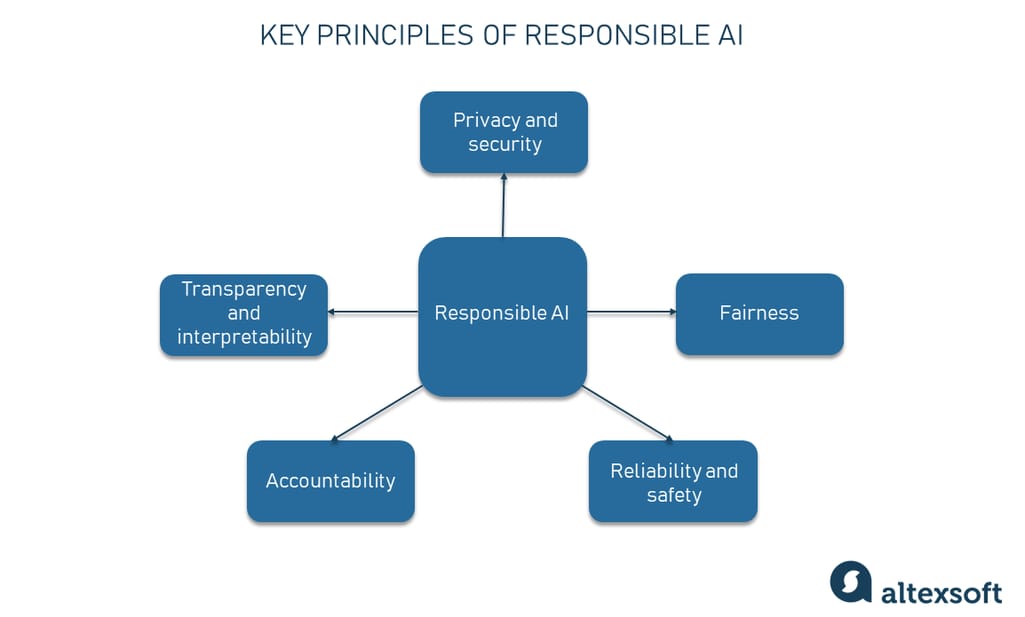

Responsible AI principles

The picture above represents the core principles but their number can vary from one organization to another. Not to mention that the ways they are interpreted and operationalized can vary too. We’ll review the main principles of responsible AI in this section.

Fairness

Principle: AI systems should treat everyone fairly and avoid affecting similarly-situated groups of people in different ways. Simply put, they should be unbiased.

Humans are prone to be biased in their judgments. Computer systems, in theory, have the potential to be fairer when making decisions. But we shouldn’t forget that ML models learn from real-world data which is highly likely to contain biases. This can lead to unfair results as initially equal groups of people may be systematically disadvantaged because of their gender, race, or sexual orientation.

For example, Facebook's ad-serving algorithm was accused of being discriminatory as it reproduced real-world gender disparities when showing job listings, even among equally qualified candidates.

This case proves the necessity to work towards developing systems that are fair and inclusive for all, without favoring anyone or discriminating against them, and without causing harm.

To enable algorithm fairness, you can:

- research biases and their causes in data (e.g., an unequal representation of classes in training data like when the recruitment tool is shown more resumes from men, making women a minority class);

- identify and document what impact the technology may have and how it may behave;

- define your model fairness for different use cases (e.g., for a certain number of age groups); and

- update training and testing data based on the feedback from those who use the model and how they do it.
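As a starting point for researching bias in data, the sketch below checks two things on a toy recruitment dataset: how each group is represented, and how often each group receives a positive decision. The group labels, the data, and the function names are hypothetical. The ratio of the lowest to the highest selection rate is one common fairness metric among many; a ratio below 0.8 is a frequently cited rule of thumb for closer review, not a definitive test.

```python
from collections import Counter, defaultdict


def representation_share(groups):
    """Fraction of the dataset that belongs to each group."""
    counts = Counter(groups)
    return {g: n / len(groups) for g, n in counts.items()}


def selection_rates(groups, decisions):
    """Fraction of positive decisions (1 = shortlisted) within each group."""
    selected, totals = defaultdict(int), defaultdict(int)
    for g, d in zip(groups, decisions):
        totals[g] += 1
        selected[g] += int(d)
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Toy data (hypothetical): 8 resumes from men, 2 from women.
groups = ["men"] * 8 + ["women"] * 2
decisions = [1, 1, 1, 1, 1, 1, 0, 0, 1, 0]

shares = representation_share(groups)
rates = selection_rates(groups, decisions)
ratio = disparate_impact_ratio(rates)
print(shares, rates, round(ratio, 2))
```

Here women make up only 20 percent of the data and are shortlisted at about two thirds of the rate for men, which is the kind of signal that should trigger a closer look at the training data.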

Questions to be answered to ensure fairness: Is your AI fair? Is there any bias in training data? Are those responsible for developing AI systems fair-minded?

Privacy and security

Principle: AI systems should be able to protect private information and resist attacks just like other technology.

As mentioned, ML models learn from training data to make predictions on new data inputs. In many cases, especially in the healthcare industry, training data can be quite sensitive. For example, CT scans and MRIs contain details that identify a patient and are known as protected health information. When using patient data for AI purposes, companies must do additional data preprocessing work such as anonymization and de-identification so as not to violate HIPAA rules (the Health Insurance Portability and Accountability Act, which protects the privacy of health records in the US).


AI systems are required to comply with the privacy laws and regulations that govern data collection, processing, and storage and ensure the protection of personal information. In Europe, data privacy is governed by the General Data Protection Regulation (GDPR).

To ensure privacy and security in AI you should

- develop a data management strategy to collect and handle data responsibly,

- implement access control mechanisms,

- use on-device training where appropriate, and

- advocate for the privacy of ML models.
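One small piece of responsible data handling is stripping direct identifiers before data reaches a training pipeline. The sketch below drops a few identifying fields and replaces the patient ID with a keyed hash (pseudonymization). The field names and the key are hypothetical, and this step alone should not be read as sufficient for HIPAA or GDPR compliance; it only illustrates the idea.

```python
import hashlib
import hmac

# Hypothetical field names that directly identify a person.
DIRECT_IDENTIFIERS = {"name", "address", "phone"}


def pseudonymize(record, secret_key, id_field="patient_id"):
    """Drop direct identifiers and replace the ID with a keyed hash."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    digest = hmac.new(
        secret_key, str(record[id_field]).encode("utf-8"), hashlib.sha256
    ).hexdigest()
    # The same ID and key always give the same pseudonym, so records can
    # still be linked without exposing the original identifier.
    cleaned[id_field] = digest[:16]
    return cleaned


record = {
    "patient_id": "12345",
    "name": "Jane Doe",
    "phone": "555-0100",
    "scan_type": "MRI",
}
safe_record = pseudonymize(record, secret_key=b"replace-with-a-real-secret")
print(safe_record)
```

In practice the key would live in a secrets manager with restricted access, which ties this step to the access control item in the list above.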

Questions to be answered to ensure data privacy and security: Is AI technology violating anyone’s privacy? How do you protect data against attacks and confirm the security of your AI? How will your AI system protect and manage privacy?

Reliability and safety

Principle: AI systems should operate reliably, safely, and consistently under normal circumstances and in unexpected conditions.

Organizations must develop AI systems that provide robust performance and are safe to use while minimizing the negative impact. What will happen if something goes wrong in an AI system? How will the algorithm behave in an unforeseen scenario? AI can be called reliable if it deals with all the “what ifs” and adequately responds to the new situation without doing harm to users.

To ensure the reliability and safety of AI, you may want to

- consider scenarios that are more likely to happen and ways a system may respond to them,

- figure out how a person can make timely adjustments to the system if anything goes wrong, and

- put human safety first.
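One way to handle the "what ifs" in code is to wrap the model in a guard that hands the case to a person whenever the input looks unfamiliar, the model fails, or its confidence is low. The sketch below assumes a hypothetical model function that returns a label and a confidence score; the thresholds and names are illustrative only.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    outcome: str
    reason: str


def decide(amount, score_fn, min_amount=0.0, max_amount=10_000.0,
           min_confidence=0.9):
    """Return an automated decision, or route the case to a human."""
    # Unforeseen input: outside the range the model was built for.
    if not (min_amount <= amount <= max_amount):
        return Decision("human_review", "input outside expected range")
    try:
        label, confidence = score_fn(amount)
    except Exception as exc:
        # A failing model must not silently produce a decision.
        return Decision("human_review", f"model error: {exc}")
    if confidence < min_confidence:
        return Decision("human_review", "low confidence")
    return Decision(label, "automated")


def toy_model(amount):
    """Hypothetical stand-in for a real model: returns (label, confidence)."""
    return ("approve", 0.95) if amount < 1_000 else ("decline", 0.6)


print(decide(200.0, toy_model))    # confident, in range: automated
print(decide(5_000.0, toy_model))  # low confidence: human review
print(decide(-5.0, toy_model))     # unexpected input: human review
```

The design choice here is that the safe default is always a human decision, never a guess by the model.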

Questions to be answered to ensure reliability: Is your AI system reliable and stable? Does it meet performance requirements and behave as intended? Can you govern and monitor the powerful AI technology?

Transparency, interpretability, and explainability

Principle: People should be able to understand how AI systems make decisions, especially when those decisions impact people’s lives.

AI is often a black box rather than a transparent system, meaning it’s not that easy to explain how things work from the inside. After all, while people sometimes can’t provide satisfactory explanations of their own decisions, understanding complex AI systems such as neural networks can be difficult even for machine learning experts.

For example, Apple launched its credit card in August 2019. People could apply for the card online and were automatically provided with credit limits. However, customers soon reported that women were being given much lower credit limits than men. Beyond the apparent unfairness, it wasn’t clear how the algorithm arrived at its outputs. We can assume that the company fell short on the transparency and explainability principles when developing the model.

All of this raises many questions in terms of the interpretability and transparency of ML models. They range from legal concerns about how our data is being processed to ethical and moral dilemmas such as the trolley problem — whether a self-driving car should sacrifice one person to save the lives of many.

While you may have your own vision of how to make AI transparent and explainable, here are a few practices to mull over.

- Decide on the interpretability criteria and add them to a checklist (e.g., which explanations are required and how they will be presented).

- Make clear what kind of data AI systems use, for what purpose, and what factors affect the final result.

- Document the system’s behavior at different stages of development and testing.

- Communicate explanations on how a model works to end-users.

- Convey how to correct the system’s mistakes.
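For simple models, making clear "what factors affect the final result" can be as direct as reporting each feature's contribution to the score. The sketch below does this for a linear scoring model; the weights, feature names, and applicant values are hypothetical. Complex models such as neural networks need dedicated explanation techniques that this example does not cover.

```python
def explain_linear(weights, bias, features):
    """Return the score and per-feature contributions, largest impact first."""
    contributions = {
        name: weights[name] * value for name, value in features.items()
    }
    score = bias + sum(contributions.values())
    ranked = sorted(
        contributions.items(), key=lambda kv: abs(kv[1]), reverse=True
    )
    return score, ranked


# Hypothetical credit-scoring weights and one applicant.
weights = {"income_k": 0.5, "debt_k": -1.0, "years_employed": 2.0}
applicant = {"income_k": 60, "debt_k": 20, "years_employed": 3}

score, ranked = explain_linear(weights, bias=10.0, features=applicant)
print(f"score = {score}")
for name, contribution in ranked:
    print(f"{name}: {contribution:+.1f}")
```

An explanation like this can be shown to an end user ("your debt lowered the score by 20 points"), which also tells them what they could change.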

Questions to be answered to ensure transparency and interpretability: Can you and your team explain both the overall decision-making process and the individual predictions generated by your AI?

Accountability

Principle: People must maintain responsibility for and meaningful control over AI systems.

Last but not least, all stakeholders involved in the development of AI systems are responsible for the ethical implications of their use and misuse. It is important to clearly identify the roles and responsibilities of those who will be held accountable for the organization's compliance with the established AI principles.

The level of responsibility grows with the complexity and autonomy of the AI system. The more autonomous AI is, the higher the degree of accountability of the organization that develops, deploys, or uses that AI system. That’s because the outcomes may be critical in terms of people’s lives and safety.

Questions to be answered to ensure accountability: Do you have robust governance models for your AI system? Have you clearly defined the roles and responsibilities of individuals accountable for AI development?

General approaches to implementing responsible AI

“There is no question in my mind that artificial intelligence needs to be regulated. The question is how best to approach this,” said Sundar Pichai, Google CEO.

While organizations may take different routes to create and implement responsible AI guidelines, there are a few general approaches that can help put principles into practice.

Define what responsible AI means for your company. Make sure the entire organization is on the same page in regard to your responsible AI initiatives. For this, you may want to involve top managers and executives from different departments to collaborate on drafting the principles that will lead the design and use of AI products.

Develop principles and training. Build values that guide your responsible AI solutions and distribute them across the organization. You may also need to train different teams on a company's specific approach to enabling responsibility in an AI product.

Establish human and AI governance. Establish roles and responsibilities, mechanisms for AI performance review, and accountability for outcomes.

Integrate available tools. Evolve your standard machine learning pipelines, including data collection and model building, to the level at which responsible AI principles will be met. For this, you can opt for available methods and tools provided by industry leaders. For example, PwC offers its Responsible AI Toolkit, a suite of services covering frameworks and leading practices, assessments, and technologies. Google and Microsoft also provide tools and frameworks under the umbrella names Explainable AI and Responsible AI Resources, respectively.

Successful cases of responsible AI use

The world has already faced many consequences of AI failures. Luckily, people have this thing called the brain so they are capable of learning from mistakes. With the shift towards the responsible use of artificial intelligence, many companies have succeeded with their projects big time. Let’s take a look at a few real-world examples.

IBM helps a large US employer build a trustworthy AI recruiting tool

A large US corporation has teamed up with IBM to automate its hiring processes while keeping its AI unbiased. In terms of attracting and recruiting new candidates, it was crucial for the employer to drive diversity and inclusion while ensuring fair and trustworthy decisions of its ML models. The company wanted not only to put fairness in place by speeding up the identification of any bias in hiring but also to understand how AI models make decisions. For that, they made use of IBM Watson Studio — an AI monitoring and management tool on Cloud Pak for Data.

An insurance company develops its responsible AI framework

State Farm — a large group of mutual insurance companies throughout the United States — has incorporated AI into its claim handling process along with a responsible AI strategy. The company has established a governance system that assigns accountability for AI and allows for making faster and more informed decisions while creating even better customer and employee experiences. The company's AI model called the Dynamic Vehicle Assessment Model (DVAM) helps predict a total loss with a high level of confidence while enabling transparency regarding the method of processing insurance claims.

H&M Group sets up a Responsible AI team and creates its own practical checklist

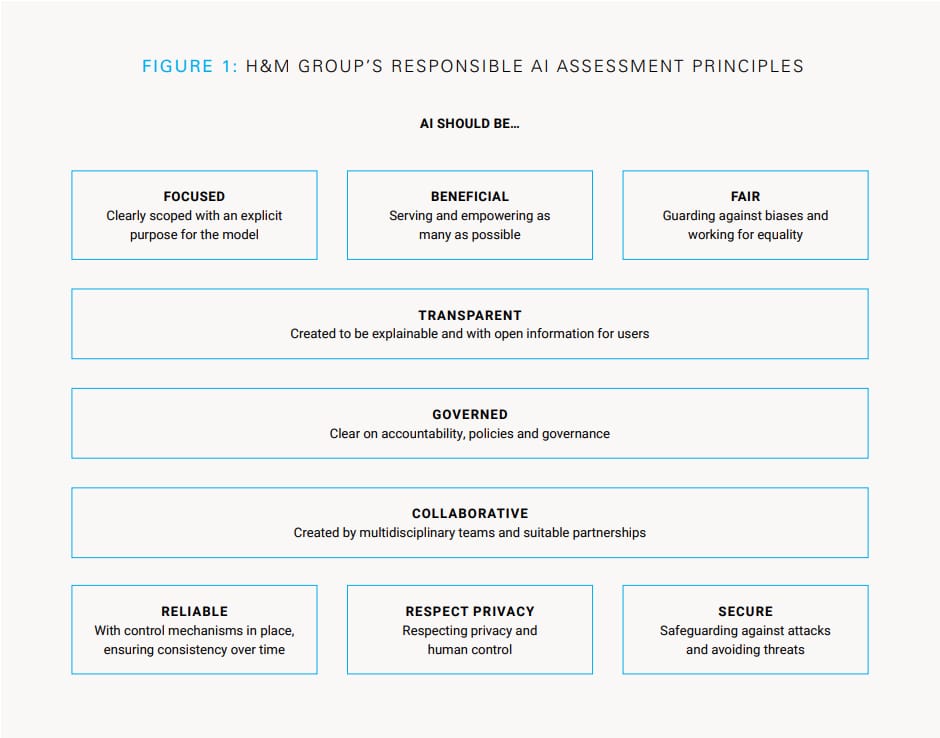

H&M Group — one of the world’s biggest fashion retailers — uses AI capabilities for its sustainability and supply chain optimization goals as well as personalized customer experience. In 2018, the company created a Responsible AI Team and established a practical Checklist that aimed to identify and mitigate potential harms of AI use.

H&M Group’s Responsible AI Assessment Principles. Source: UNICEF

Since then, all of H&M Group’s AI products have been designed in accordance with nine guiding principles: AI should be focused, beneficial, fair, transparent, governed, collaborative, reliable, secure, and respectful of privacy. Every AI initiative at the retail giant goes through multiple stages of assessment, with product teams performing Responsible AI Checklist reviews.

The future of responsible AI

Remember the movie I, Robot? Its robots were based on the ideas of Isaac Asimov, namely the first law of robotics, which states that a robot should not harm a human or, by inaction, allow a human to come to harm. In a situation where a robot could save the life of only one person, it calculated that the chances of saving Will Smith's character were higher than the chances of saving a child caught in the same accident. This is a typical moral dilemma even humans would find difficult to solve. Should a robot prioritize the child's life? Should it save the person who can help society more? Can machines make accurate, bias-aware decisions at all?

These and other questions swirl about in the nascent field of AI.

As of now, when it comes to building technology and using data responsibly and ethically, organizations are at different stages of the journey. So, responsible AI is and will continue to be an active area of research and development with the aim of creating standardized guidelines for organizations operating in different industries.