Large language models (LLMs) are like ultimate know-it-alls, ready to chat about any topic you throw their way—whether it’s unsolved mysteries in physics, top-notch sushi recipes, or advanced guitar techniques. But here’s the catch: Their confidence doesn’t always equal accuracy. LLMs can base their answers on outdated or unreliable sources, mix up similar-sounding terms, or even invent facts when there’s no solid data to back them up.

Retrieval augmented generation (RAG) is a proven way to reduce AI hallucination. In this article, we’ll share how we’ve built RAG systems to rein in AI’s imagination and keep its responses focused on a specific domain.

What is RAG?

Retrieval augmented generation, or RAG, is a method of improving the accuracy of large language models by connecting them to specialized sources of information. Since LLMs learn from large amounts of general, unlabeled text data, they often struggle to generate responses tailored to a specific organization or industry. RAG provides additional context to improve output quality without the need for model fine-tuning or re-training. This makes it a popular approach for developing AI-powered assistants, AI agents, and other conversational AI software.

At the core of RAG is a vector database that stores domain-specific information. Sitting between the chat interface and the LLM, this database holds unstructured data—in our case, texts—in the form of numerical representations called embeddings, which retain the meaning of words and phrases.

“We can think of vectors or embeddings as a form of communication between machines. Through embedding models, we transform complex data into sets of numbers that capture meanings and where similar data points have close vectors,” explains Oleksii Taranets, Solution Architect at AltexSoft, who worked on several RAG projects.

For more information about embedding types and vector database examples, check our article What is a Vector Database? An Illustrative Guide. Meanwhile, let’s see how it fits into the RAG workflow using the example of an AI travel assistant our team built as a proof of concept. For vector storage, our engineers used Elasticsearch.

How a RAG system works

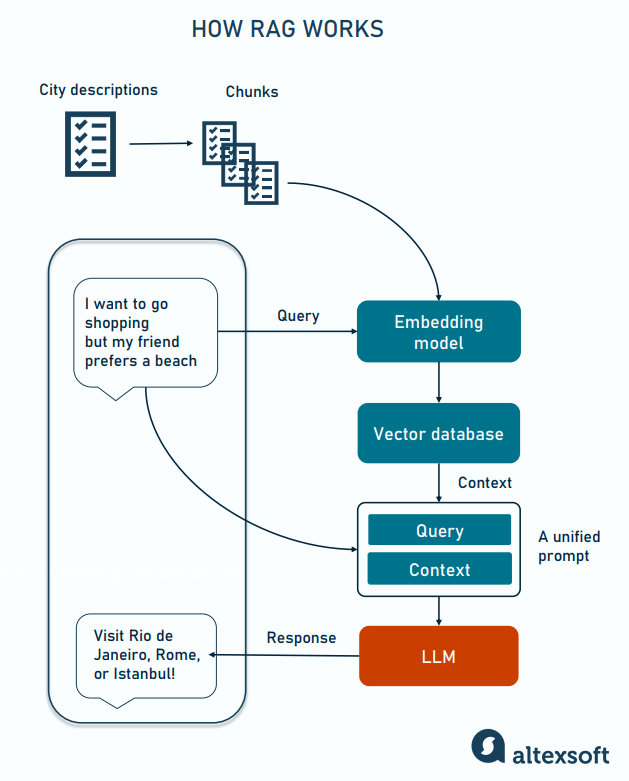

1. A traveler asks a bot to suggest a vacation destination: “I want to go shopping, but my friend would prefer a beach.”

2. The built-in embedding model turns the request into a vector and compares it with the information on popular destinations from the vector database. Vectors stored in the database are created by the same embedding model from external documents, in our case descriptions of the most visited cities.

3. The system retrieves the content based on the similarity to the user’s query and passes both query and search results to the LLM as a single prompt.

4. The LLM generates an answer based on the context provided.

5. The user gets a list of cities (for example, Rio de Janeiro, Rome, and Istanbul) that offer both beach and shopping experiences.
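To make the flow above more concrete, here is a minimal sketch of the retrieval and prompt-assembly steps. It is not the code of our travel assistant: the bag-of-words `embed` function is a toy stand-in for a real embedding model, the in-memory `INDEX` dictionary replaces the vector database, and the city descriptions are made up for illustration.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

# Hypothetical destination descriptions (illustrative only).
DOCS = {
    "Rio de Janeiro": "Rio de Janeiro has a famous beach and lively shopping streets.",
    "Zermatt": "Zermatt is a mountain village known for skiing and hiking.",
    "Istanbul": "Istanbul offers huge bazaars for shopping and a beach nearby.",
}

# The "vector database": documents are embedded once, with the same model as queries.
INDEX = {city: embed(text) for city, text in DOCS.items()}

def retrieve(query: str, k: int = 2) -> list[str]:
    """Return the k cities whose descriptions are most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(INDEX, key=lambda city: cosine(query_vector, INDEX[city]), reverse=True)
    return ranked[:k]

def build_prompt(query: str, k: int = 2) -> str:
    """Combine the retrieved context and the user query into a single prompt."""
    context = "\n".join(DOCS[city] for city in retrieve(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"
```

Calling `build_prompt("I want to go shopping, but my friend would prefer a beach.")` produces the text that would be sent to the LLM in step 3. A production system would swap in a real embedding model and a vector store such as Elasticsearch.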

Learn about our hands-on experience building an AI travel agent and fine-tuning its responses with RAG.

While the process seems quite straightforward, the efficiency of the search and the accuracy of answers will largely depend on the amount of content each vector in the database contains—that is, on the chunks of text it represents.

Chunking: why size matters

Chunking is the process of splitting large parts of the text into smaller pieces before converting them into vectors with embedding models. How large should these pieces or chunks be? This is a crucial question to be answered since it impacts the AI chatbot’s performance and ability to understand what users want.

But first, let’s clarify why we need to chunk large documents and turn them into vectors at all.

Until recently, the most obvious reason was the context window of the chosen LLM, that is, the maximum number of tokens the model can process at a time. (A token is a unit of text; 1,000 tokens equal roughly 750 English words.) So, if your document doesn’t fit into the limit, you have no choice but to break it into parts.

You can say, “Hold on, but modern LLMs can digest a lot!” For example, GPT-4 Turbo and GPT-4.5 accept 128,000 tokens in a single prompt, which is about 300 pages of text. If that’s not enough, Gemini 1.5 Pro supports two million tokens, which is about 16 average-sized English books. Why not just put all the data into one prompt? “Technically, you can do it,” Oleksii agrees. “But you will quickly run into three issues relating to cost, performance, and quality.”

Let’s again illustrate this using an example of our internal project — an AI-based assistant for employees.

Cost. Imagine we have 100 pages of internal corporate documentation, each holding around 400 tokens. We want to use it as a source of information for an LLM — in our case, GPT-4 Turbo. With its context window of 128,000 tokens, the model readily accepts an employee’s query (for example, “How many days of paid sick leave can I take per year?”) plus all corporate policies, around 40,000 tokens, in one shot.

Now let’s make some calculations. The OpenAI platform charges $10 per one million input tokens processed by GPT-4 Turbo. So, each request will cost the organization $0.40 plus a fraction of a cent for the response (though output pricing is three times higher, the answer will typically contain an order of magnitude less text). Scale it to thousands of questions daily, and it’ll be hell to pay. But if you cut the prompt to the equivalent of a page or even less, the price will drop about 100 times.
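The arithmetic can be checked with a few lines of Python. The price, page count, and tokens per page come from the example above; the figure of 5,000 queries per day is only an assumption to show how the difference scales.

```python
PRICE_PER_MILLION_INPUT_TOKENS = 10.00  # USD, the GPT-4 Turbo rate quoted above
TOKENS_PER_PAGE = 400
PAGES = 100
QUERIES_PER_DAY = 5_000  # assumption for illustration only

def input_cost(tokens: int) -> float:
    """Cost in USD of sending `tokens` input tokens in one request."""
    return tokens / 1_000_000 * PRICE_PER_MILLION_INPUT_TOKENS

full_prompt = input_cost(PAGES * TOKENS_PER_PAGE)  # all documentation in every request
one_page = input_cost(TOKENS_PER_PAGE)             # only the relevant page

print(f"Whole documentation per request: ${full_prompt:.2f}")
print(f"One page per request:            ${one_page:.3f}")
print(f"Daily difference at {QUERIES_PER_DAY} queries: "
      f"${(full_prompt - one_page) * QUERIES_PER_DAY:,.2f}")
```

Output tokens are left out of this estimate because, as noted above, they add only a fraction of a cent per answer.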

Performance. Obviously, the LLM will take more time to process all 100 pages of corporate documentation than just a couple of pages related to sick leave policies.

Quality. Oleksii warns that quality will degrade even more than performance: “The LLM loses focus, spreading attention across too much data, which results in increased hallucination.”

So, chunking makes a lot of sense — but only if done right. If pieces of text are too small, they can lose the context. If they are too large, the LLM will struggle with irrelevant information. “Researchers found that chunk size between 256 and 512 tokens works the best,” Oleksii says. “It’s about 1,000 to 2,000 characters. However, you can’t just split text into random pieces. Each chunk must be a meaningful unit preserving a sort of complete idea. It will increase the chance of finding data semantically close to a query.”

How exactly can you do chunking for RAG? There are several popular ways we’ll look into below.

Chunking strategies

Chunking strategies differ by complexity and can be based on

- length (number of characters),

- text structure (sentences, paragraphs, etc.),

- document structure (for example, using tags in HTML document or array elements in JSON), and

- semantic meaning.

When developing the employee assistant, we tested three strategies using text splitters from LangChain — a toolkit for building GenAI applications.

Character/fixed-size chunking

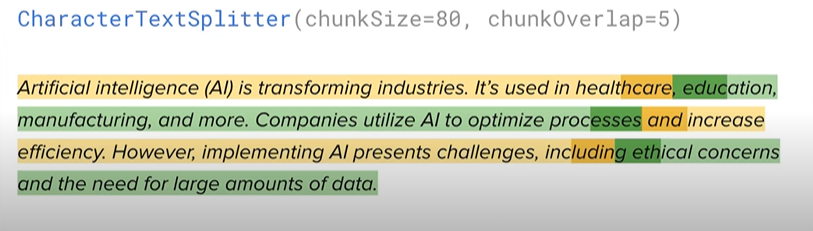

This type of chunking is the simplest and most straightforward way to prepare our document for RAG. A splitter uses two parameters — the length of a chunk (for example, 80 characters) and the chunk overlap (for example, 5 characters).

The example of fixed-sized chunking applied to short text

The chunk overlap is a piece of text shared between two neighboring chunks. In our case, the last five characters of the previous chunk are repeated as the first five characters of the next one. This reduces the risk of losing information at the boundaries and preserves connections between chunks.
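As a rough illustration, fixed-size chunking with overlap fits in a few lines of plain Python. This is a simplified sketch rather than the LangChain splitter we used; the function name and defaults are ours.

```python
def fixed_size_chunks(text: str, chunk_size: int = 80, overlap: int = 5) -> list[str]:
    """Split text into chunks of at most chunk_size characters.

    Each chunk starts `overlap` characters before the previous one ends,
    so the boundary text appears in both neighbors.
    """
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must be non-negative and smaller than chunk_size")
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks
```

Note that the function cuts purely by position, which is exactly why this strategy can break words and sentences in the middle.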

Character chunking is intuitive, easy to implement, and cost-efficient as it doesn’t require computational resources to perform complex text analysis. On the downside, it totally ignores the text structure and meaning, so the RAG system can’t capture semantic relationships accurately.

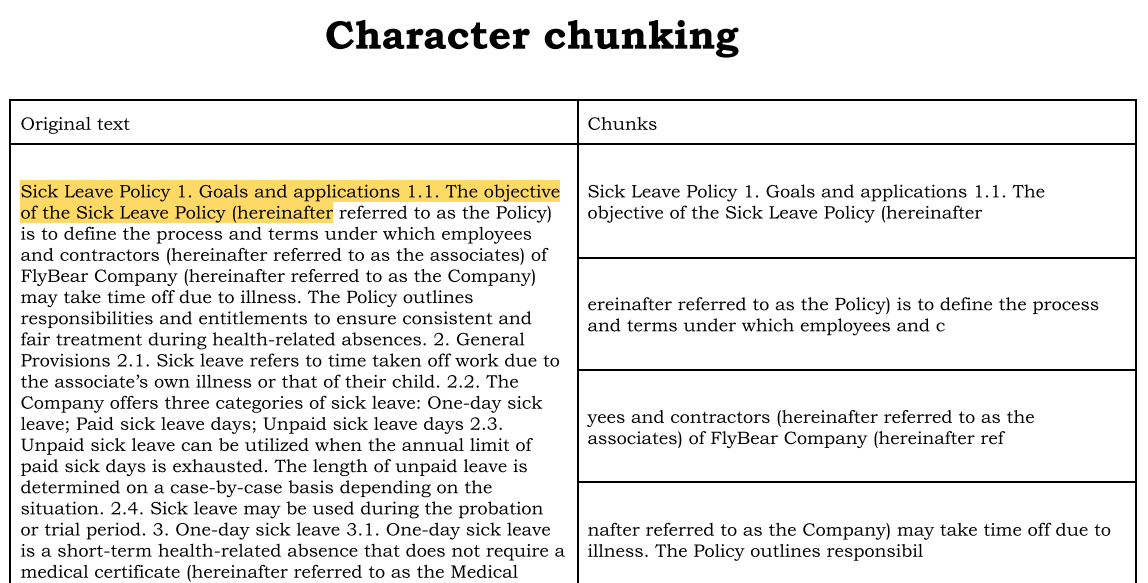

The employee assistant with character chunking. In the example below, you can see how character chunking applied to the sick leave policy document cuts the text into short, random pieces and even breaks up words.

Character chunking applied to the sick leave policy document

If asked, “How many days of sick leave can I have in a year?” the assistant would answer, “The annual number of sick leave days is 20.” This is not complete information: The response doesn’t consider one-day sick leaves without a medical certification and unpaid sick leaves. The assistant fails to find all relevant chunks and combine them into a single answer.

Since this strategy doesn’t fit our goal of building a reliable chatbot, we proceed with a smarter way to break down our data — recursive chunking.

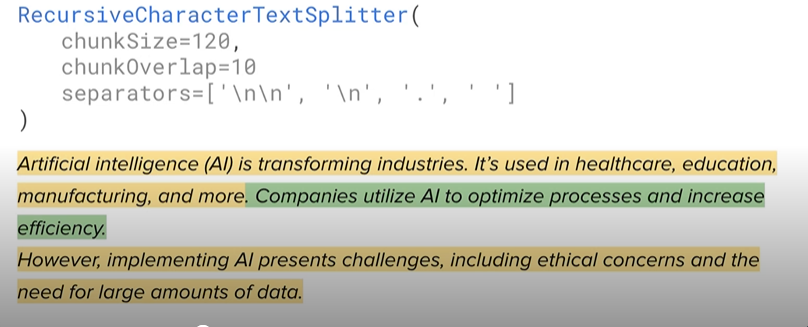

Recursive character chunking

With this approach, a splitter relies not only on the number of characters but also on specific separators to ensure that each chunk preserves meaningful context. To test the technique, we set a chunk size of 120 characters, an overlap of 10 characters, and four separators:

- a paragraph break (\n\n),

- a line break (\n),

- a sentence break (a period), and

- a word break (a space).

The example of recursive character chunking applied to short text

How does the splitter work? It starts with the largest separator, which is a paragraph break, and checks whether the text before it fits within the chunk size limit.

In the example above, we have one paragraph that is longer than 120 characters. So, the algorithm moves on to the second-largest separator, a line break, and splits the paragraph at this point. The chunk after the line break falls within the required length, while the first piece is still too long. The algorithm goes down to the next separator, a period, and again divides the remaining content into two parts. Finally, we have three chunks, each no longer than 120 characters.
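The logic described above can be sketched as a short recursive function. This is a simplified illustration, not LangChain’s implementation: it leaves out the chunk overlap, and splitting on `". "` drops the period at chunk boundaries.

```python
SEPARATORS = ("\n\n", "\n", ". ", " ")  # paragraph, line, sentence, word

def recursive_split(text: str, chunk_size: int = 120,
                    separators: tuple[str, ...] = SEPARATORS) -> list[str]:
    """Split text on the largest separator first, going finer only when needed."""
    if len(text) <= chunk_size:
        return [text] if text.strip() else []
    if not separators:
        # No separator left: fall back to a hard cut by length.
        return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

    sep, finer = separators[0], separators[1:]
    chunks: list[str] = []
    current = ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= chunk_size:
            current = candidate  # the piece still fits, keep growing the chunk
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(piece) <= chunk_size:
            current = piece
        else:
            # The piece alone is too long: retry with the next, finer separator.
            chunks.extend(recursive_split(piece, chunk_size, finer))
    if current:
        chunks.append(current)
    return chunks
```

For a paragraph made of a long first line with two sentences and a short second line, the function splits at the line break first and then at the sentence break, giving three chunks as in the walkthrough above.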

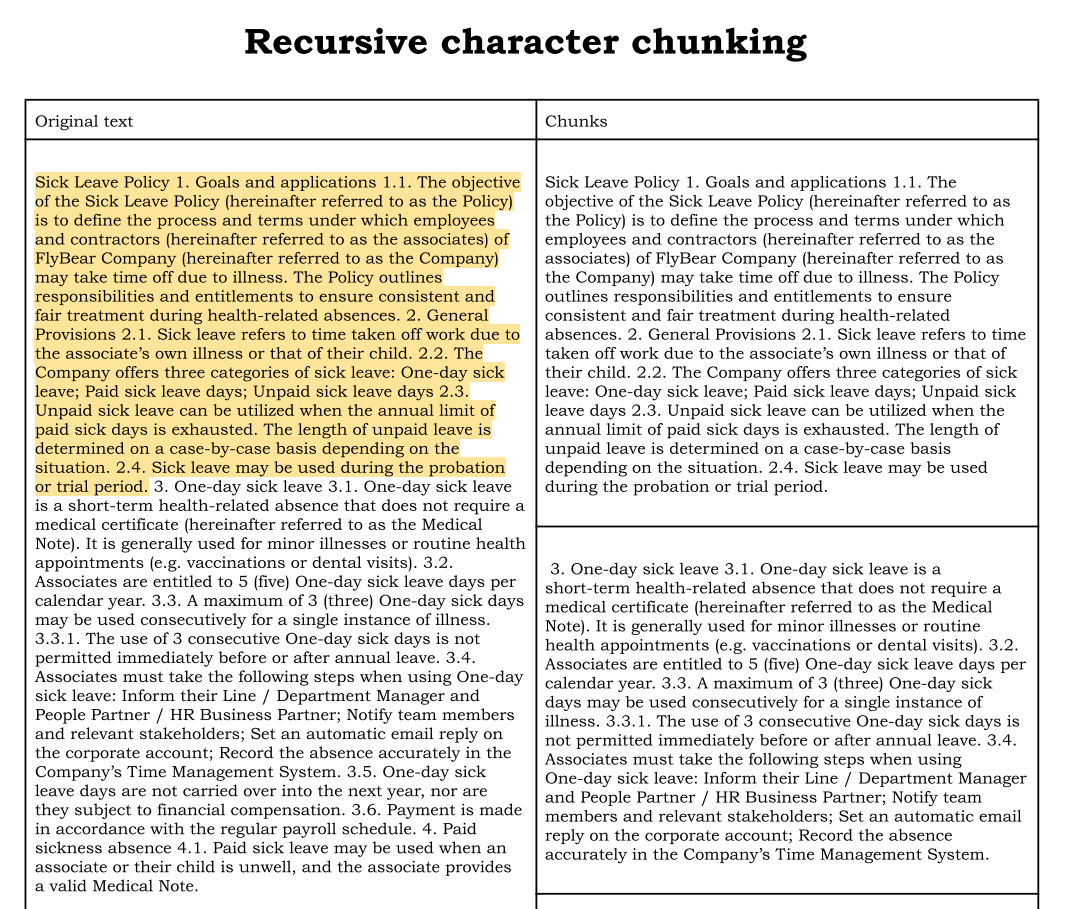

The employee assistant with recursive character chunking. To split our policy documentation, we increased the chunk size — and got meaningful pieces of content, without abrupt breaks in flow.

Recursive character chunking applied to the sick leave policy document

We asked the assistant the same question: “How many paid days of sick leave can I have in a year?” and got a more detailed response: “You can take up to 20 paid sick leave days in a year. Additionally, there are options for one-day sick leave and unpaid sick leave.”

Though more advanced than simple character chunking, the recursive type still considers text structure, not its meaning. In other words, the splitter doesn’t take into account what the piece of content is about. This level of understanding becomes possible with semantic chunking.

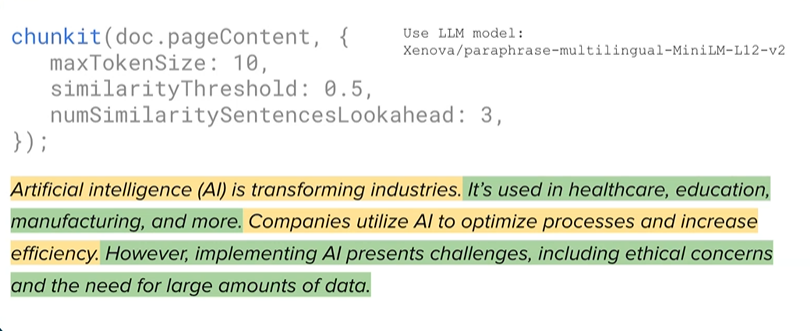

Semantic chunking

As the name suggests, semantic chunking goes beyond pure structure and considers meaning when forming chunks, allowing an AI bot to produce more accurate answers. Unlike simpler methods, this type of splitting involves machine learning.

The example of semantic chunking applied to short text

Let’s see how the content splitter with an embedding model under the hood works step by step:

- The system forms initial chunks that can’t exceed the pre-defined size — in our case, 10 tokens, which is about 7-8 English words.

- The embedding model (we used a free sentence transformer) maps initial chunks into vectors.

- The system calculates the similarity between the current chunk and the adjacent one (they can be parts of the same sentence) and compares it against the similarity threshold. (We set it at a moderate level of 0.5, with 1 meaning that vectors are identical and 0 signaling that they have nothing in common.)

- If the threshold is exceeded, two units are merged into a single chunk. If not, they remain separate.

- In addition, the lookahead parameter tells the system to inspect the three chunks following the current one and join them if the similarity is above 0.5.
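The merging logic from the steps above can be sketched as follows. This is a simplified illustration under several assumptions: `toy_embed` is a bag-of-words stand-in for the sentence transformer, each new unit is compared with the chunk built so far, and the lookahead parameter is not implemented.

```python
import math
import re
from collections import Counter

def toy_embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity: 1 for identical directions, 0 for nothing in common."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def semantic_chunks(units: list[str], threshold: float = 0.5, embed=toy_embed) -> list[str]:
    """Merge each initial unit into the previous chunk if they are similar enough."""
    chunks: list[str] = []
    for unit in units:
        if chunks and cosine(embed(chunks[-1]), embed(unit)) > threshold:
            chunks[-1] = chunks[-1] + " " + unit  # similar: extend the current chunk
        else:
            chunks.append(unit)                   # different topic: start a new chunk
    return chunks
```

With a real embedding model passed as `embed`, units that are worded differently but mean the same thing would also be merged, which the word-count toy cannot do.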

When we tested the splitter on the short text presented above, it neatly cut it into chunks that coincided with sentences.

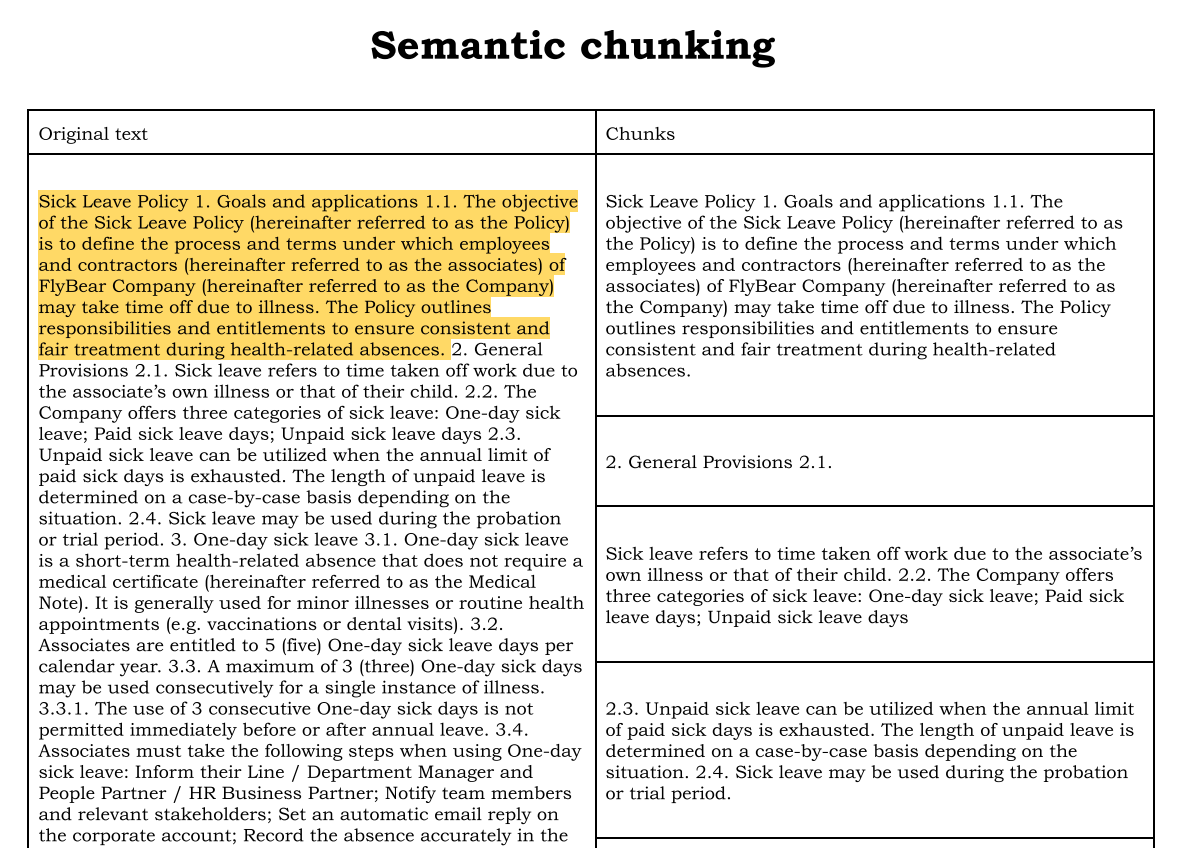

The employee assistant with semantic chunking. We experimented with different parameters for the sick leave policy document, and, ultimately, the text was divided into logical units.

Semantic chunking applied to the sick leave policy document

Now, the assistant found the numbers of both one-day sick leaves (5) and paid sick leaves, which require medical certification (20). Yet, it was still unable to handle a more complex task: calculating the total number of paid sick leave days (25). At this point, we faced the limitations of a basic RAG system, or naive RAG.

Naive RAG limitations

A naive RAG relies on a single external source of information and directly passes the data to the large language model. Even when powered with semantic chunking, it fails to manage certain requests correctly. Specifically, it struggles with the following tasks.

Summarization. If you ask the assistant to summarize the document or highlight three key points of some text, the naive RAG wouldn’t be able to produce an overview since it works with pieces of text and doesn’t embrace the broader picture.

Comparison. The naive RAG focuses on the similarity between chunks — not on the differences. So, if we take our AI travel assistant as an example, it can only suggest cities that closely match your request and are alike in some sense. For example, both London and Paris offer great shopping opportunities. But if you want more details and ask the system to compare the two cities, you won’t get a proper answer.

Extended dialogues. With a naive RAG in the middle, the LLM misses the context of previous interactions. This lack of persistent memory across the conversation leads to repetitive answers and breaks the natural flow of the dialogue. The issue becomes especially obvious during extended communication, with many clarifying questions.

Is a naive RAG absolutely incapable and useless? Definitely not. It’s a resource-efficient and sufficient technique for a simple bot designed to answer FAQs, as long as the bot doesn’t require sources of information other than, for example, corporate documentation, and isn’t supposed to handle prolonged conversations. However, for more complex tasks, like maintaining long dialogues or processing multi-component questions, the naive approach is not enough. For better results, we need to go a step further and master a more advanced technology, agentic RAG.

Agentic RAG

Agentic RAG takes advantage of AI agents — autonomous tools capable of reasoning, making decisions, and taking actions on behalf of a user. It combines the power of an LLM with access to functionality and data sources beyond the internal knowledge base.

Read our article about agentic AI for a deep dive into its capabilities and real-world use cases.

Unlike naive RAG, agentic systems:

- have short- and long-term memory enabling them to inform next workflows with the previous context;

- apply different types of embeddings depending on the data;

- combine vector search with traditional keyword search;

- perform web searches, use a calculator, query external databases, examine emails and chats;

- assess the retrieved content, prioritize the most valuable information, and remove irrelevant data before passing the prompt to the LLM;

- and more.

“Multiple agents can be applied to different stages of the RAG,” Oleksii says. “For instance, one agent preprocesses a query to refine and contextualize it before it enters the RAG system. During retrieval, another agent interacts with data to enhance the relevance of the result while in the post-processing phase, the third agent ensures that the final output meets the user needs.”

Agent types

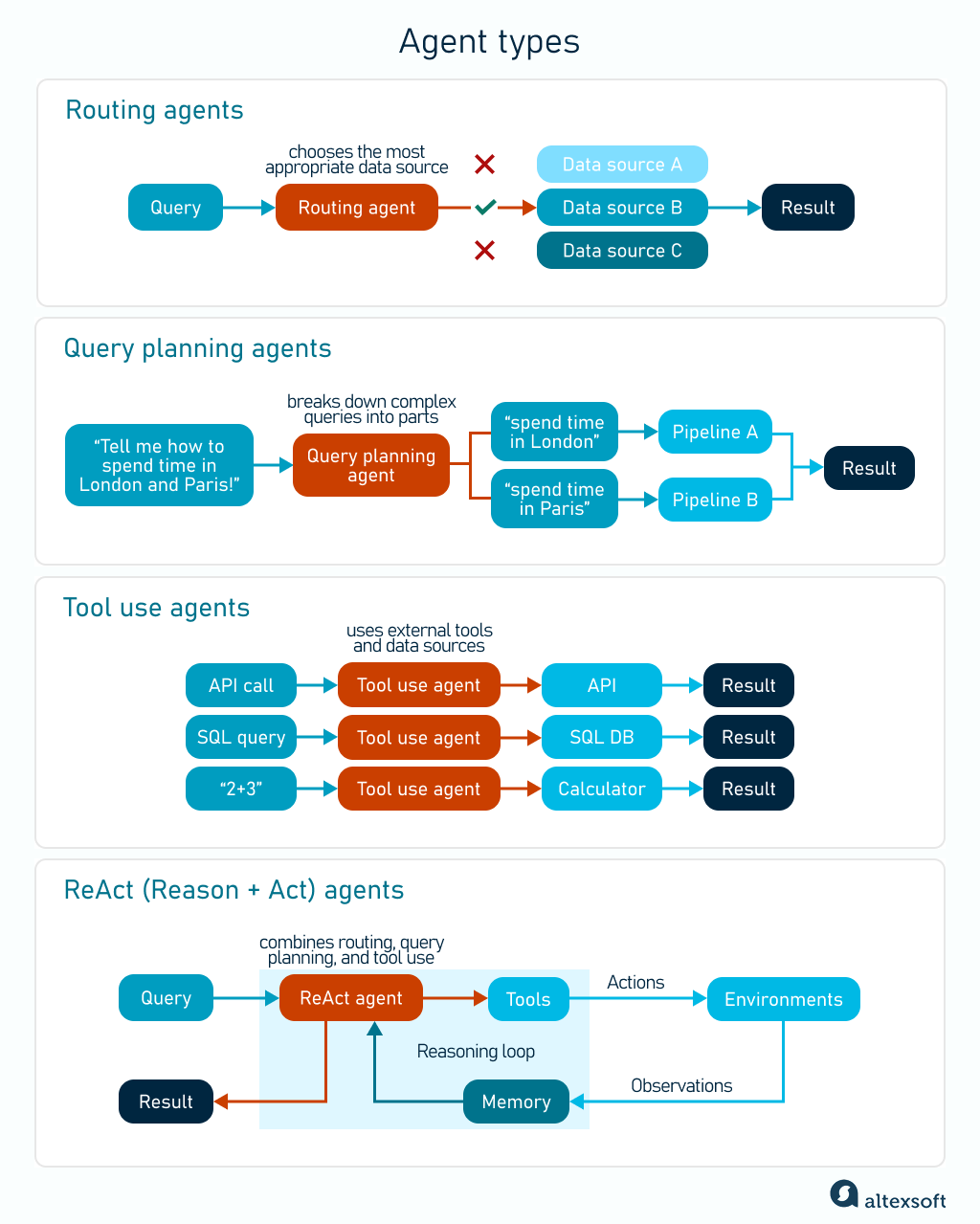

Depending on the implementation, the agentic RAG system can be relatively simple and low-cost or complex and resource-intensive. Below are examples of common agents that can be standalone instruments or part of a larger, multi-agent infrastructure.

Agent types

Routing agents analyze the input prompt and decide on a data source to query. In multi-agent systems, they select whether to trigger a straightforward request-response pipeline or whether the right answer involves a more complex summarization flow.

Query planning agents break down complex queries into parts to be processed in parallel. This can be helpful when a user wants to compare activities available in different cities (“Tell me how to spend time in London and Paris!”). The agent will divide the prompt into two parts (“spend time in London” and “spend time in Paris”), submit it to two different pipelines, and then merge the results into a single response.
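A minimal sketch of this split, run in parallel, and merge pattern might look like the code below. The `plan` and `run_pipeline` functions are hypothetical placeholders: in a real agent, an LLM would decompose the query, and each sub-query would go through a full retrieve-and-generate pipeline.

```python
from concurrent.futures import ThreadPoolExecutor

def plan(topic: str, cities: list[str]) -> list[str]:
    """Break one comparative query into independent sub-queries, one per city."""
    return [f"{topic} in {city}" for city in cities]

def run_pipeline(sub_query: str) -> str:
    """Placeholder for a RAG pipeline that answers a single sub-query."""
    return f"[answer for: {sub_query}]"

def answer(topic: str, cities: list[str]) -> str:
    """Process all sub-queries in parallel and merge the partial answers."""
    sub_queries = plan(topic, cities)
    with ThreadPoolExecutor() as pool:
        partial_answers = list(pool.map(run_pipeline, sub_queries))  # keeps input order
    return "\n".join(partial_answers)

print(answer("spend time", ["London", "Paris"]))
```

Threads suit this case because real pipelines spend most of their time waiting on network calls to the vector store and the LLM.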

Tool use agents interact with external functionality and data sources to complete a particular task. For example, when building our AI travel assistant, our team integrated the LLM with a hotel API. “Now, I can ask the assistant to find the best hotel for my vacation, and it will collect all the necessary data and show me the most valid options,” Oleksii explains. “In the same way, we can use agents for database queries, accessing SDK tools, and more.”

ReAct (Reason + Act) agents combine routing, query planning, and tool use, iterating a complex query through a reasoning and action loop. Does this sound too complex? Let’s explain it again using an example of our internal employee assistant. This time, we’ve enhanced it with a ReAct agent, asking it to calculate the total number of paid sick leave days — something that a naive RAG failed to do. Here’s how ReAct solves the problem.

- Reasoning. The agent decides on the best way to solve the problem. It breaks down the complex question into sub-tasks: “Find the number of one-day sick leaves”, “Find the number of sick leave days requiring medical certification,” and “Sum up the two numbers.”

- Action. The agent queries a vector database and retrieves chunks relevant to the first sub-task.

- Analysis. The agent assesses the retrieved information and incorporates it into the next reasoning step.

In the next loop, it processes the second sub-question, again accessing the vector database and analyzing the output. The system iterates the query until a satisfying result is produced. One of the final loops involves selecting a calculator as a required tool to complete the task.

In our case, we finally received the expected answer: “The employee can take a maximum of 25 sick leave days, including 5 one-day sick leaves and 20 paid sick leave days requiring medical certification.”
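The loop can be illustrated with a heavily simplified sketch. It is not our ReAct agent: the plan is hard-coded instead of being produced by an LLM, keyword overlap replaces vector search, and the two policy chunks are hypothetical sentences built from the numbers in this example.

```python
import re

# Hypothetical policy chunks standing in for the vector database.
CHUNKS = [
    "Employees may take up to 5 one-day sick leaves per year without a medical certificate.",
    "Employees may take up to 20 paid sick leave days per year with a medical certificate.",
]

def retrieve(sub_task: str) -> str:
    """Action: return the chunk that shares the most words with the sub-task."""
    words = set(sub_task.lower().split())
    return max(CHUNKS, key=lambda chunk: len(words & set(chunk.lower().split())))

def extract_number(chunk: str) -> int:
    """Analysis: pull the first integer out of the retrieved chunk."""
    return int(re.search(r"\d+", chunk).group())

def calculator(numbers: list[int]) -> int:
    """Tool: add up the numbers collected in the previous loops."""
    return sum(numbers)

def react(sub_tasks: list[str]) -> int:
    observations = []
    for sub_task in sub_tasks:             # one reason/act/analyze loop per sub-task
        chunk = retrieve(sub_task)
        observations.append(extract_number(chunk))
    return calculator(observations)        # final loop: use the calculator tool

# Reasoning step, hard-coded here; a real agent would ask the LLM to produce it.
PLAN = [
    "one-day sick leaves without certificate",
    "paid sick leave days with a medical certificate",
]
total = react(PLAN)
```

In a real agent, the LLM would also decide after each loop whether it has enough information to stop or needs another retrieval or tool call.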

The continuous reasoning and action loop enhances the model’s ability to handle complex, multistep tasks while reducing errors and hallucination.

Agentic RAG implementation options

Building an agentic RAG from scratch can be time-consuming and requires significant expertise. The best way to test this novel approach is to use the LLM’s function-calling capabilities or employ one of the existing agent frameworks.

Function calling allows a large language model to access external tools and perform tasks beyond text generation. The feature has become part of many popular LLM APIs, enabling agentic workflows. This includes OpenAI’s GPT-3.5 and newer versions, Anthropic’s Claude, Google’s Gemini, Meta’s open-source Llama, and others.
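On the application side, function calling usually comes down to two things: describing a tool to the model and executing the call the model asks for. The sketch below shows both without contacting any real API. The `find_hotels` function, the hotel catalog, and the shape of `tool_call` are assumptions for illustration; the tool description follows the JSON Schema style that several providers use, but exact field names differ between APIs.

```python
import json

def find_hotels(city: str, max_price: int) -> list[str]:
    """Hypothetical tool: return hotels in a city under a nightly price limit."""
    catalog = {"Rome": [("Hotel Aurora", 120), ("Casa Bella", 210)]}  # made-up data
    return [name for name, price in catalog.get(city, []) if price <= max_price]

# Description sent to the model so it knows when and how to request the tool.
TOOL_SPEC = {
    "name": "find_hotels",
    "description": "Find hotels in a city under a nightly price limit.",
    "parameters": {
        "type": "object",
        "properties": {
            "city": {"type": "string"},
            "max_price": {"type": "integer"},
        },
        "required": ["city", "max_price"],
    },
}

TOOLS = {"find_hotels": find_hotels}

def dispatch(tool_call: dict) -> str:
    """Run the tool the model requested and return the result as a JSON string."""
    func = TOOLS[tool_call["name"]]
    arguments = json.loads(tool_call["arguments"])  # models return arguments as JSON text
    return json.dumps(func(**arguments))

# Simulated model output asking for a tool call.
result = dispatch({"name": "find_hotels", "arguments": '{"city": "Rome", "max_price": 150}'})
```

The string returned by `dispatch` would then be sent back to the model, which uses it to write the final answer for the user.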

Agent frameworks facilitate building LLM-based applications. They come with a wide range of ready-to-use templates and building blocks for RAG flows. Our team built naive RAGs with LangChain and more advanced agentic RAGs with LlamaIndex, widely used by global enterprises. However, there are other alternatives to consider, such as CrewAI, which focuses on multi-agent flows, or Swarm, managed by OpenAI.

Whichever path you choose, the final result will largely depend on the quality of the data you have and the expertise with technologies and concepts related to RAG. This includes but is not limited to indexing techniques, vector databases, hybrid search, prompt engineering, LLM context and memory management, and model fine-tuning. What is even more important, RAG building involves a lot of experimentation and constant learning — since new techniques appear all the time. Have some fun and play with different strategies, tools, and parameters to achieve the best results!