As often happens, when one approach doesn’t solve a problem, you try a different one. When that approach doesn’t work either, it may be a good idea to combine the best parts of both. That’s often the case in technology, and machine learning is no exception. You’ve probably heard of the two main ML techniques: supervised and unsupervised learning. Combining the two gave rise to the happy medium known as semi-supervised learning.

In this article, we’ll define the term, explain how this ML process works, and show which issues of the other two ML types it solves. While we're at it, we'll also review a few real-life semi-supervised learning examples.

What is semi-supervised learning? Semi-supervised learning vs supervised learning vs unsupervised learning

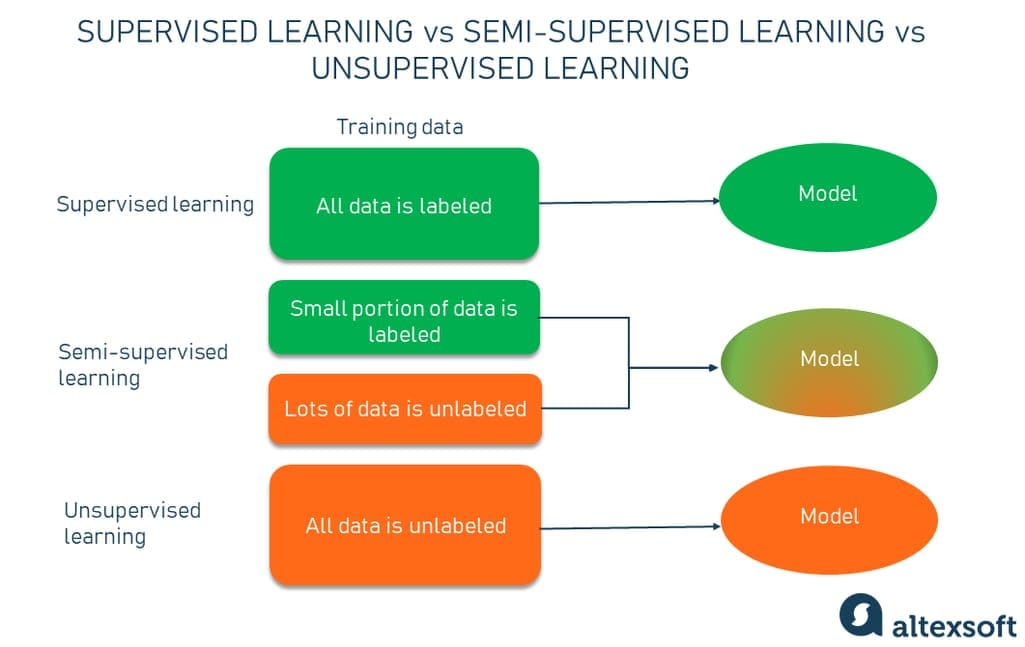

In a nutshell, semi-supervised learning (SSL) is a machine learning technique that uses a small portion of labeled data and lots of unlabeled data to train a predictive model.

To better understand the SSL concept, we should look at it through the prism of its two main counterparts.

Supervised learning means training a machine learning model on a labeled dataset. Organic labels are often available in the data, but the process may also involve a human expert who adds tags to raw data to show a model the target attributes (answers). In simple terms, a label is a description that shows a model what it is expected to predict.

Supervised learning has a few limitations. This process is

- slow (it requires human experts to manually label training examples one by one) and

- costly (a model has to be trained on large volumes of hand-labeled data to provide accurate predictions).

Unsupervised learning, on the other hand, is when a model tries to mine hidden patterns, differences, and similarities in unlabeled data by itself, without human supervision. Hence the name. Within this method, data points are grouped into clusters based on similarities.

While unsupervised learning is a cheaper way to perform training tasks, it isn’t a silver bullet. Typically, this approach

- has a limited area of applications (mostly for clustering purposes) and

- provides less accurate results.

Semi-supervised learning bridges supervised and unsupervised learning techniques to solve their key challenges. With it, you train an initial model on a few labeled samples and then iteratively apply it to a much larger amount of unlabeled data.

- Unlike unsupervised learning, SSL works for a variety of problems from classification and regression to clustering and association.

- Unlike supervised learning, the method uses small amounts of labeled data and also large amounts of unlabeled data, which reduces expenses on manual annotation and cuts data preparation time.

Since unlabeled data is abundant, easy to get, and cheap, semi-supervised learning finds many applications, often without a noticeable loss in accuracy.

Let’s look at a real-world scenario such as fraud detection. Say a company with 10 million users has analyzed five percent of all transactions and classified them as fraudulent or not, while the rest of the data hasn’t been labeled with “fraud” and “non-fraud” tags. In this case, semi-supervised learning makes it possible to use all of the data without having to hire an army of annotators or sacrifice accuracy. Below, we’ll explain how exactly this magic works.

Semi-supervised learning techniques: self-training, co-training, graph-based labeling

Imagine you have collected a large set of unlabeled data that you want to train a model on. Manually labeling all this information would probably cost you a fortune and take months to complete. That’s when the semi-supervised machine learning method comes to the rescue.

The working principle is quite simple. Instead of adding tags to the entire dataset, you go through and hand-label just a small part of the data and use it to train a model, which then is applied to the ocean of unlabeled data.

Self-training

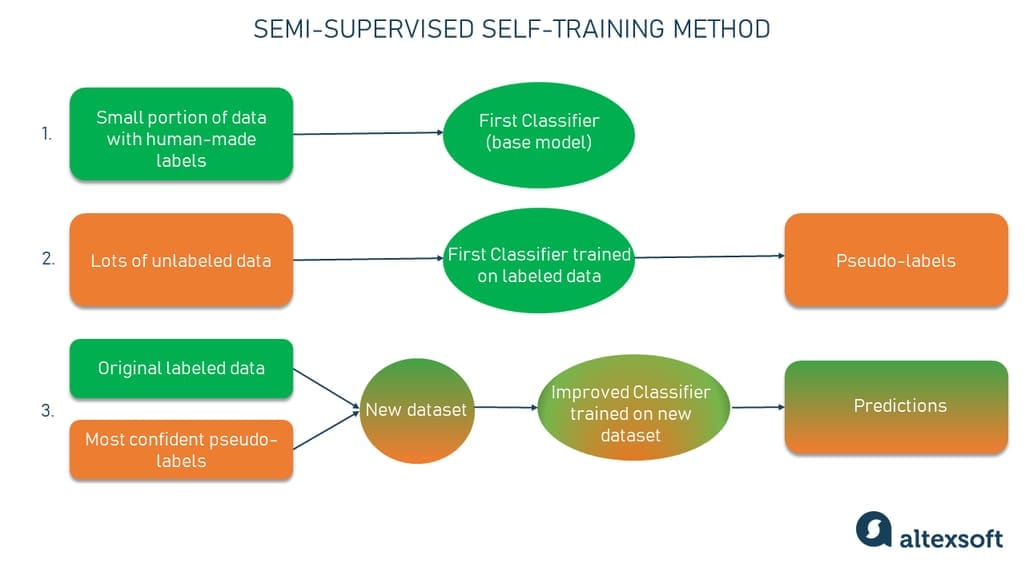

One of the simplest examples of semi-supervised learning is self-training.

Self-training is the procedure in which you can take any supervised method for classification or regression and modify it to work in a semi-supervised manner, taking advantage of labeled and unlabeled data. The standard workflow is as follows.

- You pick a small amount of labeled data, e.g., images showing cats and dogs with their respective tags, and you use this dataset to train a base model with the help of ordinary supervised methods.

- Then you apply the process known as pseudo-labeling: you take the partially trained model and use it to make predictions for the rest of the dataset, which is still unlabeled. The resulting labels are called pseudo-labels because they are produced from the originally labeled data, which has limitations (say, an uneven representation of classes in the set, such as more dogs than cats, can result in bias).

- From this point, you take the most confident predictions made by your model (for example, you may require a confidence of over 80 percent that a certain image shows a cat, not a dog). If a pseudo-label exceeds this confidence level, you add it to the labeled dataset and create a new, combined input to train an improved model.

- The process can go through several iterations (10 is a commonly used number), with more and more pseudo-labels being added every time. Provided the data is suitable for the process, the performance of the model can keep improving with each iteration.

While there are successful examples of self-training being used, it should be stressed that the performance may vary a lot from one dataset to another. And there are plenty of cases when self-training may decrease the performance compared to taking the supervised route.
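
The self-training workflow described above can be sketched in a few lines of Python. This is a minimal, dependency-free illustration rather than a production recipe. The base model is a toy nearest-centroid classifier on a single made-up numeric feature, and the names `fit_centroids`, `predict_proba`, and `self_train` are hypothetical helpers introduced only for this example. The 0.8 threshold and the limit of 10 iterations mirror the numbers mentioned in the steps.

```python
import math

def fit_centroids(xs, ys):
    """Toy supervised base model: the mean feature value (centroid) of each class."""
    return {c: sum(x for x, y in zip(xs, ys) if y == c) / ys.count(c) for c in set(ys)}

def predict_proba(model, x):
    """Turn distances to the class centroids into probabilities that sum to 1."""
    scores = {c: math.exp(-abs(x - centroid)) for c, centroid in model.items()}
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}

def self_train(labeled_x, labeled_y, unlabeled_x, threshold=0.8, max_iter=10):
    train_x, train_y = list(labeled_x), list(labeled_y)
    pool = list(unlabeled_x)
    for _ in range(max_iter):
        model = fit_centroids(train_x, train_y)   # 1. train on the current labeled set
        remaining = []
        for x in pool:                            # 2. pseudo-label the unlabeled pool
            proba = predict_proba(model, x)
            label = max(proba, key=proba.get)
            if proba[label] > threshold:          # 3. keep only confident pseudo-labels
                train_x.append(x)
                train_y.append(label)
            else:
                remaining.append(x)
        if len(remaining) == len(pool):           # nothing new was added: stop early
            break
        pool = remaining                          # 4. repeat with the enlarged set
    return fit_centroids(train_x, train_y), pool

# Hypothetical 1-D feature: low values are cats, high values are dogs.
model, unresolved = self_train(
    labeled_x=[1.0, 2.0, 8.0, 9.0],
    labeled_y=["cat", "cat", "dog", "dog"],
    unlabeled_x=[0.5, 1.2, 2.5, 4.0, 5.0, 6.0, 7.5, 8.8, 9.5],
)
print(model)       # class centroids after self-training
print(unresolved)  # samples that never reached the confidence threshold
```

In this toy run, the sample that sits halfway between the two classes never reaches the threshold and stays unlabeled. Any supervised classifier that outputs confidence scores could take the place of the centroid model.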

Co-training

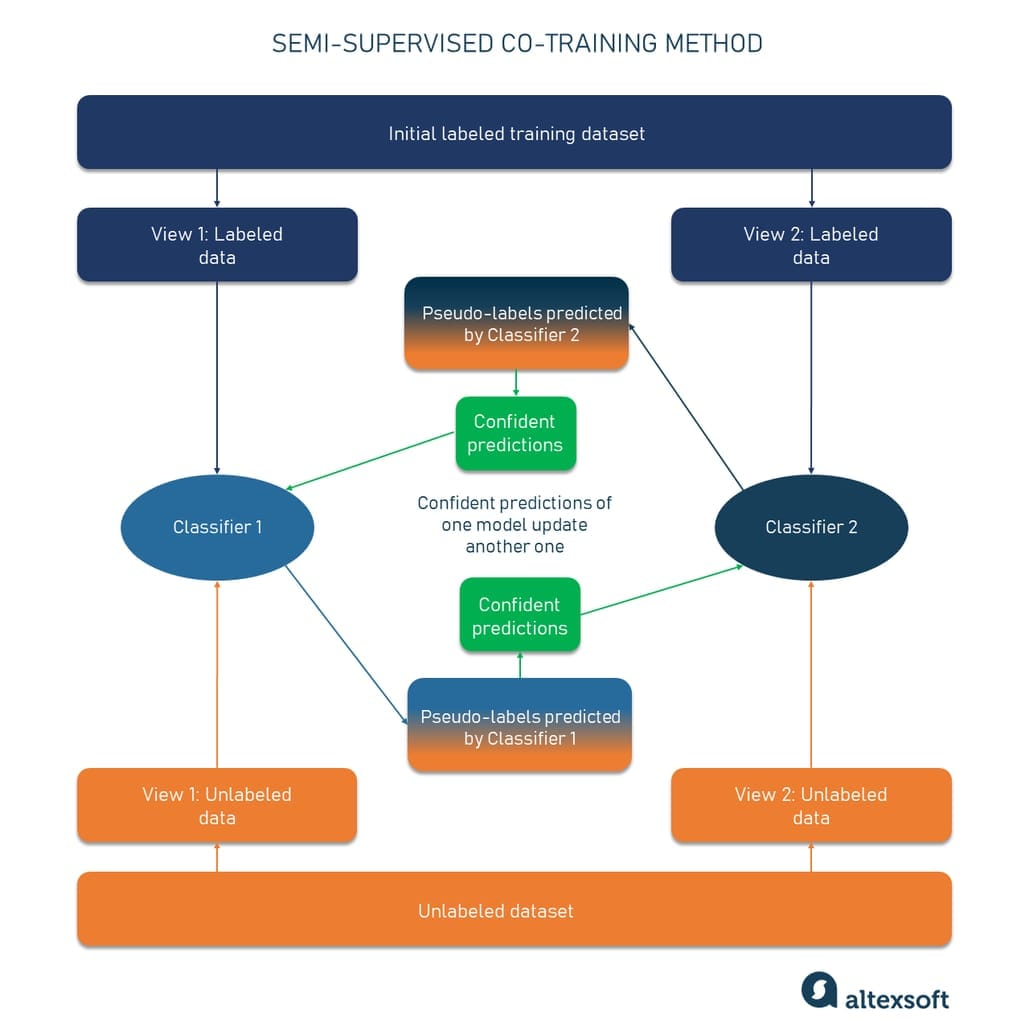

Derived from the self-training approach, co-training is an improved version of it and another semi-supervised learning technique used when only a small portion of labeled data is available. Unlike self-training, co-training trains two individual classifiers based on two views of the data.

The views are different sets of features that provide complementary information about each instance and are assumed to be independent given the class. Each view is also sufficient: the class of a sample can be accurately predicted from each set of features alone.

The original co-training research paper claims that the approach can be successfully used, for example, for web content classification tasks. The description of each web page can be divided into two views: one with words occurring on that page and the other with anchor words in the link leading to it.

So, here is how co-training works in simple terms.

- First, you train a separate classifier (model) for each view with the help of a small amount of labeled data.

- Then each classifier assigns pseudo-labels to the larger pool of unlabeled data.

- Classifiers co-train one another using pseudo-labels with the highest confidence level. If the first classifier confidently predicts the genuine label for a data sample while the other one makes a prediction error, then the data with the confident pseudo-labels assigned by the first classifier updates the second classifier and vice-versa.

- The final step combines the predictions from the two updated classifiers to get one classification result.

As with self-training, co-training goes through many iterations to construct an additional training labeled dataset from the vast amounts of unlabeled data.
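
The co-training steps above can be sketched as follows. This is a simplified illustration under stated assumptions, not the algorithm from the original paper. Each sample is a pair of made-up numeric features (one per view), each view gets a toy centroid classifier, and the class names, the 0.8 threshold, and the helpers `fit`, `proba`, `fit_views`, `co_train`, and `predict` are all hypothetical.

```python
import math

def fit(xs, ys):
    """Toy classifier for one view: the mean feature value of each class."""
    return {c: sum(x for x, y in zip(xs, ys) if y == c) / ys.count(c) for c in set(ys)}

def proba(model, x):
    """Class probabilities derived from the distance to each class mean."""
    scores = {c: math.exp(-abs(x - mean)) for c, mean in model.items()}
    total = sum(scores.values())
    return {c: s / total for c, s in scores.items()}

def fit_views(train):
    """Train one classifier per view; train[v] holds ((view_0, view_1), label) pairs."""
    return [fit([s[v] for s, _ in train[v]], [y for _, y in train[v]]) for v in (0, 1)]

def co_train(labeled, unlabeled, threshold=0.8, max_iter=10):
    train = [list(labeled), list(labeled)]   # a separate training set per classifier
    pool = list(unlabeled)
    for _ in range(max_iter):
        models = fit_views(train)
        remaining = []
        for sample in pool:
            used = False
            for v in (0, 1):
                p = proba(models[v], sample[v])
                label = max(p, key=p.get)
                if p[label] > threshold:
                    # Classifier v is confident, so it teaches the other classifier.
                    train[1 - v].append((sample, label))
                    used = True
            if not used:
                remaining.append(sample)
        if len(remaining) == len(pool):      # no confident pseudo-labels left: stop
            break
        pool = remaining
    return fit_views(train)

def predict(models, sample):
    """Combine both views by averaging their class probabilities."""
    p0, p1 = proba(models[0], sample[0]), proba(models[1], sample[1])
    combined = {c: (p0[c] + p1[c]) / 2 for c in p0}
    return max(combined, key=combined.get)

# Hypothetical data: view 0 = score from page words, view 1 = score from anchor words.
labeled = [((1.0, 1.5), "sports"), ((9.0, 8.5), "finance")]
unlabeled = [(1.2, 5.0), (5.0, 8.8), (0.8, 1.0), (9.2, 9.0)]
models = co_train(labeled, unlabeled)
print(predict(models, (1.0, 2.0)))
print(predict(models, (8.0, 9.0)))
```

Note how the sample `(5.0, 8.8)` is ambiguous in the first view but clear in the second, so the second classifier supplies the pseudo-label that the first one then learns from.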

SSL with graph-based label propagation

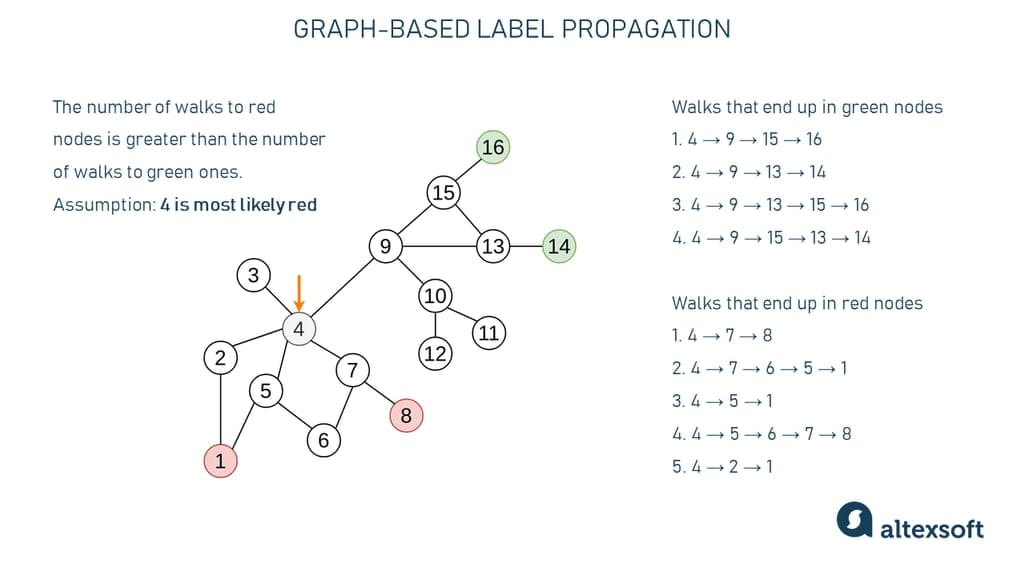

A popular way to run SSL is to represent labeled and unlabeled data in the form of graphs and then apply a label propagation algorithm. It spreads human-made annotations through the whole data network.

If you look at the graph, you will see a network of data points, most of which are unlabeled, with four carrying labels (two red points and two green points representing different classes). The task is to spread these colored labels throughout the network. One way to do this is to pick a point, say point 4, and count all the different paths that travel through the network from point 4 to each colored node. In this graph, there are five walks leading to red points and only four leading to green ones, so we can assume that point 4 belongs to the red category. You then repeat this process for every point on the graph.
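
To make the idea concrete, here is a minimal sketch of label propagation on a small graph. Note that it uses a common iterative variant, in which each unlabeled node repeatedly takes the average of its neighbors' class scores while the labeled nodes stay fixed, rather than the path counting described above. The graph, the seed labels, and the function name `propagate_labels` are made up for illustration, and the code assumes every node has at least one neighbor.

```python
def propagate_labels(graph, seeds, max_iter=1000, tol=1e-6):
    """graph: {node: [neighbors]}, seeds: {node: label} for the hand-labeled nodes."""
    classes = sorted(set(seeds.values()))
    # Labeled nodes start with score 1.0 for their class; everything else starts at 0.0.
    scores = {n: {c: 1.0 if seeds.get(n) == c else 0.0 for c in classes} for n in graph}
    for _ in range(max_iter):
        new_scores, max_change = {}, 0.0
        for node, neighbors in graph.items():
            if node in seeds:
                new_scores[node] = scores[node]   # human-made labels never change
                continue
            # An unlabeled node takes the average score of its neighbors.
            new_scores[node] = {
                c: sum(scores[m][c] for m in neighbors) / len(neighbors) for c in classes
            }
            change = max(abs(new_scores[node][c] - scores[node][c]) for c in classes)
            max_change = max(max_change, change)
        scores = new_scores
        if max_change < tol:                      # scores have stabilized
            break
    return {n: max(classes, key=lambda c: scores[n][c]) for n in graph}

# Hypothetical undirected graph: node 1 is labeled red, node 6 is labeled green.
graph = {1: [2, 3], 2: [1, 3], 3: [1, 2, 4], 4: [3, 5], 5: [4, 6], 6: [5]}
labels = propagate_labels(graph, seeds={1: "red", 6: "green"})
print(labels)
```

Nodes that are better connected to the red seed end up red, and the node next to the green seed ends up green. Real systems typically run the same idea as matrix operations over much larger graphs.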

The practical use of this method can be seen in personalization and recommender systems. With label propagation, you can predict customer interests based on information about other customers. Here, we can apply a variation of the continuity assumption: if two people are connected on social media, for example, it’s highly likely that they share similar interests.

Challenges of using semi-supervised learning

As we already mentioned, one of the significant benefits of semi-supervised learning is that it can deliver high model performance without expensive data preparation. It doesn’t mean, of course, that SSL has no limitations. Let’s discuss them further.

Quality of unlabeled data

The effectiveness of semi-supervised learning heavily depends on the quality and representativeness of the unlabeled data. If the unlabeled data is noisy or unrepresentative of the true data distribution, it can degrade model performance or even lead to incorrect conclusions.

For example, if you’re using a dataset of product reviews for sentiment analysis, the unlabeled data might include reviews that are poorly written, contain sarcasm, or express neutral sentiment. If the model learns from these noisy unlabeled examples, it may misclassify similar reviews in the future, leading to lower accuracy and reliability in sentiment analysis predictions.

Sensitivity to distribution shifts

Semi-supervised learning models may be more sensitive to distribution shifts between the labeled and unlabeled data. If the distribution of the unlabeled data differs significantly from the labeled data, the model's performance may suffer.

Say that a model is trained on labeled images of cats and dogs from a dataset with high-quality photographs. However, the unlabeled data used for training contains images of cats and dogs captured from surveillance cameras with low resolution and poor lighting conditions. If the distribution of images in the unlabeled data differs significantly from the labeled data, the model may struggle to generalize from the labeled to the unlabeled images, resulting in lower performance on real-world images with similar characteristics.

Model complexity

Some semi-supervised learning techniques, such as those based on generative models or adversarial training, can introduce additional complexity to the model architecture and training process.

Consider a semi-supervised learning approach that combines self-training with a language model pretrained on a large corpus of text data. The model architecture may become increasingly complex due to the incorporation of multiple components. As the model complexity grows, it may become more challenging to interpret, debug, and optimize, leading to potential performance issues and increased computational resources required for training and inference.

Limited applicability

Semi-supervised learning may not be suitable for all types of tasks or datasets. It tends to be most effective when there is a sizable amount of unlabeled data available and when the underlying data distribution is relatively smooth and well-defined. That’s why you should choose semi-supervised learning in those areas where its benefits outweigh the complexities.

Semi-supervised learning examples

With the amount of data constantly growing by leaps and bounds, there’s no way for all of it to be labeled in a timely fashion. Think of an active TikTok user who uploads up to 20 videos per day, and there are 1 billion active users. In such a scenario, semi-supervised learning offers a wide array of use cases, from image and speech recognition to web content and text document classification.

Speech recognition

Labeling audio is very resource- and time-intensive, so semi-supervised learning can be used to overcome this challenge and improve performance. Facebook (now Meta) has successfully applied semi-supervised learning (namely the self-training method) to its speech recognition models. They started off with a base model trained on 100 hours of human-annotated audio data. Then 500 hours of unlabeled speech data was added, and self-training was used to improve the models. As for the results, the word error rate (WER) decreased by 33.9 percent, which is a significant improvement.
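
For readers unfamiliar with the metric, word error rate is commonly defined as the number of word substitutions, deletions, and insertions needed to turn the recognized text into the reference transcript, divided by the number of words in the reference. The sketch below is a generic implementation of that definition. It is not the evaluation code used in the project above, and the example sentences are made up.

```python
def word_error_rate(reference, hypothesis):
    """WER = word-level edit distance / number of words in the reference."""
    ref, hyp = reference.split(), hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] = edits needed to turn the reference words seen so far into hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cur.append(min(
                prev[j] + 1,                          # deletion
                cur[j - 1] + 1,                       # insertion
                prev[j - 1] + (ref_word != hyp_word), # substitution (free if words match)
            ))
        prev = cur
    return prev[-1] / len(ref)

# One missing word out of six reference words.
print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
```

A lower WER means fewer transcription mistakes, so a drop in WER after self-training indicates that the pseudo-labeled audio helped the model.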

Web content classification

With billions of websites presenting all sorts of content, it would take a huge team of people to organize information on web pages by adding corresponding labels. Variations of semi-supervised learning are used to annotate web content and classify it accordingly to improve user experience. Many search engines, including Google, apply SSL to their ranking component to better understand human language and the relevance of candidate search results to queries. With SSL, Google Search finds content that is most relevant to a particular user query.

Text document classification

Another example of when semi-supervised learning can be used successfully is in the building of a text document classifier. Here, the method is effective because it is really difficult for human annotators to read through multiple word-heavy texts to assign a basic label, like a type or genre.

For example, a classifier can be built on top of deep learning neural networks like LSTM (long short-term memory) networks that are capable of finding long-term dependencies in data and retaining past information over time. Usually, training a neural net requires lots of data with and without labels. A semi-supervised learning framework works just fine here, as you can train a base LSTM model on a few text examples in which the most relevant words are hand-labeled and then apply it to a bigger number of unlabeled samples.

The SALnet text classifier made by researchers from Yonsei University in Seoul, South Korea, demonstrates the effectiveness of the SSL method for tasks like sentiment analysis.

Best practices for applying semi-supervised learning

Considering the challenges you can face when using SSL, here are some best practices and strategies that can help maximize the effectiveness and efficiency of semi-supervised learning approaches.

Ensure data quality

Ensure data preprocessing steps are applied consistently to both labeled and unlabeled datasets to maintain data quality and consistency. You can implement robust data cleaning and filtering techniques to identify and handle noisy or erroneous data points that may negatively impact model performance. Augment the labeled dataset with synthetic data generated through techniques such as rotation, translation, and noise injection to increase diversity and improve generalization.

Choose an appropriate model and evaluate it

Select semi-supervised learning algorithms and techniques that are well-suited to the task, dataset size, and available computational resources. Use appropriate ML evaluation metrics to assess model performance on both labeled and unlabeled data and compare it against baseline supervised and unsupervised approaches. Also, employ cross-validation techniques to assess model robustness and generalization across different subsets of the data, including labeled, unlabeled, and validation sets.

Make use of transfer learning

Leverage pretrained models or representations learned from large-scale unlabeled data (for example, through self-supervised learning) as initialization or feature extractors for semi-supervised learning tasks to achieve better performance.

Control model complexity

You can employ regularization methods (entropy minimization, consistency regularization) to encourage model smoothness and consistency across labeled and unlabeled data, preventing overfitting and improving generalization. At the same time, you can balance model complexity by leveraging the rich information from large unlabeled datasets effectively, using techniques such as model ensembling or hierarchical architectures.
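
As a rough illustration of the two regularization terms named above, the sketch below computes them from predicted class probabilities. The function names, the sample probabilities, and the choice of squared difference for the consistency term are assumptions made for this example. In practice, these terms are usually added to the supervised loss with a tunable weight.

```python
import math

def entropy_loss(prob_rows):
    """Entropy minimization term: mean Shannon entropy of predictions on unlabeled samples."""
    entropies = [-sum(p * math.log(p) for p in row if p > 0) for row in prob_rows]
    return sum(entropies) / len(entropies)

def consistency_loss(probs_clean, probs_perturbed):
    """Consistency term: mean squared difference between predictions for the
    same samples before and after a perturbation (for example, added noise)."""
    diffs = [
        sum((a - b) ** 2 for a, b in zip(row_a, row_b))
        for row_a, row_b in zip(probs_clean, probs_perturbed)
    ]
    return sum(diffs) / len(diffs)

# Hypothetical model outputs for three unlabeled samples and two classes.
clean = [[0.9, 0.1], [0.5, 0.5], [0.2, 0.8]]
perturbed = [[0.8, 0.2], [0.6, 0.4], [0.2, 0.8]]

unsupervised_loss = entropy_loss(clean) + consistency_loss(clean, perturbed)
print(round(unsupervised_loss, 4))
```

The entropy term is lowest when the model is decisive on unlabeled samples, and the consistency term is lowest when small perturbations of an input don't change the prediction.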

Design interpretable models

Models with interpretable architectures and mechanisms can help you understand the model’s decisions and predictions, enabling stakeholders to trust and validate model outputs. Explainability techniques, such as feature importance and attention mechanisms, provide insights into model behavior and highlight relevant patterns learned from both labeled and unlabeled data.

Monitor performance

As we already mentioned, SSL models are developed iteratively, which allows refining and updating them based on performance feedback, new labeled data, or changes in the data distribution. A common practice is to implement monitoring and tracking mechanisms to assess model performance over time and detect drifts or shifts in the data distribution that may call for retraining or adaptation of the model.
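
One simple way to monitor a single numeric feature for drift is the population stability index (PSI), which compares how values are spread across bins in a reference sample and in fresh data. PSI is just one of several possible drift metrics and is brought in here as an example, not as a method prescribed by this article. The function name, the bin count, and the synthetic data are assumptions, and the often-quoted alert level of 0.25 is a rule of thumb rather than a guarantee.

```python
import math

def population_stability_index(reference, current, bins=10):
    """Compare the binned distribution of `current` against `reference`."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if all reference values are equal

    def bin_shares(values):
        counts = [0] * bins
        for v in values:
            index = int((v - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1  # clip values outside the reference range
        # A small floor keeps the logarithm defined for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_shares, cur_shares = bin_shares(reference), bin_shares(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_shares, cur_shares))

# Synthetic example: the new data only covers the upper half of the old range.
reference = [i / 100 for i in range(100)]
shifted = [0.5 + i / 200 for i in range(100)]

print(population_stability_index(reference, reference))  # identical data, no drift
print(population_stability_index(reference, shifted))    # clearly shifted data
```

A check like this can run on a schedule for the most important features, with a high value prompting a closer look or a retraining run.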

When to use and not use semi-supervised learning

With a minimal amount of labeled data and plenty of unlabeled data, semi-supervised learning shows promising results in classification tasks while leaving the doors open for other ML tasks. Basically, the approach can make use of pretty much any supervised algorithm with some modifications needed. On top of that, SSL fits well for clustering and anomaly detection purposes too if the data fits the profile. While a relatively new field, semi-supervised learning has already proved to be effective in many areas.

But that doesn’t mean semi-supervised learning is applicable to all tasks. If the portion of labeled data isn’t representative of the entire distribution, the approach may fall short. Say you need to classify images of colored objects that look different from different angles. Unless you have a large amount of labeled data, the results will have poor accuracy. But if you do have lots of labeled data, then semi-supervised learning isn’t the way to go. Like it or not, many real-life applications still need lots of labeled data, so supervised learning won’t go anywhere in the near future.