Not long ago, setting up a data warehouse — a central information repository enabling business intelligence and analytics — meant purchasing expensive, purpose-built hardware appliances and running a local data center. With the consistent rise in data volume, variety, and velocity, organizations started seeking special solutions to store and process the information tsunami.

This demand gave birth to cloud data warehouses that offer flexibility, scalability, and high performance. Nowadays, Snowflake is one of the most popular options that meets important business requirements.

This article is a great place to start for anyone considering Snowflake as part of their modern data stack. We’ll dive deeper into Snowflake's pros and cons, unique architecture, and features to help you decide whether this technology is the right choice for your company.

Data warehousing in a nutshell

Before we get into Snowflake technology, let's deal with the key concepts of data warehousing to gain a common understanding.

The main idea of any data warehouse (DW) is to integrate data from multiple disjointed sources (e.g., CRMs, OLAP/OLTP databases, enterprise applications, etc.) within a single, centralized location for analytics and reporting. Traditionally, it is a relational database that stores all data in tables and allows users to run SQL (Structured Query Language) queries.

By the type of deployment, data warehouses can be categorized as

- on-premise — hardware and software are installed locally;

- cloud-based — resources are deployed either in public or private cloud environments; and

- hybrid cloud — the aforementioned capabilities are available under one roof.

Depending on the type and capacities of a warehouse, it can become home to structured, semi-structured, or unstructured data.

- Structured data is highly organized and commonly exists in a tabular format like Excel files.

- Unstructured data comes in all forms and shapes, from audio files to PDF documents, and doesn’t have a pre-defined structure.

- Semi-structured data is somewhere in the middle, meaning it is partially structured but doesn't fit the tabular models of relational databases. Examples are JSON, XML, and Avro files.

The data journey from different source systems to a warehouse commonly happens in two ways — ETL and ELT. The former extracts and transforms information before loading it into centralized storage, while the latter allows for loading data prior to transformation.

These are the basics needed to explore the world of Snowflake and how it works.

What is Snowflake?

Developed in 2012 and officially launched in 2014, Snowflake is a cloud-based data platform provided as a SaaS (Software-as-a-Service) solution with a completely new SQL query engine. As opposed to traditional offerings, Snowflake is natively designed for the public cloud, meaning it can’t be run on-premises. The platform provides fast, flexible, and easy-to-use options for data storage, processing, and analysis. Initially built on top of Amazon Web Services (AWS), Snowflake is also available on Google Cloud and Microsoft Azure. As such, it is considered cloud-agnostic.

Modern data pipeline with Snowflake technology as part of it. Source: Snowflake

With Snowflake, multiple data workloads can scale independently, making it appropriate for data warehousing, data lakes, data science, data sharing, and data engineering.

BTW, we have an engaging video explaining how data engineering works

Snowflake is considered a more serverless offering, meaning as a user, you don’t have to select, install, configure, or manage any software and hardware (virtual or physical) except for the number and size of compute clusters (more on this later). Also, Snowflake has a unique architecture that can scale up and down based on the requirements and workloads. For example, when the number of queries increases, the system instantly mobilizes more computing resources.

What is Snowflake used for

Snowflake is a cloud-based data warehousing and analytics platform that can be used for various purposes. Here are some of its key use cases.

Data ingestion. Snowflake offers a data ingestion service called Snowpipe. It allows enterprises to stage data as soon as it becomes available from external storage locations such as Amazon S3 and Azure Blob. With features like auto-ingest and cloud provider notification, Snowpipe enables seamless and uninterrupted data loading into tables.

Business intelligence and analytics. Snowflake enables organizations to gain insights from their data through interactive reporting and advanced analytics. The solution’s compatibility with popular business intelligence tools such as QuickSight, Looker, Power BI, and Tableau enhances its ability to provide valuable insights for organizations.

Data sharing and collaboration. Snowflake offers a seamless and secure way for users to share and collaborate on their data via Snowflake Marketplace. The Marketplace is a centralized platform where users can discover and access data assets, such as datasets and data services, that are published by other organizations. Snowflake verifies the data assets to ensure that they meet certain standards of quality and security. Users can easily discover data assets relevant to their needs, compare different offerings, and quickly obtain access to the data they need.

In September 2024, Snowflake introduced new functionality to support the travel and hospitality industries. The platform helps companies connect their data and applications to enhance dynamic pricing, operational efficiency, reputation management, and sustainability tracking. Integrated third-party data, like weather and economic indicators, aids decision making. Clients include major travel and hospitality companies such as Hyatt, Tripadvisor, Marriott, JetBlue, and Booking.com.

Machine learning. Snowflake supports machine learning use cases, enabling data scientists and analysts to build, train, and deploy machine learning models within the Snowflake platform. This includes loading, transforming, and managing large datasets, as well as integrating them with popular machine learning libraries such as TensorFlow and PyTorch. Additionally, Snowflake integrates directly with Apache Spark to streamline data preparation and facilitate the creation of ML models. With support for programming languages like Python, R, Java, and C++, Snowflake empowers users to leverage these tools to develop sophisticated ML solutions.

Snowflake architecture overview

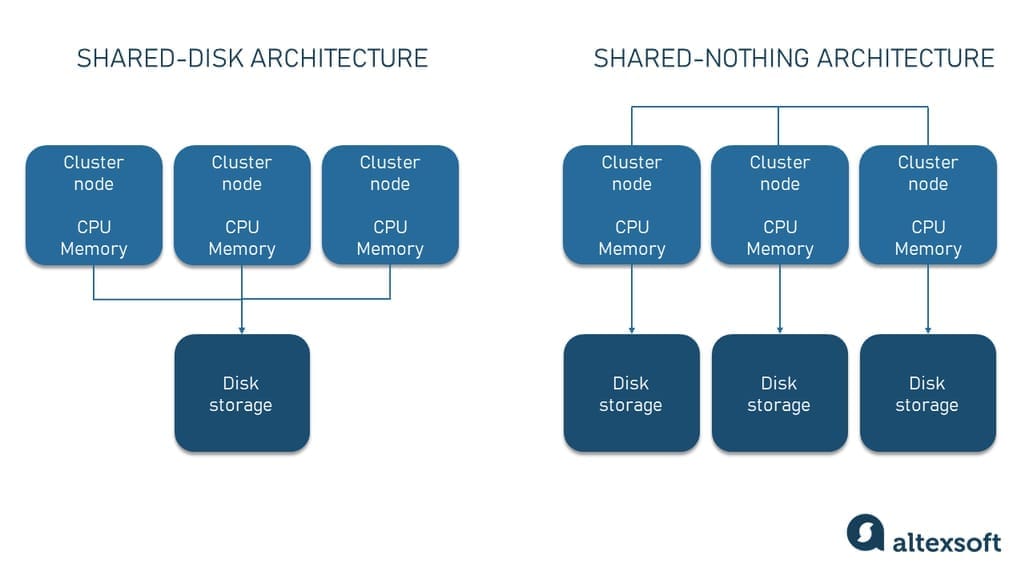

In most cases, if you want to build a scalable data warehouse, you need massively parallel processing (MPP) to handle multiple concurrent workloads. So, you either use shared-disk or shared-nothing MPP architecture for that.

Shared-disk vs shared-nothing architecture

- The shared-disk architecture uses multiple cluster nodes (processors) that have access to all data stored on a shared memory disk. Nodes have CPU and Memory but no disk storage, so they communicate to a central storage layer to get data.

- The shared-nothing architecture stores and processes portions of data on different cluster nodes in parallel and independently. Each node has its own disk storage.

Snowflake combines the benefits of both architectures in its new, unique hybrid design.

Similar to shared-nothing architecture, Snowflake uses MPP-based computing to process queries concurrently, with each node locally storing a portion of the entire data. As for the similarity with shared-disk architecture, there is a centralized data repository for a single copy of data that can be accessed from all independent compute nodes. As such, data management is as simple as in shared-disk architecture with performance and scale-out benefits of the shared-nothing architecture.

Snowflake architecture diagram

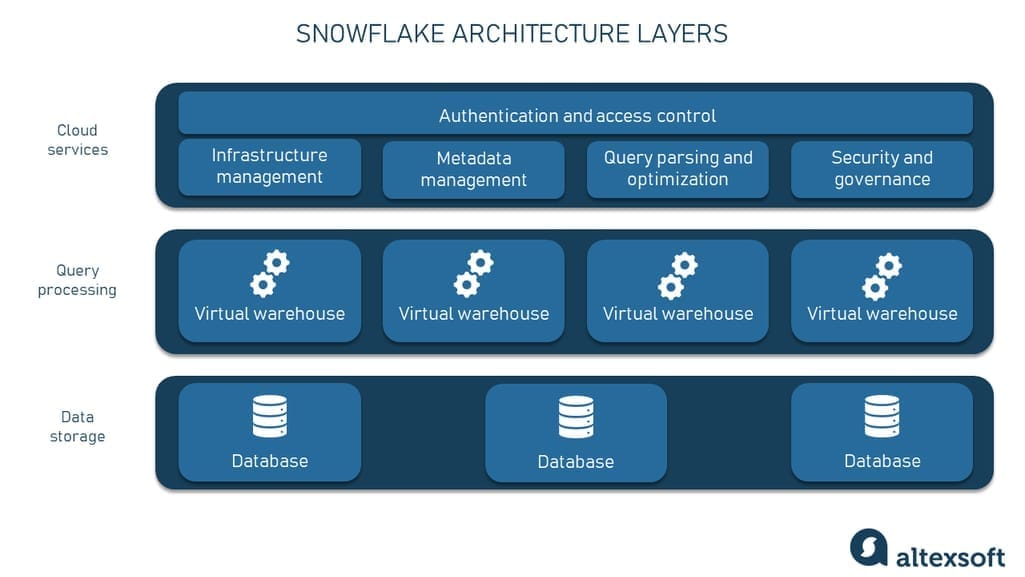

Snowflake has a multi-cluster, shared-data architecture that consists of three separate tiers, namely

- data storage layer,

- query processing (compute) layer, and

- cloud services (client) layer.

Physically separated but logically integrated, each layer can scale up and down independently, enabling Snowflake to be more elastic and responsive.

To understand how Snowflake works, we’ll walk you through all the layers and explain their features.

Database storage layer

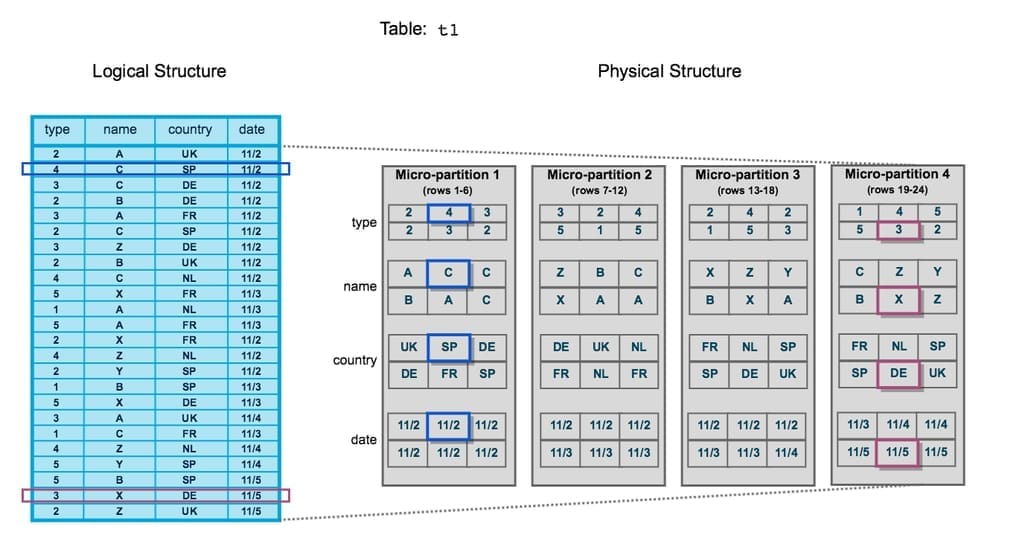

The database storage layer, as the name suggests, handles tasks related to secure, reliable, and elastic storage of data that comes from disparate sources. With loading, data is optimized, compressed, and reorganized into an internal columnar format. It is broken down into so-called micro-partitions. For example, if the table contains transactions, micro-partitions are the days of transactions. Each day is a separate micro-partition – a separate file.

This optimized data is stored in a cloud object storage such as S3 by AWS, Google Cloud Storage, or Microsoft Azure Blob Storage. Customers can neither see nor access these data objects. They use Snowflake to run SQL query operations.

Query processing layer

Query processing or compute layer provides the means for executing various SQL statements. It consists of multiple independent compute clusters with nodes processing queries in parallel. Snowflake calls these clusters virtual warehouses. Each warehouse is packed with compute resources, such as CPU, memory, and temporary storage required to perform SQL and DML (Data Manipulation Language) operations. Users can:

- retrieve rows from tables,

- load data into tables,

- unload data from tables, and

- delete, update, or insert separate rows in tables, etc.

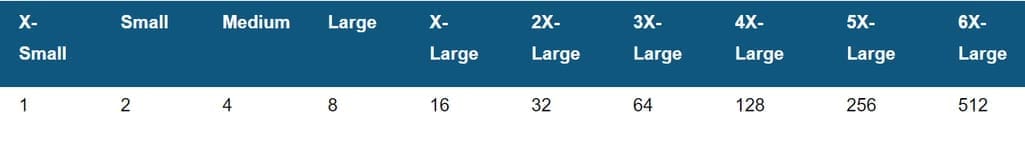

Virtual warehouses come in ten sizes from X-Small to 6X-Large: Each increase in size to the next larger warehouse doubles the computing power.

Since warehouses don’t share compute resources with one another, there’s no impact on the performance of other machines if one goes down. Besides, nodes do not store any data, so losing them isn’t critical. If a failure occurs, Snowflake will recreate a new instance in minutes.

Cloud services layer

Cloud services or client layer hosts a bunch of services that coordinate activities across Snowflake.

Services managed in this layer include

- authentication and access control (authenticating users and connections, managing encryption and keys);

- infrastructure management (managing virtual warehouses and storage);

- metadata management (storing metadata and handling queries that can be executed from it); and

- query parsing and optimization.

The layer also runs on compute instances provided by Snowflake from different cloud providers. These services pull together all Snowflake components into a cohesive whole.

The pros of Snowflake Data Warehouse

In this section, you will find things that make Snowflake a real deal and may serve as reasons to consider this cloud data warehouse as a solution.

Adequate security and data protection

With Snowflake, data is highly secure. Users can set regions for data storage to comply with regulatory guidelines such as HIPAA, PCI DSS, and SOC 1 and SOC 2. Security levels can be adjusted based on requirements. The solution has built-in features to encrypt all data at rest and in transit, regulate access levels, and control things like IP allows and blocklists.

To achieve better data protection, Snowflake offers two advanced features — Time Travel and Fail-safe. Time Travel gives you an opportunity to restore tables, schemas, and databases from a specific time point in the past. By default, there’s one day of data time travel. However, Enterprise users can choose a period of time of up to 90 days. The fail-safe feature allows for the protection and recovery of historical data. Its 7-day period starts right after the Time Travel retention period ends.

Great performance and scalability

Thanks to the separated storage and computing, Snowflake has the ability to run a virtually unlimited number of concurrent workloads against the same single copy of data. This means that multiple users can execute multiple queries simultaneously.

While benchmarks can be configured to perform in a certain way and fit particular use cases, most show great results for Snowflake performance. Snowflake can process 6 to 60 million rows of data in 2 seconds to 10 seconds, which is pretty impressive. Out of the box, Snowflake has what it takes to outperform other cloud warehouse solutions with no prior fine-tuning.

When it comes to scalability, Snowflake has a unique auto-scaling and auto suspend feature to start and stop warehouses depending on whether they are active or inactive. For comparison, autoscaling in Redshift is quite limited. Moreover, Snowflake can handle both vertical and horizontal scaling. Vertical scaling means the platform adds more computer power to existing virtual warehouses, e.g., upgrading CPUs. With horizontal scaling, more cluster nodes are added.

Data caching

The virtual warehouse memory is used for caching. When a query is executed, data from different tables in storage is cached by different compute clusters. Then all subsequent queries can use this cache to generate results. With data in the cache, queries run up to 10 times faster.

Micro partitions

A really powerful element of the tool is that data stored in Snowflake comes in the form of micro-partitions. These are continuous units of storage that hold data physically. They are called “micro” because their size ranges from 50 to 500 MB before compression. Besides, resizing the micro-partition blocks can be executed by both users and Snowflake automatically.

How Snowflake stores data in micro-partitions. Source: Snowflake

Within each micro-partition, data is stored in a columnar data structure, allowing better compression and efficient access only to those columns required by a query. As shown in the picture above, 24 rows from the table are stored and sorted in 4 micro-partitions by columns. Repeated values are stored only once. Let’s imagine that you need data from two different tables for your SQL query to be executed. So, instead of copying both tables fully to the compute cluster, Snowflake retrieves only relevant micro-partitions. As a result, the query needs less time to be completed.

Support for both ETL and ELT processes

Snowflake supports both ETL and /ELT processes for inserting data in scheduled bulks or batches. It easily integrates with ETL tools like Informatica, Talend, Fivetran, and Matillion, offering versatile data integration and transformation. There’s also pre-connection with Apache Airflow for orchestration of ETL/ELT data pipelines.

Near real-time and real-time data ingestion

Snowflake was fundamentally designed for batch data processing. But its service, Snowpipe, also enables continuous data ingestion from source files in micro-batches, making information available to users almost instantaneously. Finally, in 2023, the company introduced streaming capabilities. The new Snowpipe Streaming API supports real-time use cases and is a powerful tool for loading data from streaming sources—for example, Kafka—into Snowflake. It reads data faster and more efficiently than Snowpipe, handling real-time use cases.

Snowflake Data Exchange hub

Snowflake provides a tool called Data Exchange for securely sharing and collaborating on data with a chosen group of participants you invite. As a data provider, you can create a database and publish data that becomes accessible to consumers within the exchange's centralized platform.

This tool allows you to seamlessly share data with trusted business partners, such as internal teams, vendors, suppliers, or external collaborators. You can either target specific consumers with private listings or make your data publicly available through the Snowflake Marketplace, enabling both internal and external users to discover and access it.

Snowflake data model

Snowflake allows for multiple approaches to data modeling — or ways to organize data in a database — not only for snowflake-type schemas, as its name might wrongly suggest. For example, you can opt for data vault to design your warehouse. By the way, the snowflake schema and the Snowflake data cloud are not relatives; they are just namesakes. Marcin Zukowsky, a cofounder of Snowflake, explained the choice of name for the company: firstly, real snowflakes are born in clouds; secondly, each snowflake is unique; and finally, all the co-founders love winter sports. With the modeling schema, it’s even simpler: it's shaped like a snowflake.

Light learning curve

Many people think that properly setting up and using a data warehouse is a difficult task requiring solid knowledge of different tech stacks. Things are different with Snowflake since it is fully SQL-based. Chances are, you have some experience using BI or data analysis tools that work on SQL. Most of what you already know can be applied to Snowflake. Not to mention that SQL is an easy-to-learn language, which is a significant benefit for general users. To that we add an intuitive UI that fits the needs of both users with and without coding experience.

Read our article to learn about SQL and NoSQL databases, along with other types of databases and DBMS.

Zero management

One of the real strengths of Snowflake is the serverless experience it provides. Well, almost serverless, to be exact. As a user you don’t have to go behind the scenes of Snowflake's work. The platform handles all the management, maintenance, upgrades, and tuning tasks. It also takes care of all aspects of software installation and updates. And it goes for all types of users — general end-users, business analysts, or data scientists.

With almost zero management, you can be up and running in Snowflake in minutes, start loading your data, and derive insights from it. Why “almost” then? You still need to set the number of warehouses and configure the sizes of compute clusters per warehouse. These tasks require knowledge of SQL as well as an understanding of how data warehouse architecture works. Hence, we can't say that Snowflake is completely serverless.

Multiple access options

Users can access data in various ways, such as the Snowflake Web UI, the Snowflake Client command-line interface, and a set of connectors and drivers like ODBC and JDBC.

Web UI. A web-based user interface is leveraged to interact with cloud services. Users can manage their accounts and other general settings, monitor the usage of resources, and query data as well.

Command-line interface. Snowflake provides a Python-based CLI Client called SnowSQL to connect to the data warehouse. It is a separate downloadable and installable utility for executing all queries, including both data definition and data manipulation types

Connectors. There’s a rich set of connectors and drivers for users to connect to cloud data. Some of them include Connector for Python for writing Python apps that connect to Snowflake, ODBC driver for C and C+ development, and JDBC driver for Java programming.

Snowflake ecosystem: tools and extensions

There's an extensive ecosystem of various tools, extensions, and modules that provide native connectivity to Snowflake.

For data integration purposes, a few popular tools and technologies to natively use with Snowflake include:

- Hevo Data is an official Snowflake ETL Partner that provides a no-code data pipeline to bring information from various sources to Snowflake in real time;

- Apache Kafka software uses a publish/subscribe model to write and read streams of records and is available through the Snowflake Connector for Kafka to load data from its topics; and

- Informatica Cloud and Informatica PowerCenter are cloud data management tools that work collaboratively with Snowflake.

For machine learning and data science purposes, consider the following platforms:

- Amazon SageMaker — a cloud machine-learning platform to build, train, and deploy machine learning (ML) models — has no requirements for connecting to Snowflake and

- Qlik AutoML — a no-code automated machine learning platform — is a readily available integration with no requirements.

For BI and analytics purposes, you can choose from a variety of tools and integration provided by Snowflake, namely:

- Looker — business intelligence software and big data analytics platform powered by Google Cloud — is validated by the Snowflake Ready Technology Validation Program;

- Power BI — Microsoft business intelligence platform — can be connected to Snowflake via ODBC driver; and

- Tableau — one of the leading analytics platforms — is also among Snowflake partner integrations.

The toolset isn't exhaustive; there are far more technologies that Snowflake uses to extend its capabilities.

Awesome documentation

Snowflake’s documentation is truly a gem. Neatly organized and well-written, it explains all the aspects of the technology from general concepts of the architecture to detailed guides on data management and more. Whether you are a business user with no tech background or an experienced solution architect, Snowflake has resources for everyone.

The cons of Snowflake data warehouse

The sky’s the limit, right? No matter how great a solution is, there are always some weaknesses that may be critical to customers considering certain technologies as a part of their stack. Snowflake is no exception.

No on-premises deployment

Snowflake was designed in the cloud and for the cloud only. All components of Snowflake’s service for computing needs and persistent data storage run only in public or private cloud infrastructures. This means that users cannot deploy Snowflake on local servers, to be managed in-house.

Unpredictable pricing

Unlike traditional data warehouses, Snowflake gives you the flexibility to pay only for what you use. On-demand pricing means you pay based on the amount of data you store and compute hours you use. Compute resources are charged per second, with a 60-second minimum. In case you want to move from a large to an X-large warehouse, you will double your compute power and the number of credits billed per hour.

While on-demand pricing can be convenient, the final sum is unpredictable, as there are no restrictions on data. One sloppy script, and before you know it, the entire year's budget can vanish within days.

Relatively small community

No matter how great a certain technology is, it still may prompt inquiries about implementation and problem-solving. And here’s where a big community of experienced users can be an advantage.

Snowflake has a relatively small community compared to, say, the 100,000+ members supporting Cloudera. This is common for proprietary solutions compared to open-source products. But Snowflake is also inferior to its paid competitors: it has 13,000 users on an unofficial subreddit (the BigQuery subreddit has around 18,000).

However, it's important to note that even though a smaller community may be reflected in these metrics, Snowflake's community is still active and growing. On top of that, Snowflake's ease of use compared to other solutions might make it less likely for users to run into problems. In case you have some questions, you can fill out a form on the website and they will contact you via phone or email.

Cloud-agnostic approach: a weakness?

The cloud-agnostic nature of Snowflake can be both an advantage and a disadvantage, depending on a company's needs. On the one hand, there's no vendor lock-in and you are free to run Snowflake in the Amazon, Google, and Microsoft public clouds of your choice. However, each of these public clouds offers its own cloud data warehouse tool: Amazon Redshift, Google BigQuery, and Microsoft Azure SQL DW, respectively. In some cases, choosing a more tightly coupled cloud ecosystem can be more beneficial than going the Snowflake path.

Snowflake alternatives and competitors

Having looked at Snowflake architecture and toolset, you probably have a general understanding of whether this solution meets your needs. Overall, itis a good fit for companies in search of an easily deployed data warehouse with nearly unlimited, automatic scaling and top-notch performance.

In case you think Snowflake doesn’t have exactly what your project requires, you can look at the alternative vendors on the market. Here are a few possible options.

Snowflake vs Apache Hadoop

The Apache Hadoop is an open-source framework for the distributed processing of large data sets across clusters of computers. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. However, for the sake of scalability in handling big data, systems like Hadoop may compromise on the security and convenience of SQL. Besides, the Hadoop programming environment is quite complex.

Snowflake vs Databricks

Initially built as a processing engine managed by Apache Spark, Databricks is a cloud platform with a hybrid architecture of a data lake and a data warehouse known as a data lakehouse. It includes Delta Lake storage and a SQL engine called Databricks SQL Analytics. Just like Snowflake, the platform is equipped with services for all your data and analytics needs, from loading to storage to query processing to machine learning and so on. It's not serverless, though. Databricks requires a specialized set of skills to manage and maintain its infrastructure, e.g., experience in configuring Spark, which in turn calls for expertise in Scala and Python.

Read our dedicated article on data lake vs data warehouse to learn about their differences and real-life examples of how companies are combining them.

Snowflake vs AWS Amazon Redshift

Amazon Redshift is another alternative to consider. It is also a cloud-based data warehouse designed to tackle Business Intelligence use cases among other things. Redshift is a more serverless offering that has a shared-nothing MPP architecture to enable users to run multiple complex analytical queries at the same time.

Redshift lets you store data separately from the computing power that processes it; however, unlike Snowflake, where you can easily add more CPU without affecting ongoing tasks, Redshift's solution isn't as flexible.

What is the advantage of Redshift? It is a native AWS service that is built to work in unison with other AWS technologies. So, if you are already using AWS technology, Redshift might be a better option for you. At the same time, the solution comes with more baggage than Snowflake because users have to optimize the platform in order to get the most out of the solution.

Snowflake vs Salesforce Data Cloud

While Snowflake excels at general-purpose data handling, Salesforce Data Cloud is specialized for customer data management. It ingests marketing data from all sources: CRM systems, marketing tools, apps, websites, etc. -- and de-duplicates it in a data lake, so all data related to a specific customer is collected as a unified profile under a single ID. This type of cloud platform is called a customer data platform (CDP), and Gartner named Salesforce Data Cloud the leading CDP in 2024.

Salesforce Data Cloud supports zero-copy integration with other platforms, including Snowflake, Redshift, BigQuery, and Databricks. This means data from other platforms can be accessed and viewed in Salesforce Data Cloud without physical movement or duplication. This ensures data integrity, reduces storage costs, and increases productivity.

If you are already working with the Salesforce platform and prioritize deep customer insights and real-time data over broader data warehousing and analytics, you may prefer Salesforce Data Cloud over Snowflake.

For a more detailed comparison of Snowflake’s main competitors, please read our dedicated article comparing major cloud data warehouses, including our hero.

How to get started learning Snowflake

If you want to implement or migrate to Snowflake, we have prepared a few useful links with information on how to make that happen and what to start with.

Snowflake Documentation. This is a general reference for all the services provided by Snowflake —from the Getting Started info pack explaining how to create an account to detailed step-by-step guides on how to use REST API to access unstructured data.

Snowflake ecosystem of partner integrations. Chances are you are already using some sort of software to work with data which is already pre-integrated with Snowflake. There’s a wide array of third-party partners and technologies that provide native connectivity to Snowflake. They range from data integration solutions to Business Intelligence tools to machine learning and data science platforms and more.

Pricing page. This link will explain the details of Snowflake's pricing plans. At the moment, there are four plans — Standard, Enterprise, Business Critical, and Virtual Private Snowflake. Also, there you will find an informative pricing guide and contacts for Snowflake consultants.

Community forums. Even with a not-so-big community, it is easy to find answers to all general and technical questions related to Snowflake, including information about its SQL language, connectors, administration, user interface, and ecosystem. There are 13 Community Groups clustered under major topics on the website. You can visit Snowflake Lab on GitHub or go to the aforementioned StackOverflow or Reddit forums where data experts and enthusiasts share their experiences.

Snowflake University and Hands-on Lab. In its online University, Snowflake provides a variety of courses for people with different levels of expertise: for those who are new to Snowflake and for advanced learners preparing for SnowPro Certification exams, e.g., Zero to Snowflake in 90 Minutes.

YouTube channel. Snowflake has a lot of explanatory, educative, and customer success videos on the official YouTube Channel.