Are you struggling with writing test cases that actually make QA activities more efficient? You're not alone. This guide will help you understand test cases better and write them like a pro. We’ll also discuss whether you have to write them at all. So let’s dive in.

What is a test case?

A test case is a set of conditions, inputs, actions, or sequences designed to validate a specific aspect of a software application. In other words, it’s a detailed guide explaining how to determine whether a particular feature or functionality works as expected.

Who writes test cases?

Typically, a QA engineer or someone on the testing team (e.g., a QA analyst) writes test cases. Often, business analysts are also involved in this process to ensure the software meets the requirements.

Sometimes, software engineers also take part in creating test cases, though it makes sense to delegate this task to someone not involved in writing the code – for a fresh perspective.

When to write test cases?

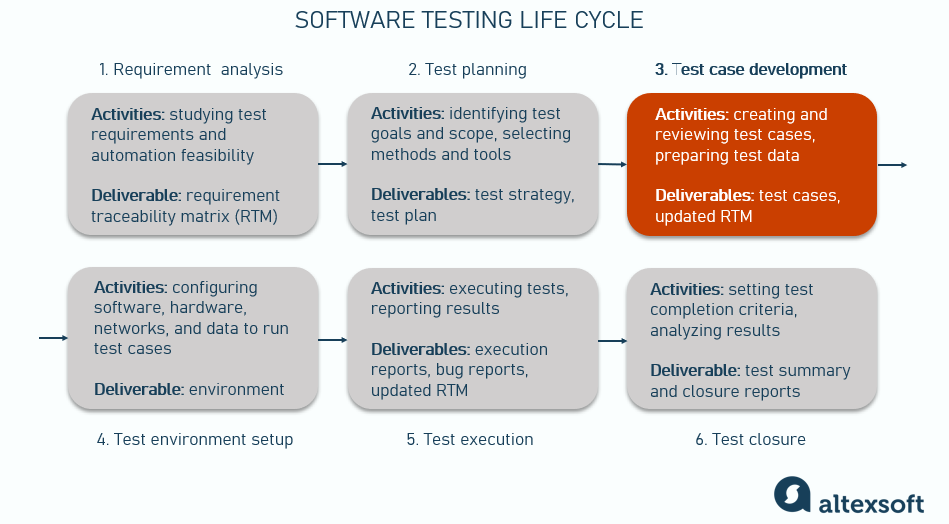

Test cases are written once the requirements are understood and a test plan is defined. Preparing and executing tests early helps catch issues before they become too costly to fix.

Test case development in the testing lifecycle

Note that while test cases are typically written along with or after the functionality is developed, in test-driven development (TDD), test cases are written before the actual code. This way, test cases actually define the system’s expected behavior.

Why write test cases – if at all?

Test cases are a traditional type of testing documentation and a staple for many projects. When well-written, they provide a clear, structured way to validate software. This way, test cases streamline testing efforts, saving time and resources. They also act as a record of the testing process, which is useful for reporting and onboarding new team members.

However, sometimes, test cases aren’t the best choice of format.

In Agile frameworks, for example, the fluid nature of requirements can make test cases less effective. Agile is all about flexibility and the ability to adapt to changing project needs. If your project expects frequent pivots or updates in requirements, maintaining a rigid set of test cases can become a burden rather than a boon.

So, how do you know if test cases are right for your project? Here are a few criteria to consider.

Do you need test cases: generalized advice

Stability of requirements. Test cases can provide a clear, structured path for validation in Waterfall projects when requirements are well-defined and stable, with few anticipated changes.

Domain and regulatory compliance. In environments where regulatory compliance is critical (like finance or healthcare), test cases help ensure that every piece of functionality is tested and meets stringent standards.

Team size and composition. Larger teams or teams with a dedicated testing group might manage test cases more effectively than smaller or more generalized teams.

Complexity and risk. For complex systems where the cost of failure is high, detailed test cases can help mitigate risk by ensuring thorough testing across all scenarios.

Test automation. It all depends on how your manual and automated testing activities are set up, but as a rule, test cases serve as a basis for creating automation scripts.

Time and resources. If your team is tight on resources—whether it’s time, staff, or budget—crafting and maintaining detailed test cases might not be feasible.

Overall, test cases are a recommended practice. However, while most teams use them to support their QA activities, others prefer a different approach.

For example, suppose you are a dedicated team of experienced testers who have worked on the same project for a long time and know the product inside and out. In that case, you might find that traditional test cases aren’t necessary and take too long to write and maintain.

Here are a few alternatives worth considering.

- Exploratory testing – testers use their skills and intuition to explore the software, identify defects, and learn about the software's behavior without predefined cases.

- Checklists – simpler than formal test cases, checklists guide you on what to test without specifying how to test it, offering flexibility in execution.

- Scenarios – they're another way to provide a higher-level overview of what needs to be tested without going into too much detail (e.g., with Gherkin “Given-When-Then” format).
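To make the Given-When-Then idea a bit more tangible, here is a minimal Python sketch of a login scenario. The `authenticate` function, the `USERS` store, and the sample credentials are hypothetical stand-ins invented for illustration; only the Given/When/Then structure in the comments matters.

```python
# Hypothetical in-memory user store, used only for illustration.
USERS = {"alice@example.com": "correct-horse"}


def authenticate(email, password):
    """Return True when the email/password pair matches the store."""
    return USERS.get(email) == password


def test_registered_user_can_log_in():
    # Given a registered user
    email, password = "alice@example.com", "correct-horse"
    # When the user submits valid credentials
    logged_in = authenticate(email, password)
    # Then access is granted
    assert logged_in


test_registered_user_can_log_in()
```

Notice that the scenario says what should happen without spelling out every click, which is exactly why it stays lightweight.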

One size or style doesn't fit all. Teams decide what works best for them, the project, and the client, trying to operate as efficiently as possible within inevitable constraints.

All that said, let’s get back to test case basics. And since we’ve mentioned scenarios, let’s clarify how they are different from test cases.

Test case vs test scenario

It’s common to confuse test cases with test scenarios, but they have different roles and levels of detail in software testing.

A test scenario is a high-level description of what to test, focusing on user actions and system behavior. It is more general and does not include detailed steps or data.

Example: Verify the login functionality of the application.

A test case is a detailed guide that includes specific steps, conditions, inputs, and expected results to validate a particular aspect of the test scenario.

Example: Navigate to the login page. Enter valid credentials. Click the login button. Verify that the dashboard loads correctly.

So test scenarios define what needs to be tested, often referring to the associated user story, while test cases detail how to test, offering the step-by-step instructions needed to execute those scenarios.

Types of test cases

There are many types of test cases, each serving a different purpose. Here are the most common ones.

Functional test cases validate specific functionality against requirements.

Example: Ensuring the login feature accepts valid credentials.

Nonfunctional test cases focus on performance, usability, scalability, and security. This category can be broken down further into relevant types (security test cases, usability test cases, etc.).

Example: Checking if the login page loads within 2 seconds under heavy traffic.

Regression test cases ensure that new changes don’t break existing functionality.

Example: Testing the login feature after updating the password reset functionality.

Negative test cases validate the system’s behavior with invalid or unexpected inputs.

Example: Entering incorrect credentials on the login page and ensuring an appropriate error message is displayed.

Unit test cases focus on individual components or functions in isolation.

Example: Testing a function that calculates a user's age based on their birth date.

Integration test cases ensure different modules or systems work together seamlessly.

Example: Verifying the integration between the login and user account modules.

User acceptance test (UAT) cases validate the system against user requirements and expectations.

Example: Checking the entire login process, from user input to dashboard access.

To learn more about user acceptance testing, visit our dedicated post.

All these and other types of test cases can and often should work together to cover all bases. For example, you might run functional and nonfunctional tests side by side to check both features and performance.

Mixing and matching these approaches gives you a well-rounded view of how the system performs overall.
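To illustrate the unit test example mentioned above (a function that calculates a user's age from their birth date), here is a rough Python sketch. The `calculate_age` function is our own assumption of how such a function might look, not code from any particular product; passing `today` explicitly keeps the test repeatable.

```python
from datetime import date


def calculate_age(birth_date, today):
    """Return the number of full years between birth_date and today."""
    if birth_date > today:
        raise ValueError("birth_date is in the future")
    # Subtract one year if this year's birthday has not happened yet.
    had_birthday = (today.month, today.day) >= (birth_date.month, birth_date.day)
    return today.year - birth_date.year - (0 if had_birthday else 1)


def test_calculate_age():
    today = date(2024, 6, 15)
    assert calculate_age(date(1990, 6, 15), today) == 34  # birthday is today
    assert calculate_age(date(1990, 6, 16), today) == 33  # birthday is tomorrow
    assert calculate_age(date(2024, 6, 15), today) == 0   # born today


test_calculate_age()
```

The three assertions double as tiny test cases: each has an input, an action, and an expected result.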

Test case structure

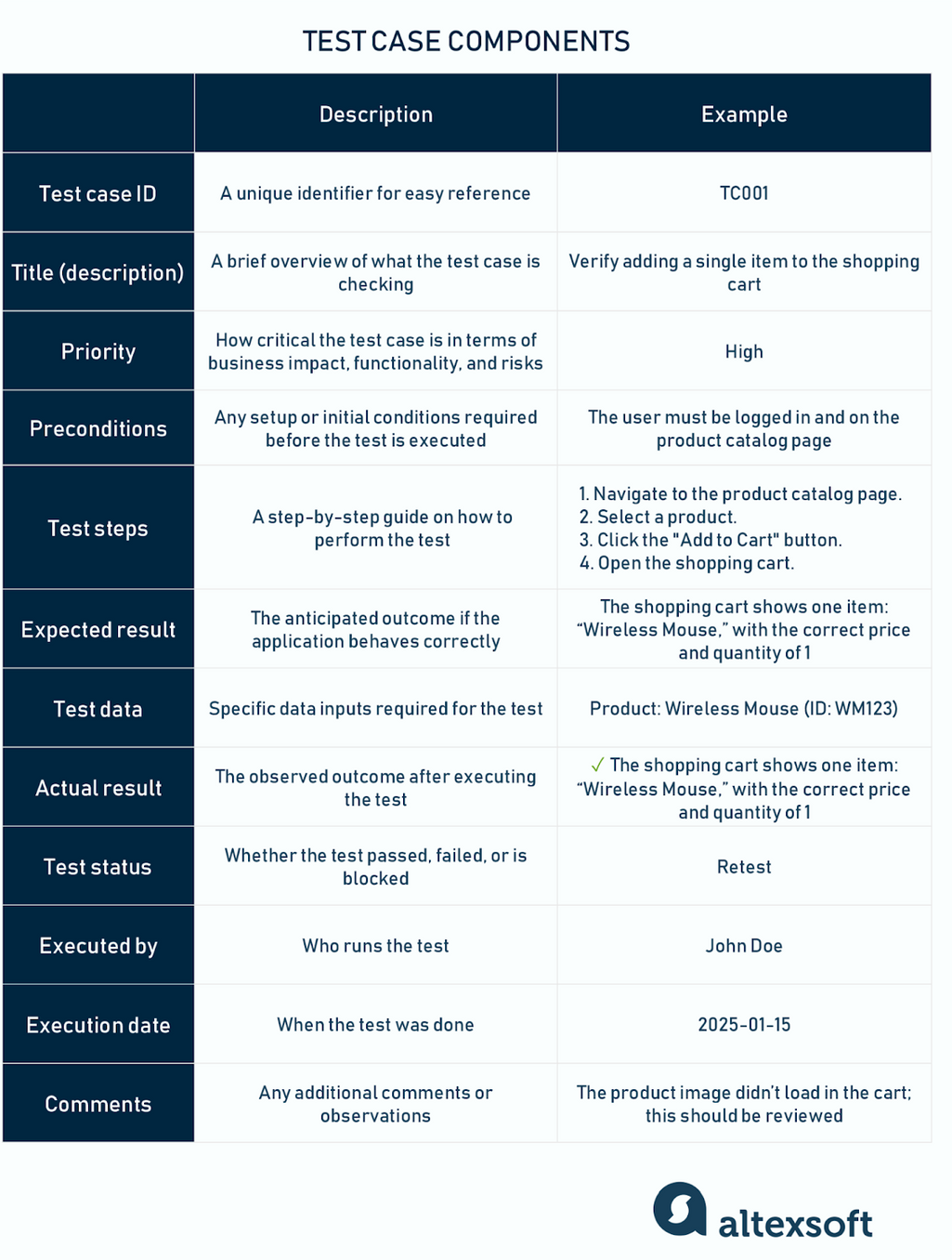

Each test case typically includes the following components.

Test case components

Test case ID: A unique identifier for easy reference.

Title/Description: A brief overview of what the test case is checking.

Priority: How critical the test case is in terms of business impact, functionality, and risks.

Preconditions: Any setup or initial conditions required before the test is executed.

Test steps: A step-by-step guide on how to perform the test.

Test data: Specific data inputs required for the test.

Expected result: The anticipated outcome if the application behaves correctly.

All the above components are written before running tests. The rest is filled out after you execute the test.

Actual result: The observed outcome after executing the test.

Executed by: Who runs the test.

Execution date: When the test was done.

Status: Whether the test passed, failed, or is blocked.

Remarks/Notes: Any additional comments or observations.

Note that the structure of a test case isn’t set in stone – it can change depending on the project, team, or tool you're using. At its core, a test case should always include an ID, title, steps, expected results, and actual results.

Other details, like preconditions, test environment, or who created/executed the test, might also be added to provide more details and streamline the process.
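If you like to think in code, the components above can be sketched as a simple record. This is only an illustration, assuming Python dataclasses: the `ManualTestCase` class, its field names, the `NOT_RUN` status, and the sample values are all our own choices rather than a standard format.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Dict, List, Optional


class Status(Enum):
    NOT_RUN = "not run"  # assumption: a default state before execution
    PASSED = "passed"
    FAILED = "failed"
    BLOCKED = "blocked"


@dataclass
class ManualTestCase:
    # Written before the test is run
    case_id: str
    title: str
    steps: List[str]
    expected_result: str
    priority: str = "P2"
    preconditions: List[str] = field(default_factory=list)
    test_data: Dict[str, str] = field(default_factory=dict)
    # Filled in after execution
    actual_result: Optional[str] = None
    executed_by: Optional[str] = None
    execution_date: Optional[str] = None
    status: Status = Status.NOT_RUN
    notes: str = ""

    def record(self, actual_result, executed_by, execution_date, status):
        """Store the outcome of one execution."""
        self.actual_result = actual_result
        self.executed_by = executed_by
        self.execution_date = execution_date
        self.status = status


login_case = ManualTestCase(
    case_id="TC-001",
    title="Login with valid credentials",
    steps=[
        "Navigate to the login page.",
        "Enter valid credentials.",
        "Click the login button.",
    ],
    expected_result="The dashboard loads correctly.",
    priority="P1",
    preconditions=["The user must have an existing account."],
    test_data={"username": "demo_user"},  # hypothetical sample data
)
login_case.record("The dashboard loaded.", "QA engineer", "2024-01-15", Status.PASSED)
```

Splitting the fields into "before" and "after" groups mirrors how the test case is filled out in practice.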

How to write test cases?

Okay, now that we’ve covered the boring theoretical basics, we’re getting to the more practical stuff. So let’s dive into the nuts and bolts of writing award-winning test cases.

Understand the requirements

Before you start writing anything, read and understand the requirements. What is the software or feature supposed to do? If you’re uncertain, refer to the project documentation and ask questions. Hint: The people who are likely to know best are the business analyst or the product owner.

The better you understand how the system must perform, the clearer your test cases will be.

Break it down

Once you have a complete picture of the requirements, break them up into smaller, testable pieces.

For example, if you’re testing the online shopping cart feature, the requirement might be “The shopping cart should allow users to add, update, and remove items."

From this single requirement, you can derive test cases for several specific pieces of functionality in a common user flow, for example:

- Adding items – a user can add an item to the cart and the correct quantity and price are displayed;

- Updating quantities – a user can increase or decrease the quantity of an item in the cart and the total price updates accordingly;

- Removing items – a user can remove an item from the cart, and the cart updates correctly;

- Empty cart behavior – an empty cart displays a "Your cart is empty" message;

- Persistence – the cart retains its state across sessions if the user is logged in;

- Limit handling – the cart does not accept quantities beyond the stock limit or negative values; and so on.

This breakdown allows you to test each functionality independently, making it easier to identify and resolve issues.
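If some of these checks are later automated, the breakdown maps naturally onto small, independent tests. Here's a minimal Python sketch, assuming a hypothetical in-memory `Cart` class; the class, its `set_quantity` method, the `rejects` helper, and the sample stock are invented for illustration and don't describe any real shop.

```python
class Cart:
    """Hypothetical in-memory cart; `stock` maps item name to units available."""

    def __init__(self, stock):
        self.stock = dict(stock)
        self.items = {}

    def set_quantity(self, item, quantity):
        """Add, update, or (with quantity 0) remove an item."""
        if quantity < 0 or quantity > self.stock.get(item, 0):
            raise ValueError(f"invalid quantity for {item!r}: {quantity}")
        if quantity == 0:
            self.items.pop(item, None)
        else:
            self.items[item] = quantity


def rejects(cart, item, quantity):
    """Return True when the cart refuses the requested quantity."""
    try:
        cart.set_quantity(item, quantity)
    except ValueError:
        return True
    return False


def test_add_item():
    cart = Cart({"mug": 5})
    cart.set_quantity("mug", 1)
    assert cart.items == {"mug": 1}


def test_update_quantity():
    cart = Cart({"mug": 5})
    cart.set_quantity("mug", 1)
    cart.set_quantity("mug", 3)
    assert cart.items["mug"] == 3


def test_remove_item_leaves_empty_cart():
    cart = Cart({"mug": 5})
    cart.set_quantity("mug", 1)
    cart.set_quantity("mug", 0)
    assert cart.items == {}


def test_limit_handling():
    cart = Cart({"mug": 5})
    assert rejects(cart, "mug", 6)   # above the stock limit
    assert rejects(cart, "mug", -1)  # negative value
    assert cart.items == {}          # rejected requests change nothing


for test in (test_add_item, test_update_quantity,
             test_remove_item_leaves_empty_cart, test_limit_handling):
    test()
```

Each test builds its own cart, so a failure in one piece of functionality doesn't hide or cause failures in another.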

Identify test scenarios

Think about all the ways users might interact with the feature. For example:

- What happens if the user tries to add more items than are available in stock?

- What if they try using special characters when entering item quantity?

- What happens to the cart if the user logs out and logs back in?

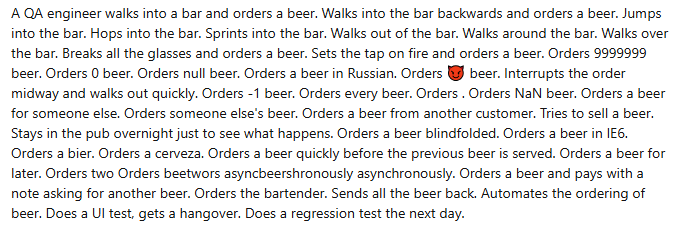

It takes all kinds. Source: Mailtrap

By running through these scenarios, you’ll catch many of the ways users might try to break things and keep your feature robust.
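One way to keep such "what if" scenarios manageable is to list the inputs in a table and run the same check against each. The sketch below assumes a hypothetical `parse_quantity` helper and a stock of 10 units; both are made up for illustration, and your real validation rules may differ.

```python
def parse_quantity(raw, in_stock):
    """Turn raw user input into a quantity between 1 and in_stock, or raise ValueError."""
    text = raw.strip()
    if not (text.isascii() and text.isdecimal()):
        raise ValueError(f"not a whole number: {raw!r}")
    quantity = int(text)
    if not 1 <= quantity <= in_stock:
        raise ValueError(f"quantity out of range: {quantity}")
    return quantity


# Scenario table: raw input mapped to the quantity we expect back.
VALID_INPUTS = {"1": 1, " 3 ": 3, "10": 10}
# Inputs the system should refuse: empty, letters, decimals, negatives,
# zero, more than in stock, special characters, scientific notation.
INVALID_INPUTS = ["", "abc", "2.5", "-1", "0", "11", "3!", "1e1"]


def test_quantity_scenarios(in_stock=10):
    for raw, expected in VALID_INPUTS.items():
        assert parse_quantity(raw, in_stock) == expected
    for raw in INVALID_INPUTS:
        try:
            parse_quantity(raw, in_stock)
        except ValueError:
            continue
        raise AssertionError(f"accepted invalid input: {raw!r}")


test_quantity_scenarios()
```

Adding a new scenario then means adding one entry to a list rather than writing a whole new test.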

Tip: When crafting test scenarios, refer to acceptance criteria (AC) and use them as a checklist for how your software must work. These criteria define the conditions that a software product must meet to be accepted by a user, customer, or other stakeholders.

Essentially, AC outline the expected behavior and performance of the product, so writing your scenarios based on them will help you cover the key areas. Note that usually it takes multiple test cases to check each AC.

Write the test case itself

Then, you basically have to fill out all the test case components we listed above. To make it easier for you, we’ve prepared a few examples and templates (they are in the next section).

Add preconditions. Some tests might require setup. For example, “The user must have an existing account.” or “The user's account has a valid payment method saved.”

As we said, this part is optional, but adding it helps save time, avoid silly mistakes, and let anyone repeat the test without guessing. Plus, if something goes wrong, you’ll know it wasn’t because of the faulty setup.

Provide test data. If your test case needs data (e.g., usernames, passwords, or product names), include it.

Write clear steps. For each scenario, write steps that anyone can follow. Make them simple but specific. For example, for a scenario “User adds a single item to the cart and views the cart” the steps might be:

- Open the website [Website name] and navigate to the product catalog.

- Select a product and click the "Add to Cart" button.

- Navigate to the cart page by clicking the cart icon in the top-right corner.

- Verify that the selected product is listed in the cart with the correct product name, quantity (default should be 1), price, and total cost.

- Confirm that the "Proceed to Checkout" button is visible and functional.

- (Optional) Remove the item from the cart to ensure the cart updates correctly.

Such a detailed guide will allow you to validate the functionality thoroughly.

Define the expected result. For every test step, write what should happen. For example, “The cart page displays the selected product with the correct product name, quantity, price, and total cost” or “The system should display an error message: 'Item is not available.'”

This helps you know if the test passes or fails.

A bit of arguable wisdom. Source: Mailtrap

Review, refine, update

Once you’ve written the test cases, review them. Are the steps clear? Did you cover all the scenarios? Is it easy to follow?

Have a colleague check your test cases—they might spot something you left out.

Even when you start testing, you might find things you missed or need to tweak. Keep updating your test cases as needed.

Those steps don’t seem too difficult, do they? But of course, nothing beats a hands-on illustration.

Test case examples and templates

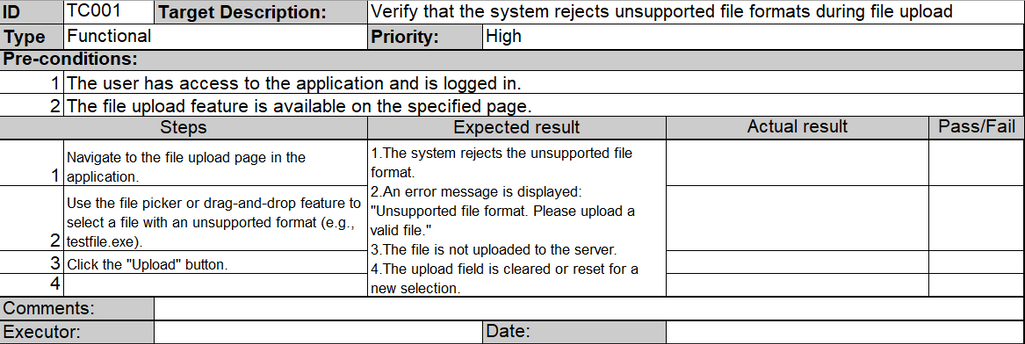

Here’s how a negative test case might look for uploading an unsupported file format. Remember that part of a test case is filled out after running the test.

Negative test case example

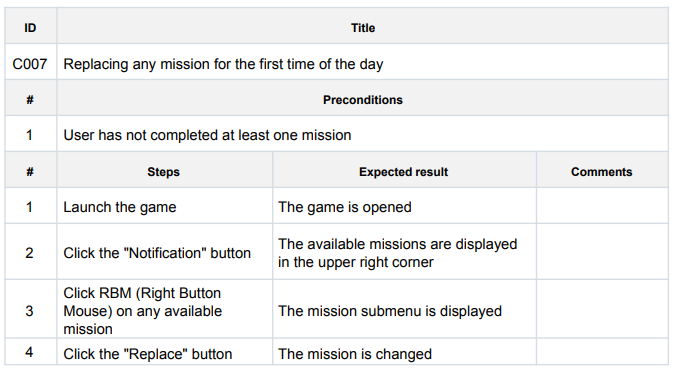

And here’s another example from QATestLab of how simplistic a test case can look. While it takes less time to write, we suggest you add more details.

Replacing the game mission test case example. Source: QATestLab

As for templates, well, you can definitely write test cases in Word or Excel. For example, here’s a downloadable doc template from Katalon and a handy spreadsheet template from ProjectManager.

It might work for small teams and projects. But as the number of test cases grows, managing them in documents and spreadsheets becomes unwieldy. A good way out is to use specialized software.

Test case management software

Using test management tools to create and manage test cases can make your life a lot easier.

Common features of test management software

So here’s what it can do:

- provide customizable templates;

- enable cloning or reusing test cases (that’s very helpful in case of regression testing);

- organize test cases (you can group, categorize, and tag test cases for easy navigation and management);

- link test cases to requirements, defects, or user stories to ensure comprehensive coverage and accountability;

- track changes made to test cases; and so on.

Test case management software also allows you to assign test runs and supports collaboration in general. It also provides handy dashboards and reports to analyze test coverage, results, and team performance.

Besides, it often comes integrated with other tools you use (issue trackers like Jira, CI/CD pipelines, test automation frameworks, etc.) so you can easily share and consolidate all your project data.

Popular test management software platforms

Some of the popular tools recognized for their functionality include BrowserStack, TestCollab, TestRail, PractiTest, TestLink, etc.

Many teams that work with Jira use its test management extensions, such as Xray or Zephyr.

Most platforms have similar features and should help you cover all bases for your testing activities, so we won’t discuss them in detail here.

How to choose test management software

Before picking the right test management solution, identify which features you need, who will use it, and which other tools you want it to be integrated with. When comparing options, focus on these key criteria.

Cost. Does it fit your budget while offering the features you need?

Integration. Does it integrate smoothly with other tools you’re currently using?

Customization options. Can you tailor workflows, fields, and reports to fit your project needs?

Ease of use. Will your team find it user-friendly and intuitive?

Scalability. Can it handle your growing number of test cases and team size over time?

Collaboration. Does it support team collaboration with role-based access and real-time updates?

Reporting. Are the dashboards and reports insightful and easy to generate?

Check with the vendor whether training is provided, whether customer support is included, and how regularly updates are released. Read reviews and test the tool with a free trial or demo to see if it truly fits your workflow before committing.

Managing test cases: common mistakes and best practices

Some common pitfalls when writing and managing test cases include:

- lack of detail,

- ambiguity or unclear descriptions,

- lack of coverage, and

- doing everything on your own.

Below, we suggest some practical tips that will help you avoid these and other pitfalls.

Keep it small and simple. A test case should contain no more than 10-15 steps. If there are more, break them down. Don’t try to check multiple aspects in a single test.

Make it detailed and specific. Write your test case so someone else can follow it without asking you questions. Avoid vague instructions like “Check the functionality.” Be specific instead.

Consider edge cases and negative scenarios. To ensure comprehensive test coverage, include edge cases and negative scenarios. Users can interact with the system in all sorts of ways, so this is the time to be creative.

Yeah, it can take a lot of creativity. And time. Source: SawderJuke on Reddit

For example, for edge cases, it can be checking how the system processes a zero-dollar transaction or how it handles a date input set to February 30.

Though these scenarios may sound odd, not testing them can lead to critical bugs slipping through.
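Both edge cases are easy to turn into quick checks. The Python sketch below uses the standard `datetime.date` constructor, which raises `ValueError` for dates that don't exist; the `parse_date` and `is_valid_amount` helpers, and the rule that a payment must be greater than zero, are assumptions made for illustration.

```python
from datetime import date
from decimal import Decimal


def parse_date(year, month, day):
    """Return a date, or None when the calendar date does not exist."""
    try:
        return date(year, month, day)
    except ValueError:
        return None


def is_valid_amount(amount):
    """Hypothetical rule: a payment must be strictly greater than zero."""
    return amount > Decimal("0")


# Date edge cases
assert parse_date(2024, 2, 30) is None               # February 30 never exists
assert parse_date(2024, 2, 29) == date(2024, 2, 29)  # leap year
assert parse_date(2023, 2, 29) is None               # not a leap year

# Amount edge cases
assert is_valid_amount(Decimal("0.00")) is False     # zero-dollar transaction
assert is_valid_amount(Decimal("0.01")) is True      # smallest positive amount
```

Whether a zero-dollar transaction should be rejected or allowed is a business decision, so check the requirements before settling on the expected result.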

Prioritize. You probably can’t test everything all at once, so start with the most critical test cases. Priorities help testers and teams decide the sequence in which test cases should be executed, especially when time or resources are limited. There are 3 common priority levels.

- High Priority (P1) – test cases covering critical functionality or high-risk areas. Failure in these areas could result in a system crash, significant business impact, or a poor user experience.

Example: Testing payment processing in an eCommerce application.

- Medium Priority (P2) – test cases that verify important but noncritical functionality. Failure in these areas may not stop the application but could cause moderate inconvenience to users.

Example: Testing email notifications or user profile updates.

- Low Priority (P3) – test cases covering minor or optional features, edge cases, or aesthetic aspects. Failures here typically have little to no impact on the application's core functionality or business processes.

Example: Testing how the application performs on less commonly used browsers like Opera Mini or older versions of Internet Explorer.
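To show how priorities can drive the execution sequence, here is a small Python sketch. The test case IDs, the tuple layout, and the `execution_order` and `within_budget` helpers are hypothetical; the titles simply reuse the examples from the priority levels above.

```python
PRIORITY_ORDER = {"P1": 0, "P2": 1, "P3": 2}

# (ID, title, priority)
TEST_CASES = [
    ("TC-03", "Rendering in Opera Mini", "P3"),
    ("TC-01", "Payment processing", "P1"),
    ("TC-02", "Email notifications", "P2"),
    ("TC-04", "User profile updates", "P2"),
]


def execution_order(cases):
    """Sort by priority; sorted() is stable, so ties keep their original order."""
    return sorted(cases, key=lambda case: PRIORITY_ORDER[case[2]])


def within_budget(cases, lowest_priority):
    """Keep only cases at or above the given priority, e.g. "P2" drops P3."""
    cutoff = PRIORITY_ORDER[lowest_priority]
    return [case for case in execution_order(cases)
            if PRIORITY_ORDER[case[2]] <= cutoff]


for case_id, title, priority in execution_order(TEST_CASES):
    print(priority, case_id, title)
```

When time is short, calling `within_budget(TEST_CASES, "P2")` would leave only the high- and medium-priority cases in the run.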

Organize. Keep your test cases organized. Use a spreadsheet, test management tool, or your team’s preferred format. Label each test case with a name or ID so it’s easy to find later.

Collaborate. Involving other stakeholders and incorporating their ideas in writing test cases helps leave nothing unexplored. Consider picking the brains of developers, business analysts, product owners, and end users.

Maria is a curious researcher, passionate about discovering how technologies change the world. She started her career in logistics but has dedicated the last five years to exploring travel tech, large travel businesses, and product management best practices.
