It’s getting harder to distinguish AI-generated images from real ones. If you frequent social networks, you’ve probably noticed how readily people repost synthesized visuals, from realistic cute kittens to stunning landscapes to impressive paintings. Some users do not even realize that all those pictures are made by computers; others simply enjoy AI images or think that AI is a better artist than humans.

Just imagine: More than 34 million pictures are produced by AI per day. We owe the lion’s share of this impressive amount to just four “artists”: DALL-E, Stable Diffusion, Adobe Firefly, and Midjourney. Understanding how they work and mastering them is becoming vital for millions of professionals in graphic and game design, content creation, e-commerce, marketing, and other domains. This article compares the capabilities and outlines the limitations of the AI generators that are on everybody’s lips.

How AI image generators work

AI image generators synthesize visuals from text prompts using a set of advanced deep learning models, also called neural networks. They are trained on massive datasets containing millions of text-to-image pairs. As a result, generators gain the ability to associate descriptions with relevant pictures and produce new visuals accordingly.

How AI image generation works

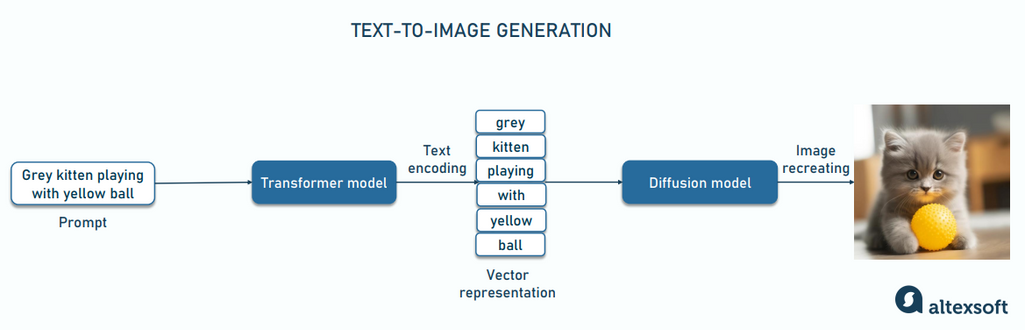

Modern AI generators accomplish text-to-image conversion in two steps, each relying on a specific type of generative model — namely

- a transformer-based model for encoding text, and

- a diffusion model for producing visuals.

Two-stage process of AI image generation

Transformers, designed by Google to boost natural language processing, now power Google Search and Translate, fuel large language models (LLMs) behind tools like ChatGPT and Bard, and improve speech recognition and text autocompletion. They also successfully work in domains beyond language — including image recognition and sound generation.

As LLM capabilities grow and applications built on top of LLMs are getting more popular, we recommend reading our comprehensive article on LLM API integration for business apps.

In the AI image generation process, transformers are responsible for grasping the meaning of a prompt. They use the self-attention mechanism to understand how different parts of the sentence relate to each other and then transform the data points, with all their interconnections and dependencies, into a numerical representation called a vector.
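To make the self-attention idea more concrete, here is a minimal sketch in plain Python. It assumes toy three-dimensional word vectors invented for illustration, and it omits the learned query, key, and value projections that real transformers use. It shows only the core idea: each word's new vector becomes a weighted mix of all the words in the prompt.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention without learned projections.

    Every token vector acts as query, key, and value at once,
    which is a simplification made for this illustration.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Similarity of this token to every token in the prompt.
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
                  for key in vectors]
        weights = softmax(scores)
        # Blend all token vectors according to the attention weights.
        mixed = [sum(w * vec[i] for w, vec in zip(weights, vectors))
                 for i in range(dim)]
        outputs.append(mixed)
    return outputs

# Hypothetical embeddings for the prompt "blue baseball cap".
prompt_vectors = [
    [1.0, 0.0, 0.5],   # "blue"
    [0.0, 1.0, 0.5],   # "baseball"
    [0.2, 0.9, 0.6],   # "cap"
]
contextual = self_attention(prompt_vectors)
```

In this toy setup, the vector for "cap" ends up pulled toward "baseball" more than toward "blue", because their made-up embeddings are more similar.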

Diffusion models are inspired by the concept of diffusion, which you may recall from physics classes — that’s when particles spread from concentrated to more dispersed states. During training, a diffusion model gradually adds noise to images until they become unrecognizable and then accomplishes the reverse process, learning how to restore visuals from the noise.

As part of text-to-image architectures, diffusion models use information from the vector to recreate a picture that conveys the prompt's ideas.
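The noising half of that training process can be sketched in a few lines. The example below is an illustration under stated assumptions, not the procedure of any particular generator: it uses a hypothetical four-pixel image, a simple linear noise schedule, and a variance-preserving blend of image and Gaussian noise. A real model would then be trained to predict the added noise so it can run the process in reverse.

```python
import math
import random

def add_noise(pixels, noise_level, rng):
    """Blend an image with Gaussian noise.

    noise_level = 0.0 returns the original pixels,
    noise_level = 1.0 returns pure noise.
    """
    keep = math.sqrt(1.0 - noise_level)
    spread = math.sqrt(noise_level)
    return [keep * p + spread * rng.gauss(0.0, 1.0) for p in pixels]

def forward_diffusion(pixels, steps, seed=0):
    """Return progressively noisier copies of the image, one per step."""
    rng = random.Random(seed)
    # Linear schedule (an assumption): noise grows evenly from 0 to 1.
    return [add_noise(pixels, step / steps, rng) for step in range(steps + 1)]

# A hypothetical 4-pixel grayscale "image" with values scaled to [-1, 1].
image = [-1.0, -0.5, 0.5, 1.0]
trajectory = forward_diffusion(image, steps=10)
```

Here `trajectory[0]` is the untouched image and `trajectory[-1]` contains no trace of it, which mirrors the "until they become unrecognizable" part of the description.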

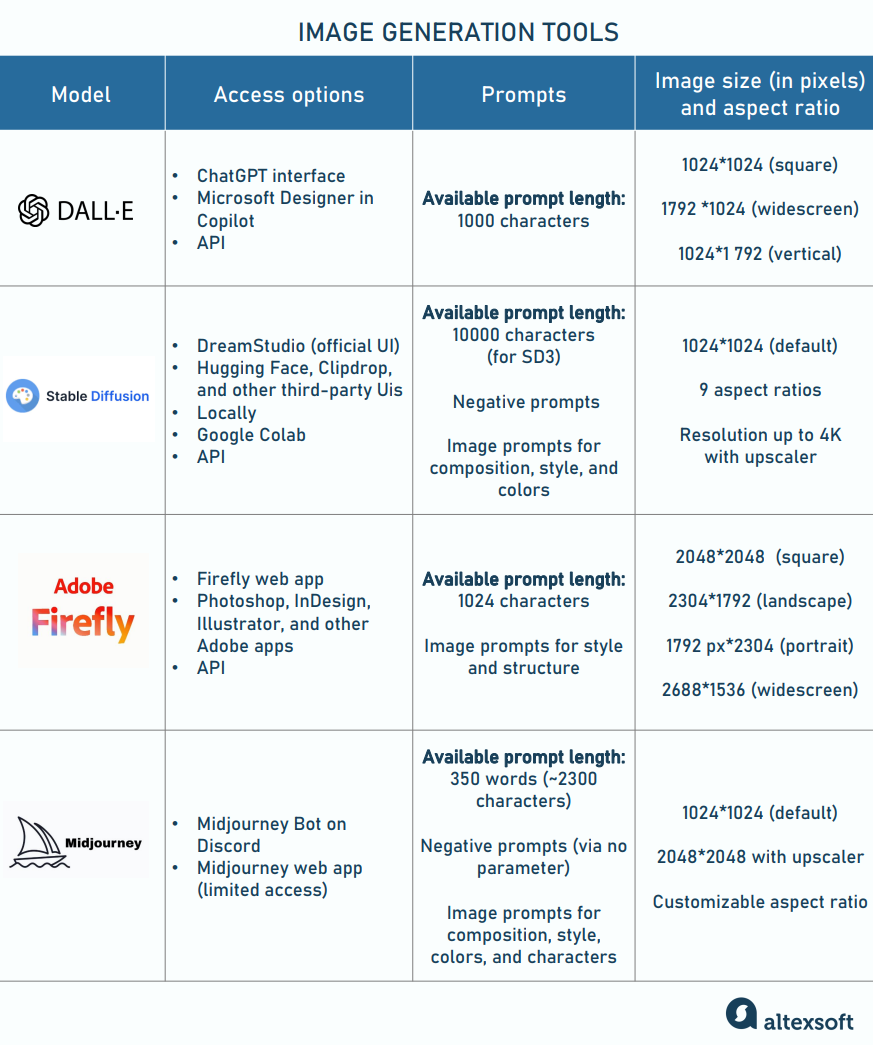

While relying on the same approaches, image generation tools differ greatly in access options, supported prompts, result accuracy, image quality, pricing terms, and other aspects. We compare the four most used tools, DALL-E, Stable Diffusion, Adobe Firefly, and Midjourney, each with peculiarities to be aware of.

The top image generators compared

DALL-E image generator: handling and finetuning complex prompts

DALL-E is an AI text-to-image generator from OpenAI, integrated with ChatGPT. Interestingly, the name hints at surrealist artist Salvador Dalí and Pixar’s movie WALL-E. For text understanding, DALL-E employs a version of GPT (generative pre-trained transformer) that leverages 12 billion parameters.

DALL-E 2 vs DALL-E 3

Two model versions, DALL-E 2 and DALL-E 3, coexist today. When creating the third edition, OpenAI relied on a dataset with improved, highly descriptive image captions, 95 percent of which were synthetic, that is, produced by AI (namely, by GPT-4).

While DALL-E 3 follows prompts better than its predecessor, it still has much room for improvement when it comes to

- object placement (it struggles with words like “behind,” “to the right of,” “over,” and so on);

- incorporating text into the image; and

- generating images for specific terms — such as plant or bird species.

DALL-E 3 is accessible directly in the ChatGPT interface and through Microsoft’s Designer (formerly Bing Image Creator) built into Copilot (formerly Bing Chat). You can also integrate both versions into your application via OpenAI APIs.

How to use DALL-E

Due to the chatbot interface, DALL-E is extremely easy to use. Relying on the powerful LLM behind it, the tool processes complex descriptions and automatically enhances them before converting the text to unique, realistic visuals. You can choose from three available aspect ratios — square, widescreen, and vertical. The tool generates two images at a time and lets you edit them by adding new textual details and applying different styles and effects.

The more specific you are, the more relevant the visual you’ll get. The maximum length of a prompt for the latest model version is 1,000 characters (140-250 words with spaces), which is a large improvement over the 400 characters of the previous iteration, DALL-E 2 (between 60 and 100 words with spaces). At the same time, neither version supports image inputs or negative prompts, which are used to avoid unwanted elements.

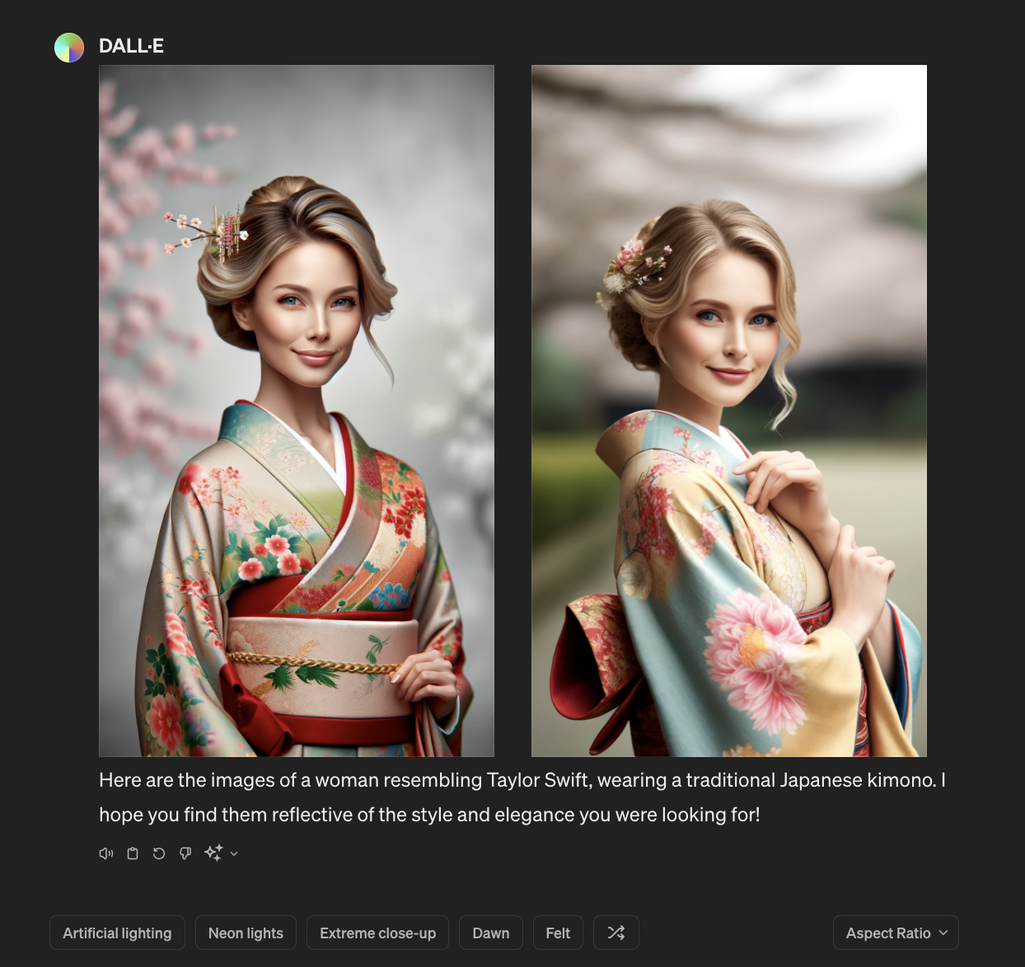

Note that DALL-E bans violent content, images in the style of a living artist, and pics of a specific person. For example, if your request is to portray Taylor Swift in a kimono, the tool will answer with an image of “a woman styled similarly to a popular singer-songwriter.”

A girl in a kimono resembling Taylor Swift created by DALL-E

DALL-E 2 produces pictures with a maximum resolution of 1024 x 1024 pixels, while the third version allows for a size of 1024 x 1792 pixels or 1792 x 1024 pixels. Besides, it lets you choose between standard and HD image quality. The latter excels in highlighting details and capturing textures, but the image will cost more and take longer to generate. Pictures are downloadable in WebP format only. The outputs created with DALL-E belong to you, so you can freely reprint or sell them.
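For API integration, these size and quality options map to fields in the request body. The sketch below only builds and validates that body without sending it. The endpoint, field names, and size lists reflect our understanding of OpenAI's image generation API at the time of writing and should be checked against the current documentation; `build_image_request` is a hypothetical helper name.

```python
import json

# Sizes accepted per model (assumption based on OpenAI's image API docs).
ALLOWED_SIZES = {
    "dall-e-2": {"256x256", "512x512", "1024x1024"},
    "dall-e-3": {"1024x1024", "1792x1024", "1024x1792"},
}

def build_image_request(prompt, model="dall-e-3", size="1024x1024", quality="standard"):
    """Build a JSON body for POST https://api.openai.com/v1/images/generations."""
    if model not in ALLOWED_SIZES:
        raise ValueError(f"unknown model: {model}")
    if size not in ALLOWED_SIZES[model]:
        raise ValueError(f"{model} does not support size {size}")
    body = {"model": model, "prompt": prompt, "n": 1, "size": size}
    # The standard/HD choice applies to DALL-E 3 only.
    if model == "dall-e-3":
        if quality not in ("standard", "hd"):
            raise ValueError("quality must be 'standard' or 'hd'")
        body["quality"] = quality
    return body

request_body = build_image_request(
    "a porcelain chef statue wearing a blue baseball cap",
    size="1024x1792",
    quality="hd",
)
print(json.dumps(request_body, indent=2))
```

Validating the size on the client side catches mismatches, such as asking DALL-E 2 for a widescreen picture, before a paid request is made.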

DALL-E price

DALL-E 3 is part of ChatGPT subscriptions: Plus ($20 per user/month), Team ($25 per user/month), or Enterprise (available on request). It’s not included in the free package.

If you integrate DALL-E into your app via API, you’ll pay per image generated. The price depends on the tool’s version and the picture resolution, varying from $0.016 for a 256 x 256 image from DALL-E 2 to $0.12 for a 1024 x 1792 image from DALL-E 3 in HD quality.

Stable Diffusion models: available for downloading and retraining

Powered by Stability AI, Stable Diffusion (SD) is a collection of latent diffusion models initially trained on 2.3 billion text-to-image pairs and excelling in a photorealistic style. Most SD versions are open source and downloadable, so you can run and finetune them on local machines or in a cloud environment.

SDXL, Stable Diffusion 3, and others

Several editions of the SD model are in use today, including:

- Stable Diffusion v1, pre-trained on 256 x 256 images and then enhanced on 512 x 512 images;

- Stable Diffusion v2, working at 512 x 512 and 768 x 768 resolutions;

- Stable Diffusion XL (SDXL), optimized for 1024 x 1024 images;

- SDXL Turbo, which is several times faster than SDXL;

- Stable Diffusion 3, the latest edition; and

- SD 3 Turbo, a faster SD 3 version.

SD 3 benefits from a new Multimodal Diffusion Transformer (MMDiT) architecture that enables a bi-directional flow of information between text and image data units within a neural network. This approach sets SD 3 apart from previous generators where text impacts imaging but not vice versa.

The improvements from older versions include a better understanding of multi-subject prompts and more accurate text representation within generated images. SD 3 also offers a faster, more efficient way of model training that makes AI image generation cheaper.

DreamStudio and alternative ways to get in touch with Stable Diffusion

There are multiple ways to access representatives of the SD family.

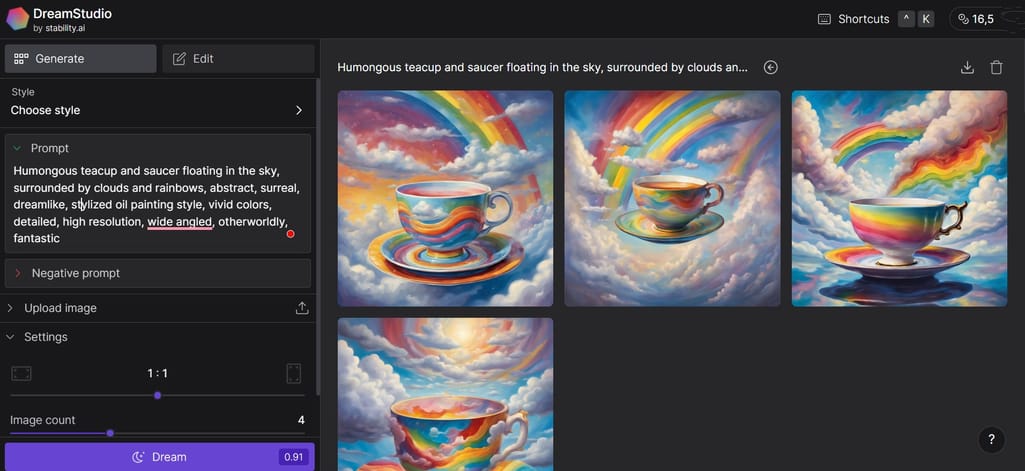

Via its official web interface. DreamStudio (DS) is a web-based application by Stability AI that lets you generate images with an SD v1 or SDXL model and edit them. By default, each prompt produces four pictures, but you can set this number anywhere from 1 to 10. The tool allows you to apply different styles and make basic edits.

Via other web interfaces. Many websites have integrated SD models, allowing you to try and test various versions through a user-friendly interface. Examples include the image editing tool Clipdrop, the machine learning platform Hugging Face, the AI art generator NightCafe, and others.

DreamStudio, the official web interface by Stability AI to access SD models

On your computer. You can download Stable Diffusion v1, Stable Diffusion v2, SDXL, or SDXL Turbo to your machine for free (SD 3 is not downloadable at the time of writing). This option addresses privacy concerns and lets you finetune a model on a custom dataset, provided you have sufficient computing resources. To work with Stable Diffusion, install an SD management tool such as ComfyUI, Stable Diffusion web UI, or Stability Matrix.

Note that system requirements differ from edition to edition, but generally, they involve at least 16 GB of RAM, 8 GB of video RAM, and 10 GB of install space. This minimum will allow you to produce 256 x 256 pictures. For 512 x 512 pixels, you should choose graphics cards with 12 GB of VRAM or more. Top-tier NVIDIA graphics processing units (GPUs) are considered ideal for SD.

On Google Colab. Generative AI can be rather slow and computationally expensive when you run it locally. That’s where Google Colab, a cloud-based analog of Jupyter Notebook, comes in handy. The tool enables you to experiment with machine learning algorithms, taking advantage of Google’s powerful GPUs and Tensor Processing Units (TPUs) that speed up AI computing. You can download SD versions to Google Drive and launch them on Colab to create images or retrain a chosen model on your custom dataset.

Via APIs. Stability AI provides REST API access to SD v1, SDXL, SD 3, and SD 3 Turbo, enabling you to make 150 calls within 10 seconds. You may also reach tools for editing images, upscaling them up to 4K resolution (3840 x 2160 pixels), and refining existing pictures and sketches. Stability AI lets you use API keys to try out its services on Google Colab or Fireworks AI (for SD 3 only).
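If your app calls the API heavily, it helps to throttle requests on the client side so you stay under the limit of 150 calls per 10 seconds. One possible approach is a sliding-window limiter, sketched below. The class and method names are hypothetical, and how the service itself counts calls or responds to excess traffic is not covered here.

```python
import time
from collections import deque

class SlidingWindowLimiter:
    """Allow at most max_calls within any window of `window` seconds."""

    def __init__(self, max_calls=150, window=10.0, clock=time.monotonic):
        self.max_calls = max_calls
        self.window = window
        self.clock = clock      # injectable so the limiter can be tested
        self.calls = deque()    # timestamps of recent calls, oldest first

    def try_acquire(self):
        """Record a call and return True, or return False if the limit is hit."""
        now = self.clock()
        # Forget calls that have left the window.
        while self.calls and now - self.calls[0] >= self.window:
            self.calls.popleft()
        if len(self.calls) < self.max_calls:
            self.calls.append(now)
            return True
        return False

limiter = SlidingWindowLimiter()
# Try 200 calls back to back; only those within the limit are allowed.
allowed = sum(limiter.try_acquire() for _ in range(200))
print(allowed)
```

A caller would check `try_acquire()` before each request and wait briefly, or queue the job, whenever it returns `False`.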

How to use Stable Diffusion

Unlike DALL-E, SD supports two types of inputs: text prompts and images accompanied by text prompts. The maximum prompt length varies from version to version. SD 3, for example, allows for up to 10,000 characters, so your description can be almost endless. But, of course, you can typically get by with far fewer words. The key idea here is to be as specific as possible: Clearly define the desired art style, elements, and colors.

If you work via DreamStudio, locally, on Google Colab, or connect your app through API, you can further tailor your description with a negative prompt.

You may also add weights at the end of words to make them more or less important. The default weight of each word is 1, so apply

- “+” or a number between 1.1 and 2 to increase emphasis; and

- “-” or a number between 0 and 0.9 to reduce emphasis.

If you want to highlight and weight a phrase, put it in parentheses. A weight must follow a word or phrase directly, without a space. Weighting also works for negative prompts. Besides that, SD allows you to use images as a part of the prompt to influence the output’s composition, style, and colors.
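The weighting rules above can be applied programmatically when prompts are assembled from parts. The helper below is a sketch of one reading of those rules: the weight is attached directly after a parenthesized phrase. The function names are hypothetical, wrapping single words in parentheses is our assumption, and the exact notation depends on the tool you use (some front ends expect a form like `(phrase:1.5)` instead).

```python
def weighted(text, weight=1.0):
    """Attach a weight to a word or phrase, with no space before the weight."""
    if not 0 <= weight <= 2:
        raise ValueError("weight must be between 0 and 2")
    if weight == 1.0:
        return text  # default emphasis, nothing to add
    # Parentheses are always added here, even around a single word (assumption).
    return f"({text}){weight:g}"

def build_prompt(parts):
    """Join (text, weight) pairs into one comma-separated prompt."""
    return ", ".join(weighted(text, weight) for text, weight in parts)

prompt = build_prompt([
    ("portrait of a chef", 1.0),
    ("blue baseball cap", 1.5),
    ("background clutter", 0.6),
])
print(prompt)
# portrait of a chef, (blue baseball cap)1.5, (background clutter)0.6
```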

SD normally lets you choose from nine aspect ratios (width-to-height proportions) for your pictures: 21:9, 16:9, 5:3, 3:2, 1:1, and the inverted versions of the first four (9:21, 9:16, 3:5, and 2:3). Outputs are downloadable in PNG (by default), JPEG, and WebP formats. But this can change depending on the tool you use to access Stable Diffusion.

Stable Diffusion price

Whether you access SD models via web interfaces or APIs, pricing is based on the computing resources needed to fulfill your request. For 10 dollars, you can buy 1000 credits, which is enough to generate 1,000 images with SDXL. You also have 25 free credits to try the services. Learn more about credits here.

At the same time, models downloaded on your computer are free — as long as you use them for research or personal purposes. To gain commercial rights, you must apply for Stability AI membership starting at $20 per month.

Adobe Firefly AI: safe for commercial use

Adobe Firefly is a component of the Adobe Creative Cloud, which also includes tools like Adobe Express, Photoshop, and Illustrator. The Firefly AI was trained on licensed images from Adobe Stock and public content with expired copyrights, which makes its outputs safe for commercial use. This way, Adobe tried to distance itself from competitors like DALL-E with their copyright issues.

Two model versions are now accessible: Firefly Image 2 and Firefly Image 3 (beta). The latter offers improved prompt understanding, higher image quality, a broader range of styles, and other advances.

For end users, there are two options to access generative features:

- on a standalone Adobe Firefly website, and

- within other Adobe products — such as Adobe Photoshop, Adobe InDesign, Adobe Illustrator, and Adobe Express.

In any case, you must have an Adobe account to work with the Firefly model. Enterprises can integrate the image generator directly into their workflows via Firefly REST API.

How to use Adobe Firefly

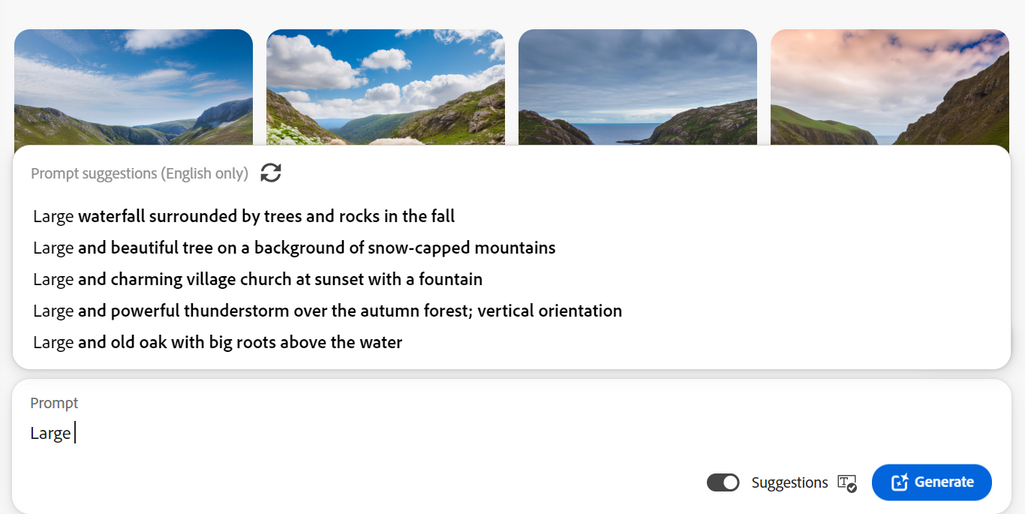

Firefly is designed to produce photorealistic and artistic images from simple, direct text prompts in over 100 languages, with a maximum length of 1024 characters (160-200 words). Model creators recommend “using at least three words” and describing “not just what you want to see but how you want it to look.” If you run out of ideas, try a suggestions tool that can offer creative ideas — but in English only.

Adobe Firefly has plenty of ideas about what you would like to see in the pictures

Similar to DALL-E, Firefly doesn’t have a negative prompt option to exclude unwanted elements. At the same time, you can upload pictures to direct the tool in terms of a desired structure and style.

From a prompt, you get four different outputs in one of the available aspect ratios — landscape (4:3), portrait (3:4), square (1:1), or widescreen (16:9). No matter the parameters, you can further tweak pictures by applying different styles, colors, effects, camera angles, and more. Firefly lets you add texts, graphic elements, frames, and more. The latest features available in the preview are

- Generative Fill — to remove objects and backgrounds and paint new ones with the help of text prompts; and

- Generative Expand — to scale an image, change its aspect ratio, and fill the empty space with the help of prompts. Additions will naturally blend with the original picture.

After you’re done, you may download images in JPEG format or submit them to a shared library. Adobe Firefly stands out from competitors by offering images twice the size of most AI generators — 2048 x 2048 pixels by default.

If you’re on a free plan, the content will have an Adobe watermark on it. To remove this element, you must purchase a subscription. Yet, even paid users are prohibited from utilizing Firefly-generated pictures to train or finetune other machine learning algorithms and AI systems.

Adobe Firefly pricing

Similar to Stable Diffusion, Adobe sets prices based on the amount of computing resources a certain AI feature requires. Each subscription covers a particular number of so-called generative credits, which you can spend on different services, each having its own cost. Say, every text-to-image generation operation costs you 1 credit, while Generative Fill costs an extra credit every time you apply it. Pricing also depends on the number of additional services and available Adobe apps.

For example, with a free plan, you get 25 credits monthly and access to the Firefly website only. The premium plan ($4.99 monthly) grants you 100 credits. The single apps plan ($9.99 monthly) comes with 500 credits and additionally enables you to use one more Adobe app — whichever you choose. You can compare different plans for individuals, students and teachers, and businesses here.

Midjourney AI: the best at artwork creation

Midjourney (MJ) is an independent research lab and text-to-image model renowned for its ability to produce award-winning artistic pictures. It’s very popular among graphic and video game artists, cartoonists, and other creative people.

The latest model update is MJ Version 6, which comes with a better understanding of longer prompts and more granular color control. But you can also switch to earlier iterations or try the Niji version, tailored for anime and manga styles.

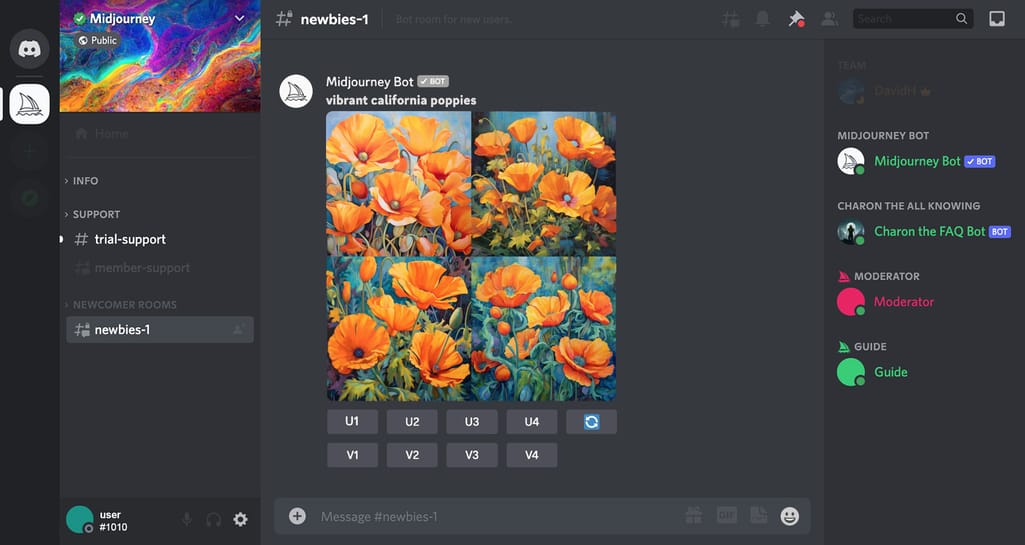

Midjourney Discord and Midjourney Bot

Unlike other AI generators, MJ doesn’t have an API since its stated goal is to bring creative power to individuals, not to corporations. Individuals, in turn, have had no other way to access MJ than through the Discord chat app, originally designed for gamers. In December 2023, the lab finally launched a dedicated web interface. Yet, as of mid-2024, the tool is still at the alpha stage, with only users who generated at least 100 images on Discord eligible to enjoy it. All that said, you must interact with Discord first.

To start working with AI technology, you must

- register a Discord account,

- join the Midjourney server (the server here is the same as the community) on Discord, and

- purchase a subscription on the Midjourney website (there is no free plan).

Midjourney Bot on the Discord Midjourney server

Discord’s MJ server is where you get all the necessary official information and support, along with the ability to get in touch with over 20.2 million members. At the same time, you can run the bot on other Discord servers, including those you created.

How to use Midjourney

Midjourney V6 supports text prompts of up to 350 words (around 1800-2300 characters), but even a single word results in four beautiful images. In general, the best outputs come from simple, to-the-point phrases. If you run out of words, you can upload a picture that inspires you and apply the "describe" command to get four text prompts for further work.

Besides text, prompts for the MJ bot may incorporate

- emojis to convey the mood;

- image prompts (links to images on the Internet) to be used as references to style, composition, color palette, or character; and

- parameters to change a model version, set aspect ratio, style, desired quality, and more. Read a list of available parameters here.

For example, by default, the bot will produce 1024 x 1024 images, but you can change the proportions by applying the aspect ratio parameter (--ar) followed by the desired width-to-height ratio (4:5 for a common portrait, 16:9 for a typical landscape, etc.).

There is also a parameter to add negative prompts. You can place “--no” before objects you don’t want to see in the picture (like "room --no cats"). Keep in mind that it’s the most effective way to avoid undesired elements since the bot may be insensitive to phrases like “Please, draw a room without cats!” or “Don’t show cats in the room!”
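Since parameters always go at the end of a prompt, it can be convenient to assemble the final string with a small helper, for example when you keep a library of reusable descriptions. The function below is a hypothetical sketch that covers only three parameters (`--ar`, `--no`, and `--v` for the model version); it builds the text you would paste after the bot's `/imagine` command and does not talk to Discord.

```python
def midjourney_prompt(description, aspect_ratio=None, exclude=(), version=None):
    """Compose a prompt string: description first, parameters last."""
    parts = [description.strip()]
    if aspect_ratio:
        width, height = aspect_ratio
        parts.append(f"--ar {width}:{height}")
    if exclude:
        # Several unwanted items are listed after a single --no.
        parts.append("--no " + ", ".join(exclude))
    if version:
        parts.append(f"--v {version}")
    return " ".join(parts)

prompt = midjourney_prompt(
    "cozy living room",
    aspect_ratio=(16, 9),
    exclude=["cats"],
    version=6,
)
print(prompt)
# cozy living room --ar 16:9 --no cats --v 6
```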

Among other helpful tools to finetune results are

- text generation that lets you highlight the phrase you want to incorporate into the image with double quotation marks, leading to accurate spelling;

- upscalers to double the size of your image; and

- pan to expand an image without changing its content.

It’s worth noting that the MJ bot, while leveraging rich functionality, is not as intuitive as dedicated web apps of other AI generators. So you must thoroughly learn the MJ documentation to fully reveal its potential.

All images generated by the bot automatically appear on the Midjourney website, become visible to other users, and can be downloaded in PNG format. To avoid exposure, you must create pictures in the Stealth Mode, which is available at higher subscription tiers (Pro and Mega) only.

Midjourney price

Midjourney does not offer a free plan. It has four subscription levels, with a 20 percent discount for annual billing. Pricing ranges from $10 to $120 per month and defines how much GPU time you can consume.

One hour of intensive GPU usage translates into about 60 images. So, with the cheapest Basic Plan ($10), you can create approximately 200 pictures a month. The Standard Plan ($30) allows for unlimited image creation — if you switch to a slower generation. The Pro Plan ($60) lets you activate the Stealth Mode. You must buy the Pro or Mega Plan ($120) if your business grosses more than one million dollars a year. Learn more pricing details here.
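The arithmetic behind these estimates is simple enough to script when you compare plans against your expected workload. The sketch below uses only the figures quoted above (about 60 images per GPU hour and roughly 200 images on the $10 plan); the function names are illustrative, and actual GPU consumption varies with the settings you choose.

```python
IMAGES_PER_GPU_HOUR = 60  # the estimate for intensive GPU usage quoted above

def gpu_hours_needed(images):
    """Fast GPU hours required for a given number of images."""
    return images / IMAGES_PER_GPU_HOUR

def cost_per_image(monthly_price, images):
    """Average price of one image when the monthly allowance is fully used."""
    return monthly_price / images

basic_hours = gpu_hours_needed(200)
basic_cost = cost_per_image(10, 200)
print(f"{basic_hours:.1f} GPU hours, ${basic_cost:.2f} per image")
# 3.3 GPU hours, $0.05 per image
```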

Experimenting with AI generators: Which one understands you best?

We tested the four image generators across several parameters to showcase how differently DALL-E, Stable Diffusion (SDXL accessed via DreamStudio), Adobe Firefly, and Midjourney handle the same task. Below, we present results for comparison, along with an initial prompt.

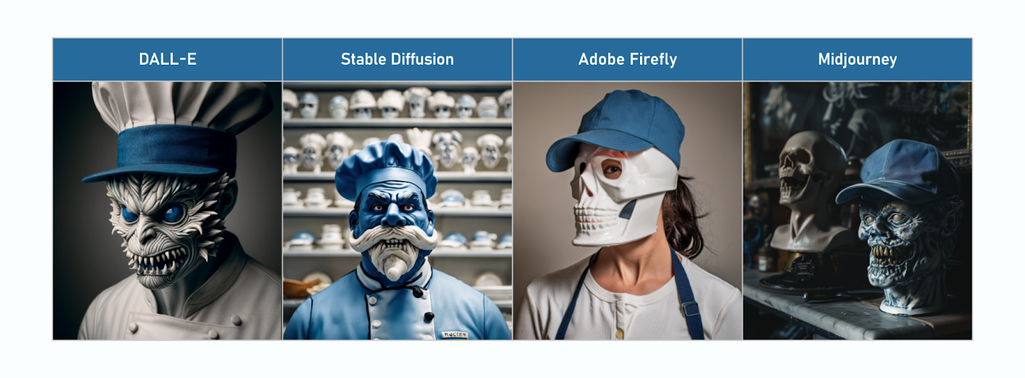

Prompt: cinematic photography, a Chef statue; porcelain monster mask, portrait shot; blue baseball cap.

SD missed a cap, while MJ missed a chef

In this test, SD overused the blue color and missed the mention of a baseball cap, while DALL-E merged it with a chef's hat. Only Firefly pictured a chef as a long-haired woman. Midjourney, on the other hand, ignored the word chef altogether.

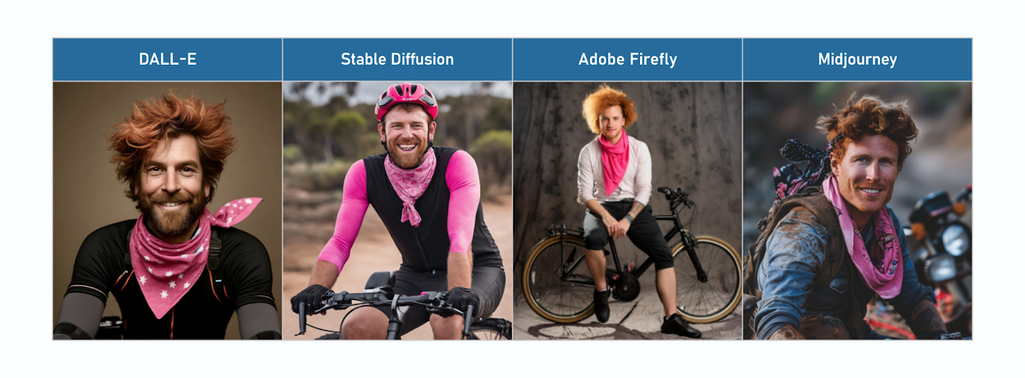

Prompt: realistic 30-year-old Aussie cyclist with red, blown-out hair, sitting on his electric bike, pink starred bandana tied around the neck, faces the camera and smiles. Full body photo. The background is deep, dirty, and neutral.

Only Adobe created a full-body image

Of all generators, Firefly followed the prompt most accurately — except for the star pattern of the bandana. All other images are not full-body, nor do they present an electric bike. The cyclist by Stable Diffusion wears a helmet instead of blown-out hair and has issues with leg positioning.

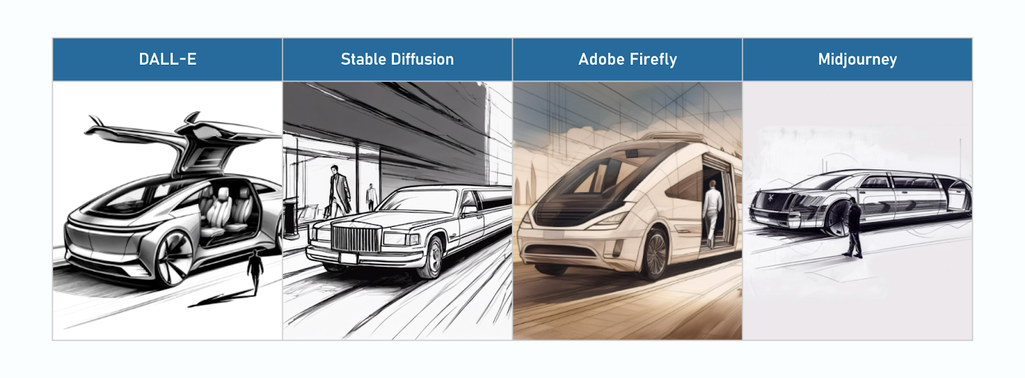

Prompt: sketch of a fully automated, sleek & stylish limousine with an open rear door and somebody walking away.

DALL-E and MJ definitely exaggerated the limousine’s size

Models definitely have a different understanding of how a fully automated and stylish limousine should look. DALL-E and Midjourney made the vehicles too large compared to the human figures. Stable Diffusion didn’t notice that a rear door should be open, while in the Midjourney visual, the door looks broken rather than open. None of the visuals properly interpreted the phrase walking away: The figures approach, pass by, or enter a vehicle instead of going in the opposite direction.

Common limitations of AI image generators

Despite all advances, AI image generators are still far from producing perfect outputs. Many issues stem from the data on which models were trained.

Amplifying Western stereotypes. Typically, training data for AI generators consists of English text-image pairs representing the Western world with all its traditional stereotypes, gender prejudices, and racial biases. OpenAI recognized that visuals from DALL-E 3 have a “tendency toward a Western point of view” and “disproportionately represent individuals who appear White, female, and youthful.”

Stable Diffusion demonstrates similar biases, as revealed in a University of Washington study. It found that SD sexualizes women from Latin America, Mexico, India, and Egypt. Also, if asked to picture a person, the model would most likely produce a portrait of a light-skinned man.

Messing up limbs. Like many amateur artists, AI struggles with depicting human hands, feet, and other body parts. This pitfall has a simple explanation: In AI datasets, limbs in images of people are less visible than, for example, faces or clothes, resulting in extra legs, odd-looking palms, and other abnormalities.

Hands are particularly difficult for AI to render. With its 27 bones, 27 joints, 34 muscles, and over 100 ligaments, a human hand can take infinite positions, make a wide range of moves, and grasp an endless number of objects. All five fingers are not always visible, and sometimes they are clenched into a fist. Since AI doesn’t really understand the anatomy of hands and how they work, it often fails to reproduce these complex objects correctly.

Small size. The big problem with AI art is low resolution. While 1024 x 1024 pixels work for the web, even twice that size won’t be enough for printing. You have to use another type of AI-fueled software, for example, Gigapixel 7 or Let’s Enhance.io, to upscale low-res pictures and make them suitable for professional print.
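A quick calculation shows why these resolutions fall short for print. The sketch below assumes 300 dots per inch (DPI), a figure commonly used for high-quality print that does not come from the generators themselves; the function names are illustrative.

```python
def print_size_inches(width_px, height_px, dpi=300):
    """Largest print size, in inches, at the given dots per inch."""
    return width_px / dpi, height_px / dpi

def pixels_needed(width_in, height_in, dpi=300):
    """Pixel dimensions required to print at the given size and DPI."""
    return round(width_in * dpi), round(height_in * dpi)

for side in (1024, 2048):
    w, h = print_size_inches(side, side)
    print(f"{side} px -> {w:.1f} x {h:.1f} in at 300 DPI")
# 1024 px -> 3.4 x 3.4 in at 300 DPI
# 2048 px -> 6.8 x 6.8 in at 300 DPI

print(pixels_needed(8, 10))
# (2400, 3000)
```

Under that assumption, even a 2048 x 2048 picture covers less than 7 inches per side, while an 8 x 10 inch print already calls for 2400 x 3000 pixels.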

New versions of current models are already being trained. With each iteration, AI generators become more skillful and sophisticated, so it’s quite possible that the next releases will understand, polish, and visualize our ideas even better than in our wildest dreams. A prospect both thrilling and daunting.