In today's data-driven world, organizations amass vast amounts of information that can unlock significant insights and inform decision-making. By commonly cited industry estimates, around 80 percent of this digital treasure trove is unstructured data, which lacks a predefined format or organization.

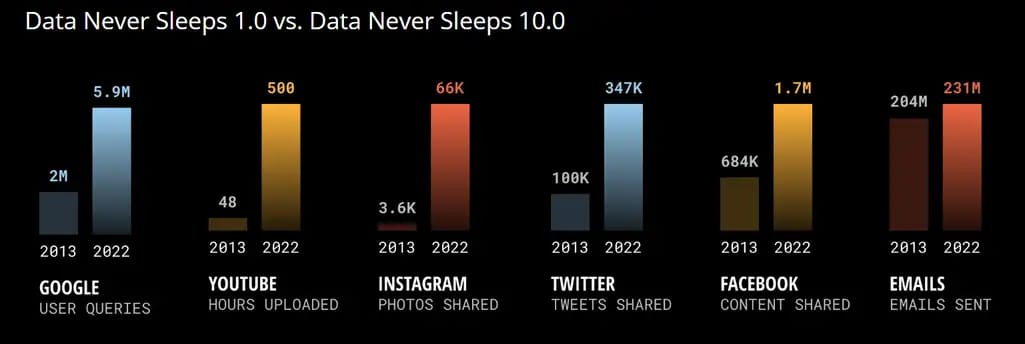

To illustrate the sheer volume of unstructured data, consider the 10th annual Data Never Sleeps infographic, which shows how much data is created on the Internet each minute.

How much data was generated in a minute in 2013 and 2022. Source: DOMO

Just imagine that in 2022, users sent 231.4 million emails, uploaded 500 hours of YouTube videos, and shared 66k photos on Instagram every single minute. Of course, tapping into this immense pool of unstructured data may offer businesses a wealth of opportunities to better understand their customers, markets, and operations, ultimately driving growth and success.

This article delves into the realm of unstructured data, highlighting its importance, and providing practical guidance on extracting valuable insights from this often-overlooked resource. We will discuss the different data types, storage and management options, and various techniques and tools for unstructured data analysis. By understanding these aspects comprehensively, you can harness the true potential of unstructured data and transform it into a strategic asset.

What is unstructured data? Definition and examples

Unstructured data, in its simplest form, refers to any data that does not have a predefined structure or organization. Unlike structured data, which is organized into neat rows and columns within a database, unstructured data is a vast, unsorted collection of information. It can come in different forms, such as text documents, emails, images, videos, social media posts, and sensor data.

Imagine a cluttered desk with piles of handwritten notes, printed articles, drawings, and photographs. This mess of information is analogous to unstructured data. It's rich in content but not immediately usable or searchable without first being sorted and categorized.

Unstructured data types

Unstructured data can be broadly classified into two categories:

- human-generated unstructured data, which includes the various forms of content people create, such as text documents, emails, social media posts, images, and videos; and

- machine-generated unstructured data, which is produced by devices and sensors and includes log files, GPS data, Internet of Things (IoT) output, and other telemetry information.

Whether it’s human- or machine-generated, unstructured data is challenging to handle, as it typically requires advanced techniques and tools to extract meaningful insights. However, despite these challenges, it is a valuable resource that can provide businesses with unique insights and a competitive edge when analyzed properly.

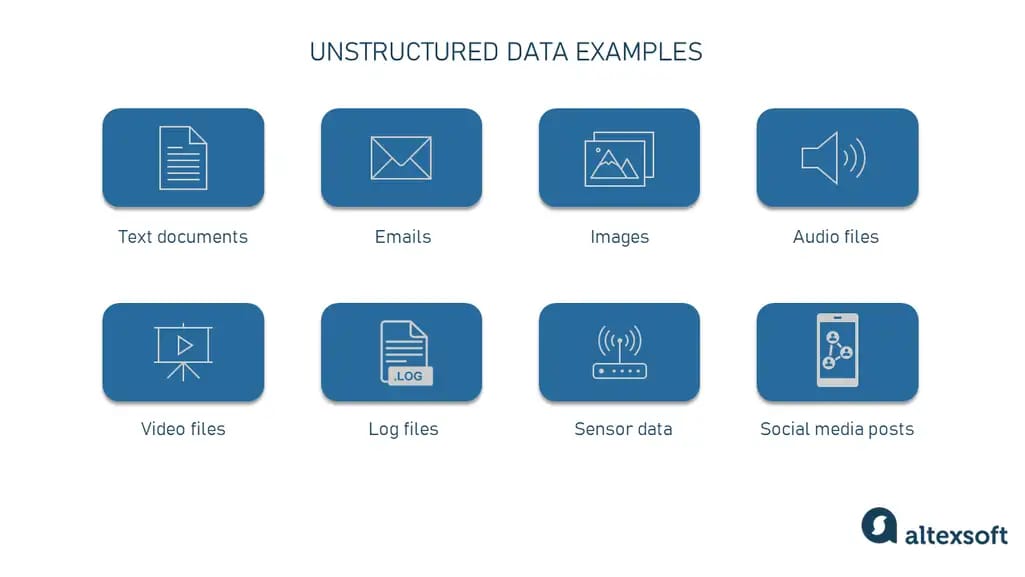

Unstructured data examples and formats

Unstructured data comes in numerous types and formats that vary widely in the content they hold and in how they store information. Let's explore a few examples to understand the concept of unstructured data better.

Some examples of unstructured data

Text documents. You may encounter unstructured data in the form of text documents, which can be plain text files (.txt), Microsoft Word documents (.doc, .docx), PDF files (.pdf), HTML files (.html), and other word processing formats. They primarily contain written content and may include elements like text, tables, and images.

Emails. As a form of electronic communication, emails often contain unstructured text data and various file attachments, such as images, documents, or spreadsheets.

Images. Image files come in various formats, such as JPEG (.jpg, .jpeg), PNG (.png), GIF (.gif), TIFF (.tiff), and more. These files store visual information and require specialized techniques such as computer vision to analyze and extract data.

Audio files. Audio data is usually presented in formats such as MP3 (.mp3), WAV (.wav), and FLAC (.flac), to name a few. These files contain sonic information that requires audio processing techniques to extract meaningful insights.

Video files. Video data comes in popular formats such as MP4 (.mp4), AVI (.avi), MOV (.mov), and others. Analyzing videos requires combining computer vision and audio processing techniques since they contain visual and auditory information.

Log files. Generated by various systems or applications, log files usually contain unstructured text data that can provide insights into system performance, security, and user behavior.

Sensor data. Information from sensors embedded in wearable, industrial, and other IoT devices can also be unstructured, including temperature readings, GPS coordinates, etc.

Social media posts. Data from social media platforms, such as Twitter, Facebook, or messaging apps, contains text, images, and other multimedia content with no predefined structure to it.

These are just a few examples of unstructured data formats. As the data world evolves, more formats may emerge, and existing formats may be adapted to accommodate new unstructured data types.
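To make the log file example more concrete, here is a minimal Python sketch that turns free-form log lines into structured records. The line layout (timestamp, level, message) and the sample lines are assumptions made for illustration; real logs vary widely and usually need several patterns.

```python
import re
from typing import Optional

# Assumed line layout: "<date> <time> <LEVEL> <free-text message>"
LOG_PATTERN = re.compile(
    r"^(?P<timestamp>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}) "
    r"(?P<level>[A-Z]+) "
    r"(?P<message>.*)$"
)

def parse_log_line(line: str) -> Optional[dict]:
    """Return a dict of named fields, or None if the line does not match."""
    match = LOG_PATTERN.match(line.strip())
    return match.groupdict() if match else None

# Hypothetical sample lines, including one that follows no pattern.
sample_lines = [
    "2023-04-01 12:00:03 ERROR Payment service timed out after 30s",
    "2023-04-01 12:00:05 INFO User 42 logged in",
    "free-form text that follows no pattern",
]

parsed = [parse_log_line(line) for line in sample_lines]
```

Lines that do not match come back as `None`, which is a reminder that part of any unstructured source tends to resist simple rules.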

Unstructured data and big data

Unstructured data and big data are related concepts, but they aren’t the same. Unstructured data refers to information that lacks a predefined format or organization. In contrast, big data refers to large volumes of structured and unstructured data that are challenging to process, store, and analyze using traditional data management tools.

The distinction lies in the fact that unstructured data is one type of data found within big data, while big data is the overarching term that encompasses various data types, including structured and semi-structured data.

So now it’s time to draw a clear distinction between all the types of information that belong to the big data world.

Unstructured vs semi-structured vs structured data

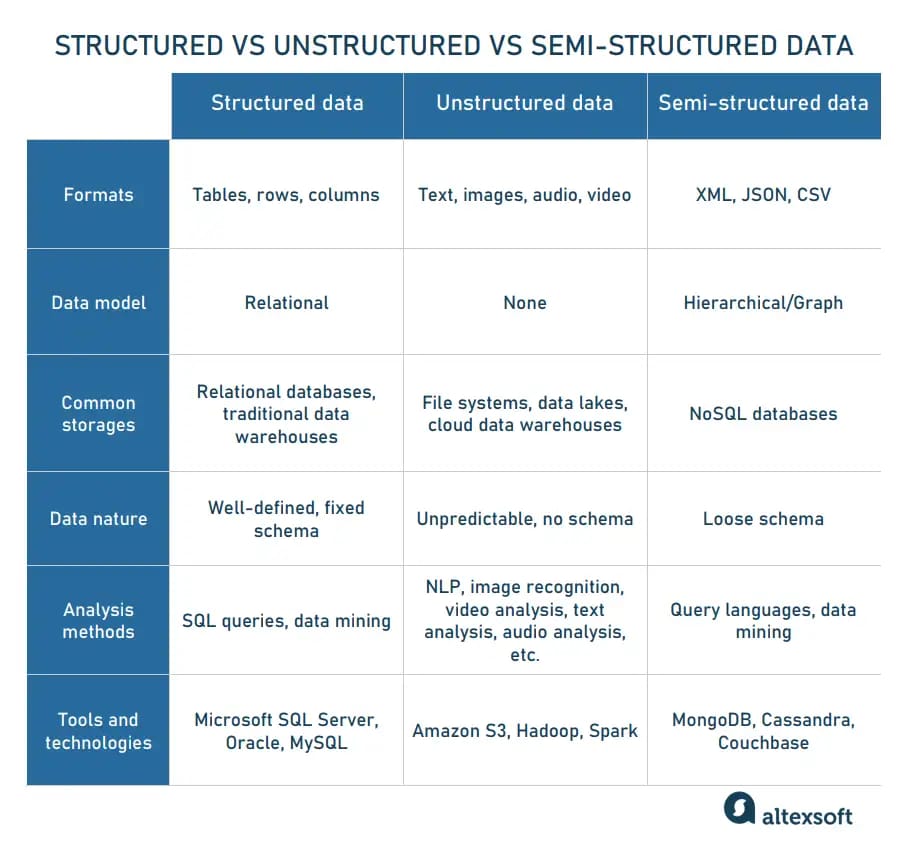

Structured, unstructured, and semi-structured data have distinct properties that differentiate them from one another.

Structured, unstructured, and semi-structured data compared.

Structured data is formatted in tables, rows, and columns, following a well-defined, fixed schema with specific data types, relationships, and rules. A fixed schema means the structure and organization of the data are predetermined and consistent. Structured data is commonly stored in relational database management systems (RDBMSs) such as SQL Server, Oracle, and MySQL, and is managed by data analysts and database administrators. Analysis of structured data is typically done using SQL queries and data mining techniques.

Unstructured data, on the other hand, is unpredictable and has no fixed schema, making it more challenging to analyze. Without a fixed schema, the data can vary in structure and organization. It includes various formats like text, images, audio, and video. File systems, data lakes, and big data processing frameworks like Hadoop and Spark are often utilized for managing and analyzing unstructured data.

Semi-structured data falls between structured and unstructured data, having a loose schema that accommodates varying formats and evolving requirements. A loose schema allows for some data structure flexibility while maintaining a general organization. Common formats include XML, JSON, and CSV. Semi-structured data is typically stored in NoSQL databases, such as MongoDB, Cassandra, and Couchbase, following hierarchical or graph data models.
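The three categories can be contrasted in a few lines of Python. This is only an illustrative sketch: the `orders` table, the JSON records, and the free-text note are invented sample data, and an in-memory SQLite database stands in for a full relational system.

```python
import json
import sqlite3

# Structured: a fixed schema enforced by a relational table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT, total REAL)"
)
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1001, "Alice", 59.90), (1002, "Bob", 12.50)],
)
order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]

# Semi-structured: self-describing keys, but fields may differ per record.
semi_structured = [
    json.loads('{"order_id": 1001, "customer": {"name": "Alice"}, "tags": ["gift"]}'),
    json.loads('{"order_id": 1002, "customer": {"name": "Bob"}}'),
]
tags_per_order = {rec["order_id"]: rec.get("tags", []) for rec in semi_structured}

# Unstructured: free text with no fields; facts must be found by analysis.
note = "Alice called about order 1001 and asked for gift wrapping."
mentions_gift = "gift" in note.lower()
```

The SQL query relies on columns that always exist, the JSON code has to tolerate a missing `tags` field, and the free-text note offers nothing to query until some analysis is applied to it.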

Read our dedicated article for more information about the differences between structured, unstructured, and semi-structured data.

How to manage unstructured data

Storing and managing unstructured data effectively is crucial for organizations looking to harness its full potential. There are several key considerations and approaches to ensure optimal management of this valuable resource.

Tools and platforms for unstructured data management

Unstructured data collection

Unstructured data collection presents unique challenges due to the information's sheer volume, variety, and complexity. The process requires extracting data from diverse sources, typically via APIs. For the rapid collection of vast amounts of information, you may need to use various data ingestion tools and ELT (extract, load, transform) processes.

Application Programming Interfaces (APIs) enable the interaction between different software applications and allow for seamless data extraction from various sources, such as social media platforms, news websites, and other online services.

For example, developers can use the Twitter API to access and collect public tweets, user profiles, and other data from the Twitter platform.
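Many web APIs return results in pages, so collection code usually loops until no further page is offered. The sketch below shows that pattern in Python under stated assumptions: the page shape (`items`, `next_cursor`) and the `fake_fetch_page` function are hypothetical stand-ins, not the Twitter API or any other real service, so the example runs offline.

```python
from typing import Callable, Iterator, Optional

# Assumed page shape: {"items": [...], "next_cursor": "<token>" or None}
Page = dict

def collect_all(fetch_page: Callable[[Optional[str]], Page]) -> Iterator[dict]:
    """Yield every item from a cursor-paginated API until no cursor remains."""
    cursor = None
    while True:
        page = fetch_page(cursor)
        yield from page["items"]
        cursor = page.get("next_cursor")
        if cursor is None:
            break

# Stand-in for a real HTTP call so the sketch runs without network access.
FAKE_PAGES = {
    None: {"items": [{"id": 1, "text": "first post"}], "next_cursor": "p2"},
    "p2": {"items": [{"id": 2, "text": "second post"}], "next_cursor": None},
}

def fake_fetch_page(cursor: Optional[str]) -> Page:
    return FAKE_PAGES[cursor]

posts = list(collect_all(fake_fetch_page))
```

In a real project, `fetch_page` would wrap an authenticated HTTP request and would also need to respect the provider's rate limits and terms of use.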

Data ingestion tools are software applications or services designed to collect, import, and process data from various sources into a central data storage system or repository.

- Apache NiFi is an open-source data integration tool that automates the movement and transformation of data between systems, providing a web-based interface to design, control, and monitor data flows.

- Logstash is a server-side data processing pipeline that ingests data from multiple sources, transforms it, and sends it to various output destinations like Elasticsearch or file storage, in real time.
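The ingest, transform, and output stages that such tools chain together can be sketched with plain Python generators. This is a toy illustration, not how NiFi or Logstash are configured; the source names, the sample lines, and the in-memory sink are all assumptions.

```python
import io
import json
from typing import Iterable, Iterator

def ingest(sources: dict) -> Iterator[dict]:
    """Read raw lines from several named sources and tag each with its origin."""
    for name, lines in sources.items():
        for line in lines:
            yield {"source": name, "raw": line}

def transform(events: Iterable[dict]) -> Iterator[dict]:
    """Drop blank events and add a normalized text field."""
    for event in events:
        text = event["raw"].strip()
        if text:
            yield {**event, "text": text.lower()}

def load(events: Iterable[dict], sink: io.TextIOBase) -> int:
    """Write events as JSON Lines to the sink and return how many were written."""
    count = 0
    for event in events:
        sink.write(json.dumps(event) + "\n")
        count += 1
    return count

# Hypothetical sources; each list stands in for a stream of incoming lines.
sources = {
    "app_log": ["User signed up ", ""],
    "support_inbox": ["Where is my ORDER?"],
}
sink = io.StringIO()  # stand-in for a file, queue, or search index
written = load(transform(ingest(sources)), sink)
```

Because each stage is a generator, events flow through one at a time, which loosely mirrors how streaming ingestion tools avoid holding a whole dataset in memory.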

Once the unstructured data has been collected, the next step is to store and process it effectively. Organizations need to invest in advanced solutions that can handle the inherent complexity and volume of unstructured data.

Unstructured data storage

The complexity, heterogeneity, and large volumes of unstructured data also demand specialized storage solutions. Unlike structured data, you can't just keep it in SQL databases. A storage system for unstructured data should have the following characteristics.

- Scalability. Unstructured data has the potential to grow exponentially. Storage solutions must have the capacity for both horizontal scaling (adding more machines) and vertical scaling (adding more resources to an existing machine) to meet the expanding storage needs.

- Flexibility. Since unstructured data can have variable formats and sizes, storage solutions need to be adaptable enough to accommodate diverse data types and adjust to changes in data formats as they occur.

- Efficient access and retrieval of information. To achieve this, storage solutions should offer low-latency access, high throughput, and support for multiple data retrieval methods, such as search, query, or filtering. This ensures that data is accessed and retrieved quickly and efficiently.

- Data durability and availability. Unstructured data storage solutions must ensure data durability (protection against data loss) and availability (ensuring data is accessible when needed). That's why there must be some sort of data replication, backup strategies, and failover mechanisms.

- Data security and privacy. Storage solutions must provide strong security measures such as encryption, access control, and data masking to safeguard sensitive information and help keep data secure and private.

There are several widely used unstructured data storage solutions such as data lakes (e.g., Amazon S3, Google Cloud Storage, Microsoft Azure Blob Storage), NoSQL databases (e.g., MongoDB, Cassandra), and big data processing frameworks (e.g., Hadoop, Apache Spark). Also, modern cloud data warehouses and data lakehouses may be good options for the same purposes.

Data lakes offer a flexible and cost-effective approach for managing and storing unstructured data, ensuring high durability and availability. They can store vast amounts of raw data in its native format, allowing organizations to run big data analytics while providing the option for data transformation and integration with various tools and platforms.

- Amazon S3, used as a data lake storage platform, enables organizations to store, analyze, and manage big data workloads, including backup and archiving. It provides low-latency access, virtually unlimited storage, and various integration options with third-party tools and other AWS services.

- Google Cloud Storage can also be used as a data lake system. It allows organizations to store and access data on Google Cloud Platform infrastructure. It offers global edge-caching, multiple storage classes, automatic scaling based on demand, and an easy-to-use RESTful API for efficient data access.

- Microsoft Azure Blob Storage, designed for large-scale analytics workloads, is a scalable cloud storage service specifically suitable for unstructured data, including text and binary data. It provides low-latency access to data and integrates with other Azure services like Azure Databricks and Azure Synapse Analytics for advanced processing and analysis. The service also supports features like Azure CDN (Content Delivery Network) and geo-redundant storage that help optimize its performance.

NoSQL databases can also be useful when handling unstructured data: They provide flexible and scalable storage options for different information formats, allowing for efficient querying and retrieval.

- MongoDB is a commonly used open-source NoSQL database that stores and manages vast amounts of unstructured data in a flexible JSON-like format. It has horizontal scalability and a rich query language, simplifying data manipulation.

- Apache Cassandra is a NoSQL database known for its high scalability and distribution, used to handle enormous amounts of unstructured data across several commodity servers. It offers high availability, tunable consistency, and a robust query language in CQL (Cassandra Query Language).
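The flexible, JSON-like document model mentioned above can be illustrated without a database. The sketch below uses plain Python dictionaries and a hypothetical `find` helper; it is not the MongoDB or Cassandra API, only a picture of how records in one collection can carry different fields.

```python
from typing import Any

# Documents in one collection need not share the same fields.
collection = [
    {"_id": 1, "type": "review", "text": "Great battery life", "rating": 5},
    {"_id": 2, "type": "email", "subject": "Refund request", "attachments": ["receipt.pdf"]},
    {"_id": 3, "type": "review", "text": "Stopped working", "rating": 1, "lang": "en"},
]

def find(docs: list, **criteria: Any) -> list:
    """Hypothetical helper: return documents whose fields equal all criteria."""
    return [
        doc for doc in docs
        if all(doc.get(field) == value for field, value in criteria.items())
    ]

reviews = find(collection, type="review")
low_rated = find(collection, type="review", rating=1)
```

A real document database adds indexing, persistence, and distribution on top of this idea, but the tolerance for missing or extra fields is the same.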

Big data processing frameworks

Processing unstructured data can be computationally heavy due to its complexity and large volume. To address this challenge, solutions are available that distribute the workload across multiple machines in a cluster. Utilizing these distributed computing systems allows you to efficiently process and manage unstructured data, ultimately enhancing your company's decision-making capabilities.

Big data processing frameworks presented below can manage massive amounts of unstructured data, providing distributed processing capabilities over clusters of computers.

- Apache Hadoop is an open-source distributed processing framework that can analyze and store vast amounts of unstructured data on clusters. The Hadoop ecosystem also has various tools and libraries to manage large datasets. However, it may require more effort to learn than other solutions.

- Apache Spark is a high-speed and versatile cluster-computing framework. It supports the near real-time processing of large unstructured datasets. Furthermore, it provides high-level APIs in multiple languages, in-memory processing capabilities, and easy integration with multiple storage systems.
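The core idea behind these frameworks, splitting the data, processing the pieces independently, and merging the partial results, can be sketched as a tiny map-and-reduce word count. This is a single-machine illustration that assumes a thread pool as a stand-in for cluster nodes; it does not use the Hadoop or Spark APIs.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce

def map_chunk(chunk: list) -> Counter:
    """Map step: count words within one chunk of documents."""
    counts = Counter()
    for doc in chunk:
        counts.update(doc.lower().split())
    return counts

def merge(left: Counter, right: Counter) -> Counter:
    """Reduce step: merge two partial counts."""
    return left + right

# Invented sample documents for the illustration.
documents = [
    "spark processes data in memory",
    "hadoop stores data on disk",
    "data lakes keep raw data",
]
# Each chunk stands in for the share of data held by one cluster node.
chunks = [documents[i:i + 2] for i in range(0, len(documents), 2)]

with ThreadPoolExecutor(max_workers=2) as pool:
    partial_counts = list(pool.map(map_chunk, chunks))

word_counts = reduce(merge, partial_counts, Counter())
```

Because the map step never needs data from another chunk, the same logic can in principle be spread across many machines, which is what the frameworks above automate along with scheduling and fault tolerance.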

Read our article comparing Apache Hadoop and Apache Spark platforms in more detail.

Unstructured data search

Navigating through vast amounts of unstructured data requires advanced search capabilities to locate relevant information efficiently. Specialized search and analytics engines address this need by providing indexing, searching, and analysis features tailored to handle unstructured data. These tools help organizations extract valuable insights, discover hidden patterns, and make informed decisions based on their unstructured data.

The following tools have been specifically designed to support the unique challenges of unstructured data search and analysis.

- Elasticsearch is a real-time distributed search and analytics engine that offers horizontal scaling, complex queries, and powerful full-text search for unstructured data. Built on Apache Lucene, it integrates with a plethora of other data-processing tools and offers a RESTful API for efficient data access.

- Apache Solr is an open-source search platform built on Apache Lucene that offers powerful full-text search, faceted search, and advanced analytics capabilities for unstructured data. It supports distributed search and indexing (routing) and can be integrated with big data processing frameworks like Hadoop.
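Full-text search engines of this kind are built around an inverted index, which maps each term to the documents that contain it. The following Python sketch shows the idea in its simplest form, assuming a naive tokenizer and AND-only queries; production engines add relevance ranking, stemming, and much more.

```python
import re
from collections import defaultdict

def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())

def build_index(docs: dict) -> dict:
    """Map each token to the set of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        for token in tokenize(text):
            index[token].add(doc_id)
    return index

def search(index: dict, query: str) -> set:
    """Return IDs of documents containing every query token (AND semantics)."""
    tokens = tokenize(query)
    if not tokens:
        return set()
    result = set(index.get(tokens[0], set()))
    for token in tokens[1:]:
        result &= index.get(token, set())
    return result

# Invented sample documents keyed by ID.
docs = {
    1: "Refund request for a damaged laptop",
    2: "Laptop battery drains quickly",
    3: "Request to change delivery address",
}
index = build_index(docs)
hits = search(index, "laptop request")
```

Looking up a term is a dictionary access rather than a scan of every document, which is why this structure scales to large text collections.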

If you require more advanced unstructured data analytics, machine learning techniques are worth considering.

Unstructured data analysis

Proper analysis and interpretation of different data types such as audio, images, text, and video involve advanced technologies such as machine learning and AI. ML-driven techniques, including natural language processing (NLP), audio analysis, and image recognition, are vital to discovering hidden knowledge and insights.

Natural Language Processing (NLP), a subfield of artificial intelligence, is a technique that facilitates computer understanding, interpretation, and generation of human language. It is primarily utilized for analyzing text-based unstructured data like emails, social media posts, and customer reviews.

Text classification, a core NLP technique, simplifies text organization and categorization for easier understanding and utilization. This technique enables tasks like labeling importance or identifying negative comments in feedback. Sentiment analysis, a common text classification application, categorizes text based on the author's feelings, judgments, or opinions. This allows brands to understand audience perception, prioritize customer service tasks, and identify industry trends.
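As a rough illustration of sentiment analysis, the sketch below labels text by counting words from a small hand-made lexicon. The word lists are assumptions invented for the example, and lexicon counting is a deliberately simple stand-in for the trained ML classifiers typically used in practice.

```python
import re

# Tiny hand-made lexicon, assumed for illustration only.
POSITIVE = {"great", "love", "excellent", "fast", "helpful"}
NEGATIVE = {"bad", "slow", "broken", "terrible", "refund"}

def classify_sentiment(text: str) -> str:
    """Label text by comparing counts of positive and negative lexicon words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = sum(t in POSITIVE for t in tokens) - sum(t in NEGATIVE for t in tokens)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

# Invented sample reviews.
reviews = [
    "Great phone, I love the camera",
    "Terrible support and a broken screen",
    "The package arrived on Tuesday",
]
labels = [classify_sentiment(r) for r in reviews]
```

A lexicon approach misses negation and sarcasm ("not great" would count as positive here), which is one reason trained models are usually preferred for real workloads.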

Another NLP approach for handling unstructured text data is information extraction (IE). IE retrieves predefined information, such as names, event dates, or phone numbers, and organizes it into a database. A vital component of intelligent document processing, IE employs NLP and computer vision to automatically extract data from various documents, classify it, and transform it into a standardized output format.
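A very small form of information extraction can be done with regular expressions. The sketch below pulls email addresses, ISO-style dates, and one phone number layout out of free text; the patterns and the sample message are simplified assumptions, and real IE systems typically rely on trained models to cover the variety found in actual documents.

```python
import re

# Simplified patterns; production-grade extraction needs far broader coverage.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
ISO_DATE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")
PHONE = re.compile(r"\+?\d{1,3}[ -]\d{3}[ -]\d{3}[ -]\d{4}")

def extract_entities(text: str) -> dict:
    """Pull emails, ISO dates, and phone numbers into a structured record."""
    return {
        "emails": EMAIL.findall(text),
        "dates": ISO_DATE.findall(text),
        "phones": PHONE.findall(text),
    }

# Invented sample message.
message = (
    "Hi, this is Dana (dana@example.com). The delivery promised for "
    "2023-05-14 never arrived. Call me at +1 555-010-4477."
)
record = extract_entities(message)
```

The resulting `record` dictionary has a fixed set of keys, so it could be written to a database table, which is the step from unstructured text to structured output that the paragraph above describes.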

Image recognition identifies objects, people, and scenes within images. It is highly beneficial for analyzing visual data such as photographs and illustrations. Image recognition techniques such as object detection and optical character recognition (OCR) enable organizations to recognize user-generated content, analyze product images, and extract text from scanned documents for further analysis.

Video analytics involves extracting significant information from video data, such as identifying patterns, objects, or activities within the footage. This technology can serve numerous purposes, including security and surveillance, customer behavior analysis, and quality control in manufacturing. Techniques such as motion detection, object tracking, and activity recognition enable organizations to gain insights into their operations, customers, and potential threats.

Audio analysis tools can process and analyze audio data, including voice recordings, music, and environmental sounds, to extract useful information or identify patterns. Audio analysis techniques, such as speech recognition, emotion detection, and speaker identification, are used in multiple industries like entertainment (content generation, music recommendation), customer service (call center analytics, voice assistants), and security (voice biometrics, acoustic event detection).

If your data project requires building custom ML models, you can opt for task-specific platforms to help you effectively uncover patterns, trends, and relationships in unstructured data. Quite a few machine learning and AI platforms can process and analyze various unstructured data types like text, audio, and images, and can be used to build and deploy AI models. For example, you can build or train your own ML models with the platforms listed below. Keep in mind that they require a data science team to train models on your data.

- TensorFlow is an open-source machine learning framework that supports many machine learning and deep learning algorithms. It can process unstructured data types and offers a wide set of libraries and tools to build, train, and deploy AI models.

- IBM Watson is a collection of AI services and tools featuring natural language processing, sentiment analysis, and image recognition, among others, for handling unstructured data. It provides an array of prebuilt models and APIs, as well as tools for creating customized models, which simplifies integrating AI capabilities into existing systems.

Last but not least, you may need to leverage data labeling if you train models for custom tasks. In a practical sense, data labeling involves annotating or tagging raw data, like text, images, video, or audio, with relevant information that helps machine learning models learn patterns and perform specific tasks accurately.

For instance, when training NLP models for sentiment analysis, human annotators label text samples with their corresponding sentiment, like positive, negative, or neutral. Similarly, in image recognition, annotators label objects or regions within images to help models learn to detect and classify them correctly. In video analytics, data labeling might involve tagging objects, tracking their motion, or identifying specific activities. Finally, for audio analysis, labeling can include transcribing speech, identifying speakers, or marking specific events within the audio.
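When several annotators label the same sample, their votes have to be combined into one training label. The sketch below shows one simple way to do this, majority voting with ties flagged for review; the record layout and the three annotators are hypothetical, and real labeling workflows often use more elaborate agreement measures.

```python
from collections import Counter
from typing import Optional

def majority_label(votes: list) -> Optional[str]:
    """Return the most common label, or None when there is no clear winner."""
    ranked = Counter(votes).most_common()
    if not ranked:
        return None  # no votes at all
    if len(ranked) > 1 and ranked[0][1] == ranked[1][1]:
        return None  # tie: send the sample back for review
    return ranked[0][0]

# Each sample carries raw text plus labels from three hypothetical annotators.
annotations = [
    {"text": "Love this product", "votes": ["positive", "positive", "neutral"]},
    {"text": "It arrived late", "votes": ["negative", "neutral", "positive"]},
]

training_set = [
    {"text": a["text"], "label": majority_label(a["votes"])} for a in annotations
]
needs_review = [row["text"] for row in training_set if row["label"] is None]
```

Samples that end up in `needs_review` are the ambiguous ones, and routing them to a more experienced annotator is a common way to keep label quality high.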

Learn more about organizing data labeling for ML projects in our dedicated article.

Of course, these are just a few technologies in an ocean of others. The choice of certain tools heavily depends on the specific data project and business goals.

Best practices on how to make the most of unstructured data

Understanding and implementing best practices can help unlock the true potential of the unstructured data landscape. Next, let's explore effective strategies for managing and leveraging unstructured data, empowering businesses to uncover valuable insights and drive informed decision-making.

Develop a clear data strategy. Define your organization's objectives and requirements for unstructured data analysis. Identify the data sources, types of analytics to be performed, and the expected outcomes to guide your efforts.

Build data architecture. To effectively leverage unstructured data, allocate resources for creating a comprehensive data architecture that supports the storage, management, and analysis of various data types. A robust data architecture lays the groundwork for efficient processing, scalability, and seamless integration with other systems, making it crucial to enlist experienced data architects and other data team members to design, implement, and maintain said architecture.

Choose the right tools and platforms. Following the previous step, you must evaluate and select appropriate tools and platforms for unstructured data analytics based on your organization's specific needs, data types, and resources. Consider the scalability, flexibility, and integration capabilities of the solutions.

Invest in data governance. Establish robust data governance policies and processes to ensure data quality, security, and compliance. Implementing data cataloging, classification, and metadata management can facilitate easier access and retrieval of unstructured data, enabling more thorough analysis.

Build a skilled analytics team. Assembling a multidisciplinary team with expertise in data science, machine learning, and domain knowledge is a must, as such a team can effectively analyze unstructured data. It is essential to provide training and support to develop their skills and ensure they stay up to date with industry trends.

Foster a data-driven culture. Encouraging a data-driven mindset throughout the organization can be achieved by promoting data literacy and emphasizing the importance of data-driven decision-making. Sharing insights gained from unstructured data analysis with relevant stakeholders and departments can support collaborative decision-making and foster a data-driven culture.

Pilot and iterate. To ensure the feasibility and effectiveness of unstructured data analytics initiatives, it’s better to begin with small-scale pilot projects. Take the knowledge gained from these pilots to refine your approach and scale up successful projects to achieve sustained success.

Ensure data security and privacy. Implement robust security measures and adhere to relevant data protection regulations to safeguard the privacy and security of your unstructured data. Keeping data anonymized or pseudonymized when necessary can help maintain privacy. It is also important to maintain transparency with stakeholders regarding data handling practices.
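As one possible illustration of pseudonymization, the sketch below replaces email addresses in free text with keyed tokens derived via HMAC-SHA256. The key, the `user_` prefix, the 12-character truncation, and the simplified email pattern are assumptions made for the example; a real deployment would follow its own security and regulatory requirements.

```python
import hashlib
import hmac
import re

# Assumption: in practice the key comes from a secrets manager, not source code.
SECRET_KEY = b"replace-with-a-managed-secret"

# Simplified email pattern, for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")

def pseudonym(value: str) -> str:
    """Derive a stable, keyed token for an identifier using HMAC-SHA256."""
    digest = hmac.new(SECRET_KEY, value.lower().encode("utf-8"), hashlib.sha256)
    return "user_" + digest.hexdigest()[:12]

def pseudonymize_emails(text: str) -> str:
    """Replace every email address in the text with its pseudonym."""
    return EMAIL.sub(lambda m: pseudonym(m.group(0)), text)

original = "Ticket from dana@example.com, cc Dana@Example.com"
masked = pseudonymize_emails(original)
```

The same address always maps to the same token, so records can still be joined for analysis, while anyone without the key cannot easily recompute the mapping. Truncating the digest keeps tokens short at the cost of a higher collision risk on very large datasets.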

Measure and optimize. Regularly evaluating the performance and impact of unstructured data analytics efforts by tracking relevant metrics and KPIs is crucial. Doing so enables optimization of processes, tools, and techniques to maximize the value derived from unstructured data.