Programming is about solving problems. We usually build APIs to solve one of two very specific problems (or both) -- reaching desired functionality by connecting various applications, and opening your application for integration by others. The more detail we go into, the more problems need solving. Who will be using your API? What network or performance constraints will they be dealing with? What talent and budget are needed to maintain that connection?

Previously, we covered the main differences between SOAP, REST, GraphQL, and RPC. Today, we want to explore a new and interesting API design style that promises to solve problems other styles weren’t fully able to. Let’s go.

What is gRPC and why was it introduced?

gRPC is a framework for implementing RPC APIs via HTTP/2. To understand what this means in comparison to other API building styles, let’s look at API design's timeline first.

Traditionally, there have been two distinct ways to build APIs: RPC and REST. There’s also SOAP, an ancient protocol that is mostly used in strictly standardized operations like banking and aviation. You will also hear about GraphQL, gRPC’s peer in terms of age. Let’s go through them one by one.

RPC (Remote Procedure Call) is the classic and oldest API style currently in use. It uses procedure calls to request a service from a remote server the same way you would call a local procedure -- via direct actions on the server (like SendUserMessages, LocateVehicle, addEntry). RPC is an efficient way to build APIs; RPC messages are lightweight and the interactions are straightforward.

But the more businesses open ways for integration creating the so-called API economy, the harder it is to integrate those systems. Written in different programming languages, tightly coupled with the underlying systems, RPC APIs are difficult to integrate and unsafe to share as they may leak inner implementation details.

When REST (Representational State Transfer) was introduced in 2000, it was meant to solve this problem and make APIs more accessible. Namely, it provided a uniform way to access data indirectly, via resources, using generic HTTP methods such as GET, POST, PUT, DELETE, and so on. REST APIs are basically self-descriptive. This was the main difference between RPC and REST since with RPC, you address the procedures and there’s little predictability of what procedures in different systems may be.
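To make the contrast concrete, here is a minimal Python sketch of the two addressing styles. The procedure name LocateVehicle comes from the examples above; the `/vehicles/42` path, the handlers, and the in-memory data are hypothetical and only illustrate the idea, not any particular framework.

```python
# Hypothetical in-memory data shared by both styles.
VEHICLES = {42: {"id": 42, "location": "Depot A"}}


# RPC style: the client names a procedure and passes arguments.
def locate_vehicle(vehicle_id):
    return VEHICLES[vehicle_id]["location"]


RPC_PROCEDURES = {"LocateVehicle": locate_vehicle}


def rpc_call(procedure, *args):
    """Dispatch a call by procedure name, roughly as an RPC server would."""
    return RPC_PROCEDURES[procedure](*args)


# REST style: the client names a resource and uses a generic HTTP method.
def rest_request(method, path):
    """Route a (method, path) pair; only GET /vehicles/<id> is sketched here."""
    parts = path.strip("/").split("/")
    if method == "GET" and len(parts) == 2 and parts[0] == "vehicles":
        return VEHICLES[int(parts[1])]
    raise ValueError(f"unsupported request: {method} {path}")


rpc_result = rpc_call("LocateVehicle", 42)
rest_result = rest_request("GET", "/vehicles/42")
print(rpc_result)
print(rest_result["location"])
```

With RPC, a client has to know that a procedure called LocateVehicle exists. With REST, knowing the resource path is enough, because the set of methods is the same everywhere.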

Although REST presented an improved format for interacting with many systems, it returned a lot of metadata, which was the main reason it couldn’t replace simple and lightweight RPC. This was also one of the reasons two new approaches emerged later: Facebook’s GraphQL and gRPC from Google.

There’s a strong urge to pit all the aforementioned methods against each other and see who wins, but the decision will always come down to what your problem is and which tool is best at solving it. Still, we will make comparisons to REST, the dominant API design style, throughout our analysis to give a more complete overview of gRPC.

gRPC benefits

gRPC offers a refreshed take on the old RPC design method by making it interoperable, modern, and efficient using such technologies as Protocol Buffers and HTTP/2. The following benefits make it a solid candidate for replacing REST in some operations.

Lightweight messages. Depending on the type of call, gRPC-specific messages can be up to 30 percent smaller in size than JSON messages.

High performance. According to various evaluations, gRPC is 5, 7, or even 8 times faster than REST+JSON communication.

Built-in code generation. gRPC has automated code generation in different programming languages including Java, C++, Python, Go, Dart, Objective-C, Ruby, and more.

More connection options. While REST focuses on request-response architecture, gRPC provides support for data streaming with event-driven architectures: server-side streaming, client-side streaming, and bidirectional streaming.
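The four call shapes can be pictured with plain Python functions and generators. This is only a sketch of the interaction patterns: it does not use the grpc package, and every function name and payload here is hypothetical.

```python
from typing import Iterable, Iterator


def unary(request: str) -> str:
    """Unary call: one request in, one response out (the REST-like case)."""
    return f"echo:{request}"


def server_streaming(request: str, count: int = 3) -> Iterator[str]:
    """Server-side streaming: one request in, a stream of responses out."""
    for i in range(count):
        yield f"{request}#{i}"


def client_streaming(requests: Iterable[str]) -> str:
    """Client-side streaming: a stream of requests in, one response out."""
    return ",".join(requests)


def bidirectional(requests: Iterable[str]) -> Iterator[str]:
    """Bidirectional streaming: responses are produced while requests arrive."""
    for request in requests:
        yield request.upper()


unary_result = unary("ping")
server_result = list(server_streaming("tick"))
client_result = client_streaming(iter(["a", "b", "c"]))
bidi_result = list(bidirectional(iter(["x", "y"])))
print(unary_result, server_result, client_result, bidi_result)
```

In the bidirectional case, the generator yields each response as soon as the matching request is consumed, which is the property that makes this pattern useful for real-time exchanges.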

Now, how does it achieve such performance? Let’s talk about gRPC innovations that make this possible.

gRPC main concepts

gRPC owes its success to the employment of two techniques: using HTTP/2 instead of HTTP/1.1 and protocol buffers as an alternative to XML and JSON. Most of the gRPC benefits stem from using these technologies.

Protocol Buffers for defining schema

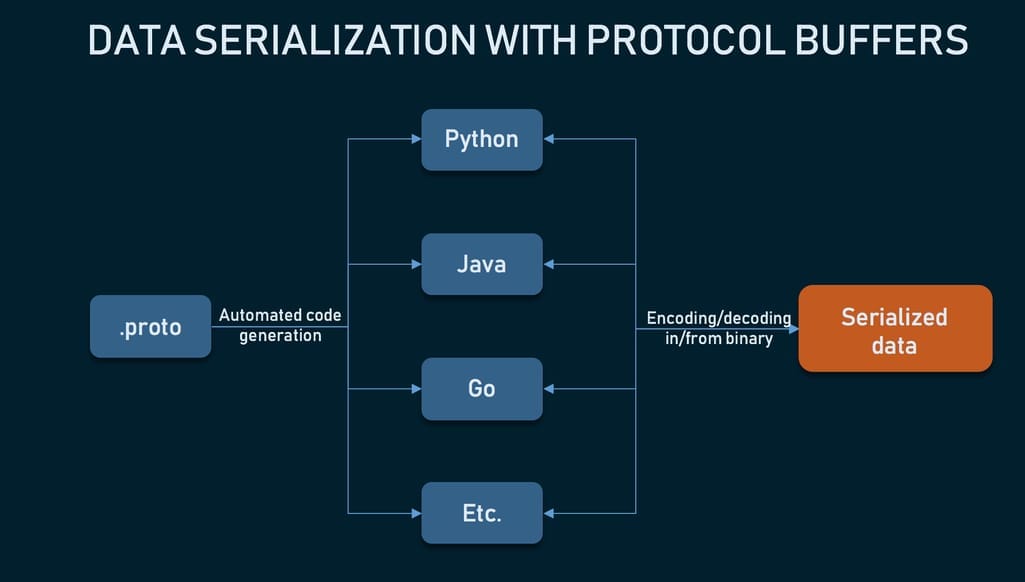

Protocol buffers are a popular technology for structuring messages, developed at Google and used in nearly all of its inter-machine communication. In gRPC, protocol buffers (or protobufs) take the place of the XML or JSON typically used in REST. Here’s how they work.

Protocol buffers are a popular serialization method outside of gRPC use cases - the source code on GitHub currently has nearly 47k stars

- In a .proto text file, a programmer defines a schema -- how they want data to be structured. Each field is assigned a number, and the encoded message identifies fields by these numbers rather than by name, which saves space. They can also embed documentation in the schema.

- Using the protoc compiler, code for working with this schema is then automatically generated in any of the numerous supported languages like Java, C++, Python, Go, Dart, Objective-C, Ruby, and more.

- At runtime, messages are serialized into a compact binary format.
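To show what the binary format looks like on the wire, here is a hand-rolled sketch of the protobuf encoding for two field types. It is not the official protobuf library. The Person-like message (`string name = 1; int32 id = 2;`) and the function names are assumptions made for illustration, and only non-negative integers and strings are handled.

```python
import json


def encode_varint(value: int) -> bytes:
    """Encode a non-negative integer as a base-128 varint."""
    if value < 0:
        raise ValueError("this sketch handles non-negative integers only")
    out = bytearray()
    while True:
        low7 = value & 0x7F
        value >>= 7
        if value:
            out.append(low7 | 0x80)  # high bit set: more bytes follow
        else:
            out.append(low7)
            return bytes(out)


def encode_tag(field_number: int, wire_type: int) -> bytes:
    """A field key is the field number shifted left by 3, OR-ed with the wire type."""
    return encode_varint((field_number << 3) | wire_type)


def encode_person(name: str, person_id: int) -> bytes:
    """Encode the hypothetical message: string name = 1; int32 id = 2."""
    raw_name = name.encode("utf-8")
    return (
        encode_tag(1, 2) + encode_varint(len(raw_name)) + raw_name  # type 2: length-delimited
        + encode_tag(2, 0) + encode_varint(person_id)  # type 0: varint
    )


binary = encode_person("Ann", 150)
text = json.dumps({"name": "Ann", "id": 150}, separators=(",", ":")).encode("utf-8")
print(binary.hex(" "))
print(len(binary), "bytes as protobuf vs", len(text), "bytes as compact JSON")
```

The field names never appear in the encoded bytes, only the field numbers 1 and 2. The size gap for this toy message says little about real payloads, where the ratio depends heavily on the data.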

What this means is that protobufs offer some great advantages over JSON and XML.

More efficient parsing. Parsing with Protocol Buffers is less CPU-intensive because data is represented in a binary format which minimizes the size of encoded messages. This means that message exchange happens faster, even in devices with a slower CPU like IoT or mobile devices.

Schema is essential. By forcing programmers to use a schema, we can ensure that the message doesn’t get lost between applications and that its structural components stay intact on another service as well.
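A small decoding sketch shows why the receiver needs the schema: the bytes carry field numbers and values, but no names. The payload below is the protobuf encoding of a hypothetical message with `name = "Ann"` (field 1) and `id = 150` (field 2). The schema dictionary and helper names are assumptions, and only varint and length-delimited fields are handled.

```python
# Hypothetical schema: field number -> (field name, kind).
PERSON_SCHEMA = {1: ("name", "string"), 2: ("id", "varint")}


def read_varint(data: bytes, pos: int):
    """Read a base-128 varint starting at pos; return (value, next position)."""
    value = 0
    shift = 0
    while True:
        byte = data[pos]
        pos += 1
        value |= (byte & 0x7F) << shift
        if not byte & 0x80:  # high bit clear: this was the last byte
            return value, pos
        shift += 7


def decode(data: bytes, schema: dict) -> dict:
    """Decode varint and length-delimited fields, naming them via the schema."""
    message = {}
    pos = 0
    while pos < len(data):
        key, pos = read_varint(data, pos)
        field_number, wire_type = key >> 3, key & 0x07
        if wire_type == 0:
            value, pos = read_varint(data, pos)
        elif wire_type == 2:
            length, pos = read_varint(data, pos)
            value = data[pos:pos + length]
            pos += length
        else:
            raise ValueError(f"wire type {wire_type} is not handled in this sketch")
        if field_number in schema:
            name, kind = schema[field_number]
            message[name] = value.decode("utf-8") if kind == "string" else value
        # Fields that are missing from the schema are skipped.
    return message


payload = bytes.fromhex("0a03416e6e109601")
decoded = decode(payload, PERSON_SCHEMA)
print(decoded)
```

Decoding the same payload with an empty schema yields an empty dictionary: the structure can still be walked, but nothing can be given a meaning.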

The main goal of protobufs is optimization. By stripping away many of the responsibilities that data formats usually carry and focusing only on serialization and deserialization, protobufs ensure that data is transmitted as fast as possible and in the most compact way. This is especially valuable when working with microservices, which we will touch upon a bit later.

HTTP/2 as the transport protocol

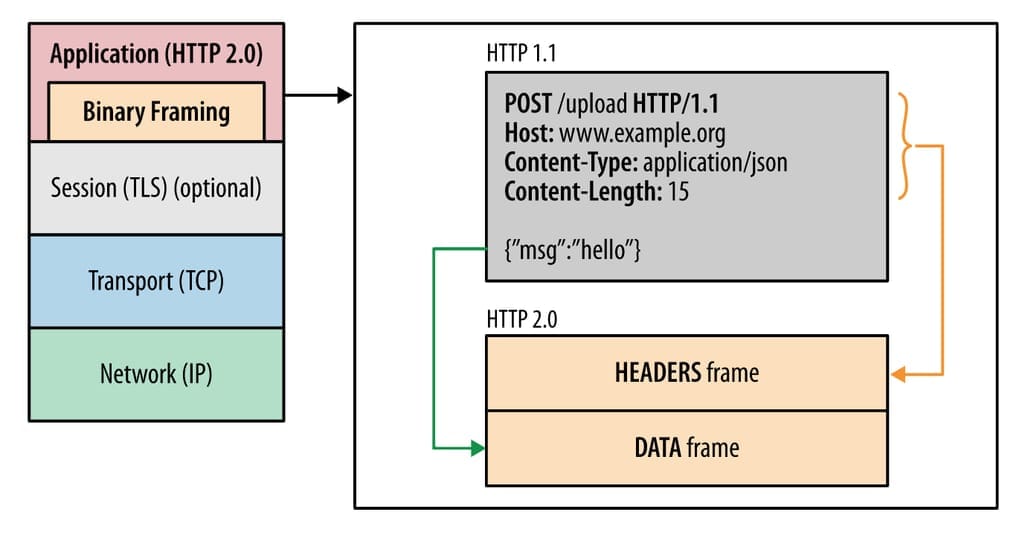

gRPC introduces many upgrades to the old RPC structure by using HTTP/2. This specification was published in 2015 and modernized the nearly 20-year-old HTTP/1.1 design. Here are some of the advantages the advanced protocol offers.

Binary framing layer. HTTP/2 communication is divided into smaller messages and framed in binary format. Unlike text-based HTTP/1.1, it makes sending and receiving messages compact and efficient.
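As a rough illustration of binary framing, the sketch below packs and parses the fixed 9-byte header that starts every HTTP/2 frame: a 24-bit payload length, an 8-bit type, 8-bit flags, and a reserved bit followed by a 31-bit stream identifier. The helper names are hypothetical, and the code covers only the header layout, not a working HTTP/2 implementation.

```python
import struct

# Frame type codes defined by the HTTP/2 specification.
FRAME_TYPES = {
    0x0: "DATA", 0x1: "HEADERS", 0x2: "PRIORITY", 0x3: "RST_STREAM",
    0x4: "SETTINGS", 0x5: "PUSH_PROMISE", 0x6: "PING", 0x7: "GOAWAY",
    0x8: "WINDOW_UPDATE", 0x9: "CONTINUATION",
}


def pack_frame(frame_type: int, flags: int, stream_id: int, payload: bytes) -> bytes:
    """Build a frame: 9-byte binary header followed by the payload."""
    length = len(payload)
    header = struct.pack(
        ">BHBBI",
        length >> 16, length & 0xFFFF,  # 24-bit length split into 8 + 16 bits
        frame_type,
        flags,
        stream_id & 0x7FFFFFFF,  # top bit is reserved and left at zero
    )
    return header + payload


def parse_frame_header(data: bytes) -> dict:
    """Decode the fixed 9-byte header found at the start of every frame."""
    if len(data) < 9:
        raise ValueError("an HTTP/2 frame header is 9 bytes long")
    length_hi, length_lo, frame_type, flags, stream_id = struct.unpack(">BHBBI", data[:9])
    return {
        "length": (length_hi << 16) | length_lo,
        "type": FRAME_TYPES.get(frame_type, "UNKNOWN"),
        "flags": flags,
        "stream_id": stream_id & 0x7FFFFFFF,
    }


# A DATA frame on stream 1 with the END_STREAM flag (0x1) set.
frame = pack_frame(0x0, 0x1, 1, b"hello")
header = parse_frame_header(frame)
print(header)
```

Because every field sits at a fixed offset, the receiver can read the header with a single unpack call instead of scanning text for line breaks, as it has to with HTTP/1.1.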

HTTP/2 elements such as headers, methods, and verbs are encoded in binary format in transit. Each message consists of several frames. Source: Developers.Google

Multiple parallel requests. While HTTP/1.1 processes just one request at a time per connection, HTTP/2 supports multiple concurrent calls over the same connection. More than that, communication is bidirectional -- a single connection can carry both requests and responses at the same time.
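Multiplexing can be pictured as frames from several streams sharing one connection, each tagged with its stream ID so the receiver can put the messages back together. The simulation below is a simplified sketch: the helper names, the 4-byte frame size, and the strict round-robin order are assumptions, and real HTTP/2 scheduling is more involved.

```python
from collections import defaultdict
from itertools import zip_longest


def split_into_frames(stream_id: int, message: bytes, frame_size: int):
    """Cut one message into (stream_id, chunk) frames."""
    return [
        (stream_id, message[i:i + frame_size])
        for i in range(0, len(message), frame_size)
    ]


def interleave(*streams):
    """Put frames from several streams onto one connection, round-robin."""
    wire = []
    for group in zip_longest(*streams):
        wire.extend(frame for frame in group if frame is not None)
    return wire


def reassemble(wire):
    """Receiver side: group frames by stream ID and rebuild each message."""
    messages = defaultdict(bytes)
    for stream_id, chunk in wire:
        messages[stream_id] += chunk
    return dict(messages)


# Two requests in flight at once on the same simulated connection.
wire = interleave(
    split_into_frames(1, b"GET /users", 4),
    split_into_frames(3, b"GET /orders/7", 4),
)
received = reassemble(wire)
print([stream_id for stream_id, _ in wire])
print(received)
```

Neither request waits for the other to finish: their frames alternate on the wire, and the stream ID is all the receiver needs to separate them again.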

Streaming. Real-time communication is not only possible with HTTP/2, but also has high performance thanks to binary framing -- each stream is divided into frames that can be prioritized and run via a single TCP connection, thus reducing the network utilization and processing load.

Along with these benefits, HTTP/2 also presents some challenges. Being fairly new, it’s currently used by only 50.5 percent of all websites. This is one of the reasons why gRPC is mostly featured on the backend, which we will explain more in the following sections.

gRPC weaknesses

It’s fair to hope that gRPC’s disadvantages will be overcome with time. For now, unfortunately, they significantly hamper its mainstream adoption.

Lack of maturity

The maturity of a technology can be a big hurdle to its adoption. That’s apparent with gRPC too. Compared to its peer GraphQL, which has over 14k questions on StackOverflow, gRPC currently has a little under 4k. With minimal developer support outside of Google and not many tools created for HTTP/2 and protocol buffers, the community lacks information about best practices, workarounds, and success stories. But this is likely to change soon. As with many open-source technologies backed by big corporations, the community will keep growing and attracting more developers.

Limited browser support

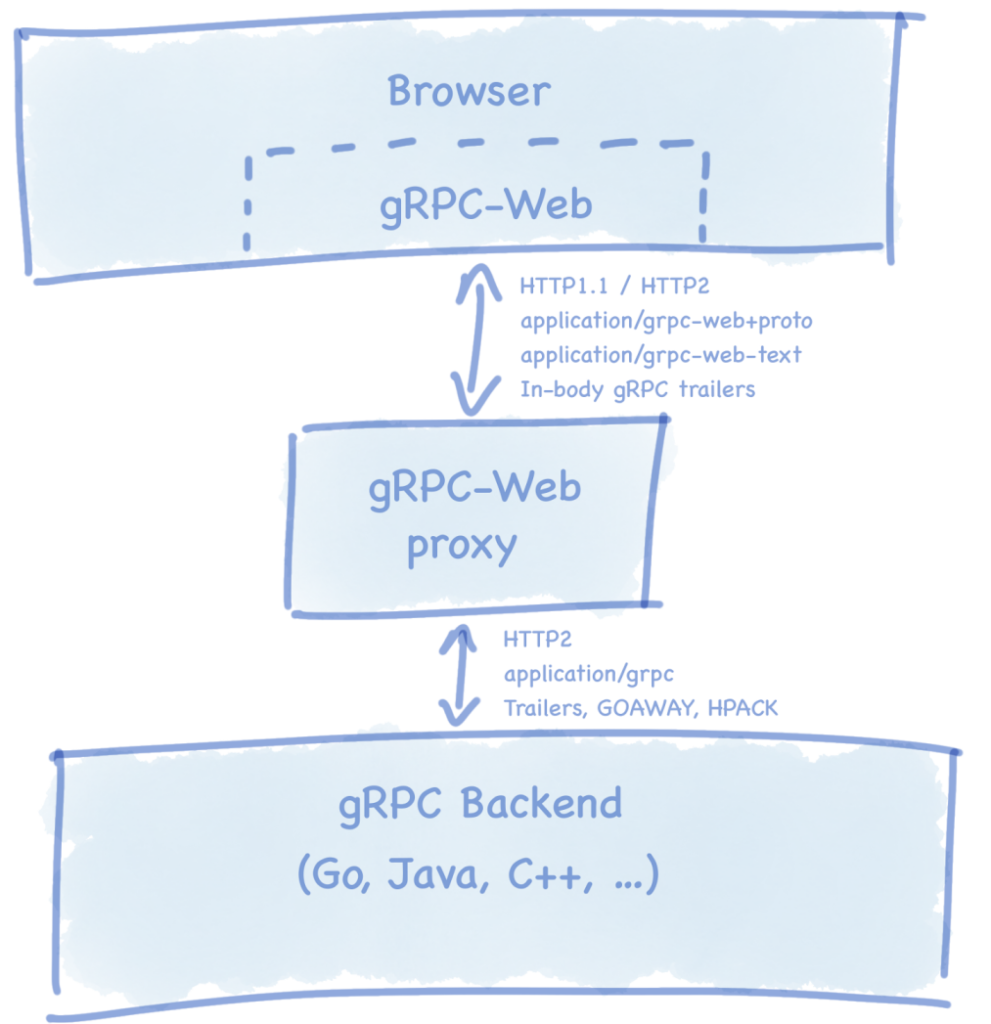

Since gRPC heavily relies on HTTP/2, you can’t call a gRPC service from a web browser directly: although modern browsers speak HTTP/2, they don’t give web applications the low-level control over HTTP/2 frames that gRPC requires. So, you need to use a proxy, which has its limitations. There are currently two implementations:

- gRPC web client by Google, implemented in JavaScript, with upcoming support for Python, Java, and more languages

- gRPC web client by the team at Improbable for TypeScript and Go

They both share the same logic: A web client sends a normal HTTP request to the proxy, which translates it and forwards it to the gRPC server.

Using gRPC on the front end. Source: gRPC blog

Currently, not all gRPC features and advantages are available via web clients, although Google has a roadmap of upcoming features (like full streaming support and integration with Angular) that might ease the burden of working through a proxy.

Not human-readable format

Because data is serialized to a binary format, protobuf messages are not human-readable, unlike XML and JSON. To analyze payloads, perform debugging, and write manual requests, devs must use extra tools like the gRPC command line tool and the server reflection protocol. That said, protobuf messages can be converted into JSON out of the box.

Steeper learning curve

Compared to REST and GraphQL, which primarily use JSON, it will take some time to get acquainted with protocol buffers and find tools for dealing with HTTP/2 friction. So, many teams will choose to rely on REST for as long as possible, which in turn will make it difficult to find gRPC experts.

When to use gRPC

That said, what are the conditions for using gRPC instead of REST?

- Real-time communication services where you deal with streaming calls

- When efficient communication is a goal

- In multi-language environments

- For internal APIs where you don’t have to force technology choices on clients

- For new builds, since transforming an existing API to gRPC might not be worth it

You might notice that many of these conditions apply to one specific use case -- microservices. After all, there’s a reason Netflix, Lyft, WePay, and more companies operating with microservices have transitioned to gRPC.

Microservices with gRPC

gRPC is widely regarded as one of the best options for communication between internal microservices thanks to two things: high performance and its polyglot nature.

One of the greatest strengths of microservices, in contrast with monolithic architecture, is the ability to use different technologies for each independent service -- whichever fits best. To work together, microservices must agree on the API for exchanging data, the data format, error patterns, load balancing, and more. And since gRPC allows for describing a service contract in a language-neutral .proto schema, programmers have a standard way to specify those contracts, independent of any language, that ensures interoperability.

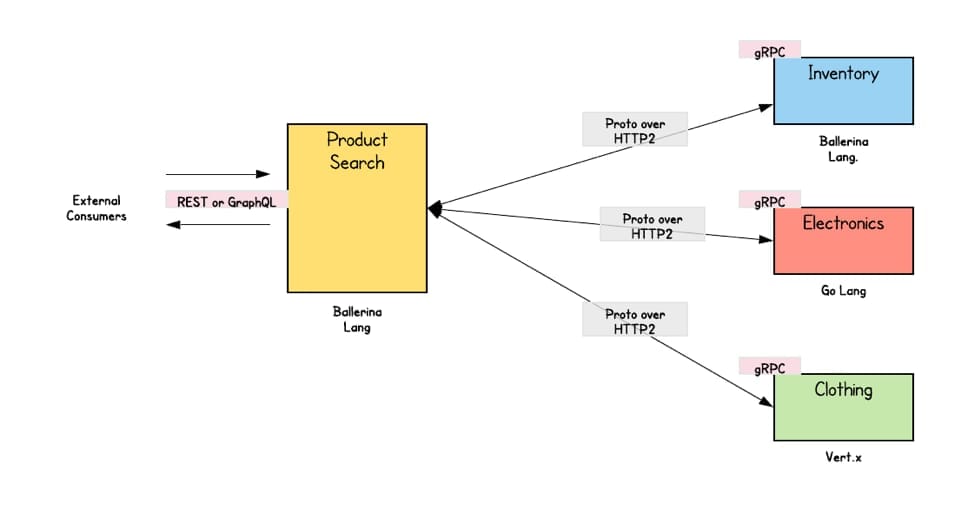

An example of microservices in an online retail case, with internal service communication performed via gRPC and external communication using REST or GraphQL. Source: TheNewStack

Besides, the ability to transmit multiple concurrent messages without overloading the network makes gRPC a great choice for microservice-to-microservice communication that requires superfast message exchange. REST or GraphQL is still recommended for external-facing microservices, though, because their text-based messaging is easier for human consumers to work with.

gRPC starter pack

Google is famously good at writing docs, so they should be a great learning source on your path to gRPC mastery. Still, here’s our list of recommended lessons and tools to look into.

Official guides: gRPC guide and Protocol Buffers guide. Choose a language and get started with tutorials, API reference guide, and examples.

Community: Gitter chat, subreddit, and Google Group. Post your questions to dedicated gRPC communities, but don’t forget about StackOverflow or even Quora where people are more active.

Tools: gRPC Ecosystem on GitHub. Find tools expanding gRPC capabilities.

Online classes: Microservices with gRPC and gRPC Master Class. Take top-rated classes on Udemy that will take you from understanding concepts like protobufs to building your first gRPC API.

No, you don’t need to switch from REST

gRPC is not the evolution of REST, nor is it a better way to build APIs. In a nutshell, gRPC is a way to use RPC’s lightweight structure along with HTTP/2 and a few handy tweaks. It’s just another alternative for you to consider when you start designing a new API. As not many companies actually have the resources to transition to gRPC as Netflix did, you will probably consider it for a new microservices project, for example as part of a digital transformation -- and benefit greatly.

If you have experience working with gRPC, let us know in the comments how it went and whether you chose to stick to it. After all, the technology and its community will continue to grow, which will bring more benefits, eliminate challenges, and keep the conversation going.

Maryna is a passionate writer with a talent for simplifying complex topics for readers of all backgrounds. With 7 years of experience writing about travel technology, she is well-versed in the field. Outside of her professional writing, she enjoys reading, video games, and fashion.